Library to help manage AI prompts, models and parameters using the .aiconfig file format.

Full documentation: aiconfig.lastmileai.dev

AIConfig saves prompts, models and model parameters as source control friendly configs. This allows you to iterate on prompts and model parameters separately from your application code.

aiconfig in your application code. AIConfig is designed to be model-agnostic and multi-modal, so you can extend it to work with any generative AI model, including text, image and audio.

aiconfig files visually, run prompts, tweak models and model settings, and chain things together.

Today, application code is tightly coupled with the gen AI settings for the application -- prompts, parameters, and model-specific logic are all jumbled in with app code.

AIConfig helps unwind complexity by separating prompts, model parameters, and model-specific logic from your application.

config.run()

aiconfig in a playground to iterate quickly

aiconfig - it's the AI artifact for your application.

aiconfig format to save prompts and model settings, which you can use for evaluation, reproducibility and simplifying your application code.

aiconfig format.

aiconfig.

aiconfig, and use the SDK to connect it to your application code.

Install with your favorite package manager for Node.

npm or yarn

npm install aiconfig

yarn add aiconfig

Detailed installation instructions.

Please see the detailed Getting Started guide

In this quickstart, you will create a customizable NYC travel itinerary using aiconfig.

This AIConfig contains a prompt chain to get a list of travel activities from an LLM and then generate an itinerary in an order specified by the user.

Link to tutorial code: here

https://github.com/lastmile-ai/aiconfig/assets/25641935/d3d41ad2-ab66-4eb6-9deb-012ca283ff81

travel.aiconfig.json

Note: Don't worry if you don't understand all the pieces of this yet; we'll go over it step by step.

{
  "name": "NYC Trip Planner",
  "description": "Intrepid explorer with ChatGPT and AIConfig",
  "schema_version": "latest",
  "metadata": {
    "models": {
      "gpt-3.5-turbo": {
        "model": "gpt-3.5-turbo",
        "top_p": 1,
        "temperature": 1
      },
      "gpt-4": {
        "model": "gpt-4",
        "max_tokens": 3000,
        "system_prompt": "You are an expert travel coordinator with exquisite taste."
      }
    },
    "default_model": "gpt-3.5-turbo"
  },
  "prompts": [
    {
      "name": "get_activities",
      "input": "Tell me 10 fun attractions to do in NYC."
    },
    {
      "name": "gen_itinerary",
      "input": "Generate an itinerary ordered by {{order_by}} for these activities: {{get_activities.output}}.",
      "metadata": {
        "model": "gpt-4",
        "parameters": {
          "order_by": "geographic location"
        }
      }
    }
  ]
}

get_activities prompt.

Note: Make sure to specify the API keys (such as OPENAI_API_KEY) in your environment before proceeding.

In your CLI, set the environment variable:

export OPENAI_API_KEY=my_key

You don't need to worry about how to run inference for the model; it's all handled by AIConfig. The prompt runs with gpt-3.5-turbo since that is the default_model for this AIConfig.
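As a rough illustration of the model selection described above, the sketch below picks a prompt's own model when one is set and falls back to default_model otherwise. The types and the modelForPrompt helper are hypothetical and exist only for this example; they are not part of the AIConfig SDK, which does this resolution for you.

```typescript
// Minimal shapes mirroring the fields used in travel.aiconfig.json.
// These types are illustrative, not the SDK's own type definitions.
interface PromptEntry {
  name: string;
  input: string;
  metadata?: { model?: string; parameters?: Record<string, string> };
}

interface ConfigFile {
  metadata: { default_model?: string };
  prompts: PromptEntry[];
}

// Hypothetical helper: use the prompt's own model if set, else the default.
function modelForPrompt(config: ConfigFile, promptName: string): string {
  const prompt = config.prompts.filter((p) => p.name === promptName)[0];
  if (prompt === undefined) {
    throw new Error("Unknown prompt: " + promptName);
  }
  const model = prompt.metadata?.model ?? config.metadata.default_model;
  if (model === undefined) {
    throw new Error("No model configured for prompt: " + promptName);
  }
  return model;
}

const travelConfig: ConfigFile = {
  metadata: { default_model: "gpt-3.5-turbo" },
  prompts: [
    { name: "get_activities", input: "Tell me 10 fun attractions to do in NYC." },
    {
      name: "gen_itinerary",
      input:
        "Generate an itinerary ordered by {{order_by}} for these activities: {{get_activities.output}}.",
      metadata: { model: "gpt-4", parameters: { order_by: "geographic location" } },
    },
  ],
};

const activitiesModel = modelForPrompt(travelConfig, "get_activities");
const itineraryModel = modelForPrompt(travelConfig, "gen_itinerary");
```

Here get_activities has no model of its own, so it falls back to the default, while gen_itinerary names gpt-4 explicitly.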

import * as path from "path";
import { AIConfigRuntime, InferenceOptions } from "aiconfig";

async function travelWithGPT() {
  const aiConfig = AIConfigRuntime.load(
    path.join(__dirname, "travel.aiconfig.json")
  );

  const options: InferenceOptions = {
    callbacks: {
      streamCallback: (data: any, _acc: any, _idx: any) => {
        // Write streamed content to console
        process.stdout.write(data?.content || "\n");
      },
    },
  };

  // Run a single prompt
  await aiConfig.run("get_activities", /*params*/ undefined, options);
}

gen_itinerary prompt.

This prompt depends on the output of get_activities. It also takes in parameters (user input) to determine the customized itinerary.

Let's take a closer look:

gen_itinerary prompt:

"Generate an itinerary ordered by {{order_by}} for these activities: {{get_activities.output}}."

prompt metadata:

{
  "metadata": {
    "model": "gpt-4",
    "parameters": {
      "order_by": "geographic location"
    }
  }
}

Observe the following:

The prompt depends on the output of the get_activities prompt.

It also depends on an order_by parameter (using {{handlebars}} syntax).

It uses gpt-4, whereas the get_activities prompt it depends on uses gpt-3.5-turbo.

Effectively, this is a prompt chain between the gen_itinerary and get_activities prompts, as well as a model chain between gpt-3.5-turbo and gpt-4.

Let's run this with AIConfig:

Replace the aiConfig.run call above with this:

// Run a prompt chain, with data passed in as params
// This will first run get_activities with GPT-3.5, and
// then use its output to run the gen_itinerary using GPT-4
await aiConfig.runWithDependencies(
  "gen_itinerary",
  /*params*/ { order_by: "duration" },
  options
);

Notice how simple the syntax is to perform a fairly complex task - running 2 different prompts across 2 different models and chaining one's output as part of the input of another.

The code will just run get_activities, then pipe its output as an input to gen_itinerary, and finally run gen_itinerary.

Let's save the AIConfig back to disk, and serialize the outputs from the latest inference run as well:

// Save the AIConfig to disk, and serialize outputs from the model run
aiConfig.save(
  "updated.aiconfig.json",
  /*saveOptions*/ { serializeOutputs: true }
);

aiconfig in a notebook editor

We can iterate on an aiconfig using a notebook-like editor called an AI Workbook. Now that we have an aiconfig file artifact that encapsulates the generative AI part of our application, we can iterate on it separately from the application code that uses it.

travel.aiconfig.json

https://github.com/lastmile-ai/aiconfig/assets/81494782/5d901493-bbda-4f8e-93c7-dd9a91bf242e

Try out the workbook playground here: NYC Travel Workbook

We are working on a local editor that you can run yourself. For now, please use the hosted version on https://lastmileai.dev.

There is a lot you can do with aiconfig. We have several other tutorials to help get you started:

Here are some example uses:

AIConfig supports the following models out of the box:

If you need to use a model that isn't provided out of the box, you can implement a ModelParser for it (see Extending AIConfig). We welcome contributions.

Read the Usage Guide for more details.

The AIConfig SDK supports CRUD operations for prompts, models, parameters and metadata. Here are some common examples.

The root interface is the AIConfigRuntime object. That is the entrypoint for interacting with an AIConfig programmatically.

Let's go over a few key CRUD operations to give a glimpse.

create

const config = AIConfigRuntime.create("aiconfig name", "description");

resolve

resolve deserializes an existing Prompt into the data object that its model expects.

config.resolve("prompt_name", params);

params are overrides you can specify to resolve any {{handlebars}} templates in the prompt. See the gen_itinerary prompt in the Getting Started example.
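To make the override behavior concrete, here is a minimal sketch of how parameter overrides could be applied to a {{handlebars}}-style template. It uses a simplified regex substitution as a stand-in for real handlebars templating, and fillTemplate is a hypothetical helper, not an SDK function. The activity list is placeholder text rather than real model output.

```typescript
// Simplified stand-in for handlebars templating: replaces {{key}} tokens
// with values from a lookup table and leaves unknown tokens untouched.
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{\s*([\w.]+)\s*\}\}/g, (token: string, key: string) => {
    if (!Object.prototype.hasOwnProperty.call(values, key)) {
      return token;
    }
    const value = values[key];
    return value === undefined ? token : value;
  });
}

// Defaults stored in the prompt's metadata.parameters.
const defaultParams: Record<string, string> = { order_by: "geographic location" };
// Overrides passed in by the caller at resolve time.
const overrideParams: Record<string, string> = { order_by: "duration" };
// Placeholder text standing in for the output of a previous prompt.
const priorOutputs: Record<string, string> = {
  "get_activities.output": "1. Central Park 2. The Met",
};

// Later spreads win, so caller overrides take precedence over defaults.
const resolvedInput = fillTemplate(
  "Generate an itinerary ordered by {{order_by}} for these activities: {{get_activities.output}}.",
  { ...defaultParams, ...priorOutputs, ...overrideParams }
);
```

With these inputs, the caller's order_by value replaces the default stored in the config, and the reference to get_activities.output is filled from the earlier prompt's result.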

serialize

serialize is the inverse of resolve -- it serializes the data object that a model understands into a Prompt object that can be serialized into the aiconfig format.

config.serialize("model_name", data, "prompt_name");

run

run is used to run inference for the specified Prompt.

config.run("prompt_name", params);

runWithDependencies

This is a variant of run -- this re-runs all prompt dependencies.

For example, in travel.aiconfig.json, the gen_itinerary prompt references the output of the get_activities prompt using {{get_activities.output}}.

Running this function will first execute get_activities, and use its output to resolve the gen_itinerary prompt before executing it.

This is transitive, so it computes the Directed Acyclic Graph of dependencies to execute. Complex relationships can be modeled this way.
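The dependency ordering can be sketched as a depth-first traversal over {{name.output}} references. This is only an illustration of the idea under simplified assumptions (dependencies are detected with a regex, and the helper names are hypothetical); it is not the SDK's implementation.

```typescript
interface PromptNode {
  name: string;
  input: string;
}

// Find prompt names referenced as {{name.output}} in a template.
function referencedPrompts(input: string): string[] {
  const pattern = /\{\{\s*(\w+)\.output\s*\}\}/g;
  const names: string[] = [];
  let match = pattern.exec(input);
  while (match !== null) {
    const name = match[1];
    if (name !== undefined && names.indexOf(name) === -1) {
      names.push(name);
    }
    match = pattern.exec(input);
  }
  return names;
}

// Depth-first traversal that returns prompts in the order they must run,
// ending with the target. Throws on cycles or unknown references.
function executionOrder(prompts: PromptNode[], target: string): string[] {
  const byName: Record<string, PromptNode> = Object.create(null);
  for (const p of prompts) {
    byName[p.name] = p;
  }
  const order: string[] = [];
  const visiting: string[] = [];

  function visit(name: string): void {
    if (order.indexOf(name) !== -1) return; // already scheduled
    if (visiting.indexOf(name) !== -1) {
      throw new Error("Cycle detected at prompt: " + name);
    }
    const node = byName[name];
    if (node === undefined) {
      throw new Error("Unknown prompt: " + name);
    }
    visiting.push(name);
    for (const dep of referencedPrompts(node.input)) {
      visit(dep);
    }
    visiting.pop();
    order.push(name);
  }

  visit(target);
  return order;
}

const travelOrder = executionOrder(
  [
    { name: "get_activities", input: "Tell me 10 fun attractions to do in NYC." },
    {
      name: "gen_itinerary",
      input:
        "Generate an itinerary ordered by {{order_by}} for these activities: {{get_activities.output}}.",
    },
  ],
  "gen_itinerary"
);
```

Plain parameters such as {{order_by}} are ignored by the dependency scan; only references ending in .output create edges in the graph.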

config.runWithDependencies("gen_itinerary");

Use the get/setMetadata and get/setParameter methods to interact with metadata and parameters (setParameter is just syntactic sugar to update "metadata.parameters").

config.setMetadata("key", data, "prompt_name");

Note: if "prompt_name" is specified, the metadata is updated specifically for that prompt. Otherwise, the global metadata is updated.
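The scoping rule in the note can be pictured with a small sketch over plain objects. The SketchConfig shape and the setMetadataSketch helper are hypothetical and only mirror the behavior described here; the real method lives on AIConfigRuntime.

```typescript
interface SketchPrompt {
  name: string;
  metadata?: Record<string, unknown>;
}

interface SketchConfig {
  metadata: Record<string, unknown>;
  prompts: SketchPrompt[];
}

// Hypothetical helper: write to a prompt's metadata when promptName is given,
// otherwise to the config-level (global) metadata.
function setMetadataSketch(
  config: SketchConfig,
  key: string,
  value: unknown,
  promptName?: string
): void {
  if (promptName === undefined) {
    config.metadata[key] = value;
    return;
  }
  const prompt = config.prompts.filter((p) => p.name === promptName)[0];
  if (prompt === undefined) {
    throw new Error("Unknown prompt: " + promptName);
  }
  if (prompt.metadata === undefined) {
    prompt.metadata = {};
  }
  prompt.metadata[key] = value;
}

const sketchConfig: SketchConfig = {
  metadata: {},
  prompts: [{ name: "gen_itinerary" }],
};

// No prompt name: updates global metadata.
setMetadataSketch(sketchConfig, "owner", "travel-team");
// Prompt name given: updates only that prompt's metadata.
setMetadataSketch(sketchConfig, "reviewed", true, "gen_itinerary");
```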

AIConfigRuntime.registerModelParser

Use AIConfigRuntime.registerModelParser if you want to use a different ModelParser, or configure AIConfig to work with an additional model.

AIConfig uses the model name string to retrieve the right ModelParser for a given Prompt (see AIConfigRuntime.getModelParser), so you can register a different ModelParser for the same ID to override which ModelParser handles a Prompt.

For example, suppose I want to use MyOpenAIModelParser to handle gpt-4 prompts. I can do the following at the start of my application:

AIConfigRuntime.registerModelParser(myModelParserInstance, ["gpt-4"]);
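The lookup-by-model-name behavior can be sketched as a simple registry where the most recent registration for a name wins. SketchParser and ParserRegistry are hypothetical names used only for this illustration; the real ModelParser interface has more to it, and the SDK manages its own registry.

```typescript
// Hypothetical minimal parser shape, for illustration only.
interface SketchParser {
  id: string;
}

class ParserRegistry {
  private parsers: Record<string, SketchParser> = Object.create(null);

  // Register a parser under explicit model names, or under its own id
  // when no names are given. A later registration replaces an earlier one.
  register(parser: SketchParser, modelNames?: string[]): void {
    const keys =
      modelNames !== undefined && modelNames.length > 0 ? modelNames : [parser.id];
    for (const key of keys) {
      this.parsers[key] = parser;
    }
  }

  // Look up the parser that handles a given model name.
  get(modelName: string): SketchParser | undefined {
    return this.parsers[modelName];
  }
}

const registry = new ParserRegistry();
registry.register({ id: "gpt-4" });
// Override which parser handles gpt-4 prompts.
registry.register({ id: "MyOpenAIModelParser" }, ["gpt-4"]);

const gpt4Parser = registry.get("gpt-4");
```

Because lookup is keyed on the model name string, overriding a parser only requires registering a new one under the same name before any prompts run.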

Use callback events to trace and monitor what's going on -- helpful for debugging and observability.

import * as path from "path";
import {
  AIConfigRuntime,
  Callback,
  CallbackEvent,
  CallbackManager,
} from "aiconfig";

const config = AIConfigRuntime.load(path.join(__dirname, "aiconfig.json"));

const myCustomCallback: Callback = async (event: CallbackEvent) => {
  console.log(`Event triggered: ${event.name}`, event);
};

const callbackManager = new CallbackManager([myCustomCallback]);
config.setCallbackManager(callbackManager);

await config.run("prompt_name");

AIConfig is designed to be customized and extended for your use-case. The Extensibility guide goes into more detail.

Currently, there are 3 core ways to extend AIConfig:

aiconfig

aiconfig

This is our first open-source project and we'd love your help.

See our contributing guidelines -- we would especially love help adding support for additional models that the community wants.

We provide several guides to demonstrate the power of aiconfig.

See the cookbooks folder for examples to clone.

Wizard GPT - speak to a wizard on your CLI

CLI-mate - help you make code-mods interactively on your codebase.

At its core, RAG is about passing data into prompts. Read how to pass data with AIConfig.

A variant of chain-of-thought is Chain of Verification, used to help reduce hallucinations. Check out the aiconfig cookbook for CoVe:

aiconfig

This project is under active development.

If you'd like to help, please see the contributing guidelines.

Please create issues for additional capabilities you'd like to see.

Here's what's already on our roadmap:

aiconfig artifacts to be evaluated with user-defined eval functions.

aiconfig: enable you to interact with aiconfigs more intuitively.

aiconfig file?

Editing a config should be done either programmatically via SDK or via the UI (workbooks):

Programmatic editing.

Edit with a workbook editor: this is similar to editing an ipynb file as a notebook (most people never touch the json ipynb directly)

You should only edit the aiconfig by hand for minor modifications, like tweaking a prompt string or updating some metadata.

Out of the box, AIConfig already supports all OpenAI GPT* models, Google’s PaLM model and any “text-generation” model on Hugging Face (like Mistral). See Supported Models for more details.

Additionally, you can install aiconfig extensions for additional models (see question below).

Yes. This example goes through how to do it.

We are also working on adding support for the Assistants API.

Model support is implemented as “ModelParser”s in the AIConfig SDK, and the idea is that anyone, including you, can define a ModelParser (and even publish it as an extension package).

All that’s needed to use a model with AIConfig is a ModelParser that knows

For more details, see Extensibility.

aiconfig?

The AIConfigRuntime object is used to interact with an aiconfig programmatically (see SDK usage guide). As you run prompts, this object keeps track of the outputs returned from the model.

You can choose to serialize these outputs back into the aiconfig by using the config.save("updated.aiconfig.json", { serializeOutputs: true }) API. This can be useful for preserving context -- think of it like session state.

For example, you can use aiconfig to create a chatbot, and use the same format to save the chat history so it can be resumed for the next session.

You can also choose to save outputs to a different file than the original config -- config.save("history.aiconfig.json", { serializeOutputs: true }).
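As a rough picture of what saving with and without outputs means, the sketch below builds the JSON text for a config and optionally drops the recorded outputs. The StoredConfig shape and the toJsonText helper are hypothetical simplifications for this example; the actual aiconfig schema and save logic are richer.

```typescript
interface StoredPrompt {
  name: string;
  input: string;
  outputs?: string[];
}

interface StoredConfig {
  name: string;
  prompts: StoredPrompt[];
}

// Produce the JSON text to write to disk, optionally dropping outputs.
function toJsonText(config: StoredConfig, includeOutputs: boolean): string {
  const prompts = config.prompts.map((p) => {
    const copy: StoredPrompt = { name: p.name, input: p.input };
    if (includeOutputs && p.outputs !== undefined) {
      copy.outputs = p.outputs.slice();
    }
    return copy;
  });
  return JSON.stringify({ name: config.name, prompts: prompts }, null, 2);
}

// Placeholder chat state; the output text is made up for the example.
const chatSession: StoredConfig = {
  name: "Chatbot",
  prompts: [{ name: "greeting", input: "Say hello.", outputs: ["Hi there!"] }],
};

// Keeps outputs, so a later session can resume from the saved history.
const withHistory = toJsonText(chatSession, true);
// Drops outputs, leaving only prompts and settings.
const withoutHistory = toJsonText(chatSession, false);
```

Writing the first string to a separate history file and the second to the original config keeps the source-controlled file free of run-specific state.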

aiconfig instead of things like configurator?

It helps to have a standardized format specifically for storing generative AI prompts, inference results, model parameters and arbitrary metadata, as opposed to a general-purpose configuration schema.

With that standardization, you just need a layer that knows how to serialize/deserialize from that format into whatever the inference endpoints require.

ipynb for Jupyter notebooks

We believe that notebooks are a perfect iteration environment for generative AI -- they are flexible, multi-modal, and collaborative.

The multi-modality and flexibility offered by notebooks and ipynb offer a good interaction model for generative AI. The aiconfig file format is extensible like ipynb, and the AI Workbook editor allows rapid iteration in a notebook-like IDE.

AI Workbooks are to AIConfig what Jupyter notebooks are to ipynb.

There are 2 areas where we are going beyond what notebooks offer:

aiconfig is more source-control friendly than ipynb. ipynb stores binary data (images, etc.) by encoding it in the file, while aiconfig recommends using file URI references instead.

aiconfig can be imported and connected to application code using the AIConfig SDK.

We found that aiconfig has an unhealthy version release cadence and level of project activity: the last version was released a year ago. It has 5 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

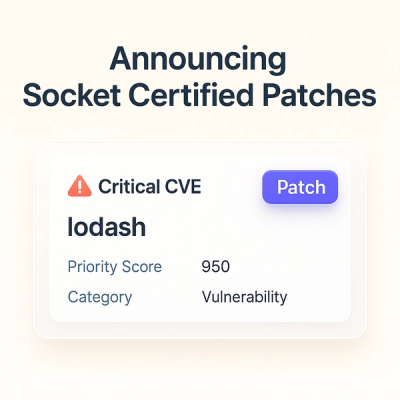

Product

Socket now scans OpenVSX extensions, giving teams early detection of risky behaviors, hidden capabilities, and supply chain threats in developer tools.

Product

Bringing supply chain security to the next generation of JavaScript package managers

Product

A safer, faster way to eliminate vulnerabilities without updating dependencies