Product

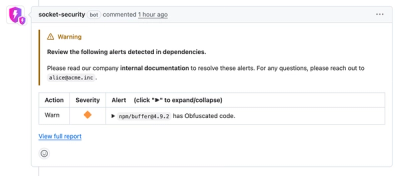

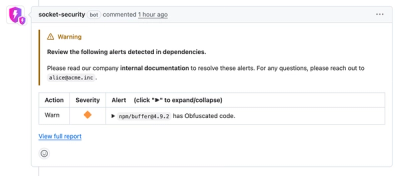

Introducing Custom Pull Request Alert Comment Headers

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

aws-dynamodb-query-iterator


Abstraction for DynamoDB queries and scans that handles pagination and parallel worker coordination

This library provides utilities for automatically iterating over all DynamoDB records returned by a query or scan operation using async iterables. Each iterator and paginator included in this package automatically tracks DynamoDB metadata and supports resuming iteration from any point within a full query or scan.

Paginators are asynchronous iterables that yield each page of results returned by a DynamoDB query or scan operation. For sequential paginators, each invocation of the next method corresponds to one invocation of the underlying API operation, until no more pages are available.
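The one-call-per-page behaviour described above can be pictured with a minimal sketch. It does not use this package or the AWS SDK: `Page`, `makeFetchPage`, and `paginate` are hypothetical names, and the in-memory `fetchPage` function merely stands in for the underlying API operation, using a numeric offset where DynamoDB would return a key.

```typescript
// Hypothetical page shape, loosely modelled on a DynamoDB response.
interface Page<T> {
    Items: T[];
    // Offset of the next unread item; undefined when nothing is left.
    LastEvaluatedKey: number | undefined;
}

// Hypothetical stand-in for the API operation: serves `pageSize` items
// at a time from an in-memory array.
function makeFetchPage<T>(table: T[], pageSize: number) {
    return async (startKey: number = 0): Promise<Page<T>> => {
        const end = Math.min(startKey + pageSize, table.length);
        return {
            Items: table.slice(startKey, end),
            LastEvaluatedKey: end < table.length ? end : undefined,
        };
    };
}

// Every call to `next` on this generator performs exactly one fetch.
// Iteration ends once a response carries no LastEvaluatedKey.
async function* paginate<T>(
    fetchPage: (startKey?: number) => Promise<Page<T>>
): AsyncGenerator<Page<T>, void, undefined> {
    let startKey: number | undefined = undefined;
    do {
        const page: Page<T> = await fetchPage(startKey);
        yield page;
        startKey = page.LastEvaluatedKey;
    } while (startKey !== undefined);
}
```

Consuming `paginate(makeFetchPage(rows, 2))` in a for await loop yields the rows two at a time, followed by a shorter final page if the row count is odd.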

QueryPaginator retrieves all pages of a DynamoDB query in order.

import { QueryPaginator } from '@aws/dynamodb-query-iterator';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const paginator = new QueryPaginator(
    new DynamoDB({region: 'us-west-2'}),
    {
        TableName: 'my_table',
        KeyConditionExpression: 'partitionKey = :value',
        ExpressionAttributeValues: {
            ':value': {S: 'foo'}
        },
        ReturnConsumedCapacity: 'INDEXES'
    }
);

for await (const page of paginator) {
    // do something with `page`
}

// Inspect the total number of items yielded
console.log(paginator.count);

// Inspect the total number of items scanned by this operation
console.log(paginator.scannedCount);

// Inspect the capacity consumed by this operation
// This will only be available if `ReturnConsumedCapacity` was set on the input
console.log(paginator.consumedCapacity);
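How running totals such as count and scannedCount might be kept while pages stream past can be sketched as follows. This is an illustration, not the package's implementation: `CountingPaginator`, `PageMetadata`, and `examplePages` are hypothetical names, and only the `Count` and `ScannedCount` response fields are taken from DynamoDB itself.

```typescript
// Subset of the metadata that a DynamoDB query or scan response carries.
interface PageMetadata {
    Count?: number;
    ScannedCount?: number;
}

// Hypothetical wrapper that passes pages through unchanged while
// accumulating their metadata.
class CountingPaginator<P extends PageMetadata> implements AsyncIterable<P> {
    count = 0;
    scannedCount = 0;
    private readonly pages: AsyncIterable<P>;

    constructor(pages: AsyncIterable<P>) {
        this.pages = pages;
    }

    async *[Symbol.asyncIterator](): AsyncGenerator<P, void, undefined> {
        for await (const page of this.pages) {
            // Totals are updated before the page is handed to the caller,
            // so they already include the page currently being processed.
            this.count += page.Count ?? 0;
            this.scannedCount += page.ScannedCount ?? 0;
            yield page;
        }
    }
}

// Illustrative page source with made-up numbers.
async function* examplePages(): AsyncGenerator<PageMetadata, void, undefined> {
    yield {Count: 2, ScannedCount: 5};
    yield {Count: 1, ScannedCount: 4};
}
```

Because the totals are updated as each page is yielded, reading them after an early break reflects only the pages fetched so far.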

You can suspend any running query from within the for loop by using the break keyword. If there are still pages that have not been fetched, the lastEvaluatedKey property of paginator will be defined. This can be provided as the ExclusiveStartKey for another QueryPaginator instance:

import { QueryPaginator } from '@aws/dynamodb-query-iterator';
import { QueryInput } from 'aws-sdk/clients/dynamodb';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const dynamoDb = new DynamoDB({region: 'us-west-2'});
const input: QueryInput = {
    TableName: 'my_table',
    KeyConditionExpression: 'partitionKey = :value',
    ExpressionAttributeValues: {
        ':value': {S: 'foo'}
    },
    ReturnConsumedCapacity: 'INDEXES'
};

const paginator = new QueryPaginator(dynamoDb, input);

for await (const page of paginator) {
    // do something with the first page of results
    break;
}

for await (const page of new QueryPaginator(dynamoDb, {
    ...input,
    ExclusiveStartKey: paginator.lastEvaluatedKey
})) {
    // do something with the remaining pages
}

Suspending and resuming the same paginator instance is not supported.
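One plausible way to understand this restriction is the way JavaScript handles an early exit from for await: leaving the loop with break calls the iterator's return method, and for an async generator that completes it for good. The sketch below shows that language behaviour with a plain async generator. Whether the package's paginators are built this way is an assumption, and `threePages` and `collectAfterBreak` are hypothetical names.

```typescript
// A stand-in for a paginator with three pages left to yield.
async function* threePages(): AsyncGenerator<string, void, undefined> {
    yield 'page 1';
    yield 'page 2';
    yield 'page 3';
}

async function collectAfterBreak(): Promise<{first: string[]; second: string[]}> {
    const pages = threePages();

    const first: string[] = [];
    for await (const page of pages) {
        first.push(page);
        break; // the loop calls pages.return(), which finishes the generator
    }

    // Looping over the same object again finds it already completed,
    // so the body below never runs.
    const second: string[] = [];
    for await (const page of pages) {
        second.push(page);
    }

    return {first, second};
}
```

Here `first` ends up holding only 'page 1' and `second` stays empty, which is why the examples above construct a fresh paginator and hand it the saved key instead of looping over the old instance again.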

ScanPaginator retrieves all pages of a DynamoDB scan in order.

import { ScanPaginator } from '@aws/dynamodb-query-iterator';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const paginator = new ScanPaginator(
    new DynamoDB({region: 'us-west-2'}),
    {
        TableName: 'my_table',
        ReturnConsumedCapacity: 'INDEXES'
    }
);

for await (const page of paginator) {
    // do something with `page`
}

// Inspect the total number of items yielded
console.log(paginator.count);

// Inspect the total number of items scanned by this operation
console.log(paginator.scannedCount);

// Inspect the capacity consumed by this operation
// This will only be available if `ReturnConsumedCapacity` was set on the input
console.log(paginator.consumedCapacity);

You can suspend any running scan from within the for loop by using the break keyword. If there are still pages that have not been fetched, the lastEvaluatedKey property of paginator will be defined. This can be provided as the ExclusiveStartKey for another ScanPaginator instance:

import { ScanPaginator } from '@aws/dynamodb-query-iterator';
import { ScanInput } from 'aws-sdk/clients/dynamodb';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const dynamoDb = new DynamoDB({region: 'us-west-2'});
const input: ScanInput = {
    TableName: 'my_table',
    ReturnConsumedCapacity: 'INDEXES'
};

const paginator = new ScanPaginator(dynamoDb, input);

for await (const page of paginator) {
    // do something with the first page of results
    break;
}

for await (const page of new ScanPaginator(dynamoDb, {
    ...input,
    ExclusiveStartKey: paginator.lastEvaluatedKey
})) {
    // do something with the remaining pages
}

Suspending and resuming the same paginator instance is not supported.

ParallelScanPaginator retrieves all pages of a DynamoDB scan using a configurable number of scan segments that operate in parallel. When performing a parallel scan, you must specify the total number of segments you wish to use, and the input must not include an ExclusiveStartKey or a Segment identifier.

import { ParallelScanPaginator } from '@aws/dynamodb-query-iterator';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const paginator = new ParallelScanPaginator(
    new DynamoDB({region: 'us-west-2'}),
    {
        TableName: 'my_table',
        TotalSegments: 4,
        ReturnConsumedCapacity: 'INDEXES'
    }
);

for await (const page of paginator) {
    // do something with `page`
}

// Inspect the total number of items yielded
console.log(paginator.count);

// Inspect the total number of items scanned by this operation
console.log(paginator.scannedCount);

// Inspect the capacity consumed by this operation
// This will only be available if `ReturnConsumedCapacity` was set on the input
console.log(paginator.consumedCapacity);

You can suspend any running scan from within the for loop by using the break keyword. If there are still pages that have not been fetched, the scanState property of the interrupted paginator can be provided to the constructor of another ParallelScanPaginator instance:

import {
    ParallelScanInput,
    ParallelScanPaginator,
} from '@aws/dynamodb-query-iterator';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const client = new DynamoDB({region: 'us-west-2'});
const input: ParallelScanInput = {
    TableName: 'my_table',
    TotalSegments: 4,
    ReturnConsumedCapacity: 'INDEXES'
};

const paginator = new ParallelScanPaginator(client, input);

for await (const page of paginator) {
    // do something with the first page of results
    break;
}

for await (const page of new ParallelScanPaginator(
    client,
    input,
    paginator.scanState
)) {
    // do something with the remaining pages
}

Suspending and resuming the same paginator instance is not supported.
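The coordination of parallel segments described in this section can be approximated by keeping one request in flight per segment and yielding whichever page arrives first. The sketch below shows that general technique, not the package's actual implementation. `SegmentPage`, `FetchSegmentPage`, `makeSegmentFetcher`, and `parallelPaginate` are hypothetical names, and the data source is an in-memory array instead of DynamoDB.

```typescript
// Hypothetical page shape: the segment it came from, its items, and an
// offset for the next page of that segment (undefined when exhausted).
interface SegmentPage<T> {
    segment: number;
    Items: T[];
    LastEvaluatedKey: number | undefined;
}

type FetchSegmentPage<T> = (
    segment: number,
    startKey?: number
) => Promise<SegmentPage<T>>;

// In-memory stand-in for a segmented scan: segments[i] holds segment i's rows.
function makeSegmentFetcher<T>(segments: T[][], pageSize: number): FetchSegmentPage<T> {
    return async (segment, startKey = 0) => {
        const rows = segments[segment] ?? [];
        const end = Math.min(startKey + pageSize, rows.length);
        return {
            segment,
            Items: rows.slice(startKey, end),
            LastEvaluatedKey: end < rows.length ? end : undefined,
        };
    };
}

async function* parallelPaginate<T>(
    totalSegments: number,
    fetchPage: FetchSegmentPage<T>
): AsyncGenerator<SegmentPage<T>, void, undefined> {
    // One in-flight request per unfinished segment, keyed by segment number.
    const pending = new Map<number, Promise<SegmentPage<T>>>();
    for (let segment = 0; segment < totalSegments; segment++) {
        pending.set(segment, fetchPage(segment));
    }

    while (pending.size > 0) {
        // Take whichever segment responds first.
        const page = await Promise.race(Array.from(pending.values()));
        if (page.LastEvaluatedKey === undefined) {
            pending.delete(page.segment); // segment exhausted
        } else {
            // Immediately request that segment's next page.
            pending.set(page.segment, fetchPage(page.segment, page.LastEvaluatedKey));
        }
        yield page;
    }
}
```

Pages from different segments can arrive in any order, so a consumer should not rely on ordering across segments. Resuming such a scan would require remembering the last key of every segment, which is the kind of information a state object like scanState would need to carry.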

Iterators are asynchronous iterables that yield each record returned by a DynamoDB query or scan operation. Each invocation of the next method may invoke the underlying API operation, until no more pages are available.
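The relationship between pages and records can be sketched as a generator that flattens pages into individual items. A new page is requested only once the current one is exhausted, which is why a call to next may or may not reach the underlying API. The names `ItemPage`, `iterateItems`, and `samplePages` are hypothetical, and the pages come from memory instead of DynamoDB.

```typescript
// Hypothetical page shape: only the item list matters for this sketch.
interface ItemPage<T> {
    Items?: T[];
}

// Flattens any async iterable of pages into an async iterable of items.
async function* iterateItems<T>(
    pages: AsyncIterable<ItemPage<T>>
): AsyncGenerator<T, void, undefined> {
    for await (const page of pages) {
        // Items within a page are yielded without further fetching.
        // Empty pages are skipped over transparently.
        for (const item of page.Items ?? []) {
            yield item;
        }
    }
}

// Illustrative page source, including an empty page in the middle.
async function* samplePages(): AsyncGenerator<ItemPage<string>, void, undefined> {
    yield {Items: ['a', 'b']};
    yield {Items: []};
    yield {Items: ['c']};
}
```

Iterating `iterateItems(samplePages())` yields 'a', 'b', and 'c' in that order, with the empty page contributing nothing.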

QueryIterator retrieves all records of a DynamoDB query in order.

import { QueryIterator } from '@aws/dynamodb-query-iterator';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const iterator = new QueryIterator(
    new DynamoDB({region: 'us-west-2'}),
    {
        TableName: 'my_table',
        KeyConditionExpression: 'partitionKey = :value',
        ExpressionAttributeValues: {
            ':value': {S: 'foo'}
        },
        ReturnConsumedCapacity: 'INDEXES'
    },
    ['partitionKey']
);

for await (const record of iterator) {
    // do something with `record`
}

// Inspect the total number of items yielded
console.log(iterator.count);

// Inspect the total number of items scanned by this operation
console.log(iterator.scannedCount);

// Inspect the capacity consumed by this operation
// This will only be available if `ReturnConsumedCapacity` was set on the input
console.log(iterator.consumedCapacity);

ScanIterator retrieves all records of a DynamoDB scan in order.

import { ScanIterator } from '@aws/dynamodb-query-iterator';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const iterator = new ScanIterator(
    new DynamoDB({region: 'us-west-2'}),
    {
        TableName: 'my_table',
        ReturnConsumedCapacity: 'INDEXES'
    },
    ['partitionKey', 'sortKey']
);

for await (const record of iterator) {
    // do something with `record`
}

// Inspect the total number of items yielded
console.log(iterator.count);

// Inspect the total number of items scanned by this operation
console.log(iterator.scannedCount);

// Inspect the capacity consumed by this operation
// This will only be available if `ReturnConsumedCapacity` was set on the input
console.log(iterator.consumedCapacity);

ParallelScanIterator retrieves all records of a DynamoDB scan using a configurable number of scan segments that operate in parallel. When performing a parallel scan, you must specify the total number of segments you wish to use, and the input must not include an ExclusiveStartKey or a Segment identifier.

import { ParallelScanIterator } from '@aws/dynamodb-query-iterator';
import DynamoDB = require('aws-sdk/clients/dynamodb');

const iterator = new ParallelScanIterator(
    new DynamoDB({region: 'us-west-2'}),
    {
        TableName: 'my_table',
        TotalSegments: 4,
        ReturnConsumedCapacity: 'INDEXES'
    },
    ['partitionKey']
);

for await (const record of iterator) {
    // do something with `record`
}

// Inspect the total number of items yielded
console.log(iterator.count);

// Inspect the total number of items scanned by this operation
console.log(iterator.scannedCount);

// Inspect the capacity consumed by this operation
// This will only be available if `ReturnConsumedCapacity` was set on the input
console.log(iterator.consumedCapacity);

