face-api.js


Comparing version 0.13.0 to 0.14.0

| "use strict"; | ||

| Object.defineProperty(exports, "__esModule", { value: true }); | ||

| var tfjs_image_recognition_base_1 = require("tfjs-image-recognition-base"); | ||

| var tfjs_tiny_yolov2_1 = require("tfjs-tiny-yolov2"); | ||

| var extractorsFactory_1 = require("./extractorsFactory"); | ||

| function extractParams(weights) { | ||

| var paramMappings = []; | ||

| var _a = tfjs_image_recognition_base_1.extractWeightsFactory(weights), extractWeights = _a.extractWeights, getRemainingWeights = _a.getRemainingWeights; | ||

| var extractConvParams = tfjs_tiny_yolov2_1.extractConvParamsFactory(extractWeights, paramMappings); | ||

| var extractFCParams = tfjs_tiny_yolov2_1.extractFCParamsFactory(extractWeights, paramMappings); | ||

| var conv0 = extractConvParams(3, 32, 3, 'conv0'); | ||

| var conv1 = extractConvParams(32, 64, 3, 'conv1'); | ||

| var conv2 = extractConvParams(64, 64, 3, 'conv2'); | ||

| var conv3 = extractConvParams(64, 64, 3, 'conv3'); | ||

| var conv4 = extractConvParams(64, 64, 3, 'conv4'); | ||

| var conv5 = extractConvParams(64, 128, 3, 'conv5'); | ||

| var conv6 = extractConvParams(128, 128, 3, 'conv6'); | ||

| var conv7 = extractConvParams(128, 256, 3, 'conv7'); | ||

| var fc0 = extractFCParams(6400, 1024, 'fc0'); | ||

| var fc1 = extractFCParams(1024, 136, 'fc1'); | ||

| var _b = extractorsFactory_1.extractorsFactory(extractWeights, paramMappings), extractDenseBlock4Params = _b.extractDenseBlock4Params, extractFCParams = _b.extractFCParams; | ||

| var dense0 = extractDenseBlock4Params(3, 32, 'dense0', true); | ||

| var dense1 = extractDenseBlock4Params(32, 64, 'dense1'); | ||

| var dense2 = extractDenseBlock4Params(64, 128, 'dense2'); | ||

| var dense3 = extractDenseBlock4Params(128, 256, 'dense3'); | ||

| var fc = extractFCParams(256, 136, 'fc'); | ||

| if (getRemainingWeights().length !== 0) { | ||

@@ -25,14 +19,3 @@ throw new Error("weights remaing after extract: " + getRemainingWeights().length); | ||

| paramMappings: paramMappings, | ||

| params: { | ||

| conv0: conv0, | ||

| conv1: conv1, | ||

| conv2: conv2, | ||

| conv3: conv3, | ||

| conv4: conv4, | ||

| conv5: conv5, | ||

| conv6: conv6, | ||

| conv7: conv7, | ||

| fc0: fc0, | ||

| fc1: fc1 | ||

| } | ||

| params: { dense0: dense0, dense1: dense1, dense2: dense2, dense3: dense3, fc: fc } | ||

| }; | ||

@@ -39,0 +22,0 @@ } |

@@ -5,3 +5,4 @@ "use strict"; | ||

| var tf = require("@tensorflow/tfjs-core"); | ||

| var tfjs_tiny_yolov2_1 = require("tfjs-tiny-yolov2"); | ||

| var tfjs_image_recognition_base_1 = require("tfjs-image-recognition-base"); | ||

| var depthwiseSeparableConv_1 = require("./depthwiseSeparableConv"); | ||

| var extractParams_1 = require("./extractParams"); | ||

@@ -11,13 +12,20 @@ var FaceLandmark68NetBase_1 = require("./FaceLandmark68NetBase"); | ||

| var loadQuantizedParams_1 = require("./loadQuantizedParams"); | ||

| function conv(x, params) { | ||

| return tfjs_tiny_yolov2_1.convLayer(x, params, 'valid', true); | ||

| function denseBlock(x, denseBlockParams, isFirstLayer) { | ||

| if (isFirstLayer === void 0) { isFirstLayer = false; } | ||

| return tf.tidy(function () { | ||

| var out1 = tf.relu(isFirstLayer | ||

| ? tf.add(tf.conv2d(x, denseBlockParams.conv0.filters, [2, 2], 'same'), denseBlockParams.conv0.bias) | ||

| : depthwiseSeparableConv_1.depthwiseSeparableConv(x, denseBlockParams.conv0, [2, 2])); | ||

| var out2 = depthwiseSeparableConv_1.depthwiseSeparableConv(out1, denseBlockParams.conv1, [1, 1]); | ||

| var in3 = tf.relu(tf.add(out1, out2)); | ||

| var out3 = depthwiseSeparableConv_1.depthwiseSeparableConv(in3, denseBlockParams.conv2, [1, 1]); | ||

| var in4 = tf.relu(tf.add(out1, tf.add(out2, out3))); | ||

| var out4 = depthwiseSeparableConv_1.depthwiseSeparableConv(in4, denseBlockParams.conv3, [1, 1]); | ||

| return tf.relu(tf.add(out1, tf.add(out2, tf.add(out3, out4)))); | ||

| }); | ||

| } | ||

| function maxPool(x, strides) { | ||

| if (strides === void 0) { strides = [2, 2]; } | ||

| return tf.maxPool(x, [2, 2], strides, 'valid'); | ||

| } | ||

| var FaceLandmark68Net = /** @class */ (function (_super) { | ||

| tslib_1.__extends(FaceLandmark68Net, _super); | ||

| function FaceLandmark68Net() { | ||

| return _super.call(this, 'FaceLandmark68Net') || this; | ||

| return _super.call(this, 'FaceLandmark68LargeNet') || this; | ||

| } | ||

@@ -27,20 +35,14 @@ FaceLandmark68Net.prototype.runNet = function (input) { | ||

| if (!params) { | ||

| throw new Error('FaceLandmark68Net - load model before inference'); | ||

| throw new Error('FaceLandmark68LargeNet - load model before inference'); | ||

| } | ||

| return tf.tidy(function () { | ||

| var batchTensor = input.toBatchTensor(128, true).toFloat(); | ||

| var out = conv(batchTensor, params.conv0); | ||

| out = maxPool(out); | ||

| out = conv(out, params.conv1); | ||

| out = conv(out, params.conv2); | ||

| out = maxPool(out); | ||

| out = conv(out, params.conv3); | ||

| out = conv(out, params.conv4); | ||

| out = maxPool(out); | ||

| out = conv(out, params.conv5); | ||

| out = conv(out, params.conv6); | ||

| out = maxPool(out, [1, 1]); | ||

| out = conv(out, params.conv7); | ||

| var fc0 = tf.relu(fullyConnectedLayer_1.fullyConnectedLayer(out.as2D(out.shape[0], -1), params.fc0)); | ||

| return fullyConnectedLayer_1.fullyConnectedLayer(fc0, params.fc1); | ||

| var batchTensor = input.toBatchTensor(112, true); | ||

| var meanRgb = [122.782, 117.001, 104.298]; | ||

| var normalized = tfjs_image_recognition_base_1.normalize(batchTensor, meanRgb).div(tf.scalar(255)); | ||

| var out = denseBlock(normalized, params.dense0, true); | ||

| out = denseBlock(out, params.dense1); | ||

| out = denseBlock(out, params.dense2); | ||

| out = denseBlock(out, params.dense3); | ||

| out = tf.avgPool(out, [7, 7], [2, 2], 'valid'); | ||

| return fullyConnectedLayer_1.fullyConnectedLayer(out.as2D(out.shape[0], -1), params.fc); | ||

| }); | ||

@@ -47,0 +49,0 @@ }; |

| import { FaceLandmark68Net } from './FaceLandmark68Net'; | ||

| export * from './FaceLandmark68Net'; | ||

| export * from './FaceLandmark68TinyNet'; | ||

| export declare class FaceLandmarkNet extends FaceLandmark68Net { | ||

@@ -4,0 +5,0 @@ } |

@@ -6,2 +6,3 @@ "use strict"; | ||

| tslib_1.__exportStar(require("./FaceLandmark68Net"), exports); | ||

| tslib_1.__exportStar(require("./FaceLandmark68TinyNet"), exports); | ||

| var FaceLandmarkNet = /** @class */ (function (_super) { | ||

@@ -8,0 +9,0 @@ tslib_1.__extends(FaceLandmarkNet, _super); |

@@ -5,23 +5,7 @@ "use strict"; | ||

| var tfjs_image_recognition_base_1 = require("tfjs-image-recognition-base"); | ||

| var loadParamsFactory_1 = require("./loadParamsFactory"); | ||

| var DEFAULT_MODEL_NAME = 'face_landmark_68_model'; | ||

| function extractorsFactory(weightMap, paramMappings) { | ||

| var extractWeightEntry = tfjs_image_recognition_base_1.extractWeightEntryFactory(weightMap, paramMappings); | ||

| function extractConvParams(prefix, mappedPrefix) { | ||

| var filters = extractWeightEntry(prefix + "/kernel", 4, mappedPrefix + "/filters"); | ||

| var bias = extractWeightEntry(prefix + "/bias", 1, mappedPrefix + "/bias"); | ||

| return { filters: filters, bias: bias }; | ||

| } | ||

| function extractFcParams(prefix, mappedPrefix) { | ||

| var weights = extractWeightEntry(prefix + "/kernel", 2, mappedPrefix + "/weights"); | ||

| var bias = extractWeightEntry(prefix + "/bias", 1, mappedPrefix + "/bias"); | ||

| return { weights: weights, bias: bias }; | ||

| } | ||

| return { | ||

| extractConvParams: extractConvParams, | ||

| extractFcParams: extractFcParams | ||

| }; | ||

| } | ||

| function loadQuantizedParams(uri) { | ||

| return tslib_1.__awaiter(this, void 0, void 0, function () { | ||

| var weightMap, paramMappings, _a, extractConvParams, extractFcParams, params; | ||

| var weightMap, paramMappings, _a, extractDenseBlock4Params, extractFcParams, params; | ||

| return tslib_1.__generator(this, function (_b) { | ||

@@ -33,14 +17,9 @@ switch (_b.label) { | ||

| paramMappings = []; | ||

| _a = extractorsFactory(weightMap, paramMappings), extractConvParams = _a.extractConvParams, extractFcParams = _a.extractFcParams; | ||

| _a = loadParamsFactory_1.loadParamsFactory(weightMap, paramMappings), extractDenseBlock4Params = _a.extractDenseBlock4Params, extractFcParams = _a.extractFcParams; | ||

| params = { | ||

| conv0: extractConvParams('conv2d_0', 'conv0'), | ||

| conv1: extractConvParams('conv2d_1', 'conv1'), | ||

| conv2: extractConvParams('conv2d_2', 'conv2'), | ||

| conv3: extractConvParams('conv2d_3', 'conv3'), | ||

| conv4: extractConvParams('conv2d_4', 'conv4'), | ||

| conv5: extractConvParams('conv2d_5', 'conv5'), | ||

| conv6: extractConvParams('conv2d_6', 'conv6'), | ||

| conv7: extractConvParams('conv2d_7', 'conv7'), | ||

| fc0: extractFcParams('dense', 'fc0'), | ||

| fc1: extractFcParams('logits', 'fc1') | ||

| dense0: extractDenseBlock4Params('dense0', true), | ||

| dense1: extractDenseBlock4Params('dense1'), | ||

| dense2: extractDenseBlock4Params('dense2'), | ||

| dense3: extractDenseBlock4Params('dense3'), | ||

| fc: extractFcParams('fc') | ||

| }; | ||

@@ -47,0 +26,0 @@ tfjs_image_recognition_base_1.disposeUnusedWeightTensors(weightMap, paramMappings); |

@@ -0,13 +1,40 @@ | ||

| import * as tf from '@tensorflow/tfjs-core'; | ||

| import { ConvParams, FCParams } from 'tfjs-tiny-yolov2'; | ||

| import { SeparableConvParams } from 'tfjs-tiny-yolov2/build/tinyYolov2/types'; | ||

| export declare type ConvWithBatchNormParams = BatchNormParams & { | ||

| filter: tf.Tensor4D; | ||

| }; | ||

| export declare type BatchNormParams = { | ||

| mean: tf.Tensor1D; | ||

| variance: tf.Tensor1D; | ||

| scale: tf.Tensor1D; | ||

| offset: tf.Tensor1D; | ||

| }; | ||

| export declare type SeparableConvWithBatchNormParams = { | ||

| depthwise: ConvWithBatchNormParams; | ||

| pointwise: ConvWithBatchNormParams; | ||

| }; | ||

| export declare type FCWithBatchNormParams = BatchNormParams & { | ||

| weights: tf.Tensor2D; | ||

| }; | ||

| export declare type DenseBlock3Params = { | ||

| conv0: SeparableConvParams | ConvParams; | ||

| conv1: SeparableConvParams; | ||

| conv2: SeparableConvParams; | ||

| }; | ||

| export declare type DenseBlock4Params = DenseBlock3Params & { | ||

| conv3: SeparableConvParams; | ||

| }; | ||

| export declare type TinyNetParams = { | ||

| dense0: DenseBlock3Params; | ||

| dense1: DenseBlock3Params; | ||

| dense2: DenseBlock3Params; | ||

| fc: FCParams; | ||

| }; | ||

| export declare type NetParams = { | ||

| conv0: ConvParams; | ||

| conv1: ConvParams; | ||

| conv2: ConvParams; | ||

| conv3: ConvParams; | ||

| conv4: ConvParams; | ||

| conv5: ConvParams; | ||

| conv6: ConvParams; | ||

| conv7: ConvParams; | ||

| fc0: FCParams; | ||

| fc1: FCParams; | ||

| dense0: DenseBlock4Params; | ||

| dense1: DenseBlock4Params; | ||

| dense2: DenseBlock4Params; | ||

| dense3: DenseBlock4Params; | ||

| fc: FCParams; | ||

| }; |

@@ -9,2 +9,3 @@ import * as tf from '@tensorflow/tfjs-core'; | ||

| import { FaceLandmark68Net } from './faceLandmarkNet/FaceLandmark68Net'; | ||

| import { FaceLandmark68TinyNet } from './faceLandmarkNet/FaceLandmark68TinyNet'; | ||

| import { FaceRecognitionNet } from './faceRecognitionNet/FaceRecognitionNet'; | ||

@@ -20,2 +21,3 @@ import { Mtcnn } from './mtcnn/Mtcnn'; | ||

| faceLandmark68Net: FaceLandmark68Net; | ||

| faceLandmark68TinyNet: FaceLandmark68TinyNet; | ||

| faceRecognitionNet: FaceRecognitionNet; | ||

@@ -27,2 +29,3 @@ mtcnn: Mtcnn; | ||

| export declare function loadFaceLandmarkModel(url: string): Promise<void>; | ||

| export declare function loadFaceLandmarkTinyModel(url: string): Promise<void>; | ||

| export declare function loadFaceRecognitionModel(url: string): Promise<void>; | ||

@@ -36,2 +39,3 @@ export declare function loadMtcnnModel(url: string): Promise<void>; | ||

| export declare function detectLandmarks(input: TNetInput): Promise<FaceLandmarks68 | FaceLandmarks68[]>; | ||

| export declare function detectLandmarksTiny(input: TNetInput): Promise<FaceLandmarks68 | FaceLandmarks68[]>; | ||

| export declare function computeFaceDescriptor(input: TNetInput): Promise<Float32Array | Float32Array[]>; | ||

@@ -38,0 +42,0 @@ export declare function mtcnn(input: TNetInput, forwardParams: MtcnnForwardParams): Promise<MtcnnResult[]>; |

@@ -6,2 +6,3 @@ "use strict"; | ||

| var FaceLandmark68Net_1 = require("./faceLandmarkNet/FaceLandmark68Net"); | ||

| var FaceLandmark68TinyNet_1 = require("./faceLandmarkNet/FaceLandmark68TinyNet"); | ||

| var FaceRecognitionNet_1 = require("./faceRecognitionNet/FaceRecognitionNet"); | ||

@@ -18,2 +19,3 @@ var Mtcnn_1 = require("./mtcnn/Mtcnn"); | ||

| faceLandmark68Net: exports.landmarkNet, | ||

| faceLandmark68TinyNet: new FaceLandmark68TinyNet_1.FaceLandmark68TinyNet(), | ||

| faceRecognitionNet: exports.recognitionNet, | ||

@@ -31,2 +33,6 @@ mtcnn: new Mtcnn_1.Mtcnn(), | ||

| exports.loadFaceLandmarkModel = loadFaceLandmarkModel; | ||

| function loadFaceLandmarkTinyModel(url) { | ||

| return exports.nets.faceLandmark68TinyNet.load(url); | ||

| } | ||

| exports.loadFaceLandmarkTinyModel = loadFaceLandmarkTinyModel; | ||

| function loadFaceRecognitionModel(url) { | ||

@@ -49,2 +55,3 @@ return exports.nets.faceRecognitionNet.load(url); | ||

| function loadModels(url) { | ||

| console.warn('loadModels will be deprecated in future'); | ||

| return Promise.all([ | ||

@@ -68,2 +75,6 @@ loadSsdMobilenetv1Model(url), | ||

| exports.detectLandmarks = detectLandmarks; | ||

| function detectLandmarksTiny(input) { | ||

| return exports.nets.faceLandmark68TinyNet.detectLandmarks(input); | ||

| } | ||

| exports.detectLandmarksTiny = detectLandmarksTiny; | ||

| function computeFaceDescriptor(input) { | ||

@@ -70,0 +81,0 @@ return exports.nets.faceRecognitionNet.computeFaceDescriptor(input); |

| { | ||

| "name": "face-api.js", | ||

| "version": "0.13.0", | ||

| "version": "0.14.0", | ||

| "description": "JavaScript API for face detection and face recognition in the browser with tensorflow.js", | ||

@@ -5,0 +5,0 @@ "main": "./build/index.js", |

@@ -46,3 +46,4 @@ # face-api.js | ||

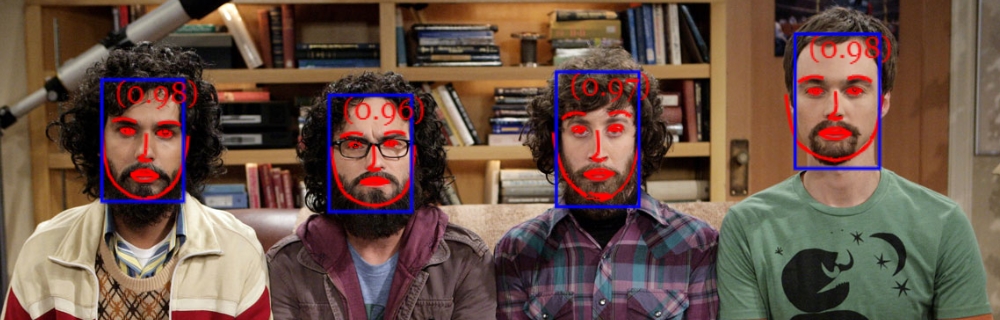

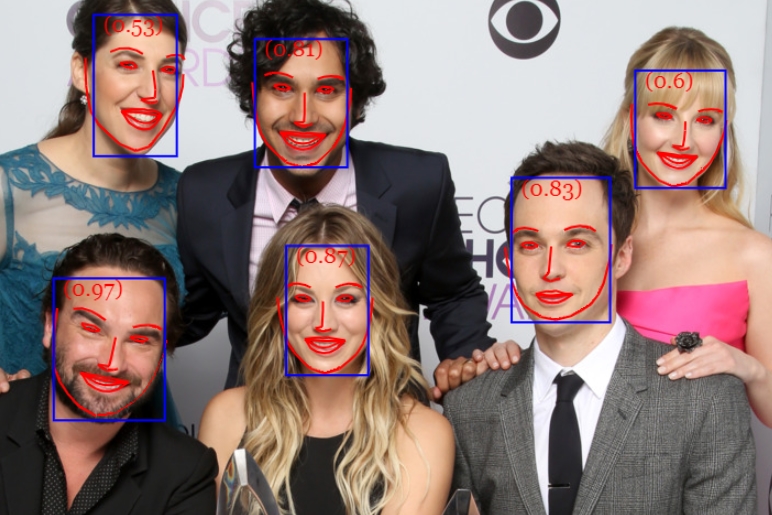

|  | ||

|  | ||

|  | ||

@@ -61,6 +62,2 @@  | ||

| ### Face Alignment | ||

|  | ||

| <a name="running-the-examples"></a> | ||

@@ -94,3 +91,3 @@ | ||

| The Tiny Yolo v2 implementation is a very performant face detector, which can easily adapt to different input image sizes, thus can be used as an alternative to SSD Mobilenet v1 to trade off accuracy for performance (inference time). In general the models ability to locate smaller face bounding boxes is not as accurate as SSD Mobilenet v1. | ||

| The Tiny Yolo v2 implementation is a very performant face detector, which can easily adapt to different input image sizes, thus can be used as an alternative to SSD Mobilenet v1 to trade off accuracy for performance (inference time). In general the models ability to locate smaller face bounding boxes is not as accurate as SSD Mobilenet v1. | ||

@@ -103,3 +100,3 @@ The face detector has been trained on a custom dataset of ~10K images labeled with bounding boxes and uses depthwise separable convolutions instead of regular convolutions, which ensures very fast inference and allows to have a quantized model size of only 1.7MB making the model extremely mobile and web friendly. Thus, the Tiny Yolo v2 face detector should be your GO-TO face detector on mobile devices. | ||

| MTCNN (Multi-task Cascaded Convolutional Neural Networks) represents an alternative face detector to SSD Mobilenet v1 and Tiny Yolo v2, which offers much more room for configuration. By tuning the input parameters, MTCNN is able to detect a wide range of face bounding box sizes. MTCNN is a 3 stage cascaded CNN, which simultanously returns 5 face landmark points along with the bounding boxes and scores for each face. By limiting the minimum size of faces expected in an image, MTCNN allows you to process frames from your webcam in realtime. Additionally with the model size is only 2MB. | ||

| MTCNN (Multi-task Cascaded Convolutional Neural Networks) represents an alternative face detector to SSD Mobilenet v1 and Tiny Yolo v2, which offers much more room for configuration. By tuning the input parameters, MTCNN is able to detect a wide range of face bounding box sizes. MTCNN is a 3 stage cascaded CNN, which simultaneously returns 5 face landmark points along with the bounding boxes and scores for each face. By limiting the minimum size of faces expected in an image, MTCNN allows you to process frames from your webcam in realtime. Additionally with the model size is only 2MB. | ||

@@ -120,6 +117,4 @@ MTCNN has been presented in the paper [Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks](https://kpzhang93.github.io/MTCNN_face_detection_alignment/paper/spl.pdf) by Zhang et al. and the model weights are provided in the official [repo](https://github.com/kpzhang93/MTCNN_face_detection_alignment) of the MTCNN implementation. | ||

| This package implements a CNN to detect the 68 point face landmarks for a given face image. | ||

| This package implements a very lightweight and fast, yet accurate 68 point face landmark detector. The default model has a size of only 350kb and the tiny model is only 80kb. Both models employ the ideas of depthwise separable convolutions as well as densely connected blocks. The models have been trained on a dataset of ~35k face images labeled with 68 face landmark points. | ||

| The model has been trained on a variety of public datasets and the model weights are provided by [yinguobing](https://github.com/yinguobing) in [this](https://github.com/yinguobing/head-pose-estimation) repo. | ||

| <a name="usage"></a> | ||

@@ -153,2 +148,3 @@ | ||

| // await faceapi.loadFaceLandmarkModel('/models') | ||

| // await faceapi.loadFaceLandmarkTinyModel('/models') | ||

| // await faceapi.loadFaceRecognitionModel('/models') | ||

@@ -164,3 +160,4 @@ // await faceapi.loadMtcnnModel('/models') | ||

| // accordingly for the other models: | ||

| // const net = new faceapi.FaceLandmarkNet() | ||

| // const net = new faceapi.FaceLandmark68Net() | ||

| // const net = new faceapi.FaceLandmark68TinyNet() | ||

| // const net = new faceapi.FaceRecognitionNet() | ||

@@ -172,8 +169,6 @@ // const net = new faceapi.Mtcnn() | ||

| // await net.load('/models/face_landmark_68_model-weights_manifest.json') | ||

| // await net.load('/models/face_landmark_68_tiny_model-weights_manifest.json') | ||

| // await net.load('/models/face_recognition_model-weights_manifest.json') | ||

| // await net.load('/models/mtcnn_model-weights_manifest.json') | ||

| // await net.load('/models/tiny_yolov2_separable_conv_model-weights_manifest.json') | ||

| // or simply load all models | ||

| await net.load('/models') | ||

| ``` | ||

@@ -238,3 +233,3 @@ | ||

| scoreThreshold: 0.5, | ||

| // any number or one of the predifened sizes: | ||

| // any number or one of the predefined sizes: | ||

| // 'xs' (224 x 224) | 'sm' (320 x 320) | 'md' (416 x 416) | 'lg' (608 x 608) | ||

@@ -266,3 +261,3 @@ inputSize: 'md' | ||

| scoreThresholds: [0.6, 0.7, 0.7], | ||

| // mininum face size to expect, the higher the faster processing will be, | ||

| // minimum face size to expect, the higher the faster processing will be, | ||

| // but smaller faces won't be detected | ||

@@ -410,2 +405,2 @@ minFaceSize: 20 | ||

| console.log(fullFaceDescription0.descriptor) // face descriptor | ||

| ``` | ||

| ``` |

| import { extractWeightsFactory, ParamMapping } from 'tfjs-image-recognition-base'; | ||

| import { extractConvParamsFactory, extractFCParamsFactory } from 'tfjs-tiny-yolov2'; | ||

| import { extractorsFactory } from './extractorsFactory'; | ||

| import { NetParams } from './types'; | ||

@@ -15,15 +15,12 @@ | ||

| const extractConvParams = extractConvParamsFactory(extractWeights, paramMappings) | ||

| const extractFCParams = extractFCParamsFactory(extractWeights, paramMappings) | ||

| const { | ||

| extractDenseBlock4Params, | ||

| extractFCParams | ||

| } = extractorsFactory(extractWeights, paramMappings) | ||

| const conv0 = extractConvParams(3, 32, 3, 'conv0') | ||

| const conv1 = extractConvParams(32, 64, 3, 'conv1') | ||

| const conv2 = extractConvParams(64, 64, 3, 'conv2') | ||

| const conv3 = extractConvParams(64, 64, 3, 'conv3') | ||

| const conv4 = extractConvParams(64, 64, 3, 'conv4') | ||

| const conv5 = extractConvParams(64, 128, 3, 'conv5') | ||

| const conv6 = extractConvParams(128, 128, 3, 'conv6') | ||

| const conv7 = extractConvParams(128, 256, 3, 'conv7') | ||

| const fc0 = extractFCParams(6400, 1024, 'fc0') | ||

| const fc1 = extractFCParams(1024, 136, 'fc1') | ||

| const dense0 = extractDenseBlock4Params(3, 32, 'dense0', true) | ||

| const dense1 = extractDenseBlock4Params(32, 64, 'dense1') | ||

| const dense2 = extractDenseBlock4Params(64, 128, 'dense2') | ||

| const dense3 = extractDenseBlock4Params(128, 256, 'dense3') | ||

| const fc = extractFCParams(256, 136, 'fc') | ||

@@ -36,15 +33,4 @@ if (getRemainingWeights().length !== 0) { | ||

| paramMappings, | ||

| params: { | ||

| conv0, | ||

| conv1, | ||

| conv2, | ||

| conv3, | ||

| conv4, | ||

| conv5, | ||

| conv6, | ||

| conv7, | ||

| fc0, | ||

| fc1 | ||

| } | ||

| params: { dense0, dense1, dense2, dense3, fc } | ||

| } | ||

| } |
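`extractParams` throws if any weights remain after extraction. The weight file must therefore contain exactly as many values as the layer shapes above require. The hypothetical sketch below counts those values for the 0.14.0 `FaceLandmark68Net`. It assumes the layouts listed in the code comments: 3x3 kernels throughout, a regular convolution only for `dense0.conv0`, and separable convolutions elsewhere. The helper names are illustrative and do not come from the library.

```typescript
// Hypothetical helpers (not part of face-api.js). Assumed layouts:
//   regular conv:    3x3xCinxCout filters + Cout bias
//   separable conv:  3x3xCin depthwise filter + 1x1xCinxCout pointwise filter + Cout bias
//   fully connected: CinxCout weights + Cout bias
const convParams = (cIn: number, cOut: number): number => 3 * 3 * cIn * cOut + cOut
const separableConvParams = (cIn: number, cOut: number): number =>
  3 * 3 * cIn + cIn * cOut + cOut
const fcParams = (cIn: number, cOut: number): number => cIn * cOut + cOut

// Mirrors the calls extractDenseBlock4Params(cIn, cOut, name, isFirstLayer):
// conv0 maps cIn -> cOut, conv1..conv3 map cOut -> cOut.
function denseBlock4Params(cIn: number, cOut: number, isFirstLayer = false): number {
  const conv0 = isFirstLayer ? convParams(cIn, cOut) : separableConvParams(cIn, cOut)
  return conv0 + 3 * separableConvParams(cOut, cOut)
}

function faceLandmark68NetParamCount(): number {
  return (
    denseBlock4Params(3, 32, true) +
    denseBlock4Params(32, 64) +
    denseBlock4Params(64, 128) +
    denseBlock4Params(128, 256) +
    fcParams(256, 136)
  )
}

console.log(faceLandmark68NetParamCount()) // 356840
```

Under these assumptions the total is 356,840 parameters. If each weight is stored in one byte (8-bit quantization is an assumption here, suggested only by the `loadQuantizedParams` name), that comes to roughly 357 KB. This is consistent with the README's statement that the default model is about 350kb.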

| import * as tf from '@tensorflow/tfjs-core'; | ||

| import { NetInput } from 'tfjs-image-recognition-base'; | ||

| import { convLayer, ConvParams } from 'tfjs-tiny-yolov2'; | ||

| import { NetInput, normalize } from 'tfjs-image-recognition-base'; | ||

| import { ConvParams } from 'tfjs-tiny-yolov2'; | ||

| import { SeparableConvParams } from 'tfjs-tiny-yolov2/build/tinyYolov2/types'; | ||

| import { depthwiseSeparableConv } from './depthwiseSeparableConv'; | ||

| import { extractParams } from './extractParams'; | ||

@@ -9,10 +11,28 @@ import { FaceLandmark68NetBase } from './FaceLandmark68NetBase'; | ||

| import { loadQuantizedParams } from './loadQuantizedParams'; | ||

| import { NetParams } from './types'; | ||

| import { DenseBlock4Params, NetParams } from './types'; | ||

| function conv(x: tf.Tensor4D, params: ConvParams): tf.Tensor4D { | ||

| return convLayer(x, params, 'valid', true) | ||

| } | ||

| function denseBlock( | ||

| x: tf.Tensor4D, | ||

| denseBlockParams: DenseBlock4Params, | ||

| isFirstLayer: boolean = false | ||

| ): tf.Tensor4D { | ||

| return tf.tidy(() => { | ||

| const out1 = tf.relu( | ||

| isFirstLayer | ||

| ? tf.add( | ||

| tf.conv2d(x, (denseBlockParams.conv0 as ConvParams).filters, [2, 2], 'same'), | ||

| denseBlockParams.conv0.bias | ||

| ) | ||

| : depthwiseSeparableConv(x, denseBlockParams.conv0 as SeparableConvParams, [2, 2]) | ||

| ) as tf.Tensor4D | ||

| const out2 = depthwiseSeparableConv(out1, denseBlockParams.conv1, [1, 1]) | ||

| function maxPool(x: tf.Tensor4D, strides: [number, number] = [2, 2]): tf.Tensor4D { | ||

| return tf.maxPool(x, [2, 2], strides, 'valid') | ||

| const in3 = tf.relu(tf.add(out1, out2)) as tf.Tensor4D | ||

| const out3 = depthwiseSeparableConv(in3, denseBlockParams.conv2, [1, 1]) | ||

| const in4 = tf.relu(tf.add(out1, tf.add(out2, out3))) as tf.Tensor4D | ||

| const out4 = depthwiseSeparableConv(in4, denseBlockParams.conv3, [1, 1]) | ||

| return tf.relu(tf.add(out1, tf.add(out2, tf.add(out3, out4)))) as tf.Tensor4D | ||

| }) | ||

| } | ||
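`denseBlock` connects its four convolutions by summing shortcut outputs rather than concatenating them. Each convolution receives the ReLU of the sum of all earlier outputs in the block, and the block returns the ReLU of the sum of all four outputs. The outputs are added element-wise, so `conv1` to `conv3` presumably must preserve the channel count. That would explain why only `conv0` changes it in calls such as `extractDenseBlock4Params(32, 64, 'dense1')`. The hypothetical sketch below reproduces only this wiring. Number arrays stand in for tensors and arbitrary functions stand in for the convolutions. It is not library code.

```typescript
// Hypothetical sketch (not face-api.js code) of the denseBlock() wiring.
type Vec = number[]
type Layer = (x: Vec) => Vec

const relu = (x: Vec): Vec => x.map(v => Math.max(0, v))

// Element-wise sum of equally sized vectors.
const add = (...xs: Vec[]): Vec =>
  xs[0].map((_, i) => xs.reduce((sum, v) => sum + v[i], 0))

function denseBlock4(x: Vec, conv0: Layer, conv1: Layer, conv2: Layer, conv3: Layer): Vec {
  const out1 = relu(conv0(x))              // first (possibly strided) conv, then ReLU
  const out2 = conv1(out1)                 // later conv outputs are not activated directly
  const in3 = relu(add(out1, out2))        // input 3 = ReLU of the sum of earlier outputs
  const out3 = conv2(in3)
  const in4 = relu(add(out1, out2, out3))  // input 4 = ReLU of the sum of earlier outputs
  const out4 = conv3(in4)
  return relu(add(out1, out2, out3, out4)) // block output = ReLU of the sum of all four
}

const identity: Layer = x => x
console.log(denseBlock4([1, -1], identity, identity, identity, identity)) // [ 8, 0 ]
```

With identity layers, the first ReLU zeroes the negative entry. The positive entry produces outputs of 1, 1, 2 and 4, which the final step sums to 8.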

@@ -23,3 +43,3 @@ | ||

| constructor() { | ||

| super('FaceLandmark68Net') | ||

| super('FaceLandmark68LargeNet') | ||

| } | ||

@@ -32,23 +52,17 @@ | ||

| if (!params) { | ||

| throw new Error('FaceLandmark68Net - load model before inference') | ||

| throw new Error('FaceLandmark68LargeNet - load model before inference') | ||

| } | ||

| return tf.tidy(() => { | ||

| const batchTensor = input.toBatchTensor(128, true).toFloat() as tf.Tensor4D | ||

| const batchTensor = input.toBatchTensor(112, true) | ||

| const meanRgb = [122.782, 117.001, 104.298] | ||

| const normalized = normalize(batchTensor, meanRgb).div(tf.scalar(255)) as tf.Tensor4D | ||

| let out = conv(batchTensor, params.conv0) | ||

| out = maxPool(out) | ||

| out = conv(out, params.conv1) | ||

| out = conv(out, params.conv2) | ||

| out = maxPool(out) | ||

| out = conv(out, params.conv3) | ||

| out = conv(out, params.conv4) | ||

| out = maxPool(out) | ||

| out = conv(out, params.conv5) | ||

| out = conv(out, params.conv6) | ||

| out = maxPool(out, [1, 1]) | ||

| out = conv(out, params.conv7) | ||

| const fc0 = tf.relu(fullyConnectedLayer(out.as2D(out.shape[0], -1), params.fc0)) | ||

| let out = denseBlock(normalized, params.dense0, true) | ||

| out = denseBlock(out, params.dense1) | ||

| out = denseBlock(out, params.dense2) | ||

| out = denseBlock(out, params.dense3) | ||

| out = tf.avgPool(out, [7, 7], [2, 2], 'valid') | ||

| return fullyConnectedLayer(fc0, params.fc1) | ||

| return fullyConnectedLayer(out.as2D(out.shape[0], -1), params.fc) | ||

| }) | ||

@@ -61,2 +75,3 @@ } | ||

| protected extractParams(weights: Float32Array) { | ||

@@ -63,0 +78,0 @@ return extractParams(weights) |
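The 0.14.0 `runNet` also changes preprocessing. Version 0.13.0 fed raw 0 to 255 pixel values batched at 128 x 128. Version 0.14.0 batches inputs at 112 x 112, subtracts the per-channel mean `[122.782, 117.001, 104.298]`, and divides by 255. The sketch below shows that per-pixel arithmetic as a hypothetical helper. It assumes `normalize` from tfjs-image-recognition-base performs a plain per-channel mean subtraction. The helper and constant names are illustrative.

```typescript
// Hypothetical sketch (not face-api.js code) of the 0.14.0 per-pixel
// preprocessing: subtract the per-channel RGB mean, then divide by 255.
type Rgb = [number, number, number]

const MEAN_RGB: Rgb = [122.782, 117.001, 104.298]

function normalizePixel(rgb: Rgb): Rgb {
  return [
    (rgb[0] - MEAN_RGB[0]) / 255,
    (rgb[1] - MEAN_RGB[1]) / 255,
    (rgb[2] - MEAN_RGB[2]) / 255,
  ]
}

console.log(normalizePixel([255, 255, 255]))
```

Under this scheme a pixel equal to the mean maps to `[0, 0, 0]`, and all outputs fall roughly between -0.48 and 0.59.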

| import { FaceLandmark68Net } from './FaceLandmark68Net'; | ||

| export * from './FaceLandmark68Net'; | ||

| export * from './FaceLandmark68TinyNet'; | ||

@@ -5,0 +6,0 @@ export class FaceLandmarkNet extends FaceLandmark68Net {} |

@@ -1,10 +0,4 @@ | ||

| import * as tf from '@tensorflow/tfjs-core'; | ||

| import { | ||

| disposeUnusedWeightTensors, | ||

| extractWeightEntryFactory, | ||

| loadWeightMap, | ||

| ParamMapping, | ||

| } from 'tfjs-image-recognition-base'; | ||

| import { ConvParams, FCParams } from 'tfjs-tiny-yolov2'; | ||

| import { disposeUnusedWeightTensors, loadWeightMap, ParamMapping } from 'tfjs-image-recognition-base'; | ||

| import { loadParamsFactory } from './loadParamsFactory'; | ||

| import { NetParams } from './types'; | ||

@@ -14,26 +8,2 @@ | ||

| function extractorsFactory(weightMap: any, paramMappings: ParamMapping[]) { | ||

| const extractWeightEntry = extractWeightEntryFactory(weightMap, paramMappings) | ||

| function extractConvParams(prefix: string, mappedPrefix: string): ConvParams { | ||

| const filters = extractWeightEntry<tf.Tensor4D>(`${prefix}/kernel`, 4, `${mappedPrefix}/filters`) | ||

| const bias = extractWeightEntry<tf.Tensor1D>(`${prefix}/bias`, 1, `${mappedPrefix}/bias`) | ||

| return { filters, bias } | ||

| } | ||

| function extractFcParams(prefix: string, mappedPrefix: string): FCParams { | ||

| const weights = extractWeightEntry<tf.Tensor2D>(`${prefix}/kernel`, 2, `${mappedPrefix}/weights`) | ||

| const bias = extractWeightEntry<tf.Tensor1D>(`${prefix}/bias`, 1, `${mappedPrefix}/bias`) | ||

| return { weights, bias } | ||

| } | ||

| return { | ||

| extractConvParams, | ||

| extractFcParams | ||

| } | ||

| } | ||

| export async function loadQuantizedParams( | ||

@@ -47,17 +17,12 @@ uri: string | undefined | ||

| const { | ||

| extractConvParams, | ||

| extractDenseBlock4Params, | ||

| extractFcParams | ||

| } = extractorsFactory(weightMap, paramMappings) | ||

| } = loadParamsFactory(weightMap, paramMappings) | ||

| const params = { | ||

| conv0: extractConvParams('conv2d_0', 'conv0'), | ||

| conv1: extractConvParams('conv2d_1', 'conv1'), | ||

| conv2: extractConvParams('conv2d_2', 'conv2'), | ||

| conv3: extractConvParams('conv2d_3', 'conv3'), | ||

| conv4: extractConvParams('conv2d_4', 'conv4'), | ||

| conv5: extractConvParams('conv2d_5', 'conv5'), | ||

| conv6: extractConvParams('conv2d_6', 'conv6'), | ||

| conv7: extractConvParams('conv2d_7', 'conv7'), | ||

| fc0: extractFcParams('dense', 'fc0'), | ||

| fc1: extractFcParams('logits', 'fc1') | ||

| dense0: extractDenseBlock4Params('dense0', true), | ||

| dense1: extractDenseBlock4Params('dense1'), | ||

| dense2: extractDenseBlock4Params('dense2'), | ||

| dense3: extractDenseBlock4Params('dense3'), | ||

| fc: extractFcParams('fc') | ||

| } | ||

@@ -64,0 +29,0 @@ |

@@ -0,14 +1,49 @@ | ||

| import * as tf from '@tensorflow/tfjs-core'; | ||

| import { ConvParams, FCParams } from 'tfjs-tiny-yolov2'; | ||

| import { SeparableConvParams } from 'tfjs-tiny-yolov2/build/tinyYolov2/types'; | ||

| export type ConvWithBatchNormParams = BatchNormParams & { | ||

| filter: tf.Tensor4D | ||

| } | ||

| export type BatchNormParams = { | ||

| mean: tf.Tensor1D | ||

| variance: tf.Tensor1D | ||

| scale: tf.Tensor1D | ||

| offset: tf.Tensor1D | ||

| } | ||

| export type SeparableConvWithBatchNormParams = { | ||

| depthwise: ConvWithBatchNormParams | ||

| pointwise: ConvWithBatchNormParams | ||

| } | ||

| export declare type FCWithBatchNormParams = BatchNormParams & { | ||

| weights: tf.Tensor2D | ||

| } | ||

| export type DenseBlock3Params = { | ||

| conv0: SeparableConvParams | ConvParams | ||

| conv1: SeparableConvParams | ||

| conv2: SeparableConvParams | ||

| } | ||

| export type DenseBlock4Params = DenseBlock3Params & { | ||

| conv3: SeparableConvParams | ||

| } | ||

| export type TinyNetParams = { | ||

| dense0: DenseBlock3Params | ||

| dense1: DenseBlock3Params | ||

| dense2: DenseBlock3Params | ||

| fc: FCParams | ||

| } | ||

| export type NetParams = { | ||

| conv0: ConvParams | ||

| conv1: ConvParams | ||

| conv2: ConvParams | ||

| conv3: ConvParams | ||

| conv4: ConvParams | ||

| conv5: ConvParams | ||

| conv6: ConvParams | ||

| conv7: ConvParams | ||

| fc0: FCParams | ||

| fc1: FCParams | ||

| } | ||

| dense0: DenseBlock4Params | ||

| dense1: DenseBlock4Params | ||

| dense2: DenseBlock4Params | ||

| dense3: DenseBlock4Params | ||

| fc: FCParams | ||

| } | ||

@@ -11,2 +11,3 @@ import * as tf from '@tensorflow/tfjs-core'; | ||

| import { FaceLandmark68Net } from './faceLandmarkNet/FaceLandmark68Net'; | ||

| import { FaceLandmark68TinyNet } from './faceLandmarkNet/FaceLandmark68TinyNet'; | ||

| import { FaceRecognitionNet } from './faceRecognitionNet/FaceRecognitionNet'; | ||

@@ -26,2 +27,3 @@ import { Mtcnn } from './mtcnn/Mtcnn'; | ||

| faceLandmark68Net: landmarkNet, | ||

| faceLandmark68TinyNet: new FaceLandmark68TinyNet(), | ||

| faceRecognitionNet: recognitionNet, | ||

@@ -40,2 +42,6 @@ mtcnn: new Mtcnn(), | ||

| export function loadFaceLandmarkTinyModel(url: string) { | ||

| return nets.faceLandmark68TinyNet.load(url) | ||

| } | ||

| export function loadFaceRecognitionModel(url: string) { | ||

@@ -58,2 +64,3 @@ return nets.faceRecognitionNet.load(url) | ||

| export function loadModels(url: string) { | ||

| console.warn('loadModels will be deprecated in future') | ||

| return Promise.all([ | ||

@@ -83,2 +90,7 @@ loadSsdMobilenetv1Model(url), | ||

| } | ||

| export function detectLandmarksTiny( | ||

| input: TNetInput | ||

| ): Promise<FaceLandmarks68 | FaceLandmarks68[]> { | ||

| return nets.faceLandmark68TinyNet.detectLandmarks(input) | ||

| } | ||

@@ -85,0 +97,0 @@ export function computeFaceDescriptor( |


Improved metrics

- Total package byte size: 2846692 (increased by 2.24%)
- Number of package files: 301 (increased by 8.66%)
- Lines of code: 14464 (increased by 4.66%)

Worsened metrics

- Number of lines in readme file: 396 (decreased by 1%)