face-api.js


Comparing version 0.14.3 to 0.15.0

@@ -1,4 +0,8 @@
import { Dimensions, ObjectDetection, Rect } from 'tfjs-image-recognition-base';
export declare class FaceDetection extends ObjectDetection {
    constructor(score: number, relativeBox: Rect, imageDims: Dimensions);
import { Box, IDimensions, ObjectDetection, Rect } from 'tfjs-image-recognition-base';
export interface IFaceDetecion {
    score: number;
    box: Box;
}
export declare class FaceDetection extends ObjectDetection implements IFaceDetecion {
    constructor(score: number, relativeBox: Rect, imageDims: IDimensions);
}
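Version 0.15.0 adds the `IFaceDetecion` interface (the spelling is the library's own identifier) and replaces `getBox()` with a `box` property, as the drawing and extraction hunks further down also show. Code that only needs `score` and `box` can therefore be written against that plain shape. The sketch below assumes locally declared stand-in types; `BoxLike`, `DetectionLike` and `keepConfident` are hypothetical names, not face-api.js exports, and the library is not imported.

```typescript
// Hypothetical stand-ins for the shape described by IFaceDetecion.
interface BoxLike {
  x: number;
  y: number;
  width: number;
  height: number;
}

interface DetectionLike {
  score: number;
  box: BoxLike;
}

// Keep detections at or above `minScore` and return their boxes with every
// field floored to a whole pixel (roughly analogous to `.box.floor()`).
function keepConfident(detections: DetectionLike[], minScore: number): BoxLike[] {
  return detections
    .filter((det) => det.score >= minScore)
    .map(({ box }) => ({
      x: Math.floor(box.x),
      y: Math.floor(box.y),
      width: Math.floor(box.width),
      height: Math.floor(box.height),
    }));
}

const boxes = keepConfident(
  [
    { score: 0.92, box: { x: 10.7, y: 20.2, width: 50.9, height: 60.1 } },
    { score: 0.31, box: { x: 0, y: 0, width: 5, height: 5 } },
  ],
  0.5,
);
console.log(boxes);
```

Because only the shape matters here, any object with a numeric `score` and a `box` can be passed, whether or not it is an instance of the library's `FaceDetection` class.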

@@ -1,16 +0,19 @@
import { Dimensions, Point, Rect } from 'tfjs-image-recognition-base';
import { Dimensions, IDimensions, Point, Rect } from 'tfjs-image-recognition-base';
import { FaceDetection } from './FaceDetection';
export declare class FaceLandmarks {
    protected _imageWidth: number;
    protected _imageHeight: number;
export interface IFaceLandmarks {
    positions: Point[];
    shift: Point;
}
export declare class FaceLandmarks implements IFaceLandmarks {
    protected _shift: Point;
    protected _faceLandmarks: Point[];
    constructor(relativeFaceLandmarkPositions: Point[], imageDims: Dimensions, shift?: Point);
    getShift(): Point;
    getImageWidth(): number;
    getImageHeight(): number;
    getPositions(): Point[];
    getRelativePositions(): Point[];
    protected _positions: Point[];
    protected _imgDims: Dimensions;
    constructor(relativeFaceLandmarkPositions: Point[], imgDims: IDimensions, shift?: Point);
    readonly shift: Point;
    readonly imageWidth: number;
    readonly imageHeight: number;
    readonly positions: Point[];
    readonly relativePositions: Point[];
    forSize<T extends FaceLandmarks>(width: number, height: number): T;
    shift<T extends FaceLandmarks>(x: number, y: number): T;
    shiftBy<T extends FaceLandmarks>(x: number, y: number): T;
    shiftByPoint<T extends FaceLandmarks>(pt: Point): T;
@@ -17,0 +20,0 @@ /**
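In 0.15.0 the `FaceLandmarks` constructor turns relative coordinates into absolute `positions` by scaling with the image dimensions and adding `shift`, and the new `relativePositions` getter reverses that by subtracting `shift` and dividing by `imageWidth`/`imageHeight`, per the compiled code in this diff. A minimal TypeScript sketch of that arithmetic follows; the `Pt` class and both helper functions are hypothetical stand-ins, not the library's `Point` or its API.

```typescript
// Minimal 2-D point; a hypothetical stand-in for the library's Point class.
class Pt {
  readonly x: number;
  readonly y: number;
  constructor(x: number, y: number) {
    this.x = x;
    this.y = y;
  }
  add(o: Pt): Pt { return new Pt(this.x + o.x, this.y + o.y); }
  sub(o: Pt): Pt { return new Pt(this.x - o.x, this.y - o.y); }
  mul(o: Pt): Pt { return new Pt(this.x * o.x, this.y * o.y); }
  div(o: Pt): Pt { return new Pt(this.x / o.x, this.y / o.y); }
}

interface Dims {
  width: number;
  height: number;
}

// Relative -> absolute, as in the constructor: pt * (width, height) + shift.
function toAbsolute(relative: Pt[], dims: Dims, shift: Pt = new Pt(0, 0)): Pt[] {
  const scale = new Pt(dims.width, dims.height);
  return relative.map((pt) => pt.mul(scale).add(shift));
}

// Absolute -> relative, as in the relativePositions getter: (pt - shift) / (width, height).
function toRelative(absolute: Pt[], dims: Dims, shift: Pt): Pt[] {
  const scale = new Pt(dims.width, dims.height);
  return absolute.map((pt) => pt.sub(shift).div(scale));
}

const dims: Dims = { width: 200, height: 100 };
const shift = new Pt(40, 30);
const relative = [new Pt(0.25, 0.5), new Pt(0.75, 0.5)];
const absolute = toAbsolute(relative, dims, shift); // (90, 80) and (190, 80)
const roundTrip = toRelative(absolute, dims, shift); // back to (0.25, 0.5) and (0.75, 0.5)
```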

@@ -10,34 +10,45 @@ "use strict";
var FaceLandmarks = /** @class */ (function () {
    function FaceLandmarks(relativeFaceLandmarkPositions, imageDims, shift) {
    function FaceLandmarks(relativeFaceLandmarkPositions, imgDims, shift) {
        if (shift === void 0) { shift = new tfjs_image_recognition_base_1.Point(0, 0); }
        var width = imageDims.width, height = imageDims.height;
        this._imageWidth = width;
        this._imageHeight = height;
        var width = imgDims.width, height = imgDims.height;
        this._imgDims = new tfjs_image_recognition_base_1.Dimensions(width, height);
        this._shift = shift;
        this._faceLandmarks = relativeFaceLandmarkPositions.map(function (pt) { return pt.mul(new tfjs_image_recognition_base_1.Point(width, height)).add(shift); });
        this._positions = relativeFaceLandmarkPositions.map(function (pt) { return pt.mul(new tfjs_image_recognition_base_1.Point(width, height)).add(shift); });
    }
    FaceLandmarks.prototype.getShift = function () {
        return new tfjs_image_recognition_base_1.Point(this._shift.x, this._shift.y);
    };
    FaceLandmarks.prototype.getImageWidth = function () {
        return this._imageWidth;
    };
    FaceLandmarks.prototype.getImageHeight = function () {
        return this._imageHeight;
    };
    FaceLandmarks.prototype.getPositions = function () {
        return this._faceLandmarks;
    };
    FaceLandmarks.prototype.getRelativePositions = function () {
        var _this = this;
        return this._faceLandmarks.map(function (pt) { return pt.sub(_this._shift).div(new tfjs_image_recognition_base_1.Point(_this._imageWidth, _this._imageHeight)); });
    };
    Object.defineProperty(FaceLandmarks.prototype, "shift", {
        get: function () { return new tfjs_image_recognition_base_1.Point(this._shift.x, this._shift.y); },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "imageWidth", {
        get: function () { return this._imgDims.width; },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "imageHeight", {
        get: function () { return this._imgDims.height; },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "positions", {
        get: function () { return this._positions; },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "relativePositions", {
        get: function () {
            var _this = this;
            return this._positions.map(function (pt) { return pt.sub(_this._shift).div(new tfjs_image_recognition_base_1.Point(_this.imageWidth, _this.imageHeight)); });
        },
        enumerable: true,
        configurable: true
    });
    FaceLandmarks.prototype.forSize = function (width, height) {
        return new this.constructor(this.getRelativePositions(), { width: width, height: height });
        return new this.constructor(this.relativePositions, { width: width, height: height });
    };
    FaceLandmarks.prototype.shift = function (x, y) {
        return new this.constructor(this.getRelativePositions(), { width: this._imageWidth, height: this._imageHeight }, new tfjs_image_recognition_base_1.Point(x, y));
    FaceLandmarks.prototype.shiftBy = function (x, y) {
        return new this.constructor(this.relativePositions, this._imgDims, new tfjs_image_recognition_base_1.Point(x, y));
    };
    FaceLandmarks.prototype.shiftByPoint = function (pt) {
        return this.shift(pt.x, pt.y);
        return this.shiftBy(pt.x, pt.y);
    };
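`shiftBy(x, y)` rebuilds the landmarks from `relativePositions` and the unchanged `_imgDims`, passing `new Point(x, y)` as the new shift, while `forSize` passes new dimensions and no shift at all. Read literally, that means `shiftBy` sets the offset rather than adding to the current one, and `forSize` resets it to (0, 0). The sketch below uses a simplified, hypothetical `LandmarksSketch` class (not the library's implementation) to make that behaviour observable:

```typescript
interface XY {
  x: number;
  y: number;
}

interface Dims {
  width: number;
  height: number;
}

// Hypothetical, simplified landmark container following the 0.15.0 logic:
// absolute positions = relative * dims + shift.
class LandmarksSketch {
  readonly positions: XY[];
  readonly shift: XY;
  readonly dims: Dims;

  constructor(relative: XY[], dims: Dims, shift: XY = { x: 0, y: 0 }) {
    this.dims = dims;
    this.shift = shift;
    this.positions = relative.map((pt) => ({
      x: pt.x * dims.width + shift.x,
      y: pt.y * dims.height + shift.y,
    }));
  }

  // (absolute - shift) / dims, like the relativePositions getter.
  get relativePositions(): XY[] {
    return this.positions.map((pt) => ({
      x: (pt.x - this.shift.x) / this.dims.width,
      y: (pt.y - this.shift.y) / this.dims.height,
    }));
  }

  // Like shiftBy: same relative positions and dims, (x, y) becomes the shift.
  shiftBy(x: number, y: number): LandmarksSketch {
    return new LandmarksSketch(this.relativePositions, this.dims, { x, y });
  }

  // Like forSize: same relative positions, new dims, no shift argument.
  forSize(width: number, height: number): LandmarksSketch {
    return new LandmarksSketch(this.relativePositions, { width, height });
  }
}

const base = new LandmarksSketch([{ x: 0.5, y: 0.5 }], { width: 100, height: 100 });
const once = base.shiftBy(10, 20); // point at (60, 70)
const twice = once.shiftBy(10, 20); // still (60, 70): the shift is replaced, not added
const resized = once.forSize(200, 50); // (100, 25): the shift is dropped
```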

@@ -58,5 +69,5 @@ /**
            var box = detection instanceof FaceDetection_1.FaceDetection
                ? detection.getBox().floor()
                ? detection.box.floor()
                : detection;
            return this.shift(box.x, box.y).align();
            return this.shiftBy(box.x, box.y).align();
        }
@@ -72,3 +83,3 @@ var centers = this.getRefPointsForAlignment();
        var y = Math.floor(Math.max(0, refPoint.y - (relY * size)));
        return new tfjs_image_recognition_base_1.Rect(x, y, Math.min(size, this._imageWidth + x), Math.min(size, this._imageHeight + y));
        return new tfjs_image_recognition_base_1.Rect(x, y, Math.min(size, this.imageWidth + x), Math.min(size, this.imageHeight + y));
    };
@@ -75,0 +86,0 @@ FaceLandmarks.prototype.getRefPointsForAlignment = function () {

@@ -12,3 +12,3 @@ "use strict";
    FaceLandmarks5.prototype.getRefPointsForAlignment = function () {
        var pts = this.getPositions();
        var pts = this.positions;
        return [
@@ -15,0 +15,0 @@ pts[0],

@@ -12,21 +12,21 @@ "use strict";
    FaceLandmarks68.prototype.getJawOutline = function () {
        return this._faceLandmarks.slice(0, 17);
        return this.positions.slice(0, 17);
    };
    FaceLandmarks68.prototype.getLeftEyeBrow = function () {
        return this._faceLandmarks.slice(17, 22);
        return this.positions.slice(17, 22);
    };
    FaceLandmarks68.prototype.getRightEyeBrow = function () {
        return this._faceLandmarks.slice(22, 27);
        return this.positions.slice(22, 27);
    };
    FaceLandmarks68.prototype.getNose = function () {
        return this._faceLandmarks.slice(27, 36);
        return this.positions.slice(27, 36);
    };
    FaceLandmarks68.prototype.getLeftEye = function () {
        return this._faceLandmarks.slice(36, 42);
        return this.positions.slice(36, 42);
    };
    FaceLandmarks68.prototype.getRightEye = function () {
        return this._faceLandmarks.slice(42, 48);
        return this.positions.slice(42, 48);
    };
    FaceLandmarks68.prototype.getMouth = function () {
        return this._faceLandmarks.slice(48, 68);
        return this.positions.slice(48, 68);
    };
@@ -33,0 +33,0 @@ FaceLandmarks68.prototype.getRefPointsForAlignment = function () {
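The `FaceLandmarks68` getters now slice `this.positions` instead of the removed `_faceLandmarks` field; the index ranges themselves are unchanged. A sketch that groups a flat 68-point array using those same ranges (the `LANDMARK_68_GROUPS` table and the `groupLandmarks68` helper are hypothetical, not part of face-api.js):

```typescript
interface XY {
  x: number;
  y: number;
}

// Index ranges used by the FaceLandmarks68 getters in this diff
// (start inclusive, end exclusive, as with Array.prototype.slice).
const LANDMARK_68_GROUPS = {
  jawOutline: [0, 17],
  leftEyeBrow: [17, 22],
  rightEyeBrow: [22, 27],
  nose: [27, 36],
  leftEye: [36, 42],
  rightEye: [42, 48],
  mouth: [48, 68],
} as const;

type GroupName = keyof typeof LANDMARK_68_GROUPS;

// Split a flat 68-point array into named groups.
function groupLandmarks68(positions: XY[]): Record<GroupName, XY[]> {
  if (positions.length !== 68) {
    throw new Error(`expected 68 points, got ${positions.length}`);
  }
  const out = {} as Record<GroupName, XY[]>;
  for (const name of Object.keys(LANDMARK_68_GROUPS) as GroupName[]) {
    const [start, end] = LANDMARK_68_GROUPS[name];
    out[name] = positions.slice(start, end);
  }
  return out;
}

// Dummy points whose x equals their index, so each group is easy to inspect.
const pts: XY[] = Array.from({ length: 68 }, (_, i) => ({ x: i, y: 0 }));
const groups = groupLandmarks68(pts);
```

The group sizes (17 + 5 + 5 + 9 + 6 + 6 + 20) add up to exactly 68, so every landmark belongs to one group.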

import { FaceDetection } from './FaceDetection';
import { FaceDetectionWithLandmarks, IFaceDetectionWithLandmarks } from './FaceDetectionWithLandmarks';
import { FaceLandmarks } from './FaceLandmarks';
export declare class FullFaceDescription {
    private _detection;
    private _landmarks;
import { FaceLandmarks68 } from './FaceLandmarks68';
export interface IFullFaceDescription<TFaceLandmarks extends FaceLandmarks = FaceLandmarks68> extends IFaceDetectionWithLandmarks<TFaceLandmarks> {
    detection: FaceDetection;
    landmarks: TFaceLandmarks;
    descriptor: Float32Array;
}
export declare class FullFaceDescription<TFaceLandmarks extends FaceLandmarks = FaceLandmarks68> extends FaceDetectionWithLandmarks<TFaceLandmarks> implements IFullFaceDescription<TFaceLandmarks> {
    private _descriptor;
    constructor(_detection: FaceDetection, _landmarks: FaceLandmarks, _descriptor: Float32Array);
    readonly detection: FaceDetection;
    readonly landmarks: FaceLandmarks;
    constructor(detection: FaceDetection, unshiftedLandmarks: TFaceLandmarks, descriptor: Float32Array);
    readonly descriptor: Float32Array;
    forSize(width: number, height: number): FullFaceDescription;
    forSize(width: number, height: number): FullFaceDescription<TFaceLandmarks>;
}

"use strict";
Object.defineProperty(exports, "__esModule", { value: true });
var FullFaceDescription = /** @class */ (function () {
    function FullFaceDescription(_detection, _landmarks, _descriptor) {
        this._detection = _detection;
        this._landmarks = _landmarks;
        this._descriptor = _descriptor;
var tslib_1 = require("tslib");
var FaceDetectionWithLandmarks_1 = require("./FaceDetectionWithLandmarks");
var FullFaceDescription = /** @class */ (function (_super) {
    tslib_1.__extends(FullFaceDescription, _super);
    function FullFaceDescription(detection, unshiftedLandmarks, descriptor) {
        var _this = _super.call(this, detection, unshiftedLandmarks) || this;
        _this._descriptor = descriptor;
        return _this;
    }
    Object.defineProperty(FullFaceDescription.prototype, "detection", {
        get: function () {
            return this._detection;
        },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FullFaceDescription.prototype, "landmarks", {
        get: function () {
            return this._landmarks;
        },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FullFaceDescription.prototype, "descriptor", {
@@ -31,7 +20,8 @@ get: function () {
    FullFaceDescription.prototype.forSize = function (width, height) {
        return new FullFaceDescription(this._detection.forSize(width, height), this._landmarks.forSize(width, height), this._descriptor);
        var _a = _super.prototype.forSize.call(this, width, height), detection = _a.detection, landmarks = _a.landmarks;
        return new FullFaceDescription(detection, landmarks, this.descriptor);
    };
    return FullFaceDescription;
}());
}(FaceDetectionWithLandmarks_1.FaceDetectionWithLandmarks));
exports.FullFaceDescription = FullFaceDescription;
//# sourceMappingURL=FullFaceDescription.js.map
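In the new `FullFaceDescription.forSize`, the detection and landmarks are rescaled through the `FaceDetectionWithLandmarks` superclass, while the descriptor is passed through as-is (`this.descriptor`), which is consistent with a face descriptor not depending on the display size. A simplified sketch of that split, using a hypothetical `DescriptionSketch` class that stores its box relative to the image (not the library's class):

```typescript
interface Dims {
  width: number;
  height: number;
}

interface BoxXYWH {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Hypothetical simplified description: a box stored as fractions of the
// image size, the image size itself, and a descriptor vector.
class DescriptionSketch {
  readonly relativeBox: BoxXYWH;
  readonly imageDims: Dims;
  readonly descriptor: Float32Array;

  constructor(relativeBox: BoxXYWH, imageDims: Dims, descriptor: Float32Array) {
    this.relativeBox = relativeBox;
    this.imageDims = imageDims;
    this.descriptor = descriptor;
  }

  // Pixel-space box for the current image size.
  get box(): BoxXYWH {
    const { width, height } = this.imageDims;
    const r = this.relativeBox;
    return { x: r.x * width, y: r.y * height, width: r.width * width, height: r.height * height };
  }

  // Geometry is re-projected to the new size; the descriptor is reused unchanged.
  forSize(width: number, height: number): DescriptionSketch {
    return new DescriptionSketch(this.relativeBox, { width, height }, this.descriptor);
  }
}

const original = new DescriptionSketch(
  { x: 0.125, y: 0.25, width: 0.5, height: 0.25 },
  { width: 640, height: 480 },
  new Float32Array(128), // placeholder descriptor (all zeros)
);
const resized = original.forSize(320, 240);
```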

export * from './FaceDetection';
export * from './FaceDetectionWithLandmarks';
export * from './FaceLandmarks';
export * from './FaceLandmarks5';
export * from './FaceLandmarks68';
export * from './FaceMatch';
export * from './FullFaceDescription';
export * from './LabeledFaceDescriptors';

@@ -5,6 +5,9 @@ "use strict";
tslib_1.__exportStar(require("./FaceDetection"), exports);
tslib_1.__exportStar(require("./FaceDetectionWithLandmarks"), exports);
tslib_1.__exportStar(require("./FaceLandmarks"), exports);
tslib_1.__exportStar(require("./FaceLandmarks5"), exports);
tslib_1.__exportStar(require("./FaceLandmarks68"), exports);
tslib_1.__exportStar(require("./FaceMatch"), exports);
tslib_1.__exportStar(require("./FullFaceDescription"), exports);
tslib_1.__exportStar(require("./LabeledFaceDescriptors"), exports);
//# sourceMappingURL=index.js.map

@@ -32,3 +32,3 @@ "use strict";
        ctx.fillStyle = color;
        landmarks.getPositions().forEach(function (pt) { return ctx.fillRect(pt.x - ptOffset, pt.y - ptOffset, lineWidth, lineWidth); });
        landmarks.positions.forEach(function (pt) { return ctx.fillRect(pt.x - ptOffset, pt.y - ptOffset, lineWidth, lineWidth); });
    });
@@ -35,0 +35,0 @@ }

@@ -41,3 +41,3 @@ "use strict";
    boxes = detections.map(function (det) { return det instanceof FaceDetection_1.FaceDetection
        ? det.forSize(canvas.width, canvas.height).getBox().floor()
        ? det.forSize(canvas.width, canvas.height).box.floor()
        : det; })
@@ -44,0 +44,0 @@ .map(function (box) { return box.clipAtImageBorders(canvas.width, canvas.height); });

@@ -26,3 +26,3 @@ "use strict";
    var boxes = detections.map(function (det) { return det instanceof FaceDetection_1.FaceDetection
        ? det.forSize(imgWidth, imgHeight).getBox()
        ? det.forSize(imgWidth, imgHeight).box
        : det; })
@@ -29,0 +29,0 @@ .map(function (box) { return box.clipAtImageBorders(imgWidth, imgHeight); });
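Both the drawing and the extraction code map a `FaceDetection` to pixel coordinates with `forSize(...)`, read the new `box` property (flooring it when drawing) and then call `clipAtImageBorders`. The sketch below reproduces that sequence on plain objects. `toPixels`, `floorRect` and `clipToImage` are hypothetical helpers; `clipToImage` re-implements the general idea of clipping to the image and is not the source of `Box.clipAtImageBorders` in tfjs-image-recognition-base, whose exact rules may differ.

```typescript
interface XYWH {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Relative box (fractions of the image) -> pixel box.
function toPixels(rel: XYWH, imgWidth: number, imgHeight: number): XYWH {
  return {
    x: rel.x * imgWidth,
    y: rel.y * imgHeight,
    width: rel.width * imgWidth,
    height: rel.height * imgHeight,
  };
}

// Floor every field to a whole pixel.
function floorRect(r: XYWH): XYWH {
  return { x: Math.floor(r.x), y: Math.floor(r.y), width: Math.floor(r.width), height: Math.floor(r.height) };
}

// Keep only the part of the box that lies inside the image.
function clipToImage(r: XYWH, imgWidth: number, imgHeight: number): XYWH {
  const x = Math.max(0, r.x);
  const y = Math.max(0, r.y);
  const right = Math.min(imgWidth, r.x + r.width);
  const bottom = Math.min(imgHeight, r.y + r.height);
  return { x, y, width: Math.max(0, right - x), height: Math.max(0, bottom - y) };
}

const imgWidth = 400;
const imgHeight = 200;
// A detection that sticks out past the left and bottom edges of the image.
const relativeBox: XYWH = { x: -0.125, y: 0.5, width: 0.5, height: 0.75 };
const clipped = clipToImage(floorRect(toPixels(relativeBox, imgWidth, imgHeight)), imgWidth, imgHeight);
// clipped is { x: 0, y: 100, width: 150, height: 100 }
```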

@@ -28,3 +28,3 @@ "use strict";
    function FaceLandmark68Net() {
        return _super.call(this, 'FaceLandmark68LargeNet') || this;
        return _super.call(this, 'FaceLandmark68Net') || this;
    }
@@ -34,3 +34,3 @@ FaceLandmark68Net.prototype.runNet = function (input) {
        if (!params) {
            throw new Error('FaceLandmark68LargeNet - load model before inference');
            throw new Error('FaceLandmark68Net - load model before inference');
        }
@@ -37,0 +37,0 @@ return tf.tidy(function () {

import * as tf from '@tensorflow/tfjs-core';
import { NetInput, NeuralNetwork, TNetInput, Dimensions } from 'tfjs-image-recognition-base';
import { IDimensions, NetInput, NeuralNetwork, TNetInput } from 'tfjs-image-recognition-base';
import { FaceLandmarks68 } from '../classes/FaceLandmarks68';
@@ -8,3 +8,3 @@ export declare class FaceLandmark68NetBase<NetParams> extends NeuralNetwork<NetParams> {
    runNet(_: NetInput): tf.Tensor2D;
    postProcess(output: tf.Tensor2D, inputSize: number, originalDimensions: Dimensions[]): tf.Tensor2D;
    postProcess(output: tf.Tensor2D, inputSize: number, originalDimensions: IDimensions[]): tf.Tensor2D;
    forwardInput(input: NetInput): tf.Tensor2D;
@@ -11,0 +11,0 @@ forward(input: TNetInput): Promise<tf.Tensor2D>;

@@ -7,2 +7,1 @@ import { FaceLandmark68Net } from './FaceLandmark68Net';
export declare function createFaceLandmarkNet(weights: Float32Array): FaceLandmarkNet;
export declare function faceLandmarkNet(weights: Float32Array): FaceLandmarkNet;

@@ -21,7 +21,2 @@ "use strict";
exports.createFaceLandmarkNet = createFaceLandmarkNet;
function faceLandmarkNet(weights) {
    console.warn('faceLandmarkNet(weights: Float32Array) will be deprecated in future, use createFaceLandmarkNet instead');
    return createFaceLandmarkNet(weights);
}
exports.faceLandmarkNet = faceLandmarkNet;
//# sourceMappingURL=index.js.map

import { FaceRecognitionNet } from './FaceRecognitionNet';
export * from './FaceRecognitionNet';
export declare function createFaceRecognitionNet(weights: Float32Array): FaceRecognitionNet;
export declare function faceRecognitionNet(weights: Float32Array): FaceRecognitionNet;

@@ -12,7 +12,2 @@ "use strict";
exports.createFaceRecognitionNet = createFaceRecognitionNet;
function faceRecognitionNet(weights) {
    console.warn('faceRecognitionNet(weights: Float32Array) will be deprecated in future, use createFaceRecognitionNet instead');
    return createFaceRecognitionNet(weights);
}
exports.faceRecognitionNet = faceRecognitionNet;
//# sourceMappingURL=index.js.map

@@ -7,3 +7,2 @@ import * as tf from '@tensorflow/tfjs-core';
export * from './euclideanDistance';
export * from './faceDetectionNet';
export * from './faceLandmarkNet';
@@ -13,2 +12,4 @@ export * from './faceRecognitionNet';
export * from './mtcnn';
export * from './ssdMobilenetv1';
export * from './tinyFaceDetector';
export * from './tinyYolov2';

@@ -10,3 +10,2 @@ "use strict";
tslib_1.__exportStar(require("./euclideanDistance"), exports);
tslib_1.__exportStar(require("./faceDetectionNet"), exports);
tslib_1.__exportStar(require("./faceLandmarkNet"), exports);
@@ -16,3 +15,5 @@ tslib_1.__exportStar(require("./faceRecognitionNet"), exports);
tslib_1.__exportStar(require("./mtcnn"), exports);
tslib_1.__exportStar(require("./ssdMobilenetv1"), exports);
tslib_1.__exportStar(require("./tinyFaceDetector"), exports);
tslib_1.__exportStar(require("./tinyYolov2"), exports);
//# sourceMappingURL=index.js.map

import * as tf from '@tensorflow/tfjs-core';
import { Box, Dimensions } from 'tfjs-image-recognition-base';
export declare function extractImagePatches(img: HTMLCanvasElement, boxes: Box[], {width, height}: Dimensions): Promise<tf.Tensor4D[]>;
import { Box, IDimensions } from 'tfjs-image-recognition-base';
export declare function extractImagePatches(img: HTMLCanvasElement, boxes: Box[], {width, height}: IDimensions): Promise<tf.Tensor4D[]>;

import { Mtcnn } from './Mtcnn';
export * from './Mtcnn';
export * from './MtcnnOptions';
export declare function createMtcnn(weights: Float32Array): Mtcnn;

@@ -6,2 +6,3 @@ "use strict";
tslib_1.__exportStar(require("./Mtcnn"), exports);
tslib_1.__exportStar(require("./MtcnnOptions"), exports);
function createMtcnn(weights) {
@@ -8,0 +9,0 @@ var net = new Mtcnn_1.Mtcnn();

import { NetInput, NeuralNetwork, TNetInput } from 'tfjs-image-recognition-base';
import { MtcnnForwardParams, MtcnnResult, NetParams } from './types';
import { FaceDetectionWithLandmarks } from '../classes/FaceDetectionWithLandmarks';
import { FaceLandmarks5 } from '../classes/FaceLandmarks5';
import { IMtcnnOptions } from './MtcnnOptions';
import { NetParams } from './types';
export declare class Mtcnn extends NeuralNetwork<NetParams> {
    constructor();
    forwardInput(input: NetInput, forwardParams?: MtcnnForwardParams): Promise<{
        results: MtcnnResult[];
    forwardInput(input: NetInput, forwardParams?: IMtcnnOptions): Promise<{
        results: FaceDetectionWithLandmarks<FaceLandmarks5>[];
        stats: any;
    }>;
    forward(input: TNetInput, forwardParams?: MtcnnForwardParams): Promise<MtcnnResult[]>;
    forwardWithStats(input: TNetInput, forwardParams?: MtcnnForwardParams): Promise<{
        results: MtcnnResult[];
    forward(input: TNetInput, forwardParams?: IMtcnnOptions): Promise<FaceDetectionWithLandmarks<FaceLandmarks5>[]>;
    forwardWithStats(input: TNetInput, forwardParams?: IMtcnnOptions): Promise<{
        results: FaceDetectionWithLandmarks<FaceLandmarks5>[];
        stats: any;
@@ -13,0 +16,0 @@ }>;

@@ -7,2 +7,3 @@ "use strict";
var FaceDetection_1 = require("../classes/FaceDetection");
var FaceDetectionWithLandmarks_1 = require("../classes/FaceDetectionWithLandmarks");
var FaceLandmarks5_1 = require("../classes/FaceLandmarks5");
@@ -12,5 +13,5 @@ var bgrToRgbTensor_1 = require("./bgrToRgbTensor");
var extractParams_1 = require("./extractParams");
var getDefaultMtcnnForwardParams_1 = require("./getDefaultMtcnnForwardParams");
var getSizesForScale_1 = require("./getSizesForScale");
var loadQuantizedParams_1 = require("./loadQuantizedParams");
var MtcnnOptions_1 = require("./MtcnnOptions");
var pyramidDown_1 = require("./pyramidDown");
@@ -52,3 +53,3 @@ var stage1_1 = require("./stage1");
        _a = imgTensor.shape.slice(1), height = _a[0], width = _a[1];
        _b = Object.assign({}, getDefaultMtcnnForwardParams_1.getDefaultMtcnnForwardParams(), forwardParams), minFaceSize = _b.minFaceSize, scaleFactor = _b.scaleFactor, maxNumScales = _b.maxNumScales, scoreThresholds = _b.scoreThresholds, scaleSteps = _b.scaleSteps;
        _b = new MtcnnOptions_1.MtcnnOptions(forwardParams), minFaceSize = _b.minFaceSize, scaleFactor = _b.scaleFactor, maxNumScales = _b.maxNumScales, scoreThresholds = _b.scoreThresholds, scaleSteps = _b.scaleSteps;
        scales = (scaleSteps || pyramidDown_1.pyramidDown(minFaceSize, scaleFactor, [height, width]))
@@ -87,9 +88,6 @@ .filter(function (scale) {
        stats.total_stage3 = Date.now() - ts;
        results = out3.boxes.map(function (box, idx) { return ({
            faceDetection: new FaceDetection_1.FaceDetection(out3.scores[idx], new tfjs_image_recognition_base_1.Rect(box.left / width, box.top / height, box.width / width, box.height / height), {
                height: height,
                width: width
            }),
            faceLandmarks: new FaceLandmarks5_1.FaceLandmarks5(out3.points[idx].map(function (pt) { return pt.div(new tfjs_image_recognition_base_1.Point(width, height)); }), { width: width, height: height })
        }); });
        results = out3.boxes.map(function (box, idx) { return new FaceDetectionWithLandmarks_1.FaceDetectionWithLandmarks(new FaceDetection_1.FaceDetection(out3.scores[idx], new tfjs_image_recognition_base_1.Rect(box.left / width, box.top / height, box.width / width, box.height / height), {
            height: height,
            width: width
        }), new FaceLandmarks5_1.FaceLandmarks5(out3.points[idx].map(function (pt) { return pt.sub(new tfjs_image_recognition_base_1.Point(box.left, box.top)).div(new tfjs_image_recognition_base_1.Point(box.width, box.height)); }), { width: box.width, height: box.height })); });
        return [2 /*return*/, onReturn({ results: results, stats: stats })];
@@ -96,0 +94,0 @@ }
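At the end of stage 3, 0.15.0 wraps each result in `FaceDetectionWithLandmarks` and, instead of dividing the five landmark points by the image size, subtracts the face box's top-left corner and divides by the box size, passing the box size as the landmarks' dimensions. The constructor parameter name `unshiftedLandmarks` in `FullFaceDescription` suggests the box offset is re-applied later, but `FaceDetectionWithLandmarks` itself is not part of this diff. The sketch below (hypothetical helper names, plain objects) compares the two encodings and shows that the box-relative one maps back to image coordinates:

```typescript
interface XY {
  x: number;
  y: number;
}

interface FaceBox {
  left: number;
  top: number;
  width: number;
  height: number;
}

// 0.14.3 style: a landmark point relative to the whole image.
function relativeToImage(pt: XY, imgWidth: number, imgHeight: number): XY {
  return { x: pt.x / imgWidth, y: pt.y / imgHeight };
}

// 0.15.0 style: relative to the face box (subtract top-left, divide by box size).
function relativeToBox(pt: XY, box: FaceBox): XY {
  return { x: (pt.x - box.left) / box.width, y: (pt.y - box.top) / box.height };
}

// Undo the box-relative encoding: scale by the box size, then shift by its top-left corner.
function boxRelativeToAbsolute(rel: XY, box: FaceBox): XY {
  return { x: rel.x * box.width + box.left, y: rel.y * box.height + box.top };
}

const faceBox: FaceBox = { left: 100, top: 60, width: 80, height: 100 };
const eye: XY = { x: 120, y: 85 };
const inImage = relativeToImage(eye, 640, 480); // x = 0.1875
const inBox = relativeToBox(eye, faceBox); // (0.25, 0.25)
const restored = boxRelativeToAbsolute(inBox, faceBox); // (120, 85)
```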

import * as tf from '@tensorflow/tfjs-core';
import { ConvParams, FCParams } from 'tfjs-tiny-yolov2';
import { FaceDetection } from '../classes/FaceDetection';
import { FaceLandmarks5 } from '../classes/FaceLandmarks5';
export declare type SharedParams = {
@@ -37,12 +35,1 @@ conv1: ConvParams;
};
export declare type MtcnnResult = {
    faceDetection: FaceDetection;
    faceLandmarks: FaceLandmarks5;
};
export declare type MtcnnForwardParams = {
    minFaceSize?: number;
    scaleFactor?: number;
    maxNumScales?: number;
    scoreThresholds?: number[];
    scaleSteps?: number[];
};
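0.15.0 drops the `MtcnnForwardParams` type; `Mtcnn.forwardInput` now builds an `MtcnnOptions` object from `IMtcnnOptions` instead of `Object.assign`-ing user parameters over `getDefaultMtcnnForwardParams()`. The sketch below contrasts the two patterns with a hypothetical `MtcnnOptionsSketch` class. The default values are placeholders assumed for illustration, not read from the package, and whether the real `MtcnnOptions` handles an explicit `undefined` the same way is not visible in this diff.

```typescript
interface MtcnnOptionsLike {
  minFaceSize?: number;
  scaleFactor?: number;
  maxNumScales?: number;
  scoreThresholds?: number[];
  scaleSteps?: number[];
}

// Placeholder defaults, assumed for illustration only.
const ASSUMED_DEFAULTS = {
  minFaceSize: 20,
  scaleFactor: 0.709,
  maxNumScales: 10,
  scoreThresholds: [0.6, 0.7, 0.7],
};

// Class-based pattern: each field falls back to its default when missing or undefined.
class MtcnnOptionsSketch {
  readonly minFaceSize: number;
  readonly scaleFactor: number;
  readonly maxNumScales: number;
  readonly scoreThresholds: number[];
  readonly scaleSteps: number[] | undefined;

  constructor(opts: MtcnnOptionsLike = {}) {
    this.minFaceSize = opts.minFaceSize ?? ASSUMED_DEFAULTS.minFaceSize;
    this.scaleFactor = opts.scaleFactor ?? ASSUMED_DEFAULTS.scaleFactor;
    this.maxNumScales = opts.maxNumScales ?? ASSUMED_DEFAULTS.maxNumScales;
    // Copy the array so callers cannot mutate the shared defaults.
    this.scoreThresholds = (opts.scoreThresholds ?? ASSUMED_DEFAULTS.scoreThresholds).slice();
    this.scaleSteps = opts.scaleSteps;
  }
}

// Object.assign pattern (as in 0.14.3): later sources win, including explicit undefined values.
function mergeWithAssign(opts: MtcnnOptionsLike): MtcnnOptionsLike {
  return Object.assign({}, ASSUMED_DEFAULTS, opts);
}

const userParams: MtcnnOptionsLike = { minFaceSize: undefined, scaleFactor: 0.8 };
const viaClass = new MtcnnOptionsSketch(userParams);
const viaAssign = mergeWithAssign(userParams);
```

In this sketch the class keeps the default `minFaceSize` when the caller passes `undefined`, while the `Object.assign` merge lets the `undefined` overwrite it.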

import { Point, TNetInput } from 'tfjs-image-recognition-base';
import { TinyYolov2 as TinyYolov2Base, TinyYolov2Types } from 'tfjs-tiny-yolov2';
import { ITinyYolov2Options, TinyYolov2 as TinyYolov2Base } from 'tfjs-tiny-yolov2';
import { FaceDetection } from '../classes';
@@ -8,4 +8,4 @@ export declare class TinyYolov2 extends TinyYolov2Base {
    readonly anchors: Point[];
    locateFaces(input: TNetInput, forwardParams: TinyYolov2Types.TinyYolov2ForwardParams): Promise<FaceDetection[]>;
    locateFaces(input: TNetInput, forwardParams: ITinyYolov2Options): Promise<FaceDetection[]>;
    protected loadQuantizedParams(modelUri: string | undefined): any;
}

@@ -1,4 +0,8 @@
import { Dimensions, ObjectDetection, Rect } from 'tfjs-image-recognition-base';
export declare class FaceDetection extends ObjectDetection {
    constructor(score: number, relativeBox: Rect, imageDims: Dimensions);
import { Box, IDimensions, ObjectDetection, Rect } from 'tfjs-image-recognition-base';
export interface IFaceDetecion {
    score: number;
    box: Box;
}
export declare class FaceDetection extends ObjectDetection implements IFaceDetecion {
    constructor(score: number, relativeBox: Rect, imageDims: IDimensions);
}

@@ -1,16 +0,19 @@
import { Dimensions, Point, Rect } from 'tfjs-image-recognition-base';
import { Dimensions, IDimensions, Point, Rect } from 'tfjs-image-recognition-base';
import { FaceDetection } from './FaceDetection';
export declare class FaceLandmarks {
    protected _imageWidth: number;
    protected _imageHeight: number;
export interface IFaceLandmarks {
    positions: Point[];
    shift: Point;
}
export declare class FaceLandmarks implements IFaceLandmarks {
    protected _shift: Point;
    protected _faceLandmarks: Point[];
    constructor(relativeFaceLandmarkPositions: Point[], imageDims: Dimensions, shift?: Point);
    getShift(): Point;
    getImageWidth(): number;
    getImageHeight(): number;
    getPositions(): Point[];
    getRelativePositions(): Point[];
    protected _positions: Point[];
    protected _imgDims: Dimensions;
    constructor(relativeFaceLandmarkPositions: Point[], imgDims: IDimensions, shift?: Point);
    readonly shift: Point;
    readonly imageWidth: number;
    readonly imageHeight: number;
    readonly positions: Point[];
    readonly relativePositions: Point[];
    forSize<T extends FaceLandmarks>(width: number, height: number): T;
    shift<T extends FaceLandmarks>(x: number, y: number): T;
    shiftBy<T extends FaceLandmarks>(x: number, y: number): T;
    shiftByPoint<T extends FaceLandmarks>(pt: Point): T;
@@ -17,0 +20,0 @@ /**

@@ -1,2 +0,2 @@
import { getCenterPoint, Point, Rect } from 'tfjs-image-recognition-base';
import { Dimensions, getCenterPoint, Point, Rect } from 'tfjs-image-recognition-base';
import { FaceDetection } from './FaceDetection';

@@ -8,34 +8,45 @@ // face alignment constants
var FaceLandmarks = /** @class */ (function () {
    function FaceLandmarks(relativeFaceLandmarkPositions, imageDims, shift) {
    function FaceLandmarks(relativeFaceLandmarkPositions, imgDims, shift) {
        if (shift === void 0) { shift = new Point(0, 0); }
        var width = imageDims.width, height = imageDims.height;
        this._imageWidth = width;
        this._imageHeight = height;
        var width = imgDims.width, height = imgDims.height;
        this._imgDims = new Dimensions(width, height);
        this._shift = shift;
        this._faceLandmarks = relativeFaceLandmarkPositions.map(function (pt) { return pt.mul(new Point(width, height)).add(shift); });
        this._positions = relativeFaceLandmarkPositions.map(function (pt) { return pt.mul(new Point(width, height)).add(shift); });
    }
    FaceLandmarks.prototype.getShift = function () {
        return new Point(this._shift.x, this._shift.y);
    };
    FaceLandmarks.prototype.getImageWidth = function () {
        return this._imageWidth;
    };
    FaceLandmarks.prototype.getImageHeight = function () {
        return this._imageHeight;
    };
    FaceLandmarks.prototype.getPositions = function () {
        return this._faceLandmarks;
    };
    FaceLandmarks.prototype.getRelativePositions = function () {
        var _this = this;
        return this._faceLandmarks.map(function (pt) { return pt.sub(_this._shift).div(new Point(_this._imageWidth, _this._imageHeight)); });
    };
    Object.defineProperty(FaceLandmarks.prototype, "shift", {
        get: function () { return new Point(this._shift.x, this._shift.y); },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "imageWidth", {
        get: function () { return this._imgDims.width; },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "imageHeight", {
        get: function () { return this._imgDims.height; },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "positions", {
        get: function () { return this._positions; },
        enumerable: true,
        configurable: true
    });
    Object.defineProperty(FaceLandmarks.prototype, "relativePositions", {
        get: function () {
            var _this = this;
            return this._positions.map(function (pt) { return pt.sub(_this._shift).div(new Point(_this.imageWidth, _this.imageHeight)); });
        },
        enumerable: true,
        configurable: true
    });
    FaceLandmarks.prototype.forSize = function (width, height) {
        return new this.constructor(this.getRelativePositions(), { width: width, height: height });
        return new this.constructor(this.relativePositions, { width: width, height: height });
    };
    FaceLandmarks.prototype.shift = function (x, y) {
        return new this.constructor(this.getRelativePositions(), { width: this._imageWidth, height: this._imageHeight }, new Point(x, y));
    FaceLandmarks.prototype.shiftBy = function (x, y) {
        return new this.constructor(this.relativePositions, this._imgDims, new Point(x, y));
    };
    FaceLandmarks.prototype.shiftByPoint = function (pt) {
        return this.shift(pt.x, pt.y);
        return this.shiftBy(pt.x, pt.y);
    };
@@ -56,5 +67,5 @@ /**
            var box = detection instanceof FaceDetection
                ? detection.getBox().floor()
                ? detection.box.floor()
                : detection;
            return this.shift(box.x, box.y).align();
            return this.shiftBy(box.x, box.y).align();
        }
@@ -70,3 +81,3 @@ var centers = this.getRefPointsForAlignment();
        var y = Math.floor(Math.max(0, refPoint.y - (relY * size)));
        return new Rect(x, y, Math.min(size, this._imageWidth + x), Math.min(size, this._imageHeight + y));
        return new Rect(x, y, Math.min(size, this.imageWidth + x), Math.min(size, this.imageHeight + y));
    };
@@ -73,0 +84,0 @@ FaceLandmarks.prototype.getRefPointsForAlignment = function () {

@@ -10,3 +10,3 @@ import * as tslib_1 from "tslib";
    FaceLandmarks5.prototype.getRefPointsForAlignment = function () {
        var pts = this.getPositions();
        var pts = this.positions;
        return [
@@ -13,0 +13,0 @@ pts[0],

@@ -10,21 +10,21 @@ import * as tslib_1 from "tslib"; | ||

| FaceLandmarks68.prototype.getJawOutline = function () { | ||

| return this._faceLandmarks.slice(0, 17); | ||

| return this.positions.slice(0, 17); | ||

| }; | ||

| FaceLandmarks68.prototype.getLeftEyeBrow = function () { | ||

| return this._faceLandmarks.slice(17, 22); | ||

| return this.positions.slice(17, 22); | ||

| }; | ||

| FaceLandmarks68.prototype.getRightEyeBrow = function () { | ||

| return this._faceLandmarks.slice(22, 27); | ||

| return this.positions.slice(22, 27); | ||

| }; | ||

| FaceLandmarks68.prototype.getNose = function () { | ||

| return this._faceLandmarks.slice(27, 36); | ||

| return this.positions.slice(27, 36); | ||

| }; | ||

| FaceLandmarks68.prototype.getLeftEye = function () { | ||

| return this._faceLandmarks.slice(36, 42); | ||

| return this.positions.slice(36, 42); | ||

| }; | ||

| FaceLandmarks68.prototype.getRightEye = function () { | ||

| return this._faceLandmarks.slice(42, 48); | ||

| return this.positions.slice(42, 48); | ||

| }; | ||

| FaceLandmarks68.prototype.getMouth = function () { | ||

| return this._faceLandmarks.slice(48, 68); | ||

| return this.positions.slice(48, 68); | ||

| }; | ||

@@ -31,0 +31,0 @@ FaceLandmarks68.prototype.getRefPointsForAlignment = function () { |
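The `FaceLandmarks68` getters slice `positions` using fixed index ranges of the 68-point annotation scheme. The sketch below collects those ranges in one place, copied from the `slice` calls above. `LANDMARK_68_REGIONS`, `LandmarkRegion` and `sliceRegion` are hypothetical helpers and are not part of face-api.js.

```typescript
// [start, end) index ranges taken from the FaceLandmarks68 slice calls.
const LANDMARK_68_REGIONS = {
  jawOutline: [0, 17],
  leftEyeBrow: [17, 22],
  rightEyeBrow: [22, 27],
  nose: [27, 36],
  leftEye: [36, 42],
  rightEye: [42, 48],
  mouth: [48, 68],
} as const;

type LandmarkRegion = keyof typeof LANDMARK_68_REGIONS;

// Returns the points of one facial region from a full 68-point set.
function sliceRegion<T>(positions: T[], region: LandmarkRegion): T[] {
  if (positions.length !== 68) {
    throw new Error(`expected 68 landmarks, got ${positions.length}`);
  }
  const [start, end] = LANDMARK_68_REGIONS[region];
  return positions.slice(start, end);
}
```

The ranges are contiguous and together cover all 68 points. Each getter is therefore just a view into the same `positions` array.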

| import { FaceDetection } from './FaceDetection'; | ||

| import { FaceDetectionWithLandmarks, IFaceDetectionWithLandmarks } from './FaceDetectionWithLandmarks'; | ||

| import { FaceLandmarks } from './FaceLandmarks'; | ||

| export declare class FullFaceDescription { | ||

| private _detection; | ||

| private _landmarks; | ||

| import { FaceLandmarks68 } from './FaceLandmarks68'; | ||

| export interface IFullFaceDescription<TFaceLandmarks extends FaceLandmarks = FaceLandmarks68> extends IFaceDetectionWithLandmarks<TFaceLandmarks> { | ||

| detection: FaceDetection; | ||

| landmarks: TFaceLandmarks; | ||

| descriptor: Float32Array; | ||

| } | ||

| export declare class FullFaceDescription<TFaceLandmarks extends FaceLandmarks = FaceLandmarks68> extends FaceDetectionWithLandmarks<TFaceLandmarks> implements IFullFaceDescription<TFaceLandmarks> { | ||

| private _descriptor; | ||

| constructor(_detection: FaceDetection, _landmarks: FaceLandmarks, _descriptor: Float32Array); | ||

| readonly detection: FaceDetection; | ||

| readonly landmarks: FaceLandmarks; | ||

| constructor(detection: FaceDetection, unshiftedLandmarks: TFaceLandmarks, descriptor: Float32Array); | ||

| readonly descriptor: Float32Array; | ||

| forSize(width: number, height: number): FullFaceDescription; | ||

| forSize(width: number, height: number): FullFaceDescription<TFaceLandmarks>; | ||

| } |

@@ -1,21 +0,10 @@ | ||

| var FullFaceDescription = /** @class */ (function () { | ||

| function FullFaceDescription(_detection, _landmarks, _descriptor) { | ||

| this._detection = _detection; | ||

| this._landmarks = _landmarks; | ||

| this._descriptor = _descriptor; | ||

| import * as tslib_1 from "tslib"; | ||

| import { FaceDetectionWithLandmarks } from './FaceDetectionWithLandmarks'; | ||

| var FullFaceDescription = /** @class */ (function (_super) { | ||

| tslib_1.__extends(FullFaceDescription, _super); | ||

| function FullFaceDescription(detection, unshiftedLandmarks, descriptor) { | ||

| var _this = _super.call(this, detection, unshiftedLandmarks) || this; | ||

| _this._descriptor = descriptor; | ||

| return _this; | ||

| } | ||

| Object.defineProperty(FullFaceDescription.prototype, "detection", { | ||

| get: function () { | ||

| return this._detection; | ||

| }, | ||

| enumerable: true, | ||

| configurable: true | ||

| }); | ||

| Object.defineProperty(FullFaceDescription.prototype, "landmarks", { | ||

| get: function () { | ||

| return this._landmarks; | ||

| }, | ||

| enumerable: true, | ||

| configurable: true | ||

| }); | ||

| Object.defineProperty(FullFaceDescription.prototype, "descriptor", { | ||

@@ -29,7 +18,8 @@ get: function () { | ||

| FullFaceDescription.prototype.forSize = function (width, height) { | ||

| return new FullFaceDescription(this._detection.forSize(width, height), this._landmarks.forSize(width, height), this._descriptor); | ||

| var _a = _super.prototype.forSize.call(this, width, height), detection = _a.detection, landmarks = _a.landmarks; | ||

| return new FullFaceDescription(detection, landmarks, this.descriptor); | ||

| }; | ||

| return FullFaceDescription; | ||

| }()); | ||

| }(FaceDetectionWithLandmarks)); | ||

| export { FullFaceDescription }; | ||

| //# sourceMappingURL=FullFaceDescription.js.map |
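In 0.15.0, `FullFaceDescription` extends `FaceDetectionWithLandmarks`. Its `forSize` lets the parent rescale the detection and landmarks, then re-attaches the unchanged 128-value descriptor. The sketch below shows that delegation with hypothetical `RelRect`, `DetectionSketch` and `DescriptionSketch` classes, and leaves landmarks out for brevity. It illustrates the inheritance pattern only and is not the library's code.

```typescript
interface RelRect { x: number; y: number; width: number; height: number; }

// Base: keeps a box relative to the image and exposes it in pixels.
class DetectionSketch {
  constructor(readonly relativeBox: RelRect, readonly imageWidth: number, readonly imageHeight: number) {}

  get box(): RelRect {
    const { x, y, width, height } = this.relativeBox;
    return {
      x: x * this.imageWidth,
      y: y * this.imageHeight,
      width: width * this.imageWidth,
      height: height * this.imageHeight,
    };
  }

  // Same relative box, different target image size.
  forSize(width: number, height: number): DetectionSketch {
    return new DetectionSketch(this.relativeBox, width, height);
  }
}

// Subclass: adds a descriptor, which does not depend on image size.
class DescriptionSketch extends DetectionSketch {
  constructor(relativeBox: RelRect, imageWidth: number, imageHeight: number, readonly descriptor: Float32Array) {
    super(relativeBox, imageWidth, imageHeight);
  }

  override forSize(width: number, height: number): DescriptionSketch {
    // Let the parent do the rescaling, then re-attach the descriptor.
    const base = super.forSize(width, height);
    return new DescriptionSketch(base.relativeBox, base.imageWidth, base.imageHeight, this.descriptor);
  }
}
```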

| export * from './FaceDetection'; | ||

| export * from './FaceDetectionWithLandmarks'; | ||

| export * from './FaceLandmarks'; | ||

| export * from './FaceLandmarks5'; | ||

| export * from './FaceLandmarks68'; | ||

| export * from './FaceMatch'; | ||

| export * from './FullFaceDescription'; | ||

| export * from './LabeledFaceDescriptors'; |

| export * from './FaceDetection'; | ||

| export * from './FaceDetectionWithLandmarks'; | ||

| export * from './FaceLandmarks'; | ||

| export * from './FaceLandmarks5'; | ||

| export * from './FaceLandmarks68'; | ||

| export * from './FaceMatch'; | ||

| export * from './FullFaceDescription'; | ||

| export * from './LabeledFaceDescriptors'; | ||

| //# sourceMappingURL=index.js.map |

@@ -30,5 +30,5 @@ import { getContext2dOrThrow, getDefaultDrawOptions, resolveInput } from 'tfjs-image-recognition-base'; | ||

| ctx.fillStyle = color; | ||

| landmarks.getPositions().forEach(function (pt) { return ctx.fillRect(pt.x - ptOffset, pt.y - ptOffset, lineWidth, lineWidth); }); | ||

| landmarks.positions.forEach(function (pt) { return ctx.fillRect(pt.x - ptOffset, pt.y - ptOffset, lineWidth, lineWidth); }); | ||

| }); | ||

| } | ||

| //# sourceMappingURL=drawLandmarks.js.map |

@@ -39,3 +39,3 @@ import * as tslib_1 from "tslib"; | ||

| boxes = detections.map(function (det) { return det instanceof FaceDetection | ||

| ? det.forSize(canvas.width, canvas.height).getBox().floor() | ||

| ? det.forSize(canvas.width, canvas.height).box.floor() | ||

| : det; }) | ||

@@ -42,0 +42,0 @@ .map(function (box) { return box.clipAtImageBorders(canvas.width, canvas.height); }); |

@@ -24,3 +24,3 @@ import * as tslib_1 from "tslib"; | ||

| var boxes = detections.map(function (det) { return det instanceof FaceDetection | ||

| ? det.forSize(imgWidth, imgHeight).getBox() | ||

| ? det.forSize(imgWidth, imgHeight).box | ||

| : det; }) | ||

@@ -27,0 +27,0 @@ .map(function (box) { return box.clipAtImageBorders(imgWidth, imgHeight); }); |
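Both drawing helpers convert each `FaceDetection` into a pixel box for the canvas, now through the `box` property instead of `getBox()`. They then call `clipAtImageBorders` so that no rectangle extends past the canvas. The sketch below shows roughly what such clipping does. `clipToImage` is hypothetical, and the library's own method may round or treat edge cases differently.

```typescript
interface PixelBox { x: number; y: number; width: number; height: number; }

// Clamps a box so that it lies entirely inside an imgWidth x imgHeight image.
// A box completely outside the image collapses to zero width or height.
function clipToImage(box: PixelBox, imgWidth: number, imgHeight: number): PixelBox {
  const left = Math.max(0, Math.min(box.x, imgWidth));
  const top = Math.max(0, Math.min(box.y, imgHeight));
  const right = Math.max(left, Math.min(box.x + box.width, imgWidth));
  const bottom = Math.max(top, Math.min(box.y + box.height, imgHeight));
  return { x: left, y: top, width: right - left, height: bottom - top };
}
```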

@@ -26,3 +26,3 @@ import * as tslib_1 from "tslib"; | ||

| function FaceLandmark68Net() { | ||

| return _super.call(this, 'FaceLandmark68LargeNet') || this; | ||

| return _super.call(this, 'FaceLandmark68Net') || this; | ||

| } | ||

@@ -32,3 +32,3 @@ FaceLandmark68Net.prototype.runNet = function (input) { | ||

| if (!params) { | ||

| throw new Error('FaceLandmark68LargeNet - load model before inference'); | ||

| throw new Error('FaceLandmark68Net - load model before inference'); | ||

| } | ||

@@ -35,0 +35,0 @@ return tf.tidy(function () { |

| import * as tf from '@tensorflow/tfjs-core'; | ||

| import { NetInput, NeuralNetwork, TNetInput, Dimensions } from 'tfjs-image-recognition-base'; | ||

| import { IDimensions, NetInput, NeuralNetwork, TNetInput } from 'tfjs-image-recognition-base'; | ||

| import { FaceLandmarks68 } from '../classes/FaceLandmarks68'; | ||

@@ -8,3 +8,3 @@ export declare class FaceLandmark68NetBase<NetParams> extends NeuralNetwork<NetParams> { | ||

| runNet(_: NetInput): tf.Tensor2D; | ||

| postProcess(output: tf.Tensor2D, inputSize: number, originalDimensions: Dimensions[]): tf.Tensor2D; | ||

| postProcess(output: tf.Tensor2D, inputSize: number, originalDimensions: IDimensions[]): tf.Tensor2D; | ||

| forwardInput(input: NetInput): tf.Tensor2D; | ||

@@ -11,0 +11,0 @@ forward(input: TNetInput): Promise<tf.Tensor2D>; |

@@ -7,2 +7,1 @@ import { FaceLandmark68Net } from './FaceLandmark68Net'; | ||

| export declare function createFaceLandmarkNet(weights: Float32Array): FaceLandmarkNet; | ||

| export declare function faceLandmarkNet(weights: Float32Array): FaceLandmarkNet; |

@@ -18,6 +18,2 @@ import * as tslib_1 from "tslib"; | ||

| } | ||

| export function faceLandmarkNet(weights) { | ||

| console.warn('faceLandmarkNet(weights: Float32Array) will be deprecated in future, use createFaceLandmarkNet instead'); | ||

| return createFaceLandmarkNet(weights); | ||

| } | ||

| //# sourceMappingURL=index.js.map |

| import { FaceRecognitionNet } from './FaceRecognitionNet'; | ||

| export * from './FaceRecognitionNet'; | ||

| export declare function createFaceRecognitionNet(weights: Float32Array): FaceRecognitionNet; | ||

| export declare function faceRecognitionNet(weights: Float32Array): FaceRecognitionNet; |

@@ -8,6 +8,2 @@ import { FaceRecognitionNet } from './FaceRecognitionNet'; | ||

| } | ||

| export function faceRecognitionNet(weights) { | ||

| console.warn('faceRecognitionNet(weights: Float32Array) will be deprecated in future, use createFaceRecognitionNet instead'); | ||

| return createFaceRecognitionNet(weights); | ||

| } | ||

| //# sourceMappingURL=index.js.map |

@@ -7,3 +7,2 @@ import * as tf from '@tensorflow/tfjs-core'; | ||

| export * from './euclideanDistance'; | ||

| export * from './faceDetectionNet'; | ||

| export * from './faceLandmarkNet'; | ||

@@ -13,2 +12,4 @@ export * from './faceRecognitionNet'; | ||

| export * from './mtcnn'; | ||

| export * from './ssdMobilenetv1'; | ||

| export * from './tinyFaceDetector'; | ||

| export * from './tinyYolov2'; |

@@ -7,3 +7,2 @@ import * as tf from '@tensorflow/tfjs-core'; | ||

| export * from './euclideanDistance'; | ||

| export * from './faceDetectionNet'; | ||

| export * from './faceLandmarkNet'; | ||

@@ -13,3 +12,5 @@ export * from './faceRecognitionNet'; | ||

| export * from './mtcnn'; | ||

| export * from './ssdMobilenetv1'; | ||

| export * from './tinyFaceDetector'; | ||

| export * from './tinyYolov2'; | ||

| //# sourceMappingURL=index.js.map |

| import * as tf from '@tensorflow/tfjs-core'; | ||

| import { Box, Dimensions } from 'tfjs-image-recognition-base'; | ||

| export declare function extractImagePatches(img: HTMLCanvasElement, boxes: Box[], {width, height}: Dimensions): Promise<tf.Tensor4D[]>; | ||

| import { Box, IDimensions } from 'tfjs-image-recognition-base'; | ||

| export declare function extractImagePatches(img: HTMLCanvasElement, boxes: Box[], {width, height}: IDimensions): Promise<tf.Tensor4D[]>; |

| import { Mtcnn } from './Mtcnn'; | ||

| export * from './Mtcnn'; | ||

| export * from './MtcnnOptions'; | ||

| export declare function createMtcnn(weights: Float32Array): Mtcnn; |

| import { Mtcnn } from './Mtcnn'; | ||

| export * from './Mtcnn'; | ||

| export * from './MtcnnOptions'; | ||

| export function createMtcnn(weights) { | ||

@@ -4,0 +5,0 @@ var net = new Mtcnn(); |

| import { NetInput, NeuralNetwork, TNetInput } from 'tfjs-image-recognition-base'; | ||

| import { MtcnnForwardParams, MtcnnResult, NetParams } from './types'; | ||

| import { FaceDetectionWithLandmarks } from '../classes/FaceDetectionWithLandmarks'; | ||

| import { FaceLandmarks5 } from '../classes/FaceLandmarks5'; | ||

| import { IMtcnnOptions } from './MtcnnOptions'; | ||

| import { NetParams } from './types'; | ||

| export declare class Mtcnn extends NeuralNetwork<NetParams> { | ||

| constructor(); | ||

| forwardInput(input: NetInput, forwardParams?: MtcnnForwardParams): Promise<{ | ||

| results: MtcnnResult[]; | ||

| forwardInput(input: NetInput, forwardParams?: IMtcnnOptions): Promise<{ | ||

| results: FaceDetectionWithLandmarks<FaceLandmarks5>[]; | ||

| stats: any; | ||

| }>; | ||

| forward(input: TNetInput, forwardParams?: MtcnnForwardParams): Promise<MtcnnResult[]>; | ||

| forwardWithStats(input: TNetInput, forwardParams?: MtcnnForwardParams): Promise<{ | ||

| results: MtcnnResult[]; | ||

| forward(input: TNetInput, forwardParams?: IMtcnnOptions): Promise<FaceDetectionWithLandmarks<FaceLandmarks5>[]>; | ||

| forwardWithStats(input: TNetInput, forwardParams?: IMtcnnOptions): Promise<{ | ||

| results: FaceDetectionWithLandmarks<FaceLandmarks5>[]; | ||

| stats: any; | ||

@@ -13,0 +16,0 @@ }>; |

@@ -5,2 +5,3 @@ import * as tslib_1 from "tslib"; | ||

| import { FaceDetection } from '../classes/FaceDetection'; | ||

| import { FaceDetectionWithLandmarks } from '../classes/FaceDetectionWithLandmarks'; | ||

| import { FaceLandmarks5 } from '../classes/FaceLandmarks5'; | ||

@@ -10,5 +11,5 @@ import { bgrToRgbTensor } from './bgrToRgbTensor'; | ||

| import { extractParams } from './extractParams'; | ||

| import { getDefaultMtcnnForwardParams } from './getDefaultMtcnnForwardParams'; | ||

| import { getSizesForScale } from './getSizesForScale'; | ||

| import { loadQuantizedParams } from './loadQuantizedParams'; | ||

| import { MtcnnOptions } from './MtcnnOptions'; | ||

| import { pyramidDown } from './pyramidDown'; | ||

@@ -50,3 +51,3 @@ import { stage1 } from './stage1'; | ||

| _a = imgTensor.shape.slice(1), height = _a[0], width = _a[1]; | ||

| _b = Object.assign({}, getDefaultMtcnnForwardParams(), forwardParams), minFaceSize = _b.minFaceSize, scaleFactor = _b.scaleFactor, maxNumScales = _b.maxNumScales, scoreThresholds = _b.scoreThresholds, scaleSteps = _b.scaleSteps; | ||

| _b = new MtcnnOptions(forwardParams), minFaceSize = _b.minFaceSize, scaleFactor = _b.scaleFactor, maxNumScales = _b.maxNumScales, scoreThresholds = _b.scoreThresholds, scaleSteps = _b.scaleSteps; | ||

| scales = (scaleSteps || pyramidDown(minFaceSize, scaleFactor, [height, width])) | ||

@@ -85,9 +86,6 @@ .filter(function (scale) { | ||

| stats.total_stage3 = Date.now() - ts; | ||

| results = out3.boxes.map(function (box, idx) { return ({ | ||

| faceDetection: new FaceDetection(out3.scores[idx], new Rect(box.left / width, box.top / height, box.width / width, box.height / height), { | ||

| height: height, | ||

| width: width | ||

| }), | ||

| faceLandmarks: new FaceLandmarks5(out3.points[idx].map(function (pt) { return pt.div(new Point(width, height)); }), { width: width, height: height }) | ||

| }); }); | ||

| results = out3.boxes.map(function (box, idx) { return new FaceDetectionWithLandmarks(new FaceDetection(out3.scores[idx], new Rect(box.left / width, box.top / height, box.width / width, box.height / height), { | ||

| height: height, | ||

| width: width | ||

| }), new FaceLandmarks5(out3.points[idx].map(function (pt) { return pt.sub(new Point(box.left, box.top)).div(new Point(box.width, box.height)); }), { width: box.width, height: box.height })); }); | ||

| return [2 /*return*/, onReturn({ results: results, stats: stats })]; | ||

@@ -94,0 +92,0 @@ } |
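Note the change in how MTCNN builds its 5-point landmarks. In 0.14.3 each point was divided by the full image size. In 0.15.0 the code first subtracts the box's top-left corner and then divides by the box size, so landmark coordinates are relative to the face box, and `FaceLandmarks5` receives the box dimensions. The sketch below shows that arithmetic in isolation; all helper names are hypothetical.

```typescript
interface P { x: number; y: number; }
interface FaceBox { left: number; top: number; width: number; height: number; }

// 0.15.0 convention: point relative to the face box (0..1 inside the box).
function toBoxRelative(pt: P, box: FaceBox): P {
  return { x: (pt.x - box.left) / box.width, y: (pt.y - box.top) / box.height };
}

// Inverse mapping: box-relative point back to absolute image pixels.
function fromBoxRelative(rel: P, box: FaceBox): P {
  return { x: rel.x * box.width + box.left, y: rel.y * box.height + box.top };
}

// 0.14.3 convention, for comparison: point relative to the whole image.
function toImageRelative(pt: P, imgWidth: number, imgHeight: number): P {
  return { x: pt.x / imgWidth, y: pt.y / imgHeight };
}
```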

| import * as tf from '@tensorflow/tfjs-core'; | ||

| import { ConvParams, FCParams } from 'tfjs-tiny-yolov2'; | ||

| import { FaceDetection } from '../classes/FaceDetection'; | ||

| import { FaceLandmarks5 } from '../classes/FaceLandmarks5'; | ||

| export declare type SharedParams = { | ||

@@ -37,12 +35,1 @@ conv1: ConvParams; | ||

| }; | ||

| export declare type MtcnnResult = { | ||

| faceDetection: FaceDetection; | ||

| faceLandmarks: FaceLandmarks5; | ||

| }; | ||

| export declare type MtcnnForwardParams = { | ||

| minFaceSize?: number; | ||

| scaleFactor?: number; | ||

| maxNumScales?: number; | ||

| scoreThresholds?: number[]; | ||

| scaleSteps?: number[]; | ||

| }; |
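The removed `MtcnnForwardParams` type lists the settings that now live in `MtcnnOptions`. Unless explicit `scaleSteps` are given, `minFaceSize` and `scaleFactor` drive the image pyramid through `pyramidDown(minFaceSize, scaleFactor, [height, width])` in the `Mtcnn.js` hunk above. The sketch below follows the scale computation used in common MTCNN implementations and assumes a 12-pixel first-stage window. face-api.js's `pyramidDown` may differ in detail, and `pyramidScales` is a hypothetical name.

```typescript
// Scales at which the image is resized so that faces of at least
// `minFaceSize` pixels map onto the assumed 12x12 first-stage window.
function pyramidScales(minFaceSize: number, scaleFactor: number, [height, width]: [number, number]): number[] {
  if (!(minFaceSize > 0)) throw new RangeError("minFaceSize must be positive");
  if (!(scaleFactor > 0 && scaleFactor < 1)) throw new RangeError("scaleFactor must be in (0, 1)");

  const cellSize = 12;
  const m = cellSize / minFaceSize;
  let minLayer = Math.min(height, width) * m;
  const scales: number[] = [];
  let k = 0;
  // Keep shrinking until the smaller image side no longer fits one cell.
  while (minLayer >= cellSize) {
    scales.push(m * Math.pow(scaleFactor, k));
    minLayer *= scaleFactor;
    k += 1;
  }
  return scales;
}
```

A larger `minFaceSize` produces a shorter pyramid, which is why raising it speeds up MTCNN on webcam frames.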

| import { Point, TNetInput } from 'tfjs-image-recognition-base'; | ||

| import { TinyYolov2 as TinyYolov2Base, TinyYolov2Types } from 'tfjs-tiny-yolov2'; | ||

| import { ITinyYolov2Options, TinyYolov2 as TinyYolov2Base } from 'tfjs-tiny-yolov2'; | ||

| import { FaceDetection } from '../classes'; | ||

@@ -8,4 +8,4 @@ export declare class TinyYolov2 extends TinyYolov2Base { | ||

| readonly anchors: Point[]; | ||

| locateFaces(input: TNetInput, forwardParams: TinyYolov2Types.TinyYolov2ForwardParams): Promise<FaceDetection[]>; | ||

| locateFaces(input: TNetInput, forwardParams: ITinyYolov2Options): Promise<FaceDetection[]>; | ||

| protected loadQuantizedParams(modelUri: string | undefined): any; | ||

| } |

@@ -17,2 +17,16 @@ const dataFiles = [ | ||

| const exclude = process.env.UUT | ||

| ? [ | ||

| 'dom', | ||

| 'faceLandmarkNet', | ||

| 'faceRecognitionNet', | ||

| 'ssdMobilenetv1', | ||

| 'tinyFaceDetector', | ||

| 'mtcnn', | ||

| 'tinyYolov2' | ||

| ] | ||

| .filter(ex => ex !== process.env.UUT) | ||

| .map(ex => `test/tests/${ex}/*.ts`) | ||

| : [] | ||

| module.exports = function(config) { | ||

@@ -25,2 +39,3 @@ config.set({ | ||

| ].concat(dataFiles), | ||

| exclude, | ||

| preprocessors: { | ||

@@ -27,0 +42,0 @@ '**/*.ts': ['karma-typescript'] |
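The karma change derives the `exclude` list from the `UUT` ("unit under test") environment variable, so that a single test folder can be run on its own. The sketch below repeats the same logic as a pure, testable function. `TEST_SUITES` and `computeExcludes` are hypothetical names.

```typescript
// Test folders, mirroring the list in the karma config above.
const TEST_SUITES: readonly string[] = [
  'dom', 'faceLandmarkNet', 'faceRecognitionNet', 'ssdMobilenetv1',
  'tinyFaceDetector', 'mtcnn', 'tinyYolov2',
];

// No UUT: exclude nothing. Otherwise exclude every suite except the UUT.
function computeExcludes(uut: string | undefined): string[] {
  if (!uut) return [];
  return TEST_SUITES.filter(ex => ex !== uut).map(ex => `test/tests/${ex}/*.ts`);
}
```

The `test-*` scripts added to package.json set `UUT` with Windows `cmd` syntax (`set UUT=mtcnn&& karma start`). The missing space before `&&` keeps a trailing space out of the value. In a POSIX shell the equivalent would be `UUT=mtcnn npx karma start`. A misspelled `UUT` matches no suite, so all seven folders are excluded.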

| { | ||

| "name": "face-api.js", | ||

| "version": "0.14.3", | ||

| "version": "0.15.0", | ||

| "description": "JavaScript API for face detection and face recognition in the browser with tensorflow.js", | ||

@@ -14,3 +14,10 @@ "module": "./build/es6/index.js", | ||

| "build": "npm run rollup && npm run rollup-min && npm run tsc && npm run tsc-es6", | ||

| "test": "karma start" | ||

| "test": "karma start", | ||

| "test-facelandmarknets": "set UUT=faceLandmarkNet&& karma start", | ||

| "test-facerecognitionnet": "set UUT=faceRecognitionNet&& karma start", | ||

| "test-ssdmobilenetv1": "set UUT=ssdMobilenetv1&& karma start", | ||

| "test-tinyfacedetector": "set UUT=tinyFaceDetector&& karma start", | ||

| "test-mtcnn": "set UUT=mtcnn&& karma start", | ||

| "test-tinyyolov2": "set UUT=tinyYolov2&& karma start", | ||

| "docs": "typedoc --options ./typedoc.config.js ./src" | ||

| }, | ||

@@ -28,4 +35,4 @@ "keywords": [ | ||

| "@tensorflow/tfjs-core": "^0.13.2", | ||

| "tfjs-image-recognition-base": "^0.1.2", | ||

| "tfjs-tiny-yolov2": "^0.1.3", | ||

| "tfjs-image-recognition-base": "^0.1.3", | ||

| "tfjs-tiny-yolov2": "^0.2.1", | ||

| "tslib": "^1.9.3" | ||

@@ -32,0 +39,0 @@ }, |


README.md

@@ -7,2 +7,35 @@ # face-api.js | ||

| Table of Contents: | ||

| * **[Resources](#resources)** | ||

| * **[Live Demos](#live-demos)** | ||

| * **[Tutorials](#tutorials)** | ||

| * **[Examples](#examples)** | ||

| * **[Running the Examples](#running-the-examples)** | ||

| * **[Available Models](#models)** | ||

| * **[Face Detection Models](#models-face-detection)** | ||

| * **[68 Point Face Landmark Detection Models](#models-face-landmark-detection)** | ||

| * **[Face Recognition Model](#models-face-recognition)** | ||

| * **[Usage](#usage)** | ||

| * **[Loading the Models](#usage-loading-models)** | ||

| * **[High Level API](#usage-high-level-api)** | ||

| * **[Displaying Detection Results](#usage-displaying-detection-results)** | ||

| * **[Face Detection Options](#usage-face-detection-options)** | ||

| * **[Utility Classes](#usage-utility-classes)** | ||

| * **[Other Useful Utility](#other-useful-utility)** | ||

| <a name="resources"></a> | ||

| # Resources | ||

| <a name="live-demos"></a> | ||

| ## Live Demos | ||

| **[Check out the live demos!](https://justadudewhohacks.github.io/face-api.js/)** | ||

| <a name="tutorials"></a> | ||

| ## Tutorials | ||

| Check out my face-api.js tutorials: | ||

@@ -13,37 +46,18 @@ | ||

| **Check out the live demos [here](https://justadudewhohacks.github.io/face-api.js/)!** | ||

| <a name="examples"></a> | ||

| Table of Contents: | ||

| # Examples | ||

| * **[Running the Examples](#running-the-examples)** | ||

| * **[About the Package](#about-the-package)** | ||

| * **[Face Detection - SSD Mobilenet v1](#about-face-detection-ssd)** | ||

| * **[Face Detection - Tiny Yolo v2](#about-face-detection-yolo)** | ||

| * **[Face Detection & 5 Point Face Landmarks - MTCNN](#about-face-detection-mtcnn)** | ||

| * **[Face Recognition](#about-face-recognition)** | ||

| * **[68 Point Face Landmark Detection](#about-face-landmark-detection)** | ||

| * **[Usage](#usage)** | ||

| * **[Loading the Models](#usage-load-models)** | ||

| * **[Face Detection - SSD Mobilenet v1](#usage-face-detection-ssd)** | ||

| * **[Face Detection - Tiny Yolo v2](#usage-face-detection-yolo)** | ||

| * **[Face Detection & 5 Point Face Landmarks - MTCNN](#usage-face-detection-mtcnn)** | ||

| * **[Face Recognition](#usage-face-recognition)** | ||

| * **[68 Point Face Landmark Detection](#usage-face-landmark-detection)** | ||

| * **[Shortcut Functions for Full Face Description](#shortcut-functions)** | ||

| ## Face Recognition | ||

| ## Examples | ||

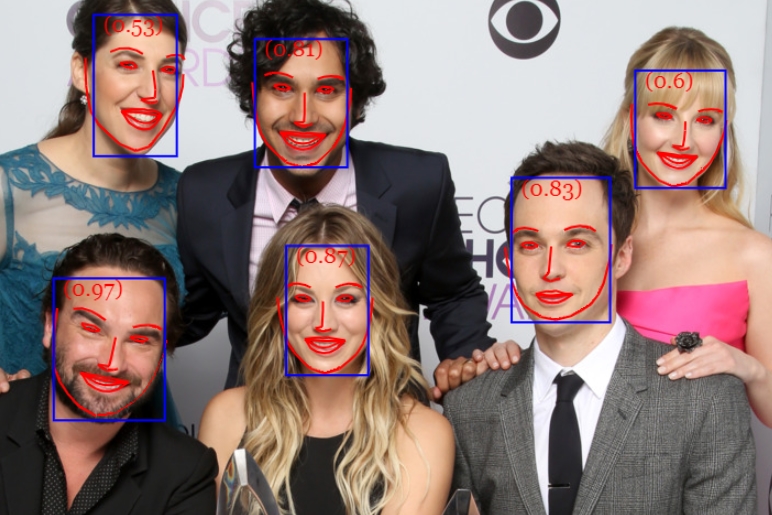

| ### Face Recognition | ||

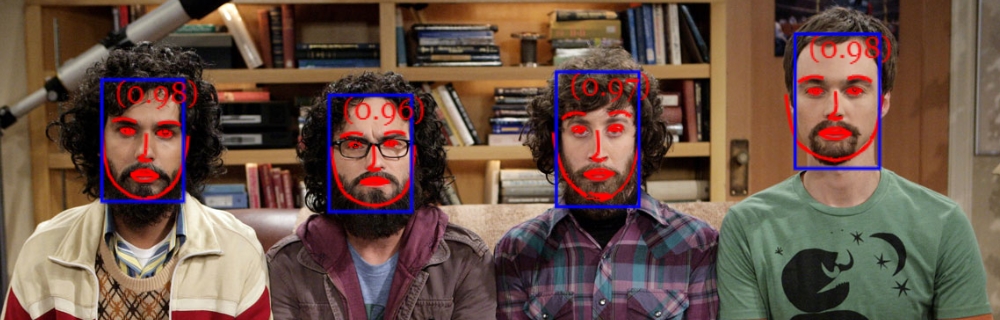

|  | ||

|  | ||

|  | ||

| ### Face Similarity | ||

| ## Face Similarity | ||


| ### Face Landmarks | ||

| ## Face Landmark Detection | ||


@@ -53,10 +67,8 @@ | ||

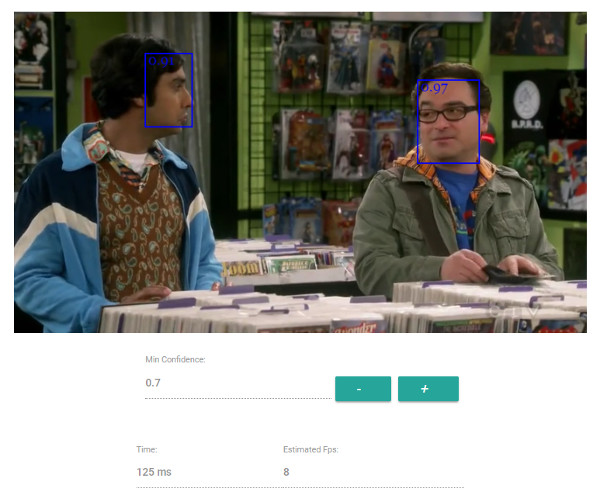

| ### Live Face Detection | ||

| ## Realtime Face Tracking | ||

| **SSD Mobilenet v1** | ||


| ## MTCNN | ||

| **MTCNN** | ||


@@ -69,3 +81,4 @@ | ||

| ``` bash | ||

| cd examples | ||

| git clone https://github.com/justadudewhohacks/face-api.js.git | ||

| cd face-api.js/examples | ||

| npm i | ||

@@ -77,64 +90,54 @@ npm start | ||

| <a name="about-the-package"></a> | ||

| <a name="models"></a> | ||

| ## About the Package | ||

| # Available Models | ||

| <a name="about-face-detection-ssd"></a> | ||

| <a name="models-face-detection"></a> | ||

| ### Face Detection - SSD Mobilenet v1 | ||

| ## Face Detection Models | ||

| For face detection, this project implements an SSD (Single Shot Multibox Detector) based on MobileNetV1. The neural net computes the location of each face in an image and returns the bounding boxes together with each face's probability. This face detector aims for high accuracy in detecting face bounding boxes rather than low inference time. | ||

| ### SSD Mobilenet V1 | ||

| For face detection, this project implements an SSD (Single Shot Multibox Detector) based on MobileNetV1. The neural net computes the location of each face in an image and returns the bounding boxes together with each face's probability. This face detector aims for high accuracy in detecting face bounding boxes rather than low inference time. The size of the quantized model is about 5.4 MB (**ssd_mobilenetv1_model**). | ||

| The face detection model has been trained on the [WIDERFACE dataset](http://mmlab.ie.cuhk.edu.hk/projects/WIDERFace/) and the weights are provided by [yeephycho](https://github.com/yeephycho) in [this](https://github.com/yeephycho/tensorflow-face-detection) repo. | ||

| <a name="about-face-detection-yolo"></a> | ||

| ### Tiny Face Detector | ||

| ### Face Detection - Tiny Yolo v2 | ||

| The Tiny Face Detector is a very performant, realtime face detector that is much faster, smaller and less resource-consuming than the SSD Mobilenet V1 face detector; in return, it performs slightly worse at detecting small faces. This model is extremely mobile and web friendly, so it should be your GO-TO face detector on mobile devices and resource-limited clients. The size of the quantized model is only 190 KB (**tiny_face_detector_model**). | ||

| The Tiny Yolo v2 implementation is a very performant face detector that can easily adapt to different input image sizes, so it can be used as an alternative to SSD Mobilenet v1 to trade off accuracy for performance (inference time). In general, the model's ability to locate smaller face bounding boxes is not as accurate as that of SSD Mobilenet v1. | ||

| The face detector has been trained on a custom dataset of ~14K images labeled with bounding boxes. Furthermore, the model has been trained to predict bounding boxes that entirely cover facial feature points, so it generally produces better results than SSD Mobilenet V1 in combination with subsequent face landmark detection. | ||

| The face detector has been trained on a custom dataset of ~10K images labeled with bounding boxes and uses depthwise separable convolutions instead of regular convolutions, which ensures very fast inference and allows for a quantized model size of only 1.7MB, making the model extremely mobile and web friendly. Thus, the Tiny Yolo v2 face detector should be your GO-TO face detector on mobile devices. | ||

| This model is basically an even tinier version of Tiny Yolo V2, replacing the regular convolutions of Yolo with depthwise separable convolutions. Yolo is fully convolutional and can thus easily adapt to different input image sizes to trade off accuracy for performance (inference time). | ||

| <a name="about-face-detection-mtcnn"></a> | ||

| ### MTCNN | ||

| ### Face Detection & 5 Point Face Landmarks - MTCNN | ||

| **Note, this model is mostly kept in this repo for experimental reasons. In general the other face detectors should perform better, but of course you are free to play around with MTCNN.** | ||

| MTCNN (Multi-task Cascaded Convolutional Neural Networks) is an alternative face detector to SSD Mobilenet v1 and Tiny Yolo v2 that offers much more room for configuration. By tuning the input parameters, MTCNN is able to detect a wide range of face bounding box sizes. MTCNN is a 3-stage cascaded CNN that simultaneously returns 5 face landmark points along with the bounding boxes and scores for each face. By limiting the minimum size of faces expected in an image, MTCNN allows you to process frames from your webcam in realtime. Additionally, the model size is only 2MB. | ||

| MTCNN (Multi-task Cascaded Convolutional Neural Networks) is an alternative face detector to SSD Mobilenet v1 and Tiny Yolo v2 that offers much more room for configuration. By tuning the input parameters, MTCNN should be able to detect a wide range of face bounding box sizes. MTCNN is a 3-stage cascaded CNN that simultaneously returns 5 face landmark points along with the bounding boxes and scores for each face. Additionally, the model size is only 2MB. | ||

| MTCNN has been presented in the paper [Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks](https://kpzhang93.github.io/MTCNN_face_detection_alignment/paper/spl.pdf) by Zhang et al. and the model weights are provided in the official [repo](https://github.com/kpzhang93/MTCNN_face_detection_alignment) of the MTCNN implementation. | ||

| <a name="about-face-recognition"></a> | ||

| <a name="models-face-landmark-detection"></a> | ||

| ### Face Recognition | ||

| ## 68 Point Face Landmark Detection Models | ||

| For face recognition, a ResNet-34-like architecture is implemented to compute a face descriptor (a feature vector with 128 values) from any given face image, which is used to describe the characteristics of a person's face. The model is **not** limited to the set of faces used for training, meaning you can use it for face recognition of any person, for example yourself. You can determine the similarity of two arbitrary faces by comparing their face descriptors, for example by computing the Euclidean distance or using any other classifier of your choice. | ||

| This package implements a very lightweight and fast, yet accurate 68 point face landmark detector. The default model has a size of only 350kb (**face_landmark_68_model**) and the tiny model is only 80kb (**face_landmark_68_tiny_model**). Both models employ the ideas of depthwise separable convolutions as well as densely connected blocks. The models have been trained on a dataset of ~35k face images labeled with 68 face landmark points. | ||

| The neural net is equivalent to the **FaceRecognizerNet** used in [face-recognition.js](https://github.com/justadudewhohacks/face-recognition.js) and the net used in the [dlib](https://github.com/davisking/dlib/blob/master/examples/dnn_face_recognition_ex.cpp) face recognition example. The weights have been trained by [davisking](https://github.com/davisking) and the model achieves a prediction accuracy of 99.38% on the LFW (Labeled Faces in the Wild) benchmark for face recognition. | ||

| <a name="models-face-recognition"></a> | ||

| <a name="about-face-landmark-detection"></a> | ||

| ## Face Recognition Model | ||

| ### 68 Point Face Landmark Detection | ||

| For face recognition, a ResNet-34-like architecture is implemented to compute a face descriptor (a feature vector with 128 values) from any given face image, which is used to describe the characteristics of a person's face. The model is **not** limited to the set of faces used for training, meaning you can use it for face recognition of any person, for example yourself. You can determine the similarity of two arbitrary faces by comparing their face descriptors, for example by computing the Euclidean distance or using any other classifier of your choice. | ||

| This package implements a very lightweight and fast, yet accurate 68 point face landmark detector. The default model has a size of only 350kb and the tiny model is only 80kb. Both models employ the ideas of depthwise separable convolutions as well as densely connected blocks. The models have been trained on a dataset of ~35k face images labeled with 68 face landmark points. | ||

| The neural net is equivalent to the **FaceRecognizerNet** used in [face-recognition.js](https://github.com/justadudewhohacks/face-recognition.js) and the net used in the [dlib](https://github.com/davisking/dlib/blob/master/examples/dnn_face_recognition_ex.cpp) face recognition example. The weights have been trained by [davisking](https://github.com/davisking) and the model achieves a prediction accuracy of 99.38% on the LFW (Labeled Faces in the Wild) benchmark for face recognition. | ||

| <a name="usage"></a> | ||

| The size of the quantized model is roughly 6.2 MB (**face_recognition_model**). | ||

| ## Usage | ||

| # Usage | ||

| Get the latest build from dist/face-api.js or dist/face-api.min.js and include the script: | ||

| <a name="usage-loading-models"></a> | ||

| ``` html | ||

| <script src="face-api.js"></script> | ||

| ``` | ||

| ## Loading the Models | ||

| Or install the package: | ||

| ``` bash | ||

| npm i face-api.js | ||

| ``` | ||

| <a name="usage-load-models"></a> | ||

| ### Loading the Models | ||

| To load a model, you have to provide the corresponding manifest.json file as well as the model weight files (shards) as assets. Simply copy them to your public or assets folder. The manifest.json and shard files of a model have to be located in the same directory / accessible under the same route. | ||

@@ -145,28 +148,16 @@ | ||

| ``` javascript | ||

| await faceapi.loadFaceDetectionModel('/models') | ||

| await faceapi.loadSsdMobilenetv1Model('/models') | ||

| // accordingly for the other models: | ||

| // await faceapi.loadTinyFaceDetectorModel('/models') | ||

| // await faceapi.loadMtcnnModel('/models') | ||

| // await faceapi.loadFaceLandmarkModel('/models') | ||

| // await faceapi.loadFaceLandmarkTinyModel('/models') | ||

| // await faceapi.loadFaceRecognitionModel('/models') | ||

| // await faceapi.loadMtcnnModel('/models') | ||

| // await faceapi.loadTinyYolov2Model('/models') | ||

| ``` | ||
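In 0.15.0 the load functions take the model directory (`'/models'`) instead of a full manifest path. The 0.14.3 examples loaded files such as `/models/face_landmark_68_model-weights_manifest.json`, which suggests that each manifest is named after its model plus `-weights_manifest.json`. The hypothetical helper below reproduces that naming. It can be handy for checking that the files are really served from the expected route. It is not a face-api.js function, and the library's own path resolution may differ.

```typescript
// Builds "<dir>/<modelName>-weights_manifest.json", tolerating a trailing slash.
// The naming scheme is inferred from the 0.14.3 README examples (assumption).
function manifestUrl(modelDir: string, modelName: string): string {
  const dir = modelDir.endsWith('/') ? modelDir.slice(0, -1) : modelDir;
  return `${dir}/${modelName}-weights_manifest.json`;
}
```

For example, calling `fetch(manifestUrl('/models', 'ssd_mobilenetv1_model'))` in the browser and checking `res.ok` confirms that the manifest sits next to its shards.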

| As an alternative, you can also create instances of the neural nets: | ||

| Alternatively, you can also create instances of the neural nets: | ||

| ``` javascript | ||

| const net = new faceapi.FaceDetectionNet() | ||

| // accordingly for the other models: | ||

| // const net = new faceapi.FaceLandmark68Net() | ||

| // const net = new faceapi.FaceLandmark68TinyNet() | ||

| // const net = new faceapi.FaceRecognitionNet() | ||

| // const net = new faceapi.Mtcnn() | ||

| // const net = new faceapi.TinyYolov2() | ||

| await net.load('/models/face_detection_model-weights_manifest.json') | ||

| // await net.load('/models/face_landmark_68_model-weights_manifest.json') | ||

| // await net.load('/models/face_landmark_68_tiny_model-weights_manifest.json') | ||

| // await net.load('/models/face_recognition_model-weights_manifest.json') | ||

| // await net.load('/models/mtcnn_model-weights_manifest.json') | ||

| // await net.load('/models/tiny_yolov2_separable_conv_model-weights_manifest.json') | ||

| const net = new faceapi.SsdMobilenetv1() | ||

| await net.load('/models') | ||

| ``` | ||

@@ -178,5 +169,3 @@ | ||

| // using fetch | ||

| const res = await fetch('/models/face_detection_model.weights') | ||

| const weights = new Float32Array(await res.arrayBuffer()) | ||

| net.load(weights) | ||

| net.load(await faceapi.fetchNetWeights('/models/face_detection_model.weights')) | ||
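`faceapi.fetchNetWeights` replaces the manual `fetch` + `Float32Array` steps shown for 0.14.3. Those steps reinterpret the raw `.weights` file as 32-bit floats, so the file's byte length must be a multiple of 4. A sketch of that conversion with a sanity check; `toWeights` is a hypothetical helper:

```typescript
// Reinterprets a raw weights buffer as 32-bit floats (a view, not a copy).
function toWeights(buffer: ArrayBuffer): Float32Array {
  if (buffer.byteLength % Float32Array.BYTES_PER_ELEMENT !== 0) {
    throw new Error(`weights buffer length ${buffer.byteLength} is not a multiple of 4`);
  }
  return new Float32Array(buffer);
}
```

With `fetch`, the equivalent of the old example is `toWeights(await (await fetch(uri)).arrayBuffer())`. The length check turns a truncated or HTML error response into an explicit error rather than a confusing failure later in `net.load`.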

@@ -189,157 +178,372 @@ // using axios | ||

## High Level API

In the following, **input** can be an HTML img, video or canvas element, or the id of that element.

``` html
<img id="myImg" src="images/example.png" />
<video id="myVideo" src="media/example.mp4"></video>
<canvas id="myCanvas"></canvas>
```

``` javascript
const input = document.getElementById('myImg')
// const input = document.getElementById('myVideo')
// const input = document.getElementById('myCanvas')
// or simply:
// const input = 'myImg'
```

### Detecting Faces

Detect all faces in an image. Returns **Array<[FaceDetection](#interface-face-detection)>**:

``` javascript
const detections = await faceapi.detectAllFaces(input)
```

Detect the face with the highest confidence score in an image. Returns **[FaceDetection](#interface-face-detection) | undefined**:

``` javascript
const detection = await faceapi.detectSingleFace(input)
```
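The selection that **detectSingleFace** describes, keeping only the detection with the highest confidence score, can be sketched in plain JavaScript. This is an illustration, not face-api.js's internal code: the detections below are hypothetical plain objects with only a `score` field, standing in for **FaceDetection** instances.

```javascript
// Returns the detection with the highest score, or undefined for an empty array
// (mirroring the `| undefined` return type of detectSingleFace).
// Detections are hypothetical plain objects with a numeric `score` field.
function pickHighestScore(detections) {
  let best
  for (const det of detections) {
    if (best === undefined || det.score > best.score) {
      best = det
    }
  }
  return best
}

const detections = [{ score: 0.62 }, { score: 0.97 }, { score: 0.81 }]
console.log(pickHighestScore(detections).score) // 0.97
console.log(pickHighestScore([])) // undefined
```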

By default, **detectAllFaces** and **detectSingleFace** use the SSD Mobilenet V1 face detector. You can specify the face detector by passing the corresponding options object:

``` javascript
const detections1 = await faceapi.detectAllFaces(input, new faceapi.SsdMobilenetv1Options())
const detections2 = await faceapi.detectAllFaces(input, new faceapi.TinyFaceDetectorOptions())
const detections3 = await faceapi.detectAllFaces(input, new faceapi.MtcnnOptions())
```

You can tune the options of each face detector as shown [here](#usage-face-detection-options).

### Detecting 68 Face Landmark Points

**After face detection, we can also predict the facial landmarks for each detected face as follows:**

Detect all faces in an image and compute 68 point face landmarks for each detected face. Returns **Array<[FaceDetectionWithLandmarks](#interface-face-detection-with-landmarks)>**:

``` javascript
const detectionsWithLandmarks = await faceapi.detectAllFaces(input).withFaceLandmarks()
```

Detect the face with the highest confidence score in an image and compute 68 point face landmarks for that face. Returns **[FaceDetectionWithLandmarks](#interface-face-detection-with-landmarks) | undefined**:

``` javascript
const detectionWithLandmarks = await faceapi.detectSingleFace(input).withFaceLandmarks()
```

You can also use the tiny landmark model instead of the default model:

``` javascript
const useTinyModel = true
const detectionsWithLandmarks = await faceapi.detectAllFaces(input).withFaceLandmarks(useTinyModel)
```
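The 68 points are commonly ordered according to the iBUG 300-W annotation scheme (jaw, eyebrows, nose, eyes, mouth). Assuming the `positions` array of a **FaceLandmarks** object follows that ordering, splitting it into facial regions could look like the sketch below. The region names, the index ranges as written here, and the `splitLandmarks` helper are hypothetical and not part of the face-api.js API.

```javascript
// Assumed index ranges [start, end) of the 68-point iBUG 300-W layout.
const LANDMARK_REGIONS = {
  jawOutline: [0, 17],
  rightEyebrow: [17, 22],
  leftEyebrow: [22, 27],
  nose: [27, 36],
  rightEye: [36, 42],
  leftEye: [42, 48],
  mouth: [48, 68]
}

// Splits a flat array of 68 {x, y} points into named regions.
function splitLandmarks(positions) {
  if (positions.length !== 68) {
    throw new Error(`expected 68 points, got ${positions.length}`)
  }
  const regions = {}
  for (const [name, [start, end]] of Object.entries(LANDMARK_REGIONS)) {
    regions[name] = positions.slice(start, end)
  }
  return regions
}

// Dummy points for illustration; in practice these would be landmark positions.
const positions = Array.from({ length: 68 }, (_, i) => ({ x: i, y: i }))
const regions = splitLandmarks(positions)
console.log(regions.mouth.length) // 20
```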

### Computing Face Descriptors

**After face detection and facial landmark prediction, the face descriptors for each face can be computed as follows:**

Detect all faces in an image and compute 68 point face landmarks and the face descriptor for each detected face. Returns **Array<[FullFaceDescription](#interface-full-face-description)>**:

``` javascript
const fullFaceDescriptions = await faceapi.detectAllFaces(input).withFaceLandmarks().withFaceDescriptors()
```

Detect the face with the highest confidence score in an image and compute 68 point face landmarks and the face descriptor for that face. Returns **[FullFaceDescription](#interface-full-face-description) | undefined**:

``` javascript
const fullFaceDescription = await faceapi.detectSingleFace(input).withFaceLandmarks().withFaceDescriptor()
```
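Face descriptors are compared by their Euclidean distance: the smaller the distance, the more similar the two faces. Below is a minimal, library-free sketch of that formula, assuming descriptors are equal-length numeric arrays such as `Float32Array`s. face-api.js provides its own helper, `faceapi.euclideanDistance`, which you would normally use instead.

```javascript
// Euclidean distance between two equal-length descriptors (arrays or Float32Arrays).
function euclideanDistance(a, b) {
  if (a.length !== b.length) {
    throw new Error('descriptors must have the same length')
  }
  let sum = 0
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i]
    sum += diff * diff
  }
  return Math.sqrt(sum)
}

// Toy 2-dimensional vectors keep the arithmetic easy to check;
// real face descriptors are much longer.
console.log(euclideanDistance(new Float32Array([0, 0]), new Float32Array([3, 4]))) // 5
```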

### Face Recognition by Matching Descriptors

To perform face recognition, you can use `faceapi.FaceMatcher` to compare reference face descriptors to query face descriptors.

First, we initialize the FaceMatcher with the reference data. For example, we can simply detect faces in a **referenceImage** and match the descriptors of the detected faces to faces in subsequent images:

``` javascript
const fullFaceDescriptions = await faceapi
  .detectAllFaces(referenceImage)
  .withFaceLandmarks()
  .withFaceDescriptors()

if (!fullFaceDescriptions.length) {
  // no faces found in the reference image, so there is nothing to match against
  return
}

// create FaceMatcher with automatically assigned labels
// from the detection results for the reference image
const faceMatcher = new faceapi.FaceMatcher(fullFaceDescriptions)
```

Now we can recognize a person's face shown in **queryImage1**:

``` javascript
const singleFullFaceDescription = await faceapi
  .detectSingleFace(queryImage1)
  .withFaceLandmarks()
  .withFaceDescriptor()

if (singleFullFaceDescription) {
  const bestMatch = faceMatcher.findBestMatch(singleFullFaceDescription.descriptor)
  console.log(bestMatch.toString())
}
```

Or we can recognize all faces shown in **queryImage2**:

``` javascript
const fullFaceDescriptions = await faceapi
  .detectAllFaces(queryImage2)
  .withFaceLandmarks()
  .withFaceDescriptors()

fullFaceDescriptions.forEach(fd => {
  const bestMatch = faceMatcher.findBestMatch(fd.descriptor)
  console.log(bestMatch.toString())
})
```

You can also create labeled reference descriptors as follows:

``` javascript
// descriptorObama1, descriptorObama2 and descriptorTrump are previously computed face descriptors
const labeledDescriptors = [
  new faceapi.LabeledFaceDescriptors(
    'obama',
    [descriptorObama1, descriptorObama2]
  ),
  new faceapi.LabeledFaceDescriptors(
    'trump',
    [descriptorTrump]
  )
]

const faceMatcher = new faceapi.FaceMatcher(labeledDescriptors)
```
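How **FaceMatcher** picks the best match can be approximated with a short sketch. The behaviour below is an assumption for illustration, not the library's code: each label is scored by the mean Euclidean distance between the query descriptor and that label's reference descriptors, the label with the smallest mean wins, and the result is `'unknown'` unless that distance is below a threshold (0.6 in this sketch). `findBestMatch` here is a hypothetical standalone function that only borrows the method name.

```javascript
function euclideanDistance(a, b) {
  let sum = 0
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i]
    sum += diff * diff
  }
  return Math.sqrt(sum)
}

// Assumed matching rule: smallest mean distance per label, 'unknown' above the threshold.
function findBestMatch(labeledDescriptors, queryDescriptor, distanceThreshold = 0.6) {
  let best = { label: 'unknown', distance: Infinity }
  for (const { label, descriptors } of labeledDescriptors) {
    const meanDistance =
      descriptors.reduce((acc, d) => acc + euclideanDistance(d, queryDescriptor), 0) / descriptors.length
    if (meanDistance < best.distance) {
      best = { label, distance: meanDistance }
    }
  }
  return best.distance < distanceThreshold ? best : { label: 'unknown', distance: best.distance }
}

// Toy 2-dimensional descriptors with hypothetical labels.
const references = [
  { label: 'alice', descriptors: [[0, 0], [0, 0.2]] },
  { label: 'bob', descriptors: [[1, 1]] }
]
console.log(findBestMatch(references, [0, 0.1]).label) // alice
console.log(findBestMatch(references, [5, 5]).label) // unknown
```

Averaging over several reference descriptors per label, as with the two `obama` descriptors above, makes a single unusual reference image weigh less in the decision.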

<a name="usage-displaying-detection-results"></a>

## Displaying Detection Results

Drawing the detected faces into a canvas:

``` javascript
const detections = await faceapi.detectAllFaces(input)

// resize the detected boxes in case your displayed image has a different size than the original
const detectionsForSize = detections.map(det => det.forSize(input.width, input.height))

// draw them into a canvas
const canvas = document.getElementById('overlay')
canvas.width = input.width
canvas.height = input.height
faceapi.drawDetection(canvas, detectionsForSize, { withScore: true })
```
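Resizing a detection for display amounts to scaling its box coordinates by the ratio between the displayed size and the original image size. The sketch below shows that arithmetic with plain objects; `scaleBox` and the `{ x, y, width, height }` shape are hypothetical stand-ins for face-api.js's own box classes and `forSize` method.

```javascript
// Rescales a box given in original image pixels to a displayed size.
function scaleBox(box, originalDims, displayDims) {
  const sx = displayDims.width / originalDims.width
  const sy = displayDims.height / originalDims.height
  return {
    x: box.x * sx,
    y: box.y * sy,
    width: box.width * sx,
    height: box.height * sy
  }
}

// A 640x480 image displayed at 320x240: every coordinate is halved.
const box = { x: 100, y: 60, width: 200, height: 120 }
console.log(scaleBox(box, { width: 640, height: 480 }, { width: 320, height: 240 }))
// { x: 50, y: 30, width: 100, height: 60 }
```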

Drawing face landmarks into a canvas:

``` javascript
const detectionsWithLandmarks = await faceapi
  .detectAllFaces(input)
  .withFaceLandmarks()

// resize the detected boxes and landmarks in case your displayed image has a different size than the original
const detectionsWithLandmarksForSize = detectionsWithLandmarks.map(det => det.forSize(input.width, input.height))

// draw them into a canvas
const canvas = document.getElementById('overlay')
canvas.width = input.width
canvas.height = input.height
faceapi.drawLandmarks(canvas, detectionsWithLandmarksForSize, { drawLines: true })
```

Finally, you can also draw boxes with custom text:

``` javascript
// x, y, width, height and text are placeholders for your own values
const boxesWithText = [
  new faceapi.BoxWithText(new faceapi.Rect(x, y, width, height), text),
  new faceapi.BoxWithText(new faceapi.Rect(0, 0, 50, 50), 'some text')
]

const canvas = document.getElementById('overlay')
faceapi.drawDetection(canvas, boxesWithText)
```

<a name="usage-face-detection-options"></a>

## Face Detection Options

### SsdMobilenetv1Options

``` javascript
export interface ISsdMobilenetv1Options {
  // minimum confidence threshold
  // default: 0.5
  minConfidence?: number

  // maximum number of faces to return
  // default: 100
  maxResults?: number
}

// example
const options = new faceapi.SsdMobilenetv1Options({ minConfidence: 0.8 })
```
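To make the two options concrete, here is a sketch of how a score threshold and a result limit could be applied to a list of scored candidates. It assumes that candidates scoring at least **minConfidence** are kept and that the highest-scoring ones are returned first. The actual SSD post-processing also performs non-maximum suppression, which this hypothetical `applyScoreOptions` helper omits.

```javascript
// Hypothetical sketch: threshold, sort, then cap the number of results.
// Defaults mirror the documented defaults (0.5 and 100).
function applyScoreOptions(candidates, { minConfidence = 0.5, maxResults = 100 } = {}) {
  return candidates
    .filter(c => c.score >= minConfidence) // drop low-confidence candidates (new array)
    .sort((a, b) => b.score - a.score) // highest score first
    .slice(0, maxResults) // keep at most maxResults faces
}

const candidates = [{ score: 0.3 }, { score: 0.9 }, { score: 0.85 }, { score: 0.7 }]
console.log(applyScoreOptions(candidates, { minConfidence: 0.8 }).map(c => c.score)) // [ 0.9, 0.85 ]
console.log(applyScoreOptions(candidates, { maxResults: 2 }).map(c => c.score)) // [ 0.9, 0.85 ]
```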

### TinyFaceDetectorOptions

``` javascript