node-moving-things-tracker

node-moving-things-tracker is a JavaScript implementation of a "tracker by detections" for real-time multiple object tracking (MOT) in Node.js and browsers.

Commissioned by moovel lab for Beat the Traffic X and the Open Data Cam project.

Problem

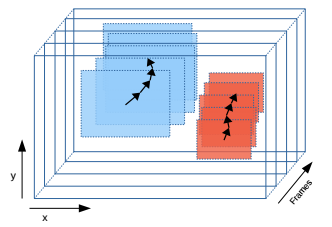

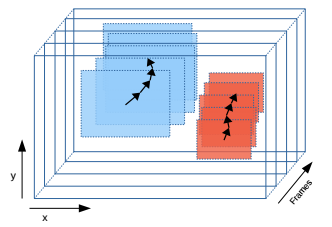

How can multiple moving things be tracked persistently from frame-by-frame object detection inputs? How can a unique identifier be assigned to frame-by-frame object detection results?

Object detection frameworks often have no memory of their detection results over time. For example, Yolo provides an array of detection results for every frame in the form [[x,y,w,h,confidence,name] ...]. Note that there is no unique ID to identify the same detected object in future frames.

Detections Input

Raw Yolo detection results

Tracker Output

Yolo detection results enhanced with unique IDs after being processed by node-moving-things-tracker. Note that every object has now been assigned a unique ID.

Installation

npm install -g node-moving-things-tracker

npm install --save node-moving-things-tracker

Usage

As a node module

const Tracker = require('node-moving-things-tracker').Tracker;

Tracker.updateTrackedItemsWithNewFrame(detectionScaledOfThisFrame, currentFrame);

const trackerDataForThisFrame = Tracker.getJSONOfTrackedItems();
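As a rough illustration, the two calls above could be wired into a per-frame loop along these lines. The helper name `trackAllFrames` and the shape of the `frames` array are assumptions made for this sketch, not part of the module; the tracker object is passed in as a parameter so that the real `Tracker` or a stand-in can be used.

```javascript
// Hypothetical helper (not part of the module): feeds each frame's detections
// to a tracker object exposing updateTrackedItemsWithNewFrame() and
// getJSONOfTrackedItems(), and collects the tracker output per frame number.
function trackAllFrames(tracker, frames) {
  const resultsByFrame = {};
  for (const { frame, detections } of frames) {
    tracker.updateTrackedItemsWithNewFrame(detections, frame);
    resultsByFrame[frame] = tracker.getJSONOfTrackedItems();
  }
  return resultsByFrame;
}

// Possible usage, assuming the module is installed:
// const Tracker = require('node-moving-things-tracker').Tracker;
// const results = trackAllFrames(Tracker, frames);
```

The result is keyed by frame number, which mirrors the layout of the tracker output shown later in this document.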

Example: Opendatacam.js of the Open Data Cam project.

Command line usage

node-moving-things-tracker takes as input a txt file generated by node-yolo and outputs a tracker.json file that assigns unique IDs to the YOLO detection bounding boxes.

The detections input file can also be generated by an object detection algorithm other than YOLO; it just needs to respect the same format.

NOTE: usage is customized for the use case of Beat the Traffic X.

node-moving-things-tracker --input PATH_TO_YOLO_DETECTIONS.txt

Detections Input and tracker output format

See example here:

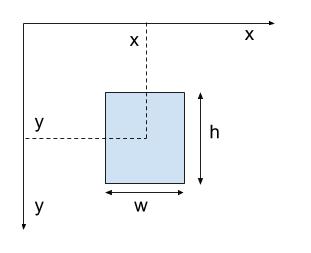

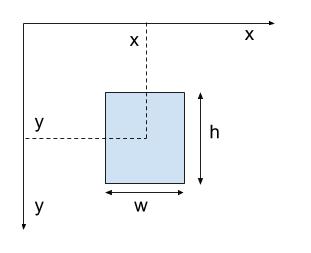

Coordinate space:

Detections Input

rawdetections.txt

{"frame":0,"detections":[{"x":699,"y":99,"w":32,"h":19,"confidence":34,"name":"car"},{"x":285,"y":170,"w":40,"h":32,"confidence":26,"name":"car"},{"x":259,"y":178,"w":75,"h":46,"confidence":42,"name":"car"},{"x":39,"y":222,"w":91,"h":52,"confidence":61,"name":"car"},{"x":148,"y":199,"w":123,"h":55,"confidence":53,"name":"car"}]}

{"frame":1,"detections":[{"x":699,"y":99,"w":32,"h":19,"confidence":31,"name":"car"},{"x":694,"y":116,"w":34,"h":23,"confidence":25,"name":"car"},{"x":285,"y":170,"w":40,"h":32,"confidence":27,"name":"car"},{"x":259,"y":178,"w":75,"h":46,"confidence":42,"name":"car"},{"x":39,"y":222,"w":91,"h":52,"confidence":61,"name":"car"},{"x":148,"y":199,"w":123,"h":55,"confidence":52,"name":"car"}]}
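The sample above looks like one JSON object per line. Under that assumption, a file in this format could be read into an array of frames with a small helper such as the following; `parseDetectionsText` is a hypothetical name used only for this sketch.

```javascript
// Hypothetical helper: parses newline-delimited JSON (one frame object per
// line), ignoring blank lines and surrounding whitespace.
function parseDetectionsText(text) {
  return text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.length > 0)
    .map((line) => JSON.parse(line));
}

// Possible usage:
// const fs = require('fs');
// const frames = parseDetectionsText(fs.readFileSync('rawdetections.txt', 'utf8'));
```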

Tracker Output

Normal mode:

{
  "43": [
    {
      "id": 0,
      "x": 628,
      "y": 144,
      "w": 48,
      "h": 29,
      "confidence": 80,
      "name": "car",
      "isZombie": false
    },
    {
      "id": 1,
      "x": 620,
      "y": 154,
      "w": 50,
      "h": 35,
      "confidence": 80,
      "name": "car",
      "isZombie": true
    }
  ]
}
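The output is keyed by frame number, and each tracked item carries an `isZombie` flag. This document does not define the flag; assuming it marks an item that the tracker is keeping alive without a matching detection in that frame, a consumer might want to keep only the non-zombie items. The helper name `nonZombieItems` is hypothetical.

```javascript
// Hypothetical helper: returns the items of one frame whose isZombie flag is
// false. Assumption: isZombie marks items carried over by the tracker without
// a matching detection in that frame.
function nonZombieItems(trackerOutput, frameNumber) {
  const items = trackerOutput[String(frameNumber)] || [];
  return items.filter((item) => !item.isZombie);
}
```

Applied to the sample output above, `nonZombieItems(output, 43)` would keep only the item with `id` 0.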

Debug mode:

node-moving-things-tracker --debug --input PATH_TO_YOLO_DETECTIONS.txt

{
  "43": [
    {
      "id": "900e36a2-cbc7-427c-83a9-819d072391f0",
      "idDisplay": 0,
      "x": 628,
      "y": 144,
      "w": 48,
      "h": 29,
      "confidence": 80,
      "name": "car",
      "isZombie": false,
      "zombieOpacity": 1,
      "appearFrame": 35,
      "disappearFrame": null
    },
    {
      "id": "38939c38-c977-40a9-ad6a-3bb916c37fa1",
      "idDisplay": 1,
      "x": 620,
      "y": 154,
      "w": 50,
      "h": 35,
      "confidence": 80,
      "name": "car",
      "isZombie": false,
      "zombieOpacity": 1,
      "appearFrame": 43,
      "disappearFrame": null
    }
  ]
}

Run on opendatacam/darknet detection data

Usage with detection data generated by opendatacam/darknet (https://github.com/opendatacam/darknet/pull/2)

node-moving-things-tracker --mode opendatacam-darknet --input detectionsFromDarknet.json

Example detectionsFromDarknet.json file

[
  {
    "frame_id":0,
    "objects": [
      {"class_id":5, "name":"bus", "relative_coordinates":{"center_x":0.394363, "center_y":0.277938, "width":0.032596, "height":0.106158}, "confidence":0.414157},
      {"class_id":5, "name":"bus", "relative_coordinates":{"center_x":0.363555, "center_y":0.285264, "width":0.062474, "height":0.133008}, "confidence":0.402736}
    ]
  },
  {
    "frame_id":0,
    "objects": [
      {"class_id":5, "name":"bus", "relative_coordinates":{"center_x":0.394363, "center_y":0.277938, "width":0.032596, "height":0.106158}, "confidence":0.414157},
      {"class_id":5, "name":"bus", "relative_coordinates":{"center_x":0.363555, "center_y":0.285264, "width":0.062474, "height":0.133008}, "confidence":0.402736}
    ]
  }
]
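The darknet format stores box centers and sizes relative to the image (values between 0 and 1), whereas the YOLO text format shown earlier uses pixel values. The following sketch shows one way such an entry could be scaled to pixels. It is not the conversion performed by `--mode opendatacam-darknet`; the helper name, the `imageWidth` / `imageHeight` parameters, and the choice to keep the box center as `(x, y)` are all assumptions, and `confidence` is passed through unchanged.

```javascript
// Hypothetical helper: scales one darknet frame entry to pixel units.
// Assumptions: (x, y) stays the box center; confidence is left as-is.
function darknetFrameToPixels(frameEntry, imageWidth, imageHeight) {
  return {
    frame: frameEntry.frame_id,
    detections: frameEntry.objects.map((obj) => {
      const rel = obj.relative_coordinates;
      return {
        x: Math.round(rel.center_x * imageWidth),
        y: Math.round(rel.center_y * imageHeight),
        w: Math.round(rel.width * imageWidth),
        h: Math.round(rel.height * imageHeight),
        confidence: obj.confidence,
        name: obj.name
      };
    })
  };
}
```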

Run on MOT Challenge dataset

node-moving-things-tracker --mode motchallenge --input PATH_TO_MOT_DETECTIONS.txt

Benchmark

How to benchmark against MOT Challenge: https://github.com/opendatacam/node-moving-things-tracker/blob/master/documentation/BENCHMARK.md

Limitations

Parameters cannot yet be tweaked from the command line; the tracker is currently optimized for tracking cars in traffic videos.

How does it work

Based on V-IOU tracker: https://github.com/bochinski/iou-tracker/ , paper: http://elvera.nue.tu-berlin.de/files/1547Bochinski2018.pdf

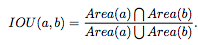

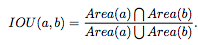

To decide whether an object is the same from one frame to the next, we measure how much its detections in the two frames overlap.

This is done by computing their intersection over union (IoU): the area where the two boxes overlap divided by the area of the combined region.
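For two boxes A and B, the intersection over union is area(A ∩ B) / area(A ∪ B), which ranges from 0 (no overlap) to 1 (identical boxes). A minimal sketch of that computation follows. It is not taken from the library's source, and it assumes that `(x, y)` is the center of the box; if your data uses the top-left corner, the edge calculations need to be adjusted.

```javascript
// Illustrative IoU for boxes of the form { x, y, w, h }.
// Assumption: (x, y) is the box center, so edges lie at x ± w/2 and y ± h/2.
function iou(a, b) {
  const overlapX = Math.max(
    0,
    Math.min(a.x + a.w / 2, b.x + b.w / 2) - Math.max(a.x - a.w / 2, b.x - b.w / 2)
  );
  const overlapY = Math.max(
    0,
    Math.min(a.y + a.h / 2, b.y + b.h / 2) - Math.max(a.y - a.h / 2, b.y - b.h / 2)
  );
  const intersection = overlapX * overlapY;
  const union = a.w * a.h + b.w * b.h - intersection;
  // Guard against degenerate boxes with zero area.
  return union > 0 ? intersection / union : 0;
}
```

A tracker of this kind would typically treat a detection as the same object when its IoU with a tracked box exceeds some threshold; the threshold value is a tuning choice and is not specified here.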

On top of this, we added a prediction mechanism for the next frame, based on the velocity / acceleration vector, to avoid ID reassignment when an object is missed for only a few frames.
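To illustrate the idea of predicting the next position, here is a deliberately simplified sketch that extrapolates with constant velocity from the last two known positions. It is not the library's implementation: acceleration is omitted, and the function name `predictNextBox` is hypothetical.

```javascript
// Simplified constant-velocity prediction (acceleration is ignored).
// Takes the boxes from the two most recent frames and returns the
// extrapolated box for the next frame, keeping the current width and height.
function predictNextBox(previous, current) {
  const velocityX = current.x - previous.x;
  const velocityY = current.y - previous.y;
  return {
    ...current,
    x: current.x + velocityX,
    y: current.y + velocityY
  };
}
```

When a detection is missing for a frame, the predicted box can stand in for it, so that the object can still be matched by overlap once it is detected again.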

License

MIT License

Acknowledgments

node-moving-things-tracker is based on the ideas and work of the following people. References are listed chronologically, in the order we encountered them.