audio-separator-fork

Easy-to-use vocal separation from the CLI or as a Python package, using the amazing MDX-Net models from UVR trained by @Anjok07

Audio Separator is a Python package that allows you to separate an audio file into two stems, primary and secondary, using a model in the ONNX format trained by @Anjok07 for use with UVR (https://github.com/Anjok07/ultimatevocalremovergui).

The primary stem typically contains the instrumental part of the audio, while the secondary stem contains the vocals, but in some models this is reversed.

You can install Audio Separator using pip:

pip install audio-separator

Unfortunately, the way Torch and ONNX Runtime are published means that the package published to PyPI with Poetry does not install the correct platform-specific dependencies for CUDA use.

As such, if you want to use audio-separator with a CUDA-capable Nvidia GPU, you need to reinstall them directly, allowing pip to calculate the right versions for your platform:

pip uninstall torch onnxruntime

pip cache purge

pip install torch "optimum[onnxruntime-gpu]"

This should get you set up to run audio-separator with CUDA acceleration, using the --use_cuda argument.
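To check whether the installed packages can actually see the GPU, a small diagnostic along these lines may help. This is a sketch, not part of audio-separator: the helper name cuda_status is hypothetical, and it only relies on torch.cuda.is_available() and onnxruntime.get_available_providers(), reporting None for any package that is not installed.

```python
import importlib.util


def cuda_status():
    """Report whether Torch and ONNX Runtime can use CUDA (None = not installed)."""
    status = {"torch_cuda": None, "onnxruntime_cuda": None}

    # Only import a package if it is installed, so the check never crashes.
    if importlib.util.find_spec("torch") is not None:
        import torch

        status["torch_cuda"] = torch.cuda.is_available()

    if importlib.util.find_spec("onnxruntime") is not None:
        import onnxruntime

        providers = onnxruntime.get_available_providers()
        status["onnxruntime_cuda"] = "CUDAExecutionProvider" in providers

    return status


if __name__ == "__main__":
    print(cuda_status())
```

If either value is False after reinstalling, the CPU-only build of that package is probably still the one in use.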

Note: if anyone has a way to make this cleaner so we can support both CPU and CUDA transcodes without separate installation processes, please let me know or submit a PR!

You can use Audio Separator via the command line:

audio-separator [audio_file] --model_name [model_name]

audio_file: The path to the audio file to be separated. Supports all common formats (WAV, MP3, FLAC, M4A, etc.)

log_level: (Optional) Logging level, e.g. info, debug, warning. Default: INFO

log_formatter: (Optional) The log format. Default: '%(asctime)s - %(name)s - %(levelname)s - %(message)s'

model_name: (Optional) The name of the model to use for separation. Default: UVR_MDXNET_KARA_2

model_file_dir: (Optional) Directory to cache model files in. Default: /tmp/audio-separator-models/

output_dir: (Optional) The directory where the separated files will be saved. If not specified, outputs to current dir.

use_cuda: (Optional) Flag to use Nvidia GPU via CUDA for separation if available. Default: False

denoise_enabled: (Optional) Flag to enable or disable denoising as part of the separation process. Default: True

normalization_enabled: (Optional) Flag to enable or disable normalization as part of the separation process. Default: False

output_format: (Optional) Format to encode output files, any common format (WAV, MP3, FLAC, M4A, etc.). Default: WAV

single_stem: (Optional) Output only single stem, either instrumental or vocals. Example: --single_stem=instrumental
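When calling the CLI from a script, it can be convenient to assemble the argument list programmatically. The sketch below is illustrative only: build_command is a hypothetical helper, and the --output_dir and --output_format spellings are assumed from the option names listed above (only --model_name, --use_cuda and --single_stem appear verbatim in this README), so check them against the CLI's own help output before relying on them.

```python
import shlex


def build_command(audio_file, model_name=None, output_dir=None,
                  output_format=None, single_stem=None, use_cuda=False):
    """Build an audio-separator argument list from optional settings."""
    command = ["audio-separator", str(audio_file)]
    if model_name:
        command += ["--model_name", model_name]
    if output_dir:
        # Assumed flag spelling, derived from the option name "output_dir".
        command += ["--output_dir", str(output_dir)]
    if output_format:
        # Assumed flag spelling, derived from the option name "output_format".
        command += ["--output_format", output_format]
    if single_stem:
        command.append(f"--single_stem={single_stem}")
    if use_cuda:
        command.append("--use_cuda")
    return command


command = build_command(
    "/path/to/your/audio.wav",
    model_name="UVR_MDXNET_KARA_2",
    single_stem="instrumental",
    use_cuda=True,
)
# Print a shell-safe version of the command for inspection.
print(shlex.join(command))
```

The resulting list can be passed to subprocess.run(command, check=True) to run the separation.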

Example:

audio-separator /path/to/your/audio.wav --model_name UVR_MDXNET_KARA_2

This command will process the file and generate two new files in the current directory, one for each stem.

You can also use Audio Separator in your own Python project:

from audio_separator import Separator

# Initialize the Separator with the audio file and model name

separator = Separator('/path/to/your/audio.m4a', model_name='UVR_MDXNET_KARA_2')

# Perform the separation

primary_stem_path, secondary_stem_path = separator.separate()

print(f'Primary stem saved at {primary_stem_path}')

print(f'Secondary stem saved at {secondary_stem_path}')
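To separate every audio file in a folder, the same calls can be wrapped in a loop. This is a minimal sketch that assumes the Separator constructor and separate() method behave exactly as in the example above; find_audio_files, separate_all and the extension list are hypothetical names chosen for illustration.

```python
from pathlib import Path

# Extensions taken from the formats named in this README; extend as needed.
AUDIO_EXTENSIONS = {".wav", ".mp3", ".flac", ".m4a"}


def find_audio_files(directory):
    """Return the audio files directly inside `directory`, sorted by path."""
    return sorted(
        path
        for path in Path(directory).iterdir()
        if path.is_file() and path.suffix.lower() in AUDIO_EXTENSIONS
    )


def separate_all(directory, model_name="UVR_MDXNET_KARA_2"):
    """Separate each audio file and map its name to the two stem paths."""
    # Imported here so the file-discovery helper works without the package.
    from audio_separator import Separator

    results = {}
    for audio_path in find_audio_files(directory):
        separator = Separator(str(audio_path), model_name=model_name)
        results[audio_path.name] = separator.separate()
    return results
```

Calling separate_all('/path/to/your/folder') would then return a dictionary of (primary, secondary) stem paths keyed by input file name.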

Python >= 3.9

Libraries: onnx, onnxruntime, numpy, soundfile, librosa, torch, wget, six

This project uses Poetry for dependency management and packaging. Follow these steps to set up a local development environment:

Clone the repository to your local machine:

git clone https://github.com/YOUR_USERNAME/audio-separator.git

cd audio-separator

Replace YOUR_USERNAME with your GitHub username if you've forked the repository, or use the main repository URL if you have the permissions.

Run the following command to install the project dependencies:

poetry install

To activate the virtual environment, use the following command:

poetry shell

You can run the CLI command directly within the virtual environment. For example:

audio-separator path/to/your/audio-file.wav

Once you are done with your development work, you can exit the virtual environment by simply typing:

exit

To build the package for distribution, use the following command:

poetry build

This will generate the distribution packages in the dist directory - but for now only @beveradb will be able to publish to PyPI.

Contributions are very much welcome! Please fork the repository and submit a pull request with your changes, and I'll try to review, merge and publish promptly!

This project is licensed under the MIT License.

For questions or feedback, please raise an issue or reach out to @beveradb (Andrew Beveridge) directly.

FAQs

Easy to use vocal separation on CLI or as a python package, using the amazing MDX-Net models from UVR trained by @Anjok07

We found that audio-separator-fork demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

Security News

Open source dashboard CNAPulse tracks CVE Numbering Authorities’ publishing activity, highlighting trends and transparency across the CVE ecosystem.

Product

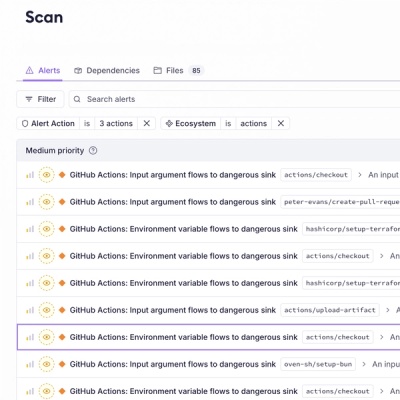

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.