Security News

The Nightmare Before Deployment

Season’s greetings from Socket, and here’s to a calm end of year: clean dependencies, boring pipelines, no surprises.

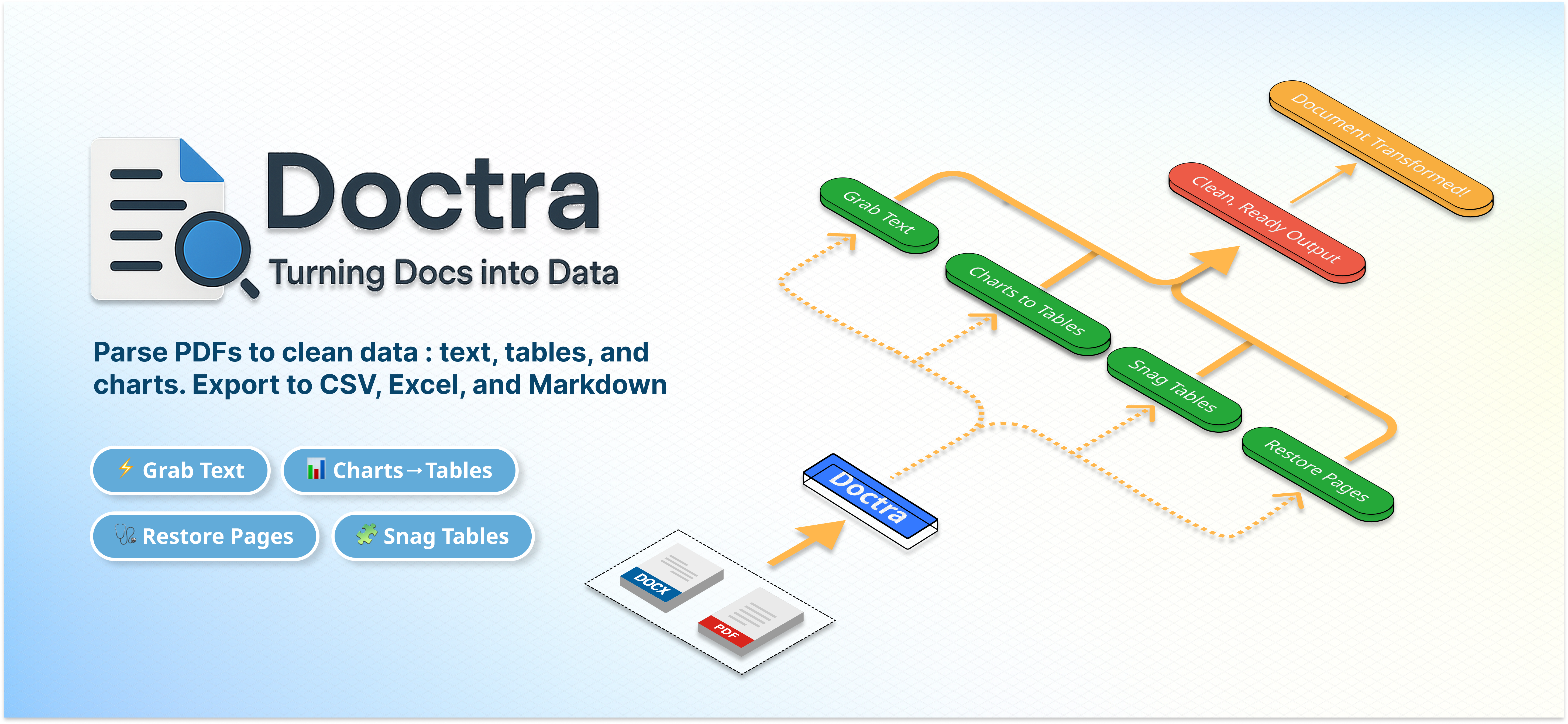

doctra

pip install doctra

git clone https://github.com/AdemBoukhris457/Doctra.git

cd Doctra

pip install .

Doctra requires Poppler for PDF processing. Install it for your operating system:

For Ubuntu/Debian:

sudo apt install poppler-utils

For macOS (Homebrew):

brew install poppler

For Windows, download and install Poppler for Windows, or use conda:

conda install -c conda-forge poppler

In a notebook environment such as Google Colab:

!sudo apt install poppler-utils
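Before parsing, it can help to confirm that Poppler is actually reachable. The sketch below assumes that finding `pdftoppm` (one of the command-line tools shipped with Poppler) on the PATH is a good enough signal; `poppler_available` is a hypothetical helper and not part of Doctra.

```python
import shutil
from typing import Callable, Optional


def poppler_available(which: Callable[[str], Optional[str]] = shutil.which) -> bool:
    """Return True if Poppler's `pdftoppm` binary can be found on the PATH.

    The `which` argument exists only so the lookup can be replaced in tests.
    """
    return which("pdftoppm") is not None


print("Poppler found" if poppler_available() else "Poppler not found on PATH")
```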

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

# Initialize the parser

parser = StructuredPDFParser()

# Parse a PDF document

parser.parse("path/to/your/document.pdf")

The StructuredPDFParser is a comprehensive PDF parser that extracts all types of content from PDF documents. It processes PDFs through layout detection, extracts text using OCR, saves images for visual elements, and optionally converts charts/tables to structured data using Vision Language Models (VLM).

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

# Basic parser without VLM (uses default PyTesseract OCR engine)

parser = StructuredPDFParser()

# Parser with VLM for structured data extraction

from doctra.engines.vlm.service import VLMStructuredExtractor

# Initialize VLM engine

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai", # or "gemini", "anthropic", "openrouter", "qianfan", "ollama"

api_key="your_api_key_here"

)

# Pass VLM engine to parser

parser = StructuredPDFParser(vlm=vlm_engine)

# Parse document

parser.parse("document.pdf")
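The examples pass the API key as a string literal. One common alternative is to read it from the environment so the key stays out of source control. This is a minimal sketch: the variable name `DOCTRA_VLM_API_KEY` and the helper `load_api_key` are assumptions made for illustration, not something Doctra defines.

```python
import os


def load_api_key(env_var: str = "DOCTRA_VLM_API_KEY") -> str:
    """Read the VLM API key from the environment (the variable name is hypothetical)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before creating the VLM engine"
        )
    return key
```

The returned value can then be passed as `api_key=load_api_key()` when constructing `VLMStructuredExtractor`.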

Doctra uses a dependency injection pattern for OCR engines. You initialize the OCR engine externally and pass it to the parser:

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

from doctra.parsers.enhanced_pdf_parser import EnhancedPDFParser

from doctra.engines.ocr import PytesseractOCREngine, PaddleOCREngine

# Option 1: Use default PyTesseract (automatic if ocr_engine=None)

parser = StructuredPDFParser() # Creates a default PytesseractOCREngine internally

# Option 2: Explicitly configure PyTesseract

tesseract_ocr = PytesseractOCREngine(

lang="eng", # Language code

psm=4, # Page segmentation mode

oem=3, # OCR engine mode

extra_config="" # Additional Tesseract config

)

parser = StructuredPDFParser(ocr_engine=tesseract_ocr)

# Option 3: Use PaddleOCR for better accuracy

paddle_ocr = PaddleOCREngine(

device="gpu", # "gpu" or "cpu"

use_doc_orientation_classify=False, # Document orientation detection

use_doc_unwarping=False, # Text image rectification

use_textline_orientation=False # Text line orientation

)

parser = StructuredPDFParser(ocr_engine=paddle_ocr)

# Option 4: Reuse OCR engine across multiple parsers

shared_ocr = PytesseractOCREngine(lang="eng", psm=6, oem=3)

parser1 = StructuredPDFParser(ocr_engine=shared_ocr)

parser2 = EnhancedPDFParser(ocr_engine=shared_ocr) # Reuse same instance
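For readers unfamiliar with dependency injection, the idea can be shown without Doctra at all. The sketch below uses only the standard library; `OCREngine`, `UppercaseOCR`, and `ToyParser` are hypothetical stand-ins invented for this illustration and do not reflect Doctra's internal classes.

```python
from typing import Protocol


class OCREngine(Protocol):
    """Hypothetical interface: any object with a recognize() method qualifies."""

    def recognize(self, image: bytes) -> str: ...


class UppercaseOCR:
    """Stand-in engine used only for this illustration."""

    def recognize(self, image: bytes) -> str:
        return image.decode("utf-8").upper()


class ToyParser:
    """Receives its engine from the caller instead of constructing one itself."""

    def __init__(self, ocr_engine: OCREngine) -> None:
        self.ocr_engine = ocr_engine

    def parse(self, image: bytes) -> str:
        return self.ocr_engine.recognize(image)


shared = UppercaseOCR()
parser_a = ToyParser(ocr_engine=shared)
parser_b = ToyParser(ocr_engine=shared)  # same instance, no second initialization
```

Because the parser only depends on the engine's interface, a different engine can be swapped in, or one instance shared, without changing the parser.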

Doctra uses the same dependency injection pattern for VLM engines. You initialize the VLM engine externally and pass it to the parser:

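from doctra.parsers.structured_pdf_parser import StructuredPDFParser

from doctra.parsers.enhanced_pdf_parser import EnhancedPDFParser

from doctra.parsers.table_chart_extractor import ChartTablePDFParser

from doctra.engines.vlm.service import VLMStructuredExtractor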

# Option 1: No VLM (default)

parser = StructuredPDFParser() # VLM processing disabled

# Option 2: Initialize VLM engine and pass to parser

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai", # or "gemini", "anthropic", "openrouter", "qianfan", "ollama"

vlm_model="gpt-5", # Optional, uses default if None

api_key="your_api_key"

)

parser = StructuredPDFParser(vlm=vlm_engine)

# Option 3: Reuse VLM engine across multiple parsers

shared_vlm = VLMStructuredExtractor(

vlm_provider="gemini",

api_key="your_api_key"

)

parser1 = StructuredPDFParser(vlm=shared_vlm)

parser2 = EnhancedPDFParser(vlm=shared_vlm) # Reuse same instance

parser3 = ChartTablePDFParser(vlm=shared_vlm) # Reuse same instance

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

from doctra.engines.ocr import PytesseractOCREngine, PaddleOCREngine

# Option 1: Using PyTesseract (default)

ocr_engine = PytesseractOCREngine(

lang="eng",

psm=4,

oem=3,

extra_config=""

)

# Initialize VLM engine

from doctra.engines.vlm.service import VLMStructuredExtractor

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai",

vlm_model="gpt-5", # Optional, uses default if None

api_key="your_api_key"

)

parser = StructuredPDFParser(

# VLM Engine (pass the initialized engine)

vlm=vlm_engine, # or None to disable VLM

# Layout Detection Settings

layout_model_name="PP-DocLayout_plus-L",

dpi=200,

min_score=0.0,

# OCR Engine (pass the initialized engine)

ocr_engine=ocr_engine, # or None for default PyTesseract

# Output Settings

box_separator="\n"

)

# Option 2: Using PaddleOCR for better accuracy

paddle_ocr = PaddleOCREngine(

device="gpu", # Use "cpu" if no GPU available

use_doc_orientation_classify=False,

use_doc_unwarping=False,

use_textline_orientation=False

)

parser = StructuredPDFParser(

ocr_engine=paddle_ocr,

# ... other settings

)

The EnhancedPDFParser extends the StructuredPDFParser with advanced image restoration capabilities using DocRes. This parser is ideal for processing scanned documents, low-quality PDFs, or documents with visual distortions that need enhancement before parsing.

from doctra.parsers.enhanced_pdf_parser import EnhancedPDFParser

# Basic enhanced parser with image restoration

parser = EnhancedPDFParser(

use_image_restoration=True,

restoration_task="appearance" # Default restoration task

)

# Parse document with enhancement

parser.parse("scanned_document.pdf")

from doctra.parsers.enhanced_pdf_parser import EnhancedPDFParser

from doctra.engines.ocr import PytesseractOCREngine, PaddleOCREngine

# Initialize OCR engine (PyTesseract or PaddleOCR)

ocr_engine = PytesseractOCREngine(

lang="eng",

psm=6,

oem=3

)

# Initialize VLM engine

from doctra.engines.vlm.service import VLMStructuredExtractor

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai",

vlm_model="gpt-4-vision", # Optional, uses default if None

api_key="your_api_key"

)

parser = EnhancedPDFParser(

# Image Restoration Settings

use_image_restoration=True,

restoration_task="dewarping", # Correct perspective distortion

restoration_device="cuda", # Use GPU for faster processing

restoration_dpi=300, # Higher DPI for better quality

# VLM Engine (pass the initialized engine)

vlm=vlm_engine, # or None to disable VLM

# Layout Detection Settings

layout_model_name="PP-DocLayout_plus-L",

dpi=200,

min_score=0.5,

# OCR Engine (pass the initialized engine)

ocr_engine=ocr_engine, # or None for default PyTesseract

)

| Task | Description | Best For |
|---|---|---|
| appearance | General appearance enhancement | Most documents (default) |
| dewarping | Correct perspective distortion | Scanned documents with perspective issues |
| deshadowing | Remove shadows and lighting artifacts | Documents with shadow problems |
| deblurring | Reduce blur and improve sharpness | Blurry or low-quality scans |
| binarization | Convert to black and white | Documents needing clean binarization |
| end2end | Complete restoration pipeline | Severely degraded documents |
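Since the restoration task is passed as a plain string, a typo would only surface once the parser runs. A small pre-flight check against the task names listed in the table is sketched below; `RESTORATION_TASKS` and `check_restoration_task` are hypothetical helpers for illustration, not part of Doctra.

```python
# Task names and descriptions copied from the table above.
RESTORATION_TASKS = {
    "appearance": "General appearance enhancement",
    "dewarping": "Correct perspective distortion",
    "deshadowing": "Remove shadows and lighting artifacts",
    "deblurring": "Reduce blur and improve sharpness",
    "binarization": "Convert to black and white",
    "end2end": "Complete restoration pipeline",
}


def check_restoration_task(task: str) -> str:
    """Return the task name unchanged, or raise ValueError listing the valid names."""
    if task not in RESTORATION_TASKS:
        valid = ", ".join(sorted(RESTORATION_TASKS))
        raise ValueError(f"Unknown restoration task {task!r}; expected one of: {valid}")
    return task
```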

The ChartTablePDFParser is a specialized parser focused specifically on extracting charts and tables from PDF documents. It's optimized for scenarios where you only need these specific elements, providing faster processing and more targeted output.

from doctra.parsers.table_chart_extractor import ChartTablePDFParser

# Extract both charts and tables

parser = ChartTablePDFParser(

extract_charts=True,

extract_tables=True

)

# Extract only charts

parser = ChartTablePDFParser(

extract_charts=True,

extract_tables=False

)

# Parse with custom output directory

parser.parse("document.pdf", output_base_dir="my_outputs")

# Initialize VLM engine

from doctra.engines.vlm.service import VLMStructuredExtractor

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai",

vlm_model="gpt-5", # Optional, uses default if None

api_key="your_api_key"

)

parser = ChartTablePDFParser(

# Extraction Settings

extract_charts=True,

extract_tables=True,

# VLM Engine (pass the initialized engine)

vlm=vlm_engine, # or None to disable VLM

# Layout Detection Settings

layout_model_name="PP-DocLayout_plus-L",

dpi=200,

min_score=0.0

)

The PaddleOCRVLPDFParser uses PaddleOCRVL (Vision-Language Model) for end-to-end document parsing. It combines PaddleOCRVL's advanced document understanding capabilities with DocRes image restoration and split table merging, providing a comprehensive solution for complex document processing.

Before using PaddleOCRVLPDFParser, install the required dependencies:

pip install -U "paddleocr[doc-parser]"

For Linux systems:

python -m pip install https://paddle-whl.bj.bcebos.com/nightly/cu126/safetensors/safetensors-0.6.2.dev0-cp38-abi3-linux_x86_64.whl

For Windows systems:

python -m pip install https://xly-devops.cdn.bcebos.com/safetensors-nightly/safetensors-0.6.2.dev0-cp38-abi3-win_amd64.whl
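If the installation is scripted, the choice between the two wheels can be made from the running platform. The sketch below only selects a URL and does not install anything; `safetensors_wheel_url` is a hypothetical helper, and the two URLs are the ones given above.

```python
import platform

# Wheel URLs copied from the install commands above, keyed by platform.system().
SAFETENSORS_WHEELS = {
    "Linux": "https://paddle-whl.bj.bcebos.com/nightly/cu126/safetensors/safetensors-0.6.2.dev0-cp38-abi3-linux_x86_64.whl",
    "Windows": "https://xly-devops.cdn.bcebos.com/safetensors-nightly/safetensors-0.6.2.dev0-cp38-abi3-win_amd64.whl",
}


def safetensors_wheel_url(system: str = "") -> str:
    """Return the wheel URL for the given (or current) operating system."""
    system = system or platform.system()
    try:
        return SAFETENSORS_WHEELS[system]
    except KeyError:
        raise RuntimeError(f"No safetensors wheel listed for {system!r}") from None
```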

from doctra import PaddleOCRVLPDFParser

# Basic parser with default settings

parser = PaddleOCRVLPDFParser(

use_image_restoration=True, # Enable DocRes restoration

use_chart_recognition=True, # Enable chart recognition

merge_split_tables=True, # Enable split table merging

device="gpu" # Use GPU for processing

)

# Parse a PDF document

parser.parse("document.pdf")

from doctra import PaddleOCRVLPDFParser

parser = PaddleOCRVLPDFParser(

# DocRes Image Restoration Settings

use_image_restoration=True,

restoration_task="appearance", # Options: appearance, dewarping, deshadowing, deblurring, binarization, end2end

restoration_device="cuda", # or "cpu" or None for auto-detect

restoration_dpi=300, # DPI for restoration processing

# PaddleOCRVL Settings

use_chart_recognition=True, # Enable chart recognition and extraction

use_doc_orientation_classify=True, # Enable document orientation classification

use_doc_unwarping=True, # Enable document unwarping

use_layout_detection=True, # Enable layout detection

device="gpu", # "gpu" or "cpu"

# Split Table Merging Settings

merge_split_tables=True, # Enable split table detection and merging

bottom_threshold_ratio=0.20, # Ratio for "too close to bottom" detection

top_threshold_ratio=0.15, # Ratio for "too close to top" detection

max_gap_ratio=0.25, # Maximum allowed gap between tables

column_alignment_tolerance=10.0, # Pixel tolerance for column alignment

min_merge_confidence=0.65 # Minimum confidence score for merging

)

# Parse with custom output directory

parser.parse("document.pdf", output_dir="custom_output")
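The split-table thresholds are easier to reason about with a concrete check. The sketch below is only a guess at the kind of test such parameters could drive, based on their comments: a table ending near the bottom of one page, a table starting near the top of the next, and matching column positions. It is not Doctra's implementation; `TableBox` and `looks_like_split_table` are hypothetical, and `max_gap_ratio` and `min_merge_confidence` are left out because the source does not say how they are applied.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TableBox:
    """Table bounding box in page pixels, plus the x-positions of its columns."""

    top: float
    bottom: float
    column_xs: List[float]


def looks_like_split_table(
    upper: TableBox,
    lower: TableBox,
    page_height: float,
    bottom_threshold_ratio: float = 0.20,
    top_threshold_ratio: float = 0.15,
    column_alignment_tolerance: float = 10.0,
) -> bool:
    """Guess whether `upper` (end of one page) continues as `lower` (start of the next)."""
    # The upper table must end within the bottom band of its page.
    near_bottom = (page_height - upper.bottom) <= bottom_threshold_ratio * page_height
    # The lower table must start within the top band of the following page.
    near_top = lower.top <= top_threshold_ratio * page_height
    # Both fragments need the same number of columns at roughly the same x-positions.
    same_columns = len(upper.column_xs) == len(lower.column_xs) and all(
        abs(a - b) <= column_alignment_tolerance
        for a, b in zip(upper.column_xs, lower.column_xs)
    )
    return near_bottom and near_top and same_columns
```

Under these assumptions, raising the two ratio thresholds makes merging more permissive, while lowering the pixel tolerance makes it stricter.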

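The parser writes its output to outputs/{document_name}/paddleocr_vl_parse/, including result.md (all content in Markdown), result.html (formatted HTML output), tables.xlsx (all tables and charts in Excel format), and tables.html (structured tables and charts).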

The parser extracts various document elements:

The StructuredDOCXParser is a comprehensive parser for Microsoft Word documents (.docx files) that extracts text, tables, images, and structured content while preserving document formatting and order. It supports VLM integration for enhanced content analysis and structured data extraction.

from doctra.parsers.structured_docx_parser import StructuredDOCXParser

# Basic DOCX parsing

parser = StructuredDOCXParser(

extract_images=True,

preserve_formatting=True,

table_detection=True,

export_excel=True

)

# Parse DOCX document

parser.parse("document.docx")

# Initialize VLM engine

from doctra.engines.vlm.service import VLMStructuredExtractor

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai", # or "gemini", "anthropic", "openrouter", "qianfan", "ollama"

vlm_model="gpt-4-vision", # Optional, uses default if None

api_key="your_api_key"

)

parser = StructuredDOCXParser(

# VLM Engine (pass the initialized engine)

vlm=vlm_engine, # or None to disable VLM

# Processing Options

extract_images=True,

preserve_formatting=True,

table_detection=True,

export_excel=True

)

# Parse with VLM enhancement

parser.parse("document.docx")

When parsing a DOCX document, the parser creates:

outputs/document_name/

├── document.md # Markdown version with all content

├── document.html # HTML version with styling

├── tables.xlsx # Excel file with extracted tables

│ ├── Table of Contents # Summary sheet with hyperlinks

│ ├── Table 1 # Individual table sheets

│ ├── Table 2

│ └── ...

└── images/ # Extracted images

├── image1.png

├── image2.jpg

└── ...
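After a run, the output folder can be inspected with the standard library alone. This sketch assumes the directory layout shown above; `summarize_outputs` is a hypothetical helper, not a Doctra function.

```python
from pathlib import Path
from typing import Dict, List


def summarize_outputs(output_dir: str) -> Dict[str, List[str]]:
    """Group every file under output_dir by its extension (paths are relative)."""
    summary: Dict[str, List[str]] = {}
    for path in sorted(Path(output_dir).rglob("*")):
        if path.is_file():
            key = path.suffix.lower() or "(none)"
            summary.setdefault(key, []).append(path.relative_to(output_dir).as_posix())
    return summary
```

Calling `summarize_outputs("outputs/document_name")` would, for the tree above, group the Markdown, HTML, and Excel files at the top level and the extracted pictures under `images/`.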

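When VLM is enabled, the output additionally includes tables extracted from images by the VLM, an Excel file with a Table of Contents sheet, and smart content display (tables shown in place of images).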

# Basic DOCX parsing

doctra parse-docx document.docx

# With VLM enhancement

doctra parse-docx document.docx --use-vlm --vlm-provider openai --vlm-api-key your_key

# Custom options

doctra parse-docx document.docx \

--extract-images \

--preserve-formatting \

--table-detection \

--export-excel

The DocResEngine provides direct access to DocRes image restoration capabilities. This engine is perfect for standalone image restoration tasks or when you need fine-grained control over the restoration process.

from doctra.engines.image_restoration import DocResEngine

# Initialize DocRes engine

docres = DocResEngine(device="cuda") # or "cpu" or None for auto-detect

# Restore a single image

restored_img, metadata = docres.restore_image(

image="path/to/image.jpg",

task="appearance"

)

# Restore entire PDF

enhanced_pdf = docres.restore_pdf(

pdf_path="document.pdf",

output_path="enhanced_document.pdf",

task="appearance"

)

# Initialize with custom settings

docres = DocResEngine(

device="cuda", # Force GPU usage

use_half_precision=True, # Use half precision for faster processing

model_path="custom/model.pth", # Custom model path (optional)

mbd_path="custom/mbd.pth" # Custom MBD model path (optional)

)

# Process multiple images

images = ["doc1.jpg", "doc2.jpg", "doc3.jpg"]

for img_path in images:
    restored_img, metadata = docres.restore_image(
        image=img_path,
        task="dewarping"
    )
    print(f"Processed {img_path}: {metadata}")

# Batch PDF processing

import os

pdfs = ["report1.pdf", "report2.pdf"]

for pdf_path in pdfs:
    output_path = f"enhanced_{os.path.basename(pdf_path)}"
    docres.restore_pdf(
        pdf_path=pdf_path,
        output_path=output_path,
        task="end2end"  # Complete restoration pipeline
    )

| Task | Description | Use Case |
|---|---|---|
| appearance | General appearance enhancement | Default choice for most documents |
| dewarping | Correct document perspective distortion | Scanned documents with perspective issues |
| deshadowing | Remove shadows and lighting artifacts | Documents with shadow problems |
| deblurring | Reduce blur and improve sharpness | Blurry or low-quality scans |
| binarization | Convert to black and white | Documents needing clean binarization |
| end2end | Complete restoration pipeline | Severely degraded documents |

Doctra provides a comprehensive web interface built with Gradio that makes document processing accessible to non-technical users.

from doctra.ui.app import launch_ui

# Launch the web interface

launch_ui()

Or from command line:

python gradio_app.py

Doctra includes a powerful CLI for batch processing and automation.

# Full document parsing

doctra parse document.pdf

# DOCX document parsing

doctra parse-docx document.docx

# Enhanced parsing with image restoration

doctra enhance document.pdf --restoration-task appearance

# Extract only charts and tables

doctra extract charts document.pdf

doctra extract tables document.pdf

doctra extract both document.pdf --use-vlm

# Visualize layout detection

doctra visualize document.pdf

# Quick document analysis

doctra analyze document.pdf

# System information

doctra info

# Enhanced parsing with custom settings

doctra enhance document.pdf \

--restoration-task dewarping \

--restoration-device cuda \

--use-vlm \

--vlm-provider openai \

--vlm-api-key your_key

# Extract charts with VLM

doctra extract charts document.pdf \

--use-vlm \

--vlm-provider gemini \

--vlm-api-key your_key

# Batch processing

doctra parse *.pdf --output-dir results/
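When the CLI is driven from a script, building the argument list in code avoids shell-quoting problems. The sketch below assembles a `doctra enhance` command using only the flags that appear in the examples above; `build_enhance_command` is a hypothetical helper, and it builds the command without running it.

```python
import shlex
from typing import List, Optional


def build_enhance_command(
    pdf_path: str,
    restoration_task: str = "appearance",
    restoration_device: Optional[str] = None,
    vlm_provider: Optional[str] = None,
    vlm_api_key: Optional[str] = None,
) -> List[str]:
    """Assemble the argv list for `doctra enhance` from the flags shown above."""
    cmd = ["doctra", "enhance", pdf_path, "--restoration-task", restoration_task]
    if restoration_device:
        cmd += ["--restoration-device", restoration_device]
    if vlm_provider:
        # VLM flags are only added when a provider is given.
        cmd += ["--use-vlm", "--vlm-provider", vlm_provider]
        if vlm_api_key:
            cmd += ["--vlm-api-key", vlm_api_key]
    return cmd


cmd = build_enhance_command("document.pdf", "dewarping", "cuda", "openai", "your_key")
print(shlex.join(cmd))  # shell-quoted form, useful for logging
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` once Doctra is installed.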

Doctra provides powerful visualization capabilities to help you understand how the layout detection works and verify the accuracy of element extraction.

The StructuredPDFParser includes a built-in visualization method that displays PDF pages with bounding boxes overlaid on detected elements. This is perfect for:

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

# Initialize parser (OCR engine is optional for visualization)

parser = StructuredPDFParser()

# Display visualization (opens in default image viewer)

parser.display_pages_with_boxes("document.pdf")

# Custom visualization configuration

parser.display_pages_with_boxes(

pdf_path="document.pdf",

num_pages=5, # Number of pages to visualize

cols=3, # Number of columns in grid

page_width=600, # Width of each page in pixels

spacing=30, # Spacing between pages

save_path="layout_visualization.png" # Save to file instead of displaying

)

The visualization shows:

| Parameter | Default | Description |
|---|---|---|
| num_pages | 3 | Number of pages to visualize |
| cols | 2 | Number of columns in grid layout |
| page_width | 800 | Width of each page in pixels |
| spacing | 40 | Spacing between pages in pixels |
| save_path | None | Path to save visualization (if None, displays on screen) |
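The relationship between `num_pages` and `cols` is simple arithmetic. Assuming pages fill the grid row by row (an assumption, since the source does not describe the layout algorithm), the number of rows can be estimated as below; `grid_rows` is a hypothetical helper whose defaults mirror the table.

```python
import math


def grid_rows(num_pages: int = 3, cols: int = 2) -> int:
    """Rows needed to lay out num_pages in a grid that is `cols` columns wide."""
    if num_pages < 1 or cols < 1:
        raise ValueError("num_pages and cols must be positive")
    return math.ceil(num_pages / cols)
```

With the defaults this gives two rows; five pages in three columns also fit in two rows.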

| Notebook | Colab Badge | Description |
|---|---|---|
| 01_doctra_quick_start | | Comprehensive tutorial covering layout detection, content extraction, and multi-format outputs with visual examples |

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

# Initialize parser (uses default PyTesseract OCR engine)

parser = StructuredPDFParser()

# Process document

parser.parse("financial_report.pdf")

# Output will be saved to: outputs/financial_report/

# - Extracted text content

# - Cropped images of figures, charts, and tables

# - Markdown file with all content

from doctra.parsers.enhanced_pdf_parser import EnhancedPDFParser

from doctra.engines.ocr import PytesseractOCREngine

# Initialize OCR engine (optional - defaults to PyTesseract if not provided)

ocr_engine = PytesseractOCREngine(lang="eng", psm=4, oem=3)

# Initialize VLM engine

from doctra.engines.vlm.service import VLMStructuredExtractor

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai",

api_key="your_api_key"

)

# Initialize enhanced parser with image restoration

parser = EnhancedPDFParser(

use_image_restoration=True,

restoration_task="dewarping", # Correct perspective distortion

restoration_device="cuda", # Use GPU for faster processing

ocr_engine=ocr_engine, # Pass OCR engine instance

vlm=vlm_engine # Pass VLM engine instance

)

# Process scanned document with enhancement

parser.parse("scanned_document.pdf")

# Output will include:

# - Enhanced PDF with restored images

# - All standard parsing outputs

# - Improved OCR accuracy due to restoration

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

from doctra.engines.ocr import PaddleOCREngine

# Initialize PaddleOCR engine with custom settings

paddle_ocr = PaddleOCREngine(

device="gpu", # Use "cpu" if no GPU available

use_doc_orientation_classify=False,

use_doc_unwarping=False,

use_textline_orientation=False

)

# Create parser with PaddleOCR engine

parser = StructuredPDFParser(

ocr_engine=paddle_ocr

)

# Process document with PaddleOCR

parser.parse("complex_document.pdf")

# PaddleOCR provides:

# - Higher accuracy for complex documents

# - Better performance on GPU

# - Automatic model management

from doctra.engines.image_restoration import DocResEngine

# Initialize DocRes engine

docres = DocResEngine(device="cuda")

# Restore individual images

restored_img, metadata = docres.restore_image(

image="blurry_document.jpg",

task="deblurring"

)

# Restore entire PDF

docres.restore_pdf(

pdf_path="low_quality.pdf",

output_path="enhanced.pdf",

task="appearance"

)

from doctra.parsers.structured_docx_parser import StructuredDOCXParser

# Basic DOCX parsing

parser = StructuredDOCXParser(

extract_images=True,

preserve_formatting=True,

table_detection=True,

export_excel=True

)

# Parse Word document

parser.parse("report.docx")

# Output will include:

# - Markdown file with all content

# - HTML file with styling

# - Excel file with extracted tables

# - Extracted images in organized folders

from doctra.parsers.structured_docx_parser import StructuredDOCXParser

# Initialize VLM engine

from doctra.engines.vlm.service import VLMStructuredExtractor

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai",

vlm_model="gpt-4-vision", # Optional, uses default if None

api_key="your_api_key"

)

# DOCX parsing with VLM for enhanced analysis

parser = StructuredDOCXParser(

vlm=vlm_engine, # Pass VLM engine instance

extract_images=True,

preserve_formatting=True,

table_detection=True,

export_excel=True

)

# Parse with AI enhancement

parser.parse("financial_report.docx")

# Output will include:

# - All standard outputs

# - VLM-extracted tables from images

# - Enhanced Excel with Table of Contents

# - Smart content display (tables instead of images)

from doctra import PaddleOCRVLPDFParser

# Initialize PaddleOCRVL parser with all features enabled

parser = PaddleOCRVLPDFParser(

use_image_restoration=True, # Enable DocRes restoration

restoration_task="appearance", # Use appearance enhancement

use_chart_recognition=True, # Enable chart recognition

merge_split_tables=True, # Enable split table merging

device="gpu" # Use GPU for faster processing

)

# Parse document - automatically handles all content types

parser.parse("financial_report.pdf")

# Output will be in: outputs/financial_report/paddleocr_vl_parse/

# - result.md: All content in Markdown

# - result.html: Formatted HTML output

# - tables.xlsx: All tables and charts in Excel format

# - tables.html: Structured tables and charts

from doctra.parsers.table_chart_extractor import ChartTablePDFParser

# Initialize VLM engine

from doctra.engines.vlm.service import VLMStructuredExtractor

vlm_engine = VLMStructuredExtractor(

vlm_provider="openai",

api_key="your_api_key"

)

# Initialize parser with VLM

parser = ChartTablePDFParser(

extract_charts=True,

extract_tables=True,

vlm=vlm_engine # Pass VLM engine instance

)

# Process document

parser.parse("data_report.pdf", output_base_dir="extracted_data")

# Output will include:

# - Cropped chart and table images

# - Structured data in Excel format

# - Markdown tables with extracted data

from doctra.ui.app import launch_ui

# Launch the web interface

launch_ui()

# Or build the interface programmatically

from doctra.ui.app import build_demo

demo = build_demo()

demo.launch(share=True) # Share publicly

# DOCX parsing with VLM

doctra parse-docx document.docx \

--use-vlm \

--vlm-provider openai \

--vlm-api-key your_key \

--extract-images \

--export-excel

from doctra.parsers.structured_pdf_parser import StructuredPDFParser

# Initialize parser (OCR engine not needed for visualization)

parser = StructuredPDFParser()

# Create a comprehensive visualization

parser.display_pages_with_boxes(

pdf_path="research_paper.pdf",

num_pages=6, # Visualize first 6 pages

cols=2, # 2 columns layout

page_width=700, # Larger pages for better detail

spacing=50, # More spacing between pages

save_path="research_paper_layout.png" # Save for documentation

)

# For quick preview (displays on screen)

parser.display_pages_with_boxes("document.pdf")

Doctra builds upon several excellent open-source projects:

We thank the developers and contributors of these projects for their valuable work that makes Doctra possible.

Parse, extract, and analyze documents with ease

We found that doctra demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.
