Product

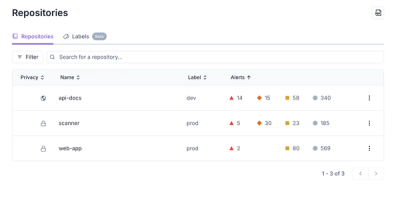

Redesigned Repositories Page: A Faster Way to Prioritize Security Risk

Our redesigned Repositories page adds alert severity, filtering, and tabs for faster triage and clearer insights across all your projects.

etllib

.. contents::

ETLlib provides functionality for munging through and repackaging JSON, TSV and other data for preparation and submission (ETL) to Apache Solr. The library takes advantage of Apache Tika, and is callable from Apache OODT.

Using ETLlib

Installing the ETLlib package makes six commands and one library available on your computer:

repackage command

The repackage command takes an original paged JSON file, strips off the paging information, isolates the identified object type (e.g., "teams" or "journal_entries"), and may perform some basic cleaning and metadata addition using Apache Tika on the fields within the JSON document.

poster command

The poster command lets you post individual reformulated JSON documents to Apache Solr.

repackageandpost command

The repackageandpost command combines repackage and poster. It keeps the repackaged documents in memory and posts them directly to Apache Solr, which obviates the need to store each repackaged JSON document as an intermediate file.

tsvtojson command

Takes an input TSV file, parses it with a set of column headers, and outputs a JSON file.

translatejson command

Takes an input JSON file, a column header file, and a credentials file, and translates from a source language to a destination language using Bing's API and Apache Tika.

imagesimilarity command

Computes the similarity between the image files in a directory using a feature-based approach based on the Jaccard similarity measure, then clusters the scores. Uses Apache Tika.

ETLlib Library

The ETLlib Library is a Python-based API for munging data and doing ETL. The library was originally developed as a set of Python scripts integrated into an Apache OODT ETL process that parses the data, cleans it up with Apache Tika, and then sends it on to Apache Solr for analytics.

This document describes how to use the above items, with special attention to the ETLlib library.

After installing the ETLlib package, new commands are made available on your system: repackage, poster, repackageandpost, tsvtojson, translatejson, and imagesimilarity. These commands enable you to reformulate aggregate JSON documents, cleanse their fields (which may contain UTF-8 or other weird encodings), convert between formats (e.g., TSV to JSON), translate fields within the documents using Apache Tika, and then post those documents to Apache Solr.

These were initially developed independently as Python scripts wrapped by Apache OODT ETL workflows, but Chris Mattmann mattmann@apache.org later decided they would be useful as an installable Python library.

To use these commands from your interactive prompt, you just need to make sure your shell's PATH environment variable includes the directory where the commands are installed. On most systems, these commands are installed in::

    /usr/bin

However, on Mac OS X, the installation location may be::

    /Library/Frameworks/Python.framework/Versions/Current/bin

And on Windows, it may be::

    c:\Program Files\Python

Note also that some interactive shells create a cache of commands in order to execute your requests more quickly. You may need to force your shell to rebuild that cache. The csh and tcsh shells are two such examples; you can make these shells rebuild their caches by running the rehash command.

The repackage, poster, repackageandpost, tsvtojson, translatejson, and imagesimilarity commands may be used from shell scripts as well. The only requirement for making these commands available to shell scripts is the same as for interactive sessions: the shell's PATH environment variable must include the directory that contains the commands.
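
One way to check the PATH requirement before running a script is a short Python sketch like the one below. It uses only the standard library's ``shutil.which``; the helper name ``find_commands`` is hypothetical and is not part of ETLlib.

```python
import shutil

# Command names as listed in this README.
ETLLIB_COMMANDS = [
    "repackage", "poster", "repackageandpost",
    "tsvtojson", "translatejson", "imagesimilarity",
]


def find_commands(names, path=None):
    """Map each command name to its resolved location, or None if it is not found.

    `path` defaults to the PATH environment variable (hypothetical helper).
    """
    return {name: shutil.which(name, path=path) for name in names}


if __name__ == "__main__":
    for name, location in find_commands(ETLLIB_COMMANDS).items():
        print(f"{name}: {location or 'not found on PATH'}")
```

Any command reported as not found points to a PATH that does not yet include the installation directory.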

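Here is a sample shell script that repackages aggregate Teams JSON files, each holding 20 records, into 20 individual Teams JSON files per aggregate file::

    #!/bin/bash
    # Loop over the aggregate Teams JSON files by full path.
    # (Iterating over the output of ls would yield bare file names.)
    for ag in /data/xdata/Kiva/raw/RAW__json_teams_9Feb2013/*; do
        repackage -j "$ag" -o teams
    done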

The above shell script assumes that repackage will be found in /usr/local/bin, /usr/bin, or /bin. It loops through the aggregate Teams JSON files from the Kiva raw dataset and hands each one to the repackage script, which unravels those 1234 aggregate files into 1234 * 20 = 24680 individual team JSON files.
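
The unravelling step can be illustrated with a minimal Python sketch. It assumes each aggregate file is a JSON object with a ``paging`` entry and a list of records under the object-type key; that layout, the sample data, and the function name ``split_aggregate`` are assumptions for illustration. This is not ETLlib's own implementation, and it skips the Apache Tika cleanup.

```python
import json


def split_aggregate(aggregate, object_type):
    """Drop the paging information and return the records stored under object_type."""
    records = aggregate.get(object_type)
    if not isinstance(records, list):
        raise KeyError(f"no list of records under {object_type!r}")
    return records


# Assumed layout of one paged aggregate file (hypothetical sample data).
sample = {
    "paging": {"page": 1, "page_size": 20, "pages": 1234},
    "teams": [{"id": i, "name": f"team-{i}"} for i in range(20)],
}

individual = split_aggregate(sample, "teams")
print(len(individual))         # 20 records from one aggregate file
print(1234 * len(individual))  # 24680 records across 1234 such files
print(json.dumps(individual[0]))
```

Each element of ``individual`` could then be written to its own file, which matches the count of 24680 output files described above.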

The rest of the commands may also be used from a shell script.

Some example working commands are:

Pipe a single JSON journal entries file into the repackageandpost script::

    echo "/data/xdata/Kiva/raw/RAW__json_journalEntries_04Mar2013/365/191677_journalentries_pg1_retreived-2013_03_04_21_56.json" | repackageandpost -u "http://localhost:8080/solr/journalentries/update/json?commit=true" -o journal_entries -v
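
For readers curious about what the posting step amounts to, the sketch below builds (but does not send) an HTTP POST request for one JSON document using Python's standard library. The document fields and the helper name ``build_solr_update`` are hypothetical, and this is an illustration of the general idea, not the code that poster or repackageandpost actually run.

```python
import json
import urllib.request


def build_solr_update(url, doc):
    """Build, without sending, a POST request carrying one JSON document."""
    # The body is a JSON list containing the single document.
    body = json.dumps([doc]).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_solr_update(
    "http://localhost:8080/solr/journalentries/update/json?commit=true",
    {"id": "191677", "body": "hypothetical journal entry"},  # made-up fields
)
print(request.get_method(), request.full_url)
# Sending it needs a running Solr instance:
# urllib.request.urlopen(request)
```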

Take in an input TSV file named computrabajo-ve-20121108.tsv and turn it into a JSON file with a root object named employmentjobs using the provided colheaders.txt file::

    tsvtojson -t data/staging/computrabajo-ve-20121108.tsv -j data/jobs/tsvtojson/1/output/computrabajo-ve-20121108.json -c conf/colheaders.txt -o employmentjobs
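
The conversion itself can be sketched in a few lines of Python. The sketch assumes a headerless TSV input whose column names are supplied separately, and it wraps the rows under a root key; the column names, sample rows, and the function name ``tsv_to_json`` are hypothetical, and the real tsvtojson command may behave differently.

```python
import csv
import io
import json


def tsv_to_json(tsv_text, col_headers, object_type):
    """Parse headerless TSV text and wrap the resulting rows under a root key."""
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t")
    # Pair each cell with its column name; skip completely empty lines.
    rows = [dict(zip(col_headers, row)) for row in reader if row]
    return {object_type: rows}


# Hypothetical column headers and data, for illustration only.
headers = ["id", "title", "city"]
tsv = "1\tDeveloper\tCaracas\n2\tAnalyst\tValencia\n"

result = tsv_to_json(tsv, headers, "employmentjobs")
print(json.dumps(result, indent=2))
```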

Extract the roughly 9000 jobs stored under the "employmentjobs" key in computrabajo-ve-20121108.json::

    repackage -j ../../../../../data/jobs/tsvtojson/1/output/computrabajo-ve-20121108.json -o employmentjobs

Translate the fields defined in translate.cols in the JSON file named 648c3a4a-22d1-4a43-b0da-9c8e45716e40.json from Spanish ("es") to English ("en"), using Bing, Apache Tika, and the provided credentials, and write the translated JSON to 648c3a4a-22d1-4a43-b0da-9c8e45716e40-t.json::

    translatejson -i data/jobs/repackager/1/output/648c3a4a-22d1-4a43-b0da-9c8e45716e40.json -j data/jobs/translate/1/output/648c3a4a-22d1-4a43-b0da-9c8e45716e40-t.json -c src/tika-python/lib/translate.cols -p src/tika-python/lib/translator.creds -f es -t en -v

Compute the similarity of the images in your $HOME/Pictures directory on a Mac::

    cd $HOME/Pictures && imagesimilarity -m -f . > similarity-scores.txt
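
As a rough illustration of a feature-based Jaccard comparison, the sketch below scores pairs of images by the overlap of their feature sets. Treating each image as a set of metadata field names is an assumption made here, as are the sample file names and the function name ``jaccard``; the imagesimilarity command's actual features and clustering are not reproduced.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity: size of the intersection divided by size of the union."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # convention chosen here: two empty sets count as identical
    return len(a & b) / len(a | b)


# Hypothetical feature sets (for example, metadata field names) per image.
features = {
    "a.jpg": {"Image Width", "Image Height", "Model", "Flash"},
    "b.jpg": {"Image Width", "Image Height", "Model"},
    "c.png": {"Image Width", "Image Height"},
}

for (name1, f1), (name2, f2) in combinations(features.items(), 2):
    print(f"{name1} vs {name2}: {jaccard(f1, f2):.2f}")
```

A score of 1.0 means the two feature sets are identical, and 0.0 means they share nothing.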

The ETLlib Library is a Python-based application programming interface (API) for munging and processing JSON data for ETL and analytics. In fact, the poster, repackage, repackageandpost, tsvtojson, translatejson, and imagesimilarity commands are implemented using the ETLlib Library. If shell-script programming is not to your taste, and you know Python, then using the ETLlib Library may be right for you.

Changelog

Current Development.

Includes tools to handle image similarity.

This is an initial release of etllib supporting capability for reformulating JSON data using Tika_ and JSON read/write in prep for ETL using OODT_ into Solr_.

.. References:
.. _OODT: http://oodt.apache.org/
.. _Tika: http://tika.apache.org/
.. _Solr: http://lucene.apache.org/solr/
