HPSv3: Towards Wide-Spectrum Human Preference Score - A VLM-based preference model for image quality assessment

Yuhang Ma1,3* Yunhao Shui1,4* Xiaoshi Wu2 Keqiang Sun1,2† Hongsheng Li2,4,5†

1Mizzen AI 2CUHK MMLab 3King’s College London 4Shanghai Jiaotong University

5Shanghai AI Laboratory 6CPII, InnoHK

*Equal Contribution †Equal Advising

This is the official implementation for the paper: HPSv3: Towards Wide-Spectrum Human Preference Score. First, we introduce HPSv3, a VLM-based preference model trained on HPDv3, a "wide spectrum" preference dataset with 1.08M text-image pairs and 1.17M annotated pairwise comparisons. The dataset covers both state-of-the-art and earlier generative models, as well as high- and low-quality real-world images. Second, we propose CoHP (Chain-of-Human-Preference), a novel reasoning approach for iterative image refinement that efficiently improves image quality without requiring additional training data.

HPSv3 is a state-of-the-art human preference score model for evaluating image quality and prompt alignment. It builds upon the Qwen2-VL architecture to provide accurate assessments of generated images.

# Install locally for development or training.

git clone https://github.com/MizzenAI/HPSv3.git

cd HPSv3

conda env create -f environment.yaml

conda activate hpsv3

# Recommended: install flash-attn

pip install flash-attn==2.7.4.post1

pip install -e .

from hpsv3 import HPSv3RewardInferencer

# Initialize the model

inferencer = HPSv3RewardInferencer(device='cuda')

# Evaluate images

image_paths = ["assets/example1.png", "assets/example2.png"]

prompts = [

"cute chibi anime cartoon fox, smiling wagging tail with a small cartoon heart above sticker",

"cute chibi anime cartoon fox, smiling wagging tail with a small cartoon heart above sticker"

]

# Get preference scores

rewards = inferencer.reward(image_paths, prompts)

scores = [reward[0].item() for reward in rewards] # Extract mu values

print(f"Image scores: {scores}")
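When several candidate images share a prompt, the extracted scores can be used to rank them. The following is a minimal sketch: `rank_by_score` is a hypothetical helper that is not part of the hpsv3 package, and the numeric scores are placeholders rather than real model outputs.

```python
from typing import List, Tuple


def rank_by_score(image_paths: List[str], scores: List[float]) -> List[Tuple[str, float]]:
    """Return (path, score) pairs sorted from highest to lowest score."""
    if len(image_paths) != len(scores):
        raise ValueError("image_paths and scores must have the same length")
    return sorted(zip(image_paths, scores), key=lambda pair: pair[1], reverse=True)


# Placeholder scores for illustration only; real values would come from
# inferencer.reward(image_paths, prompts) as shown above.
ranking = rank_by_score(["assets/example1.png", "assets/example2.png"], [7.2, 9.1])
print(ranking[0][0])  # path of the highest-scoring image
```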

Launch an interactive web interface to test HPSv3:

python gradio_demo/demo.py

The demo will be available at http://localhost:7860.

Human Preference Dataset v3 (HPDv3) comprises 1.08M text-image pairs and 1.17M annotated pairwise comparisons. To model the wide spectrum of human preference, we include the newest state-of-the-art generative models and high-quality real photographs while retaining older models and lower-quality real images.

| Image Source | Type | Number of Images | Prompt Source | Split |

|---|---|---|---|---|

| High Quality Image (HQI) | Real Image | 57759 | VLM Caption | Train & Test |

| MidJourney | - | 331955 | User | Train |

| CogView4 | DiT | 400 | HQI+HPDv2+JourneyDB | Test |

| FLUX.1 dev | DiT | 48927 | HQI+HPDv2+JourneyDB | Train & Test |

| Infinity | Autoregressive | 27061 | HQI+HPDv2+JourneyDB | Train & Test |

| Kolors | DiT | 49705 | HQI+HPDv2+JourneyDB | Train & Test |

| HunyuanDiT | DiT | 46133 | HQI+HPDv2+JourneyDB | Train & Test |

| Stable Diffusion 3 Medium | DiT | 49266 | HQI+HPDv2+JourneyDB | Train & Test |

| Stable Diffusion XL | Diffusion | 49025 | HQI+HPDv2+JourneyDB | Train & Test |

| Pixart Sigma | Diffusion | 400 | HQI+HPDv2+JourneyDB | Test |

| Stable Diffusion 2 | Diffusion | 19124 | HQI+JourneyDB | Train & Test |

| CogView2 | Autoregressive | 3823 | HQI+JourneyDB | Train & Test |

| FuseDream | Diffusion | 468 | HQI+JourneyDB | Train & Test |

| VQ-Diffusion | Diffusion | 18837 | HQI+JourneyDB | Train & Test |

| Glide | Diffusion | 19989 | HQI+JourneyDB | Train & Test |

| Stable Diffusion 1.4 | Diffusion | 18596 | HQI+JourneyDB | Train & Test |

| Stable Diffusion 1.1 | Diffusion | 19043 | HQI+JourneyDB | Train & Test |

| Curated HPDv2 | - | 327763 | - | Train |

HPDv3 is coming soon! Stay tuned!

Important note: for simplicity, the image at path1 is always the preferred one.

[

{

"prompt": "A beautiful landscape painting",

"path1": "path/to/better/image.jpg",

"path2": "path/to/worse/image.jpg",

"confidence": 0.95

},

...

]
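Before training, it can help to validate a pair file and drop low-confidence annotations. The sketch below assumes the four fields shown in the format above. `filter_pairs` and `min_confidence` are hypothetical names, and the inclusive `>=` comparison is an assumption rather than the repository's documented behavior.

```python
import json
from typing import Any, Dict, List

REQUIRED_FIELDS = ("prompt", "path1", "path2", "confidence")


def filter_pairs(pairs: List[Dict[str, Any]], min_confidence: float = 0.95) -> List[Dict[str, Any]]:
    """Validate each pair and keep those at or above the confidence cutoff."""
    kept = []
    for i, pair in enumerate(pairs):
        missing = [field for field in REQUIRED_FIELDS if field not in pair]
        if missing:
            raise ValueError(f"pair {i} is missing fields: {missing}")
        # Assumption: the cutoff is inclusive.
        if pair["confidence"] >= min_confidence:
            kept.append(pair)
    return kept


# Illustrative data only; the second entry is made up to show filtering.
example_json = """
[
  {"prompt": "A beautiful landscape painting",
   "path1": "path/to/better/image.jpg",
   "path2": "path/to/worse/image.jpg",
   "confidence": 0.95},
  {"prompt": "A beautiful landscape painting",
   "path1": "path/to/better/image2.jpg",
   "path2": "path/to/worse/image2.jpg",
   "confidence": 0.60}
]
"""
pairs = json.loads(example_json)
kept = filter_pairs(pairs)
print(f"kept {len(kept)} of {len(pairs)} pairs")
```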

# Install locally (same steps as the development install above)

git clone https://github.com/MizzenAI/HPSv3.git

cd HPSv3

conda env create -f environment.yaml

conda activate hpsv3

# Recommended: install flash-attn

pip install flash-attn==2.7.4.post1

pip install -e .

# Train with 7B model

deepspeed hpsv3/train.py --config hpsv3/config/HPSv3_7B.yaml

| Configuration Section | Parameter | Value | Description |

|---|---|---|---|

| Model Configuration | rm_head_type | "ranknet" | Type of reward model head architecture |

| | lora_enable | False | Enable LoRA (Low-Rank Adaptation) for efficient fine-tuning. If False, language tower is fully trainable |

| | vision_lora | False | Apply LoRA specifically to vision components. If False, vision tower is fully trainable |

| | model_name_or_path | "Qwen/Qwen2-VL-7B-Instruct" | Path to the base model checkpoint |

| Data Configuration | confidence_threshold | 0.95 | Minimum confidence score for training data |

| | train_json_list | [example_train.json] | List of training data files |

| | test_json_list | [validation_sets] | List of validation datasets with names |

| | output_dim | 2 | Output dimension of the reward head for $\mu$ and $\sigma$ |

| | loss_type | "uncertainty" | Loss function type for training |
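With `output_dim` set to 2, the reward head predicts a mean $\mu$ and an uncertainty $\sigma$ per image. The sketch below shows one common way to turn two such Gaussian estimates into a pairwise ranking loss. It is an assumption made for illustration, not code taken from the repository, and both function names are hypothetical.

```python
import math


def preference_probability(mu_w: float, sigma_w: float, mu_l: float, sigma_l: float) -> float:
    """P(score_w > score_l), assuming independent Gaussian scores."""
    denom = math.sqrt(sigma_w ** 2 + sigma_l ** 2)
    if denom == 0.0:
        raise ValueError("at least one sigma must be non-zero")
    z = (mu_w - mu_l) / denom
    # Standard normal CDF expressed through the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def uncertainty_ranking_loss(mu_w: float, sigma_w: float, mu_l: float, sigma_l: float) -> float:
    """Negative log-likelihood that the preferred image (w) beats the other (l)."""
    p = preference_probability(mu_w, sigma_w, mu_l, sigma_l)
    return -math.log(max(p, 1e-12))  # clamp to avoid log(0)


# Equal means give probability 0.5; a larger margin lowers the loss.
print(uncertainty_ranking_loss(1.0, 0.5, 1.0, 0.5))
print(uncertainty_ranking_loss(3.0, 0.5, 1.0, 0.5))
```

Under this formulation, a large $\sigma$ flattens the probability toward 0.5, so pairs the model is unsure about contribute a weaker gradient signal than confidently scored pairs.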

To evaluate HPSv3's preference accuracy, or to compute the human preference score of an image generation model, follow the detailed instructions in Evaluate Instruction.

| Model | ImageReward | Pickscore | HPDv2 | HPDv3 |

|---|---|---|---|---|

| CLIP ViT-H/14 | 57.1 | 60.8 | 65.1 | 48.6 |

| Aesthetic Score Predictor | 57.4 | 56.8 | 76.8 | 59.9 |

| ImageReward | 65.1 | 61.1 | 74.0 | 58.6 |

| PickScore | 61.6 | 70.5 | 79.8 | 65.6 |

| HPS | 61.2 | 66.7 | 77.6 | 63.8 |

| HPSv2 | 65.7 | 63.8 | 83.3 | 65.3 |

| MPS | 67.5 | 63.1 | 83.5 | 64.3 |

| HPSv3 | 66.8 | 72.8 | 85.4 | 76.9 |
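The sketch below shows how a pairwise accuracy figure can be computed from model scores. It assumes the table above reports the percentage of annotated pairs in which the model scores the human-preferred image higher. `pairwise_accuracy` is a hypothetical helper, and counting ties as errors is an assumption.

```python
from typing import Sequence


def pairwise_accuracy(preferred_scores: Sequence[float], rejected_scores: Sequence[float]) -> float:
    """Percentage of pairs where the preferred image gets the strictly higher score."""
    if len(preferred_scores) != len(rejected_scores):
        raise ValueError("score lists must have the same length")
    if len(preferred_scores) == 0:
        raise ValueError("at least one pair is required")
    # Assumption: a tie counts as an incorrect prediction.
    correct = sum(1 for p, r in zip(preferred_scores, rejected_scores) if p > r)
    return 100.0 * correct / len(preferred_scores)


# Placeholder scores for four pairs (path1 image vs. path2 image).
accuracy = pairwise_accuracy([3.0, 2.0, 1.0, 5.0], [1.0, 2.0, 0.0, 6.0])
print(f"{accuracy:.1f}%")
```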

| Model | Overall | Characters | Arts | Design | Architecture | Animals | Natural Scenery | Transportation | Products | Others | Plants | Food | Science |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Kolors | 10.55 | 11.79 | 10.47 | 9.87 | 10.82 | 10.60 | 9.89 | 10.68 | 10.93 | 10.50 | 10.63 | 11.06 | 9.51 |

| Flux-dev | 10.43 | 11.70 | 10.32 | 9.39 | 10.93 | 10.38 | 10.01 | 10.84 | 11.24 | 10.21 | 10.38 | 11.24 | 9.16 |

| Playgroundv2.5 | 10.27 | 11.07 | 9.84 | 9.64 | 10.45 | 10.38 | 9.94 | 10.51 | 10.62 | 10.15 | 10.62 | 10.84 | 9.39 |

| Infinity | 10.26 | 11.17 | 9.95 | 9.43 | 10.36 | 9.27 | 10.11 | 10.36 | 10.59 | 10.08 | 10.30 | 10.59 | 9.62 |

| CogView4 | 9.61 | 10.72 | 9.86 | 9.33 | 9.88 | 9.16 | 9.45 | 9.69 | 9.86 | 9.45 | 9.49 | 10.16 | 8.97 |

| PixArt-Σ | 9.37 | 10.08 | 9.07 | 8.41 | 9.83 | 8.86 | 8.87 | 9.44 | 9.57 | 9.52 | 9.73 | 10.35 | 8.58 |

| Gemini 2.0 Flash | 9.21 | 9.98 | 8.44 | 7.64 | 10.11 | 9.42 | 9.01 | 9.74 | 9.64 | 9.55 | 10.16 | 7.61 | 9.23 |

| SDXL | 8.20 | 8.67 | 7.63 | 7.53 | 8.57 | 8.18 | 7.76 | 8.65 | 8.85 | 8.32 | 8.43 | 8.78 | 7.29 |

| HunyuanDiT | 8.19 | 7.96 | 8.11 | 8.28 | 8.71 | 7.24 | 7.86 | 8.33 | 8.55 | 8.28 | 8.31 | 8.48 | 8.20 |

| Stable Diffusion 3 Medium | 5.31 | 6.70 | 5.98 | 5.15 | 5.25 | 4.09 | 5.24 | 4.25 | 5.71 | 5.84 | 6.01 | 5.71 | 4.58 |

| SD2 | -0.24 | -0.34 | -0.56 | -1.35 | -0.24 | -0.54 | -0.32 | 1.00 | 1.11 | -0.01 | -0.38 | -0.38 | -0.84 |

CoHP is our novel reasoning approach for iterative image refinement that efficiently improves image quality without requiring additional training data. It works in three steps: generate images with multiple diffusion models, select the best one using a reward model, and then iteratively refine it through image-to-image generation.

python hpsv3/cohp/run_cohp.py \

--prompt "A beautiful sunset over mountains" \

--index "sample_001" \

--device "cuda:0" \

--reward_model "hpsv3"

- --prompt: Text prompt for image generation (required)
- --index: Unique identifier for saving results (required)
- --device: GPU device to use (default: 'cuda:1')
- --reward_model: Reward model for scoring images
  - hpsv3: HPSv3 model (default, recommended)
  - hpsv2: HPSv2 model
  - imagereward: ImageReward model
  - pickscore: PickScore model

CoHP uses multiple state-of-the-art diffusion models for initial generation: FLUX.1 dev, Kolors, Stable Diffusion 3 Medium, Playground v2.5
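The generate, select, and refine loop described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the logic of `run_cohp.py`: the `generators`, `refine`, and `score` callables are hypothetical stand-ins for the diffusion models, the image-to-image step, and the reward model, and keeping a refinement only when it scores higher is an assumption.

```python
from typing import Callable, Dict, Tuple, TypeVar

Image = TypeVar("Image")


def cohp_sketch(
    prompt: str,
    generators: Dict[str, Callable[[str], Image]],
    refine: Callable[[str, Image], Image],
    score: Callable[[str, Image], float],
    rounds: int = 3,
) -> Tuple[str, Image, float]:
    """Pick the best initial image across models, then refine it iteratively."""
    if not generators:
        raise ValueError("at least one generator is required")

    # Stage 1: generate one image per model and score each with the reward model.
    scored = {}
    for name, generate in generators.items():
        image = generate(prompt)
        scored[name] = (image, score(prompt, image))
    best_name = max(scored, key=lambda name: scored[name][1])
    best_image, best_score = scored[best_name]

    # Stage 2: image-to-image refinement, keeping a candidate only if it improves.
    for _ in range(rounds):
        candidate = refine(prompt, best_image)
        candidate_score = score(prompt, candidate)
        if candidate_score > best_score:
            best_image, best_score = candidate, candidate_score
    return best_name, best_image, best_score


# Toy stand-ins: "images" are strings and the "reward" is their length.
result = cohp_sketch(
    "A beautiful sunset over mountains",
    generators={"model_a": lambda p: "aa", "model_b": lambda p: "bbbb"},
    refine=lambda p, img: img + "x",
    score=lambda p, img: float(len(img)),
    rounds=2,
)
print(result)
```

Passing the models as callables keeps the selection logic independent of any particular diffusion library, so the reward model can be swapped without touching the loop.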

We use DanceGRPO as the reinforcement learning method. Here are some results. All experiments use the same settings, with Stable Diffusion 1.4 as the backbone.

If you find HPSv3 useful in your research, please cite our work:

@inproceedings{hpsv3,

title={HPSv3: Towards Wide-Spectrum Human Preference Score},

author={Ma, Yuhang and Wu, Xiaoshi and Sun, Keqiang and Li, Hongsheng},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

year={2025}

}

We would like to thank the VideoAlign codebase for providing valuable references.

For questions and support:

FAQs

HPSv3: Towards Wide-Spectrum Human Preference Score - A VLM-based preference model for image quality assessment

We found that hpsv3 demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

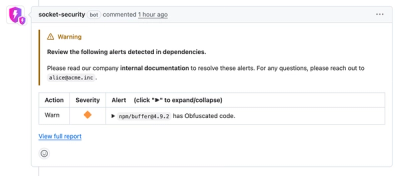

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.