
Installation | Quick Start | Environments | Baselines | Citation

We provide blazingly fast goal-conditioned environments based on MJX and BRAX for quick experimentation with goal-conditioned self-supervised reinforcement learning.

After cloning the repository, run one of the following commands.

With GPU on Linux:

pip install -e . -f https://storage.googleapis.com/jax-releases/jax_releases.html

With CPU on Mac:

export SDKROOT="$(xcrun --show-sdk-path)" # may be needed to build brax dependencies

pip install -e .

The package is also available on PyPI:

pip install jaxgcrl -f https://storage.googleapis.com/jax-releases/jax_releases.html

To verify the installation, run a test experiment:

jaxgcrl crl --env ant

The jaxgcrl command is equivalent to invoking python run.py with the same arguments.

[!NOTE]

If you haven't yet configured wandb, you may be prompted to log in.

See scripts/train.sh for an example config.

A description of the available agents can be generated with jaxgcrl --help.

Available configs can be listed with jaxgcrl {crl,ppo,sac,td3} --help.

Common flags you may want to change include --env, which selects the environment. See jaxgcrl/utils/env.py for a list of available environments.

Environments can be controlled with the reset and step functions. These methods return a state object, which is a dataclass containing the following fields:

state.pipeline_state: current, internal state of the environment

state.obs: current observation

state.done: flag indicating if the agent reached the goal

state.metrics: agent performance metrics

state.info: additional info

The following code demonstrates how to interact with the environment:

import jax
from jaxgcrl.utils.env import create_env  # create_env is defined in jaxgcrl/utils/env.py

key = jax.random.PRNGKey(0)

# Initialize the environment
env = create_env('ant')

# Use JIT compilation to make the environment's reset and step functions execute faster
jit_env_reset = jax.jit(env.reset)
jit_env_step = jax.jit(env.step)

NUM_STEPS = 1000

# Reset the environment and obtain the initial state
state = jit_env_reset(key)

# Simulate the environment for a fixed number of steps
for _ in range(NUM_STEPS):
    # Generate a random action (the ant environment takes 8-dimensional actions)
    key, key_act = jax.random.split(key, 2)
    random_action = jax.random.uniform(key_act, shape=(8,), minval=-1, maxval=1)

    # Perform an environment step with the generated action
    state = jit_env_step(state, random_action)
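The reset/step contract can also be illustrated without installing Brax or MJX. The sketch below is a plain-Python stand-in, not the library's code: `ToyState`, `toy_reset`, and `toy_step` are hypothetical names, and the 1-D point environment is an assumption chosen only to mirror the `pipeline_state`, `obs`, `done`, `metrics`, and `info` fields listed above.

```python
import random
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class ToyState:
    """Hypothetical stand-in for the state object described above."""
    pipeline_state: float          # internal state: position on a line
    obs: tuple                     # observation: (position, goal)
    done: bool                     # True once the goal is reached
    metrics: dict = field(default_factory=dict)
    info: dict = field(default_factory=dict)


GOAL = 1.0        # assumed goal position
TOLERANCE = 0.05  # assumed distance at which the goal counts as reached


def toy_reset(seed: int) -> ToyState:
    """Return the initial state; the seed is kept in info for reproducibility."""
    return ToyState(pipeline_state=0.0, obs=(0.0, GOAL), done=False,
                    metrics={"dist": GOAL}, info={"seed": seed, "steps": 0})


def toy_step(state: ToyState, action: float) -> ToyState:
    """Apply a clipped action and return a new state; the old state is unchanged."""
    action = max(-1.0, min(1.0, action))
    position = state.pipeline_state + 0.1 * action
    dist = abs(GOAL - position)
    return replace(state, pipeline_state=position, obs=(position, GOAL),
                   done=dist < TOLERANCE, metrics={"dist": dist},
                   info={**state.info, "steps": state.info["steps"] + 1})


# Same loop shape as the example above: reset once, then step repeatedly.
state = toy_reset(seed=0)
rng = random.Random(state.info["seed"])
for _ in range(1000):
    state = toy_step(state, rng.uniform(-1.0, 1.0))
```

As in the JAX example, each step returns a fresh state rather than mutating the previous one, which is the property that lets the real reset and step functions be JIT-compiled.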

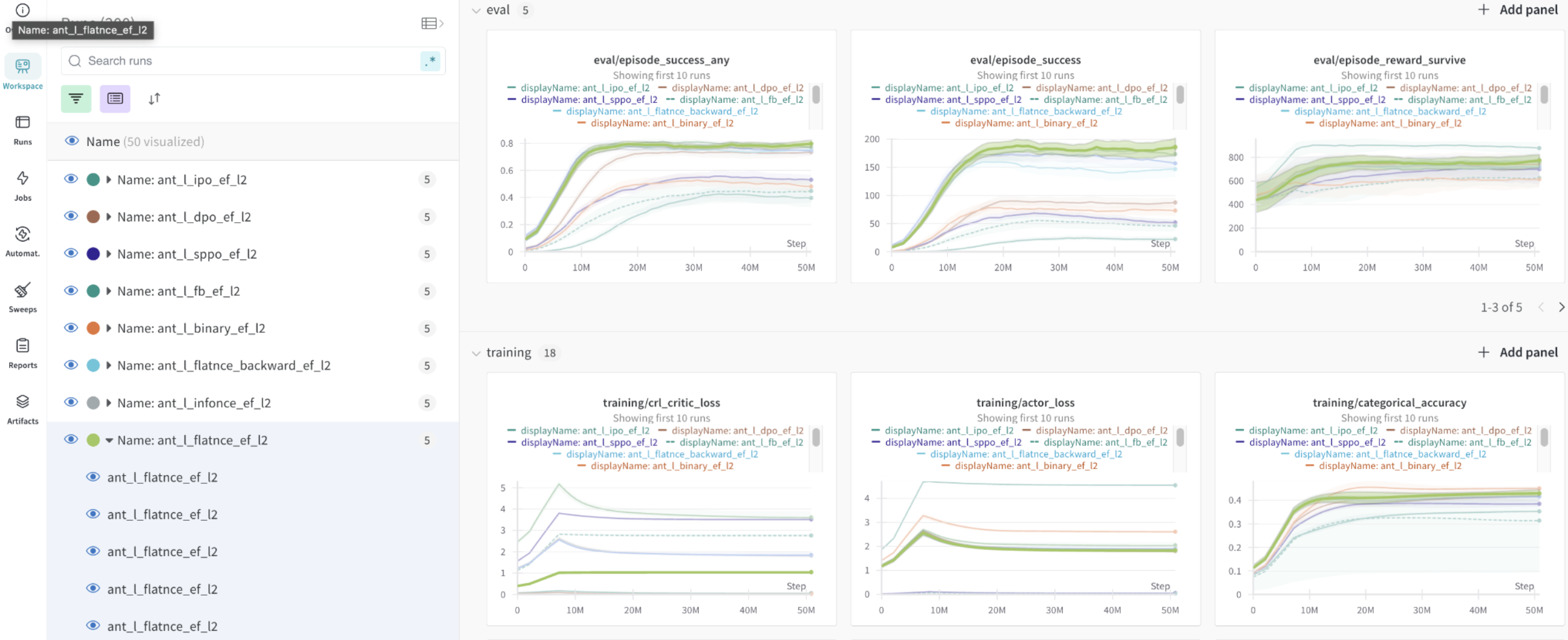

We strongly recommend using Wandb for tracking and visualizing results. Enable Wandb logging with the --log_wandb flag. The following flags are also available to organize experiments:

--project_name

--group_name

--exp_name

By default, metrics are logged to a CSV file.

To run a sweep, first create it: wandb sweep --project example_sweep ./scripts/sweep.yml

Then start a wandb agent with the output of the previous command: wandb agent <previous_command_output>

We also render videos of the learned policies as wandb artifacts.

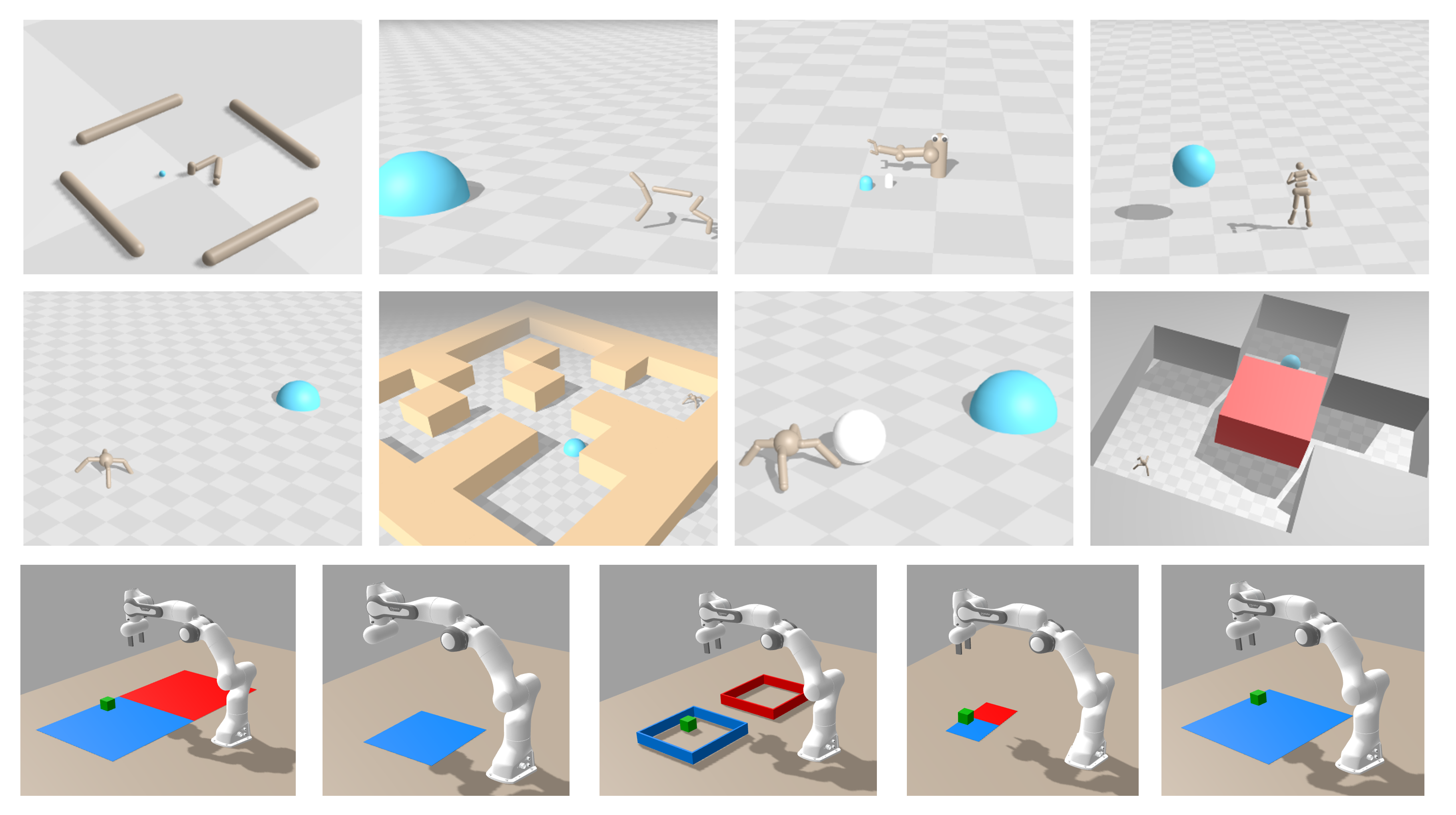

We currently support a variety of continuous control environments:

| Environment | Env name | Code |
|---|---|---|
| Reacher | reacher | link |
| Half Cheetah | cheetah | link |
| Pusher | pusher_easy, pusher_hard | link |
| Ant | ant | link |
| Ant Maze | ant_u_maze, ant_big_maze, ant_hardest_maze | link |
| Ant Soccer | ant_ball | link |
| Ant Push | ant_push | link |
| Humanoid | humanoid | link |
| Humanoid Maze | humanoid_u_maze, humanoid_big_maze, humanoid_hardest_maze | link |
| Arm Reach | arm_reach | link |
| Arm Grasp | arm_grasp | link |
| Arm Push | arm_push_easy, arm_push_hard | link |
| Arm Binpick | arm_binpick_easy, arm_binpick_hard | link |

To add a new environment: add an XML file to envs/assets, add a Python environment file in envs, and register the environment name in utils.py.
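Registering an environment name usually amounts to mapping that name to a constructor. The sketch below shows the pattern in isolation; `ENV_REGISTRY`, `register_env`, `make_env`, and `MyPointEnv` are hypothetical names for illustration and do not reflect the actual contents of utils.py.

```python
from typing import Callable, Dict

# Hypothetical registry: environment name -> constructor.
ENV_REGISTRY: Dict[str, Callable[..., object]] = {}


def register_env(name: str):
    """Decorator that records a constructor under the given name."""
    def decorator(constructor):
        if name in ENV_REGISTRY:
            raise ValueError(f"environment {name!r} is already registered")
        ENV_REGISTRY[name] = constructor
        return constructor
    return decorator


@register_env("my_point")
class MyPointEnv:
    """Placeholder environment; a real one would load its XML from envs/assets."""

    def __init__(self, goal: float = 1.0):
        self.goal = goal


def make_env(name: str, **kwargs):
    """Look up a registered name and build the environment."""
    try:
        constructor = ENV_REGISTRY[name]
    except KeyError:
        known = sorted(ENV_REGISTRY)
        raise ValueError(f"unknown environment {name!r}; known: {known}") from None
    return constructor(**kwargs)


env = make_env("my_point", goal=2.0)
```

Failing loudly on an unknown or duplicate name keeps a typo in the environment name from silently selecting the wrong environment.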

We currently support the following algorithms:

| Algorithm | How to run | Code |
|---|---|---|
| CRL | python run.py crl ... | link |
| PPO | python run.py ppo ... | link |
| SAC | python run.py sac ... | link |
| SAC + HER | python run.py sac ... --use_her | link |
| TD3 | python run.py td3 ... | link |
| TD3 + HER | python run.py td3 ... --use_her | link |
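The --use_her variants rely on hindsight experience replay (HER), which relabels stored transitions with goals the agent actually reached. The sketch below shows the common "future" relabeling strategy on a toy trajectory. The `Transition` tuple, the `relabel_future` helper, and the sparse reward threshold are assumptions for illustration, not the implementation used in this codebase.

```python
import random
from typing import List, NamedTuple


class Transition(NamedTuple):
    state: float       # state before the action
    action: float
    next_state: float  # state reached after the action
    goal: float        # goal the agent was conditioned on
    reward: float


def sparse_reward(achieved: float, goal: float, tol: float = 0.05) -> float:
    """Assumed reward: 1 when the achieved state is within tol of the goal."""
    return 1.0 if abs(achieved - goal) <= tol else 0.0


def relabel_future(trajectory: List[Transition], rng: random.Random) -> List[Transition]:
    """Replace each goal with a state reached at the same or a later step."""
    relabeled = []
    for t, tr in enumerate(trajectory):
        future = rng.randrange(t, len(trajectory))  # index in [t, T - 1]
        new_goal = trajectory[future].next_state
        relabeled.append(tr._replace(
            goal=new_goal, reward=sparse_reward(tr.next_state, new_goal)))
    return relabeled


# Toy trajectory that never reaches its original goal of 5.0.
states = [0.0, 0.3, 0.7, 1.2, 1.5]
trajectory = [Transition(s, ns - s, ns, 5.0, 0.0)
              for s, ns in zip(states, states[1:])]
relabeled = relabel_future(trajectory, random.Random(0))
```

Every original transition has zero reward, but after relabeling some of them count as successes for the substituted goal, which gives an off-policy learner such as SAC or TD3 a non-trivial signal.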

The core structure of the codebase is as follows:

run.py: Takes the name of an agent and runs with the specified configs.

agents/
├── agents/
│   ├── crl/
│   │   ├── crl.py          CRL algorithm
│   │   ├── losses.py       Contrastive losses and energy functions
│   │   └── networks.py     CRL network architectures
│   ├── ppo/
│   │   └── ppo.py          PPO algorithm
│   ├── sac/
│   │   ├── sac.py          SAC algorithm
│   │   └── networks.py     SAC network architectures
│   └── td3/
│       ├── td3.py          TD3 algorithm
│       ├── losses.py       TD3 loss functions
│       └── networks.py     TD3 network architectures
├── utils/
│   ├── config.py           Base run configs
│   ├── env.py              Logic for rendering and environment initialization
│   ├── replay_buffer.py    Replay buffer, including logic for state, action, and goal sampling for training
│   └── evaluator.py        Runs evaluation and collects metrics
├── envs/
│   ├── ant.py, humanoid.py, ...    Most environments are here
│   ├── assets/             XMLs for environments
│   └── manipulation/       All manipulation environments
└── scripts/train.sh        Modify to choose environment and hyperparameters

The architecture can be adjusted in networks.py.
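As a rough illustration of what a contrastive loss with an energy function computes, the sketch below implements a generic InfoNCE objective with a negative L2 distance energy in plain Python. It is a minimal sketch, not the code in losses.py: the function names, the choice of energy, and the toy embeddings are all assumptions.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l2_energy(sa_repr: Vector, goal_repr: Vector) -> float:
    """Assumed energy function: negative Euclidean distance (higher = closer)."""
    return -math.sqrt(sum((a - b) ** 2 for a, b in zip(sa_repr, goal_repr)))


def log_sum_exp(values: List[float]) -> float:
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def info_nce_loss(sa_reprs: List[Vector], goal_reprs: List[Vector]) -> float:
    """Mean cross-entropy where row i's positive is goal i; other goals are negatives."""
    losses = []
    for i, sa in enumerate(sa_reprs):
        logits = [l2_energy(sa, g) for g in goal_reprs]
        losses.append(log_sum_exp(logits) - logits[i])
    return sum(losses) / len(losses)


# Toy batch: each state-action embedding sits near its own goal embedding.
sa_batch = [(0.0, 0.0), (5.0, 0.0), (0.0, 5.0)]
goal_batch = [(0.1, 0.0), (5.0, 0.1), (0.0, 4.9)]
aligned = info_nce_loss(sa_batch, goal_batch)
# Rotating the goals pairs every row with the wrong positive.
shuffled = info_nce_loss(sa_batch, goal_batch[1:] + goal_batch[:1])
```

The loss is low when each state-action embedding is closest to its own goal embedding and grows when the pairing is broken, so `aligned` comes out smaller than `shuffled` on this toy batch.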

Help us build JaxGCRL into the best possible tool for the GCRL community. Reach out and start contributing or just add an Issue/PR!

To run tests (make sure you have access to a GPU):

python -m pytest

@inproceedings{bortkiewicz2025accelerating,
    author    = {Bortkiewicz, Micha\l{} and Pa\l{}ucki, W\l{}adek and Myers, Vivek and
                 Dziarmaga, Tadeusz and Arczewski, Tomasz and Kuci\'{n}ski, \L{}ukasz and
                 Eysenbach, Benjamin},
    booktitle = {{International Conference} on {Learning Representations}},
    title     = {{Accelerating Goal-Conditioned RL Algorithms} and {Research}},
    url       = {https://arxiv.org/pdf/2408.11052},
    year      = {2025},
}

If you have any questions, comments, or suggestions, please reach out to Michał Bortkiewicz (michalbortkiewicz8@gmail.com).

A number of other libraries inspired this work, and we encourage you to take a look!

JAX-native algorithms:

JAX-native environments:

