A Python library to build Model Trees with Linear Models at the leaves.

linear-tree also provides implementations of LinearForest and LinearBoost, inspired by earlier published work.

Linear Trees combine the learning ability of Decision Trees with the predictive and explanatory power of Linear Models. As in other tree-based algorithms, the data are split according to simple decision rules. The quality of each split is evaluated in terms of the gain obtained by fitting Linear Models in the nodes. As a result, the models in the leaves are linear rather than the constant approximations used in classical Decision Trees.
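The split evaluation described above can be illustrated with a minimal NumPy sketch. It is not the library's implementation: the helper names `linear_sse` and `best_split`, the use of the plain sum of squared errors as the loss, and the exhaustive threshold scan are all assumptions made for illustration.

```python
import numpy as np

def linear_sse(X, y):
    """Sum of squared errors of an ordinary least-squares fit with intercept."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return float(resid @ resid)

def best_split(X, y, feature, min_samples_leaf=5):
    """Scan thresholds on one feature and return (threshold, gain).

    The gain is the loss of one linear model fitted on the whole node
    minus the summed loss of two linear models fitted on the children.
    """
    parent_loss = linear_sse(X, y)
    best_thr, best_gain = None, 0.0
    for thr in np.unique(X[:, feature])[:-1]:
        left = X[:, feature] <= thr
        if left.sum() < min_samples_leaf or (~left).sum() < min_samples_leaf:
            continue
        child_loss = linear_sse(X[left], y[left]) + linear_sse(X[~left], y[~left])
        gain = parent_loss - child_loss
        if gain > best_gain:
            best_thr, best_gain = thr, gain
    return best_thr, best_gain

# Toy piecewise-linear data with a change of slope at x = 0 (hypothetical example).
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(200, 1))
y = np.where(X[:, 0] <= 0, -2 * X[:, 0], 3 * X[:, 0]) + rng.normal(0, 0.01, 200)

thr, gain = best_split(X, y, feature=0)
print(f"best threshold: {thr:.3f}, gain: {gain:.3f}")
```

On this toy data the selected threshold should fall close to the change of slope, since two separate linear fits remove most of the error that a single line leaves behind.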

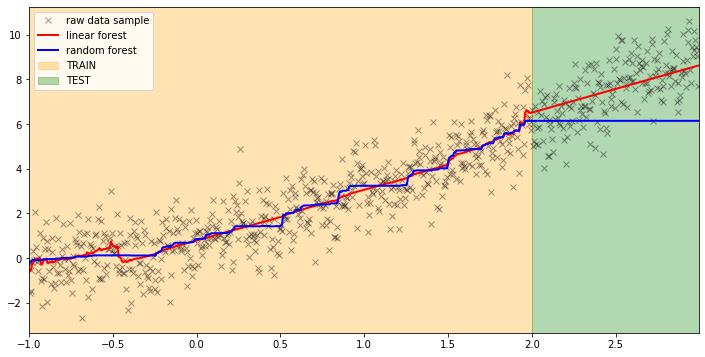

Linear Forests generalize the well-known Random Forests by combining them with Linear Models. The key idea is to use the strength of Linear Models to improve the nonparametric learning ability of tree-based algorithms. First, a Linear Model is fitted on the whole dataset. Then a Random Forest is trained on the same dataset, using the residuals of the previous step as the target. The final predictions are the sum of the raw linear predictions and the residuals modeled by the Random Forest.
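The two steps above can be sketched directly with scikit-learn estimators. This is a hand-rolled illustration of the idea, not the code behind LinearForestRegressor; the toy data, the `predict` helper, and the hyperparameters are assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression

# Toy data: a linear trend plus a nonlinear component (hypothetical example).
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 2))
y = 2.0 * X[:, 0] + np.sin(3 * X[:, 1]) + rng.normal(0, 0.1, 300)

# Step 1: fit a linear model on the whole dataset.
linear = LinearRegression().fit(X, y)
residuals = y - linear.predict(X)

# Step 2: fit a random forest on the same features, with the residuals as target.
forest = RandomForestRegressor(n_estimators=50, random_state=0).fit(X, residuals)

def predict(X_new):
    """Final prediction: linear prediction plus the forest's residual estimate."""
    return linear.predict(X_new) + forest.predict(X_new)

# In-sample comparison only; a real evaluation would use held-out data.
mse_linear = np.mean((y - linear.predict(X)) ** 2)
mse_combined = np.mean((y - predict(X)) ** 2)
print(f"linear only: {mse_linear:.4f}, linear + forest: {mse_combined:.4f}")
```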

Linear Boosting is a two-stage learning process. First, a linear model is trained on the initial dataset to obtain predictions. Second, the residuals of the previous step are modeled with a decision tree using all the available features. The tree identifies the path leading to the highest error (i.e. the worst leaf). The leaf contributing the most to the error is used to generate a new binary feature to be used in the first stage. The iterations continue until a stopping criterion is met.
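A rough sketch of this loop, written with plain scikit-learn estimators, may help make the two stages concrete. It is an illustration under stated assumptions rather than the LinearBoostRegressor implementation: the function names `fit_linear_boost` and `transform`, the fixed number of rounds used as the stopping criterion, and the choice of summed squared residuals to rank leaves are all hypothetical.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor

def fit_linear_boost(X, y, n_rounds=5, max_depth=3, min_samples_leaf=10):
    """Return the learned (tree, worst_leaf) steps and the final linear model."""
    steps = []
    X_aug = X.copy()
    for _ in range(n_rounds):  # assumed stopping criterion: fixed number of rounds
        # Stage 1: linear model on the current (augmented) features.
        model = LinearRegression().fit(X_aug, y)
        residuals = y - model.predict(X_aug)
        # Stage 2: decision tree on the residuals, using all available features.
        tree = DecisionTreeRegressor(
            max_depth=max_depth, min_samples_leaf=min_samples_leaf, random_state=0
        ).fit(X_aug, residuals)
        leaves = tree.apply(X_aug)
        # Worst leaf: the one with the largest summed squared residual.
        leaf_ids = np.unique(leaves)
        errors = [np.sum(residuals[leaves == leaf] ** 2) for leaf in leaf_ids]
        worst = leaf_ids[int(np.argmax(errors))]
        steps.append((tree, worst))
        # New binary feature: membership in the worst leaf.
        X_aug = np.column_stack([X_aug, (leaves == worst).astype(float)])
    final = LinearRegression().fit(X_aug, y)
    return steps, final

def transform(X, steps):
    """Rebuild the generated binary features for new data, in learning order."""
    X_aug = X.copy()
    for tree, worst in steps:
        X_aug = np.column_stack([X_aug, (tree.apply(X_aug) == worst).astype(float)])
    return X_aug

# Toy data with a step that a plain linear model cannot capture (hypothetical).
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(400, 2))
y = X[:, 0] + 3.0 * (X[:, 1] > 0.5) + rng.normal(0, 0.1, 400)

steps, final = fit_linear_boost(X, y)
mse_linear = np.mean((y - LinearRegression().fit(X, y).predict(X)) ** 2)
mse_boost = np.mean((y - final.predict(transform(X, steps))) ** 2)
print(f"linear only: {mse_linear:.4f}, with generated features: {mse_boost:.4f}")
```

The `transform` helper mirrors how generated features could be reused as inputs for another model, which is the feature engineering use case mentioned for the boosting estimators.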

linear-tree is designed to integrate fully with scikit-learn. LinearTreeRegressor and LinearTreeClassifier are provided as scikit-learn BaseEstimator classes that build a decision tree using linear estimators. LinearForestRegressor and LinearForestClassifier use the RandomForest from sklearn to model residuals. LinearBoostRegressor and LinearBoostClassifier are also available as TransformerMixin, so they can be integrated into any pipeline, including for automated feature engineering. All the models available in sklearn.linear_model can be used as base learners.

    pip install --upgrade linear-tree

The module depends on NumPy, SciPy and Scikit-Learn (>=0.24.2). Python 3.6 or above is supported.

    # Linear Tree regression
    from sklearn.linear_model import LinearRegression
    from lineartree import LinearTreeRegressor
    from sklearn.datasets import make_regression

    X, y = make_regression(n_samples=100, n_features=4,
                           n_informative=2, n_targets=1,
                           random_state=0, shuffle=False)
    regr = LinearTreeRegressor(base_estimator=LinearRegression())
    regr.fit(X, y)

    # Linear Tree classification
    from sklearn.linear_model import RidgeClassifier
    from lineartree import LinearTreeClassifier
    from sklearn.datasets import make_classification

    X, y = make_classification(n_samples=100, n_features=4,
                               n_informative=2, n_redundant=0,
                               random_state=0, shuffle=False)
    clf = LinearTreeClassifier(base_estimator=RidgeClassifier())
    clf.fit(X, y)

    # Linear Forest regression
    from sklearn.linear_model import LinearRegression
    from lineartree import LinearForestRegressor
    from sklearn.datasets import make_regression

    X, y = make_regression(n_samples=100, n_features=4,
                           n_informative=2, n_targets=1,
                           random_state=0, shuffle=False)
    regr = LinearForestRegressor(base_estimator=LinearRegression())
    regr.fit(X, y)

    # Linear Forest classification
    from sklearn.linear_model import LinearRegression
    from lineartree import LinearForestClassifier
    from sklearn.datasets import make_classification

    X, y = make_classification(n_samples=100, n_features=4,
                               n_informative=2, n_redundant=0,
                               random_state=0, shuffle=False)
    clf = LinearForestClassifier(base_estimator=LinearRegression())
    clf.fit(X, y)

    # Linear Boosting regression
    from sklearn.linear_model import LinearRegression
    from lineartree import LinearBoostRegressor
    from sklearn.datasets import make_regression

    X, y = make_regression(n_samples=100, n_features=4,
                           n_informative=2, n_targets=1,
                           random_state=0, shuffle=False)
    regr = LinearBoostRegressor(base_estimator=LinearRegression())
    regr.fit(X, y)

    # Linear Boosting classification
    from sklearn.linear_model import RidgeClassifier
    from lineartree import LinearBoostClassifier
    from sklearn.datasets import make_classification

    X, y = make_classification(n_samples=100, n_features=4,
                               n_informative=2, n_redundant=0,
                               random_state=0, shuffle=False)
    clf = LinearBoostClassifier(base_estimator=RidgeClassifier())
    clf.fit(X, y)

More examples are available in the notebooks folder.

Check the API Reference to see the parameter configurations and the available methods.

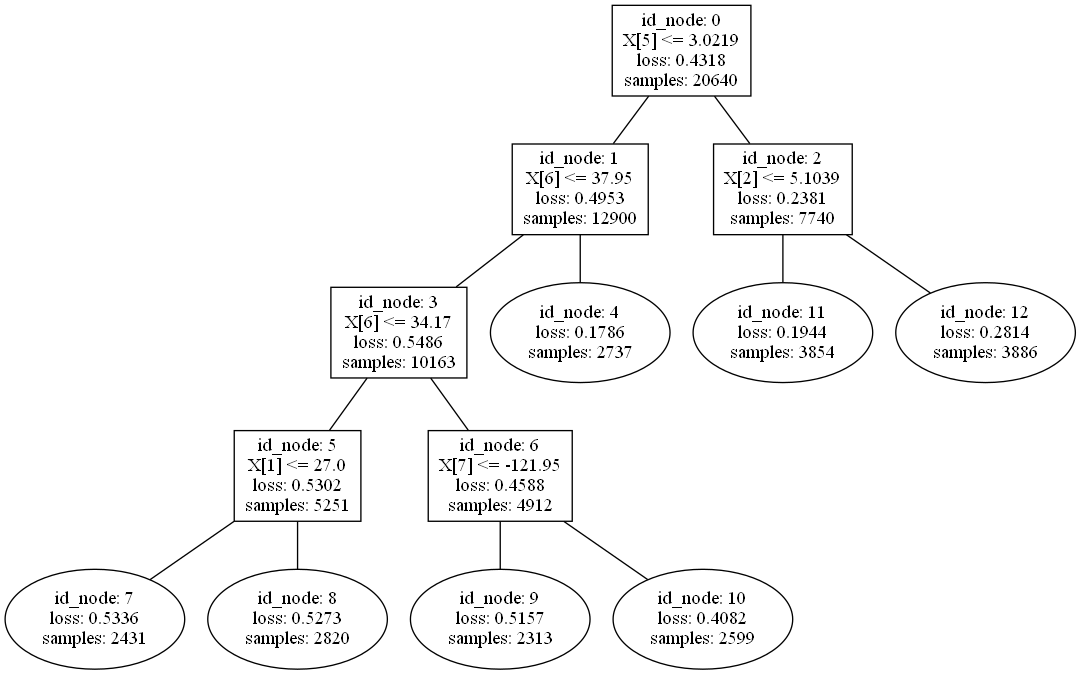

Show the linear tree learning path:

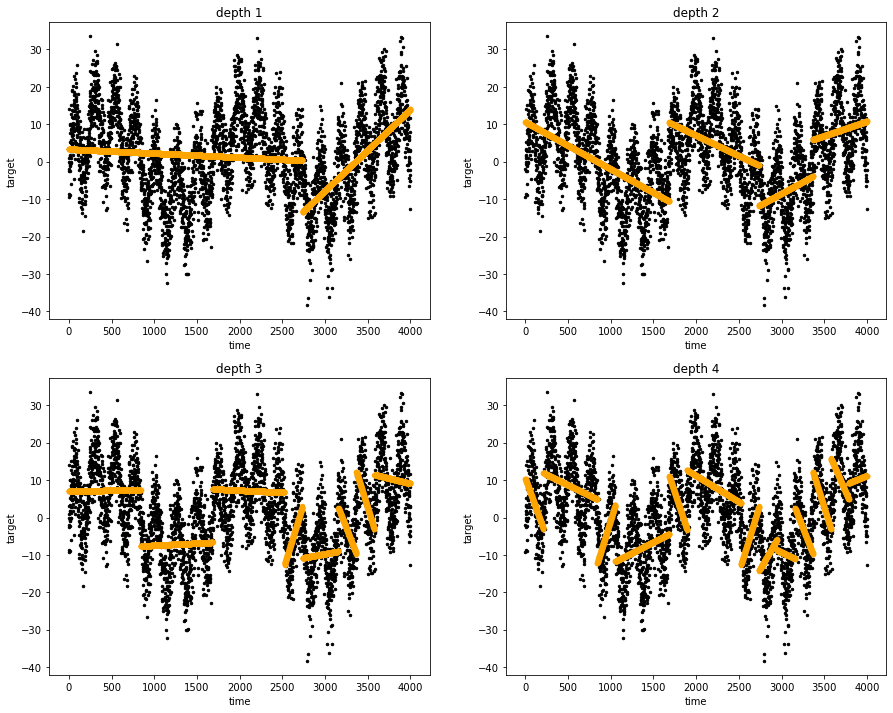

Linear Tree Regressor at work:

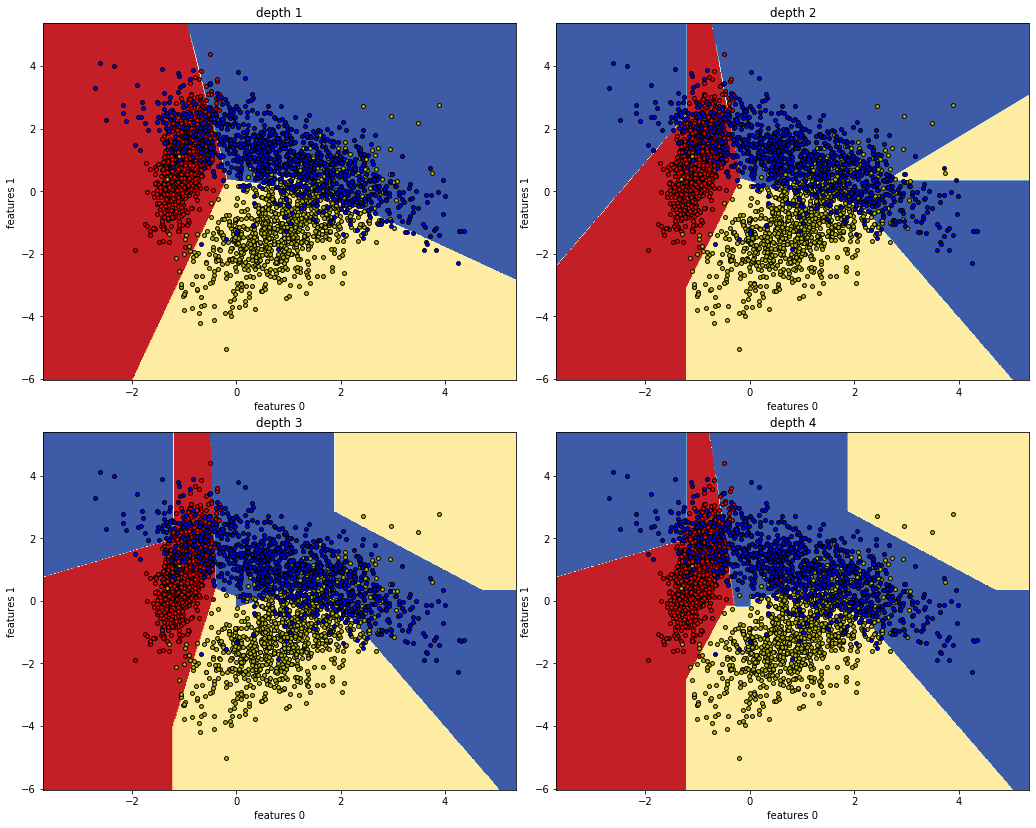

Linear Tree Classifier at work:

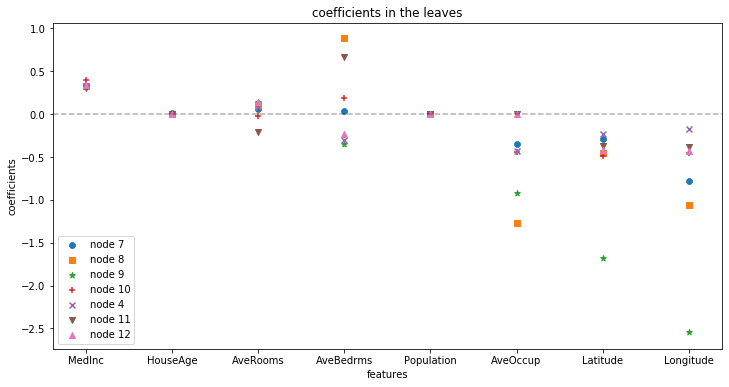

Extract and examine coefficients at the leaves:

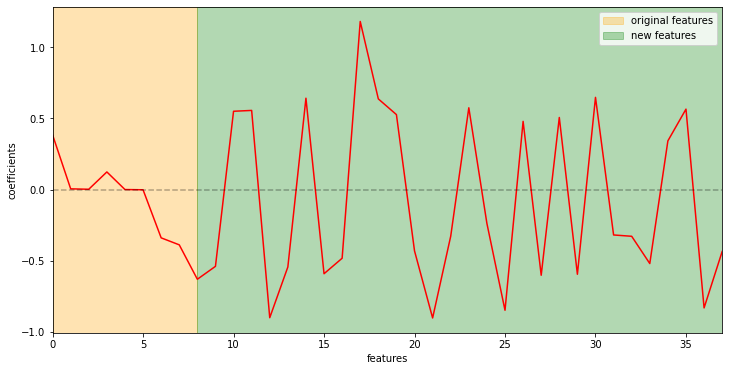

Impact of the features automatically generated with Linear Boosting:

Comparing predictions of Linear Forest and Random Forest:
