Product

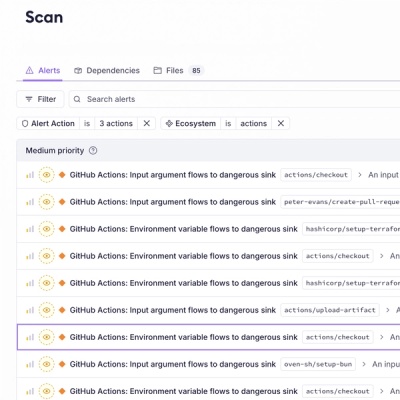

Introducing GitHub Actions Scanning Support

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.

openai-unofficial


Free & Unlimited Unofficial OpenAI API Python SDK - Supports all OpenAI Models & Endpoints

A free and unlimited unofficial Python SDK for the OpenAI API, providing seamless integration and easy-to-use methods for interacting with OpenAI's latest AI models, including GPT-4o (including the gpt-4o-audio-preview and gpt-4o-realtime-preview models), GPT-4, GPT-3.5 Turbo, DALL·E 3, Whisper, and Text-to-Speech (TTS) models.

Use gpt-4o-audio-preview to receive both audio and text responses.

Install the package via pip:

pip install -U openai-unofficial

from openai_unofficial import OpenAIUnofficial

# Initialize the client
client = OpenAIUnofficial()

# Basic chat completion
response = client.chat.completions.create(
    messages=[{"role": "user", "content": "Say hello!"}],
    model="gpt-4o"
)
print(response.choices[0].message.content)

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
models = client.list_models()
print("Available Models:")
for model in models['data']:
    print(f"- {model['id']}")
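The listing above can be long, so it may help to group the IDs by family. The following is a minimal sketch, assuming only the `{"data": [{"id": ...}]}` shape that the loop above iterates over. `group_model_ids`, the prefix list, and the `sample` payload are hypothetical and are not part of the SDK.

```python
from collections import defaultdict


def group_model_ids(models: dict) -> dict[str, list[str]]:
    """Group model IDs by a coarse family prefix (hypothetical helper)."""
    # Prefixes chosen for illustration; anything else falls under "other".
    families = ("gpt-", "dall-e", "tts-", "whisper", "text-embedding")
    grouped = defaultdict(list)
    for model in models.get("data", []):
        model_id = model["id"]
        family = next((f for f in families if model_id.startswith(f)), "other")
        grouped[family].append(model_id)
    # Sort each group so the output is stable.
    return {family: sorted(ids) for family, ids in grouped.items()}


# Stand-in payload; in practice this would be the result of client.list_models().
sample = {"data": [{"id": "gpt-4o"}, {"id": "tts-1"}, {"id": "gpt-4"}, {"id": "whisper-1"}]}
print(group_model_ids(sample))
```

Passing the real `models` value instead of `sample` should work as long as the response keeps that shape.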

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
response = client.chat.completions.create(
    messages=[{"role": "user", "content": "Tell me a joke."}],
    model="gpt-4o"
)
print("ChatBot:", response.choices[0].message.content)

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
response = client.chat.completions.create(
    messages=[{
        "role": "user",
        "content": [
            {"type": "text", "text": "What's in this image?"},
            {
                "type": "image_url",
                "image_url": {
                    "url": "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg",
                }
            },
        ],
    }],
    model="gpt-4o-mini-2024-07-18"
)
print("Response:", response.choices[0].message.content)

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
completion_stream = client.chat.completions.create(
    messages=[{"role": "user", "content": "Write a short story in 3 sentences."}],
    model="gpt-4o-mini-2024-07-18",
    stream=True
)
for chunk in completion_stream:
    content = chunk.choices[0].delta.content
    if content:
        print(content, end='', flush=True)
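The streaming loop above prints each fragment as it arrives. If the full text is also needed afterwards, the fragments can be accumulated. This is a minimal sketch, assuming chunks expose `choices[0].delta.content` as in the example above. `collect_stream` and `fake_chunk` are hypothetical names, and the stand-in chunks replace a real network stream.

```python
from types import SimpleNamespace


def collect_stream(chunks) -> str:
    """Join the text fragments of a streamed completion (hypothetical helper)."""
    parts = []
    for chunk in chunks:
        # Skip chunks that carry no choices at all.
        if not chunk.choices:
            continue
        content = chunk.choices[0].delta.content
        # Some chunks carry no text (content is None or empty).
        if content:
            parts.append(content)
    return "".join(parts)


def fake_chunk(text):
    """Build a stand-in chunk with the attribute path used above."""
    return SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=text))])


demo_stream = [fake_chunk("Once "), fake_chunk(None), fake_chunk("upon a time.")]
full_text = collect_stream(demo_stream)
print(full_text)
```

With the SDK, `completion_stream` from the example above would be passed in place of `demo_stream`.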

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
audio_data = client.audio.create(
    input_text="This is a test of the TTS capabilities!",
    model="tts-1-hd",
    voice="nova"
)
with open("tts_output.mp3", "wb") as f:
    f.write(audio_data)
print("TTS Audio saved as tts_output.mp3")

from base64 import b64decode

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
response = client.chat.completions.create(
    messages=[{"role": "user", "content": "Tell me a fun fact."}],
    model="gpt-4o-audio-preview",
    modalities=["text", "audio"],
    audio={"voice": "fable", "format": "wav"}
)
message = response.choices[0].message
print("Text Response:", message.content)

# Save the audio only if the response actually contains it
if message.audio and 'data' in message.audio:
    with open("audio_preview.wav", "wb") as f:
        f.write(b64decode(message.audio['data']))
    print("Audio saved as audio_preview.wav")

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
response = client.image.create(
    prompt="A futuristic cityscape at sunset",
    model="dall-e-3",
    size="1024x1024"
)
print("Image URL:", response.data[0].url)

from openai_unofficial import OpenAIUnofficial

client = OpenAIUnofficial()
with open("speech.mp3", "rb") as audio_file:
    transcription = client.audio.transcribe(
        file=audio_file,
        model="whisper-1"
    )
print("Transcription:", transcription.text)

The SDK supports OpenAI's function calling capabilities, allowing you to define and use tools/functions in your conversations. Here are examples of function calling & tool usage:

⚠️ Important Note: In the current version (0.1.2), complex or multiple function calling is not yet fully supported. The SDK currently supports basic function calling capabilities. Support for multiple function calls and more complex tool usage patterns will be added in upcoming releases.

from openai_unofficial import OpenAIUnofficial
import json

client = OpenAIUnofficial()

# Define your functions as tools
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g., San Francisco, CA"
                    },
                    "unit": {
                        "type": "string",
                        "enum": ["celsius", "fahrenheit"],
                        "description": "The temperature unit"
                    }
                },
                "required": ["location"]
            }
        }
    }
]

# Function to actually get weather data
def get_current_weather(location: str, unit: str = "celsius") -> str:
    # This is a mock function - replace with actual weather API call
    return f"The current weather in {location} is 22°{unit[0].upper()}"

# Initial conversation message
messages = [
    {"role": "user", "content": "What's the weather like in London?"}
]

# First API call to get function calling response
response = client.chat.completions.create(
    model="gpt-4o-mini-2024-07-18",
    messages=messages,
    tools=tools,
    tool_choice="auto"
)

# Get the assistant's message
assistant_message = response.choices[0].message
messages.append(assistant_message.to_dict())

# Check if the model wants to call a function
if assistant_message.tool_calls:
    # Process each tool call
    for tool_call in assistant_message.tool_calls:
        function_name = tool_call.function.name
        function_args = json.loads(tool_call.function.arguments)

        # Call the function and get the result
        function_response = get_current_weather(**function_args)

        # Append the function response to messages
        messages.append({
            "role": "tool",
            "tool_call_id": tool_call.id,
            "name": function_name,
            "content": function_response
        })

    # Get the final response from the model
    final_response = client.chat.completions.create(
        model="gpt-4o-mini-2024-07-18",
        messages=messages
    )
    print("Final Response:", final_response.choices[0].message.content)
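The example above calls `get_current_weather` directly, whatever function name the model returned. With more than one tool defined, a lookup by name is one way to route each call. This is a minimal sketch of that idea in plain Python, not something the SDK provides. `AVAILABLE_FUNCTIONS` and `run_tool_call` are hypothetical names, and returning error strings is just one possible choice.

```python
import json


def get_current_weather(location: str, unit: str = "celsius") -> str:
    # Mock function, same as in the example above.
    return f"The current weather in {location} is 22°{unit[0].upper()}"


# Hypothetical registry mapping tool names to local Python callables.
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Look up a tool by name and call it with JSON-encoded arguments."""
    func = AVAILABLE_FUNCTIONS.get(name)
    if func is None:
        return f"Error: unknown function {name!r}"
    try:
        kwargs = json.loads(arguments_json)
        return func(**kwargs)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON, or arguments that do not match the signature.
        return f"Error: invalid arguments for {name!r}: {exc}"


print(run_tool_call("get_current_weather", '{"location": "London"}'))
```

Inside the tool-call loop, `run_tool_call(tool_call.function.name, tool_call.function.arguments)` could then stand in for the direct call, so the model receives an error message as the tool result instead of the script raising.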

Contributions are welcome! Please follow these steps:

1. Create a new branch: git checkout -b feature/my-feature
2. Commit your changes: git commit -am 'Add new feature'
3. Push to the branch: git push origin feature/my-feature

Please ensure your code adheres to the project's coding standards and passes all tests.

This project is licensed under the MIT License - see the LICENSE file for details.

Note: This SDK is unofficial and not affiliated with OpenAI.

If you encounter any issues or have suggestions, please open an issue on GitHub.

Here's a partial list of the models that the SDK currently supports. For the complete list, check the /models endpoint:

Chat Models:

gpt-4, gpt-4-turbo, gpt-4o, gpt-4o-mini, gpt-3.5-turbo, gpt-3.5-turbo-16k, gpt-3.5-turbo-instruct, gpt-4o-realtime-preview, gpt-4o-audio-preview

Image Generation Models:

dall-e-2, dall-e-3

Text-to-Speech (TTS) Models:

tts-1, tts-1-hd, tts-1-1106, tts-1-hd-1106

Audio Models:

whisper-1

Embedding Models:

text-embedding-ada-002, text-embedding-3-small, text-embedding-3-large

FAQs


We found that openai-unofficial demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.

Product

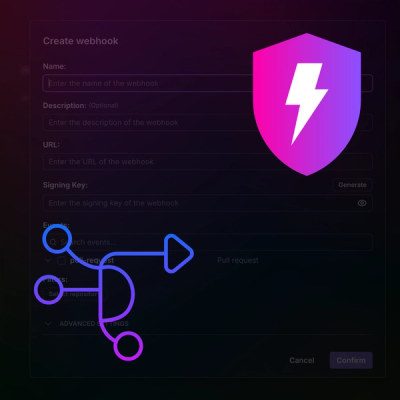

Add real-time Socket webhook events to your workflows to automatically receive pull request scan results and security alerts in real time.

Research

The Socket Threat Research Team uncovered malicious NuGet packages typosquatting the popular Nethereum project to steal wallet keys.