
pandas-dataset-handler


A tool to process and export datasets in various formats including ORC, Parquet, XML, JSON, HTML, CSV, HDF5, XLSX and Markdown.

pandas-dataset-handler is a Python package whose PandasDatasetHandler class provides utility functions for loading, saving, and processing datasets as pandas DataFrames. It supports multiple file formats for reading and writing, and it can partition a dataset into smaller chunks.

To install the package, you can use pip:

pip install pandas-dataset-handler

The table below maps each supported file type to the value passed as the file_format argument (for example, to save_dataset).

| File Type | file_format Value |
|---|---|
| CSV | 'csv' |
| JSON | 'json' |
| Parquet | 'parquet' |
| ORC | 'orc' |
| XML | 'xml' |
| HTML | 'html' |
| HDF5 | 'hdf5' |
| XLSX | 'xlsx' |
| Markdown | 'md' |
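For orientation, each file_format value in the table lines up with a pandas DataFrame writer method. The mapping below is a sketch of those plain-pandas equivalents; the names PANDAS_WRITERS and writer_for are hypothetical, and the mapping is an assumption for illustration, not a description of what PandasDatasetHandler calls internally. Several writers need optional dependencies (pyarrow for Parquet and ORC, lxml for XML by default, PyTables for HDF5, openpyxl for XLSX, tabulate for Markdown).

```python
import pandas as pd

# Hypothetical mapping: table `file_format` value -> plain-pandas DataFrame writer.
# Illustrative only; the package's internals may differ.
PANDAS_WRITERS = {
    "csv": "to_csv",
    "json": "to_json",
    "parquet": "to_parquet",  # requires pyarrow or fastparquet
    "orc": "to_orc",          # requires pyarrow; pandas >= 1.5
    "xml": "to_xml",          # uses lxml by default; pandas >= 1.3
    "html": "to_html",
    "hdf5": "to_hdf",         # requires PyTables
    "xlsx": "to_excel",       # requires openpyxl
    "md": "to_markdown",      # requires tabulate
}


def writer_for(file_format: str) -> str:
    """Return the DataFrame writer method name for a `file_format` value from the table."""
    try:
        return PANDAS_WRITERS[file_format]
    except KeyError:
        supported = ", ".join(sorted(PANDAS_WRITERS))
        raise ValueError(
            f"Unsupported file_format {file_format!r}; expected one of: {supported}"
        ) from None


print(writer_for("xlsx"))  # to_excel
```

Note that the values are lowercase strings such as 'hdf5' and 'md', not the display names in the first column.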

import pandas as pd

from pandas_dataset_handler import PandasDatasetHandler

You can load a dataset using the load_dataset method. It will automatically detect the file format based on the extension.

dataset = PandasDatasetHandler.load_dataset('path/to/your/file.csv')
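The README states only that load_dataset detects the format from the file extension. As a rough mental model, extension-based detection can be sketched with plain pandas readers. The names READERS and load_by_extension are hypothetical, and this is not the package's implementation. pandas has no Markdown reader, so this sketch rejects .md files, and because read_html returns a list of tables, the first table is taken.

```python
import os

import pandas as pd

# Hypothetical dispatch table: file extension -> pandas reader.
# Illustrative only; PandasDatasetHandler's actual detection logic may differ.
READERS = {
    "csv": pd.read_csv,
    "json": pd.read_json,
    "parquet": pd.read_parquet,            # requires pyarrow or fastparquet
    "orc": pd.read_orc,                    # requires pyarrow; may not work on Windows
    "xml": pd.read_xml,                    # uses lxml by default
    "html": lambda p: pd.read_html(p)[0],  # read_html returns a list of tables
    "hdf5": pd.read_hdf,                   # requires PyTables
    "xlsx": pd.read_excel,                 # requires openpyxl
}


def load_by_extension(file_path: str) -> pd.DataFrame:
    """Choose a pandas reader from the (case-insensitive) file extension."""
    extension = os.path.splitext(file_path)[1].lstrip(".").lower()
    reader = READERS.get(extension)
    if reader is None:
        raise ValueError(f"No reader for extension {extension!r} in this sketch")
    return reader(file_path)
```

Usage mirrors the call above, for example load_by_extension('path/to/your/file.csv'). A dictionary keyed by extension keeps adding a format to a one-line change.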

To save a DataFrame in a specific file format, use the save_dataset method. Set action_type to 'write', and specify the output directory (path), the base filename (without extension), and the format (for example 'csv', 'json' or 'parquet').

PandasDatasetHandler.save_dataset(
    dataset=dataset,
    action_type='write',         # must be 'write' when saving
    file_format='csv',           # a value from the table above, e.g. 'csv', 'json', 'parquet'
    path='./output',             # directory where the file will be saved
    base_filename='output_file'  # file name without the extension
)
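To make the parameters concrete, here is a hypothetical stand-in for save_dataset written with plain pandas writers. The names WRITERS and save_sketch are assumptions for illustration; the sketch builds the file name as <path>/<base_filename>.<file_format>, which matches how the multi-format example in this README constructs its file locations, but it is not the package's code and it omits the action_type check. Writer options such as index=False are choices made here, not documented package behavior.

```python
import os

import pandas as pd

# Hypothetical stand-in for save_dataset using plain pandas writers.
# Not the package's implementation; several writers need optional dependencies.
WRITERS = {
    "csv": lambda df, p: df.to_csv(p, index=False),
    "json": lambda df, p: df.to_json(p, orient="records"),
    "parquet": lambda df, p: df.to_parquet(p, index=False),  # pyarrow or fastparquet
    "orc": lambda df, p: df.to_orc(p),                       # pyarrow, pandas >= 1.5
    "xml": lambda df, p: df.to_xml(p, index=False, parser="etree"),
    "html": lambda df, p: df.to_html(p, index=False),
    "hdf5": lambda df, p: df.to_hdf(p, key="data"),          # PyTables
    "xlsx": lambda df, p: df.to_excel(p, index=False),       # openpyxl
    "md": lambda df, p: df.to_markdown(p, index=False),      # tabulate
}


def save_sketch(df: pd.DataFrame, file_format: str, path: str, base_filename: str) -> str:
    """Write df to <path>/<base_filename>.<file_format> and return that file path."""
    if file_format not in WRITERS:
        raise ValueError(f"Unsupported file_format {file_format!r}")
    os.makedirs(path, exist_ok=True)  # create the output directory if it is missing
    file_path = os.path.join(path, f"{base_filename}.{file_format}")
    WRITERS[file_format](df, file_path)
    return file_path
```

Calling save_sketch(dataset, 'csv', './output', 'output_file') would write ./output/output_file.csv.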

You can partition a dataset into smaller DataFrames for distributed processing or other use cases:

partitions = PandasDatasetHandler.generate_partitioned_datasets(dataset, num_parts=5)
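The README does not say how generate_partitioned_datasets divides the rows. One plausible approach, sketched below under the hypothetical name split_into_parts, assigns contiguous row ranges whose sizes differ by at most one. Treat it as an assumption, not the package's algorithm.

```python
import pandas as pd


def split_into_parts(df: pd.DataFrame, num_parts: int) -> list[pd.DataFrame]:
    """Split df into num_parts contiguous chunks whose sizes differ by at most one row."""
    if num_parts < 1:
        raise ValueError("num_parts must be at least 1")
    base, extra = divmod(len(df), num_parts)
    parts, start = [], 0
    for i in range(num_parts):
        # The first `extra` chunks each take one additional row.
        stop = start + base + (1 if i < extra else 0)
        parts.append(df.iloc[start:stop])
        start = stop
    return parts


df = pd.DataFrame({"value": range(10)})
print([len(part) for part in split_into_parts(df, 3)])  # [4, 3, 3]
```

Slicing with iloc keeps the original row labels, so concatenating the parts reproduces the input; if num_parts exceeds the row count, the trailing parts are simply empty.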

import pandas as pd

from pandas_dataset_handler import PandasDatasetHandler

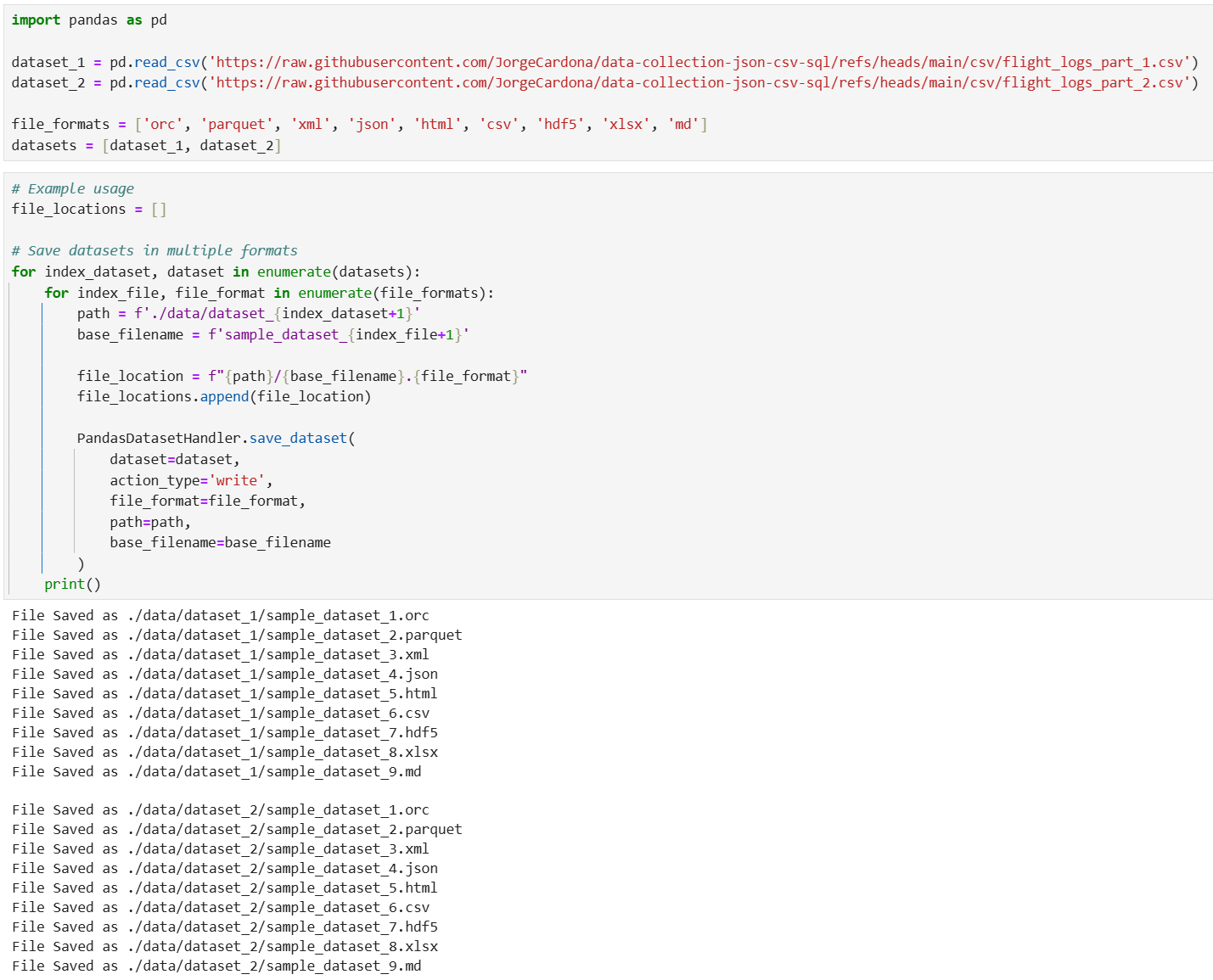

dataset_1 = pd.read_csv('https://raw.githubusercontent.com/JorgeCardona/data-collection-json-csv-sql/refs/heads/main/csv/flight_logs_part_1.csv')

dataset_2 = pd.read_csv('https://raw.githubusercontent.com/JorgeCardona/data-collection-json-csv-sql/refs/heads/main/csv/flight_logs_part_2.csv')

file_formats = ['orc', 'parquet', 'xml', 'json', 'html', 'csv', 'hdf5', 'xlsx', 'md']

datasets = [dataset_1, dataset_2]

# Example usage

file_locations = []

# Save datasets in multiple formats
for index_dataset, dataset in enumerate(datasets):
    for index_file, file_format in enumerate(file_formats):
        path = f'./data/dataset_{index_dataset+1}'
        base_filename = f'sample_dataset_{index_file+1}'
        file_location = f"{path}/{base_filename}.{file_format}"
        file_locations.append(file_location)

        PandasDatasetHandler.save_dataset(
            dataset=dataset,
            action_type='write',
            file_format=file_format,
            path=path,
            base_filename=base_filename
        )

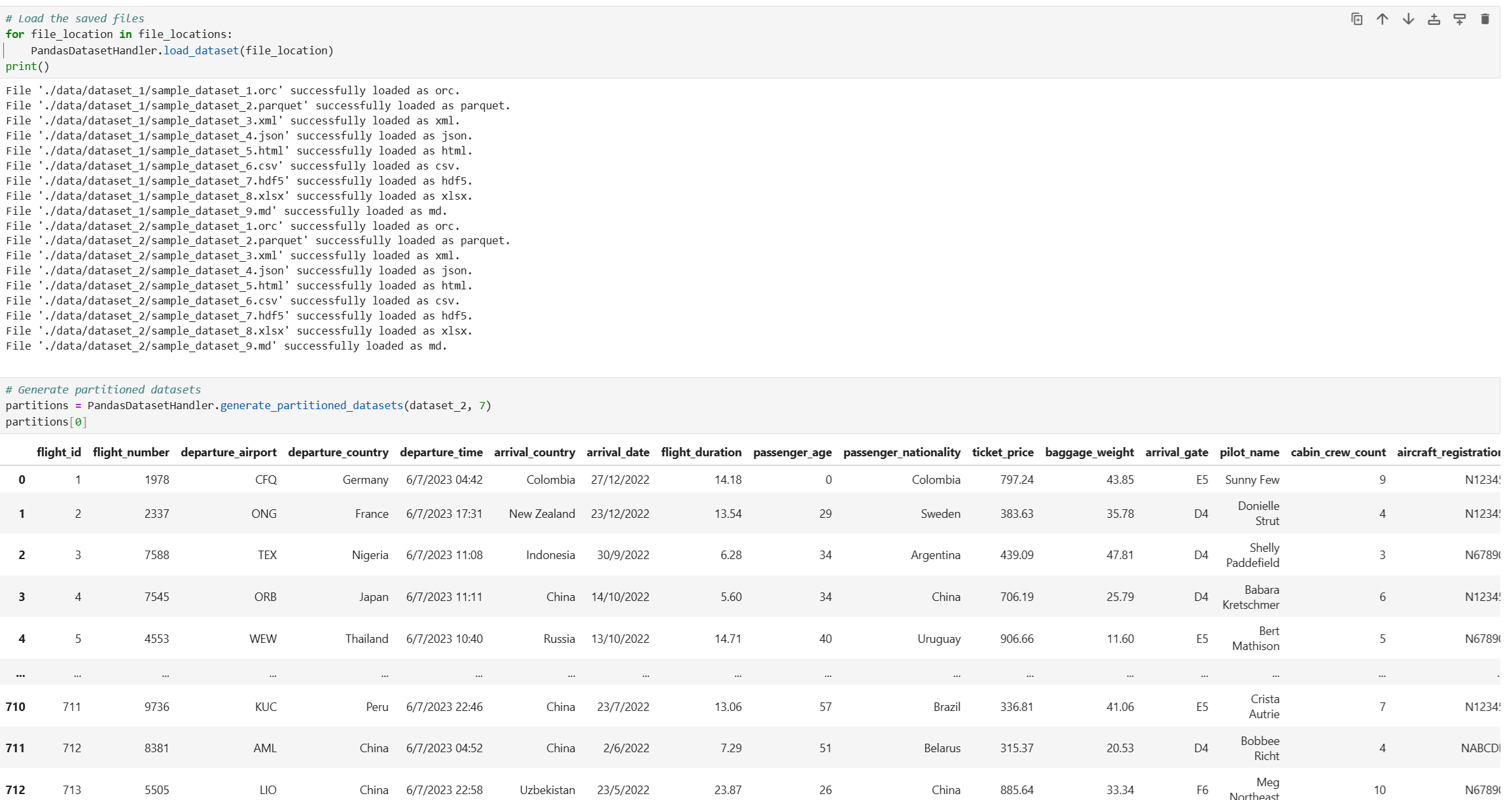

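# Load the saved files and keep the resulting DataFrames
# (reading the .orc files fails on Windows, where read_orc() is not compatible)
loaded_datasets = []
for file_location in file_locations:
    loaded_datasets.append(PandasDatasetHandler.load_dataset(file_location))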

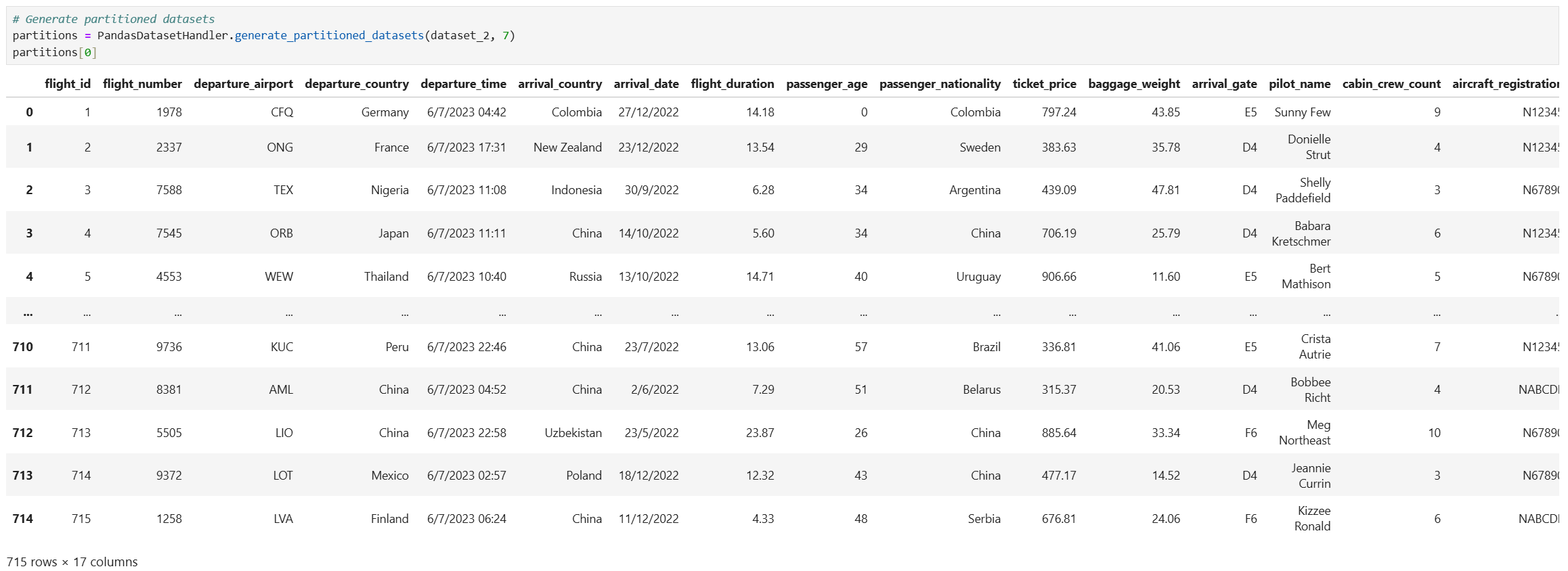

# Generate partitioned datasets

partitions = PandasDatasetHandler.generate_partitioned_datasets(dataset_2, 7)

partitions[0]

Note: read_orc() is not compatible with Windows, so ORC files may fail to load there.

The package raises custom exceptions for different error scenarios:

- IncompatibleActionError: Raised when the specified action is not supported (e.g., trying to read a dataset when an action to write is expected).
- IncompatibleFormatError: Raised when the file format is not supported.
- IncompatibleProcessingError: Raised when neither the action nor the format is supported for processing.
- SaveDatasetError: Raised when an error occurs while saving a dataset in a specific format.
- LoadDatasetError: Raised when an error occurs while loading a file in a specific format.

# The exception classes must be imported from the package before use;
# their exact module path is not shown in this README.
try:
    PandasDatasetHandler.save_dataset(dataset, 'write', 'xml', './output', 'example')
except SaveDatasetError as e:
    print(f"Error saving the dataset: {e}")
except IncompatibleFormatError as e:
    print(f"Unsupported format: {e}")
except IncompatibleActionError as e:
    print(f"Unsupported action: {e}")
except IncompatibleProcessingError as e:
    print(f"Processing not supported: {e}")
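The README describes when each validation error is raised but not how the checks are ordered. The sketch below reproduces the documented rules for a save request using local stand-in classes; UnsupportedActionError, UnsupportedFormatError, UnsupportedProcessingError, DatasetSketchError and check_save_request are hypothetical names, deliberately different from the package's own classes. It also illustrates a common design choice, a shared base class that lets a caller catch every case with one except clause; whether the package's exceptions share a base class is not stated.

```python
# Format values taken from the README's file-format table.
SUPPORTED_FORMATS = {"csv", "json", "parquet", "orc", "xml", "html", "hdf5", "xlsx", "md"}


class DatasetSketchError(Exception):
    """Hypothetical common base class for the stand-in errors below."""


class UnsupportedActionError(DatasetSketchError):
    """Stand-in for IncompatibleActionError (bad action only)."""


class UnsupportedFormatError(DatasetSketchError):
    """Stand-in for IncompatibleFormatError (bad format only)."""


class UnsupportedProcessingError(DatasetSketchError):
    """Stand-in for IncompatibleProcessingError (both action and format bad)."""


def check_save_request(action_type: str, file_format: str) -> None:
    """Raise the stand-in error that matches the documented rules for a save request."""
    action_ok = action_type == "write"
    format_ok = file_format in SUPPORTED_FORMATS
    if not action_ok and not format_ok:
        raise UnsupportedProcessingError(
            f"action {action_type!r} and format {file_format!r} are both unsupported"
        )
    if not action_ok:
        raise UnsupportedActionError(f"expected action 'write', got {action_type!r}")
    if not format_ok:
        raise UnsupportedFormatError(f"unsupported format {file_format!r}")


try:
    check_save_request("read", "xml")
except DatasetSketchError as e:  # one clause catches every stand-in error
    print(f"{type(e).__name__}: {e}")
```

Checking the combined case first ensures a request that is wrong in both respects is reported as a processing error rather than as whichever single check happens to run first.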

This package is licensed under the MIT License. See the LICENSE file for more details.


We found that pandas-dataset-handler demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.
