Product

Introducing Socket Firewall Enterprise: Flexible, Configurable Protection for Modern Package Ecosystems

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

pyspdbutils

Advanced tools

Production-grade database utilities with universal interface and hash collision detection

A production-grade database utilities package that provides a unified interface for working with multiple database types. Built with enterprise requirements in mind, it includes hash collision detection, automatic schema validation, and robust error handling.

👋 New to db_utils? Start here:

🔧 For developers:

pip install pyspdbutils

Install with specific database support:

# PostgreSQL

pip install pyspdbutils[postgresql]

# MySQL

pip install pyspdbutils[mysql]

# SQL Server

pip install pyspdbutils[sqlserver]

# Oracle

pip install pyspdbutils[oracle]

# Snowflake

pip install pyspdbutils[snowflake]

# Teradata

pip install pyspdbutils[teradata]

# Databricks

pip install pyspdbutils[databricks]

# MariaDB

pip install pyspdbutils[mariadb]

# Azure SQL

pip install pyspdbutils[azuresql]

# All databases

pip install pyspdbutils[all]

from db_utils import DBManager, DBConfig

from db_utils.interfaces import SQLiteInterface

# Initialize database interface

interface = SQLiteInterface("example.db")

# Create manager with hash collision detection

manager = DBManager(interface, hash_columns=["id", "email"])

# Create table

schema = {

"id": "INTEGER PRIMARY KEY",

"name": "VARCHAR(100)",

"email": "VARCHAR(255) UNIQUE",

"created_at": "TIMESTAMP DEFAULT CURRENT_TIMESTAMP"

}

manager.create_table("users", schema)

# Insert data with automatic duplicate detection

user_data = {"id": 1, "name": "John Doe", "email": "john@example.com"}

result = manager.insert("users", user_data)

if result == "duplicate":

print("User already exists!")

else:

print("User created successfully!")

# Query data

users = manager.select("users", conditions={"name": "John Doe"})

print(f"Found {len(users)} users")

from db_utils import DBManager, DBConfig

from db_utils.interfaces import PostgreSQLInterface

# Initialize PostgreSQL interface

interface = PostgreSQLInterface(

host="localhost",

port=5432,

user="username",

password="password",

database="mydb"

)

# Or use environment variables with DBConfig

config = DBConfig.from_env("postgresql")

from db_utils.interfaces import SQLAlchemyInterface

interface = SQLAlchemyInterface(config.conn_str)

manager = DBManager(interface)

# Rest of the code is the same...

Create a .env file:

POSTGRESQL_HOST=localhost

POSTGRESQL_PORT=5432

POSTGRESQL_USER=myuser

POSTGRESQL_PASSWORD=mypassword

POSTGRESQL_DATABASE=mydatabase

MYSQL_HOST=localhost

MYSQL_USER=root

MYSQL_PASSWORD=password

MYSQL_DATABASE=testdb

SNOWFLAKE_ACCOUNT=your-account

SNOWFLAKE_USER=your-user

SNOWFLAKE_PASSWORD=your-password

SNOWFLAKE_WAREHOUSE=your-warehouse

SNOWFLAKE_DATABASE=your-database

SNOWFLAKE_SCHEMA=your-schema

Then use DBConfig:

from db_utils import DBConfig

from db_utils.interfaces import SQLAlchemyInterface

# Load configuration from environment

config = DBConfig.from_env("postgresql")

interface = SQLAlchemyInterface(config.connection_string)

# Configure hash columns for duplicate detection

manager = DBManager(interface, hash_columns=["email", "phone"])

# Insert will automatically check for duplicates

data = {"name": "Jane Doe", "email": "jane@example.com", "phone": "+1234567890"}

result = manager.insert("users", data, skip_duplicates=True)

if result == "duplicate":

print("Record with same email/phone already exists")

# Using context manager for automatic transaction handling

with interface:

manager.insert("users", user1_data)

manager.insert("users", user2_data)

# Automatically commits on success, rolls back on error

# Create complex table with constraints

schema = {

"id": "SERIAL PRIMARY KEY",

"username": "VARCHAR(50) UNIQUE NOT NULL",

"email": "VARCHAR(100) UNIQUE NOT NULL",

"password_hash": "VARCHAR(255) NOT NULL",

"is_active": "BOOLEAN DEFAULT TRUE",

"created_at": "TIMESTAMP DEFAULT CURRENT_TIMESTAMP",

"updated_at": "TIMESTAMP DEFAULT CURRENT_TIMESTAMP"

}

manager.create_table("users", schema)

# Add indexes (database-specific)

if hasattr(interface, 'execute_query'):

interface.execute_query("CREATE INDEX idx_users_email ON users(email)")

# Bulk insert with duplicate checking

users_data = [

{"name": "User 1", "email": "user1@example.com"},

{"name": "User 2", "email": "user2@example.com"},

{"name": "User 3", "email": "user3@example.com"},

]

inserted_count = 0

duplicate_count = 0

for user_data in users_data:

result = manager.insert("users", user_data)

if result == "duplicate":

duplicate_count += 1

else:

inserted_count += 1

print(f"Inserted: {inserted_count}, Duplicates: {duplicate_count}")

from db_utils import QueryBuilder

# Custom query building

builder = QueryBuilder("postgresql")

# Build complex SELECT query

query, params = builder.build_query_params(

"SELECT",

"users",

columns=["id", "name", "email"],

conditions={"is_active": True, "created_at": "2024-01-01"},

limit=10,

offset=20

)

results = interface.execute_query(str(query), params, fetch="all")

The package includes comprehensive error handling:

from db_utils.exception import (

DBOperationError,

ValidationError,

ConnectionError,

ConfigurationError

)

try:

manager.insert("users", invalid_data)

except ValidationError as e:

print(f"Data validation failed: {e}")

except DBOperationError as e:

print(f"Database operation failed: {e}")

except ConnectionError as e:

print(f"Database connection failed: {e}")

Direct instantiation:

config = DBConfig("postgresql", host="localhost", user="user", password="pass")

Environment variables:

config = DBConfig.from_env("postgresql")

Mixed approach:

config = DBConfig("postgresql", host="custom-host") # Other params from env

config = DBConfig("snowflake",

account="your-account",

user="username",

password="password",

warehouse="compute_wh",

database="analytics",

schema="public",

role="analyst"

)

config = DBConfig("databricks",

host="your-workspace.cloud.databricks.com",

password="your-token", # Personal access token

database="/your/database/path"

)

Run the test suite:

# Install development dependencies

pip install production-db-utils[dev]

# Run tests

pytest

# Run with coverage

pytest --cov=db_utils --cov-report=html

git checkout -b feature/amazing-feature)pytest)git commit -m 'Add amazing feature')git push origin feature/amazing-feature)This project is licensed under the MIT License - see the LICENSE file for details.

FAQs

Production-grade database utilities with universal interface and hash collision detection

We found that pyspdbutils demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

Security News

Open source dashboard CNAPulse tracks CVE Numbering Authorities’ publishing activity, highlighting trends and transparency across the CVE ecosystem.

Product

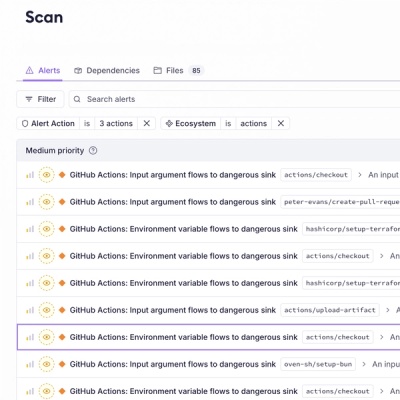

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.