
@dmitrysoshnikov/lex-js


Lexer generator from RegExp spec.

The tool can be installed as an npm module:

npm install -g @dmitrysoshnikov/lex-js

lex-js --help

For development, clone your fork of the repository, install the dependencies, and make sure npm test passes (add new tests if needed):

git clone https://github.com/<your-github-account>/lex-js.git

cd lex-js

npm install

npm test

./bin/lex-js --help

The module allows creating tokenizers from RegExp specs at runtime:

const {Tokenizer} = require('@dmitrysoshnikov/lex-js');

/**
 * Create a new tokenizer from spec.
 */
const tokenizer = Tokenizer.fromSpec([
  [/\s+/, v => 'WS'],
  [/\d+/, v => 'NUMBER'],
  [/\w+/, v => 'WORD'],
]);

tokenizer.init('Score 255');

console.log(tokenizer.getAllTokens());

/*
  Result:

  [
    {type: 'WORD', value: 'Score'},
    {type: 'WS', value: ' '},
    {type: 'NUMBER', value: '255'},
  ]
*/
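The following minimal, self-contained sketch shows what a tokenizer built from such a spec does. It is not lex-js's implementation. The name createTokenizer is hypothetical, and the matching strategy is an assumption: rules are tried in the order listed, and the first one that matches at the current position wins. Each rule's RegExp is recompiled with the sticky flag y, so it can only match exactly at the current position.

```javascript
// Minimal sketch of a RegExp-spec tokenizer (hypothetical; not lex-js's source).
// Assumption: rules are tried in order and the first match at the cursor wins.
function createTokenizer(rules) {
  // Recompile each pattern with the sticky flag so it only matches at lastIndex.
  const compiled = rules.map(([re, handler]) => [
    new RegExp(re.source, re.flags.replace('y', '') + 'y'),
    handler,
  ]);
  let input = '';
  let cursor = 0;

  return {
    init(string) {
      input = string;
      cursor = 0;
    },
    hasMoreTokens() {
      return cursor < input.length;
    },
    getNextToken() {
      for (const [re, handler] of compiled) {
        re.lastIndex = cursor;
        const match = re.exec(input);
        if (match !== null && match[0].length > 0) {
          cursor += match[0].length;
          // The handler receives the matched text and returns the token type.
          return {type: handler(match[0]), value: match[0]};
        }
      }
      throw new SyntaxError(`Unexpected token at offset ${cursor}`);
    },
    getAllTokens() {
      const tokens = [];
      while (this.hasMoreTokens()) {
        tokens.push(this.getNextToken());
      }
      return tokens;
    },
  };
}

const tokenizer = createTokenizer([
  [/\s+/, v => 'WS'],
  [/\d+/, v => 'NUMBER'],
  [/\w+/, v => 'WORD'],
]);
tokenizer.init('Score 255');
console.log(tokenizer.getAllTokens());
// Tokens: WORD "Score", WS " ", NUMBER "255"
```

The sticky flag avoids slicing the remaining input for every token. Without it, each rule would need a ^-anchored pattern applied to a fresh substring.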

The CLI allows generating a tokenizer module from the spec file.

Example ~/spec.lex:

{
  rules: [
    [/\s+/, v => 'WS'],
    [/\d+/, v => 'NUMBER'],
    [/\w+/, v => 'WORD'],
  ],

  options: {
    captureLocations: false,
  },
}

To generate the tokenizer module:

lex-js --spec ~/spec.lex --output ./lexer.js

✓ Successfully generated: ~/lexer.js

The generated file ./lexer.js contains the tokenizer module, which can be required in a Node.js application:

const lexer = require('./lexer');

lexer.init('Score 255');

console.log(lexer.getAllTokens());

/*
  Result:

  [
    {type: 'WORD', value: 'Score'},
    {type: 'WS', value: ' '},
    {type: 'NUMBER', value: '255'},
  ]
*/
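The JavaScript spec format can be turned into a module because RegExp and function values convert back to source text via toString(). The sketch below illustrates only that idea. serializeRules is a hypothetical helper, not the lex-js generator, and the module.exports layout it emits is an assumption.

```javascript
// Hypothetical sketch: serialize JS-style rules into module source text.
// Not lex-js's generator; it only shows that RegExp and function values
// round-trip to source code through toString().
function serializeRules(rules) {
  const lines = rules.map(
    ([re, handler]) => `  [${re.toString()}, ${handler.toString()}],`
  );
  return `module.exports = [\n${lines.join('\n')}\n];\n`;
}

const source = serializeRules([
  [/\s+/, v => 'WS'],
  [/\d+/, v => 'NUMBER'],
  [/\w+/, v => 'WORD'],
]);

console.log(source);
// module.exports = [
//   [/\s+/, v => 'WS'],
//   [/\d+/, v => 'NUMBER'],
//   [/\w+/, v => 'WORD'],
// ];
```

Under this approach, a handler that closes over variables defined elsewhere in the spec file would lose access to them once serialized. Handlers therefore need to be self-contained.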

The following methods are available on the Tokenizer class.

Tokenizer.fromSpec(spec)

Creates a new tokenizer from a spec:

const {Tokenizer} = require('@dmitrysoshnikov/lex-js');

/**
 * Create a new tokenizer from spec.
 */
const tokenizer = Tokenizer.fromSpec([
  [/\s+/, v => 'WS'],
  [/\d+/, v => 'NUMBER'],
  [/\w+/, v => 'WORD'],
]);

tokenizer.init('Score 255');

console.log(tokenizer.getAllTokens());

tokenizer.init(string, options = {})

Initializes the tokenizer instance with a string and parsing options:

tokenizer.init('Score 255', {captureLocations: true});

Note: initString is an alias of init, kept for compatibility with the tokenizer API of the Syntax tool.

tokenizer.reset()

Rewinds to the beginning of the string and resets the tokens.

tokenizer.hasMoreTokens()

Returns whether there are more tokens left to read.

tokenizer.getNextToken()

Returns the next token from the iterator.

tokenizer.tokens()

Returns an iterator over the tokens:

[...tokenizer.tokens()];

// Same as:
tokenizer.getAllTokens();

// Same as:
[...tokenizer];

// Iterate through the tokens:
for (const token of tokenizer.tokens()) {
  // Tokens are pulled lazily, one per iteration.
}
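The iteration behavior described above can be modeled with a generator method. In this hypothetical sketch, WordLexer is not part of lex-js. Its tokens() yields one token per hasMoreTokens()/getNextToken() step, and [Symbol.iterator] delegates to it so the object itself can be spread. A whitespace split stands in for real RegExp matching.

```javascript
// Hypothetical sketch (not lex-js's source): lazy token iteration built on
// hasMoreTokens()/getNextToken(). A whitespace split replaces real matching.
class WordLexer {
  init(string) {
    this._words = string.split(/\s+/).filter(Boolean);
    this.reset();
  }
  reset() {
    this._index = 0; // rewind to the first token
  }
  hasMoreTokens() {
    return this._index < this._words.length;
  }
  getNextToken() {
    return {type: 'WORD', value: this._words[this._index++]};
  }
  // Generator: each token is produced only when the consumer asks for it.
  *tokens() {
    while (this.hasMoreTokens()) {
      yield this.getNextToken();
    }
  }
  // Makes the lexer itself iterable, so [...lexer] works.
  [Symbol.iterator]() {
    return this.tokens();
  }
  getAllTokens() {
    return [...this.tokens()];
  }
}

const lexer = new WordLexer();
lexer.init('alpha beta');
for (const token of lexer.tokens()) {
  console.log(token.value); // "alpha", then "beta"
}
lexer.reset();
console.log([...lexer].length); // 2
```

In this sketch, iteration consumes tokens, so a second pass needs reset() first. The text above does not say whether lex-js behaves the same way.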

tokenizer.getAllTokens()

Returns all tokens as an array.

tokenizer.setOptions(options)

Sets the lexer options.

Supported options:

captureLocations (boolean): whether to capture token locations.

tokenizer.setOptions({captureLocations: true});

tokenizer.init('Score 250');

console.log(tokenizer.getNextToken());

/*
  Result:

  {
    type: 'WORD',
    value: 'Score',
    endColumn: 5,
    endLine: 1,
    endOffset: 5,
    startColumn: 0,
    startLine: 1,
    startOffset: 0,
  }
*/

The options can also be passed with each init call:

tokenizer.init('Score 250', {captureLocations: false});

console.log(tokenizer.getNextToken());

/*
  Result:

  {type: 'WORD', value: 'Score'}
*/
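The location fields can be derived from a token's start and end offsets in the input. computeLocation is a hypothetical helper, not lex-js code. It assumes 1-based lines, 0-based columns, and \n as the only line terminator. Under those assumptions it reproduces the values shown in the captureLocations example for 'Score'.

```javascript
// Hypothetical helper (not part of lex-js): compute location fields from
// start/end offsets. Assumes 1-based lines, 0-based columns, "\n" breaks.
function computeLocation(input, startOffset, endOffset) {
  const position = offset => {
    const before = input.slice(0, offset);
    const lastNewline = before.lastIndexOf('\n'); // -1 on the first line
    return {
      line: before.split('\n').length,
      column: offset - (lastNewline + 1),
    };
  };
  const start = position(startOffset);
  const end = position(endOffset);
  return {
    startOffset,
    endOffset,
    startLine: start.line,
    startColumn: start.column,
    endLine: end.line,
    endColumn: end.column,
  };
}

console.log(computeLocation('Score 250', 0, 5));
// startLine 1, startColumn 0, endLine 1, endColumn 5 (plus the two offsets)
```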

The tokenizer throws an "Unexpected token" SyntaxError if a token cannot be matched by any rule of the spec:

tokenizer.init('Score: 250');

tokenizer.getAllTokens();

/*
  Result:

  SyntaxError:

  Score: 250
       ^
  Unexpected token: ":" at 1:5
*/
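A message like the one above can be produced by finding the line that contains the offending offset and padding a caret out to its column. unexpectedToken is a hypothetical helper, and the exact message layout lex-js uses may differ from this sketch.

```javascript
// Hypothetical helper (not lex-js code): build an "Unexpected token"
// SyntaxError with a caret under the offending character.
// Assumes 1-based line numbers, 0-based columns, and "\n" line breaks.
function unexpectedToken(input, offset) {
  const before = input.slice(0, offset);
  const lineStart = before.lastIndexOf('\n') + 1; // 0 on the first line
  let lineEnd = input.indexOf('\n', offset);
  if (lineEnd === -1) lineEnd = input.length;

  const line = before.split('\n').length;
  const column = offset - lineStart;
  const message =
    `${input.slice(lineStart, lineEnd)}\n` +
    `${' '.repeat(column)}^\n` +
    `Unexpected token: "${input[offset]}" at ${line}:${column}`;
  return new SyntaxError(message);
}

console.log(unexpectedToken('Score: 250', 5).message);
// Score: 250
//      ^
// Unexpected token: ":" at 1:5
```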

See the examples for the different spec formats.

lex-js supports several spec formats. The first is a JavaScript spec whose rules pair a RegExp with a callback function:

{
  rules: [
    [/\s+/, v => 'WS'],
    [/\d+/, v => 'NUMBER'],
    [/\w+/, v => 'WORD'],
  ],

  options: {
    captureLocations: true,
  },
}

This format can also be shortened to contain only the rules:

[
  [/\s+/, v => 'WS'],
  [/\d+/, v => 'NUMBER'],
  [/\w+/, v => 'WORD'],
];

The advantage of this format is that the rules are actual regular expressions and the handlers are actual functions, so you control the name of the parameter (v above) that receives the matched token.
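Since both spec shapes carry the same rules, a consumer can normalize them up front. normalizeSpec is a hypothetical helper, not a lex-js export, and the empty default for options is an assumption. Because the rules hold real RegExp and function values, they can be tested and called directly.

```javascript
// Hypothetical helper (not lex-js's API): accept either the full spec
// ({rules, options}) or the short form (a bare array of rules).
// Assumption: missing options default to an empty object.
function normalizeSpec(spec) {
  if (Array.isArray(spec)) {
    return {rules: spec, options: {}};
  }
  return {rules: spec.rules, options: spec.options || {}};
}

const shortSpec = [
  [/\s+/, v => 'WS'],
  [/\d+/, v => 'NUMBER'],
];
const fullSpec = {rules: shortSpec, options: {captureLocations: true}};

// Rules are real values: the RegExp can be tested, the handler called.
const [re, handler] = normalizeSpec(shortSpec).rules[1];
console.log(re.test('255'), handler('255')); // true NUMBER
console.log(normalizeSpec(fullSpec).options); // { captureLocations: true }
```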

The JSON format of the Syntax tool is also supported:

{
  "rules": [
    ["\\s+", "return 'WS'"],
    ["\\d+", "return 'NUMBER'"],
    ["\\w+", "return 'WORD'"]
  ],

  "options": {
    "captureLocations": true
  }
}

In this case, an anonymous function is created from each handler string, and the matched token is passed to it as the yytext parameter.
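Converting the JSON format into runnable rules could look like the following sketch. compileJsonRules is hypothetical. It uses the RegExp constructor for the pattern strings and the Function constructor to build a function with a yytext parameter from each handler body. This is one plausible way to do what the paragraph above describes, not necessarily how lex-js does it.

```javascript
// Hypothetical sketch (not lex-js's source): compile JSON-format rules,
// where each rule is [patternString, handlerBodyString].
function compileJsonRules(jsonRules) {
  return jsonRules.map(([pattern, body]) => [
    new RegExp(pattern), // the JSON string "\\s+" becomes the RegExp /\s+/
    new Function('yytext', body), // a function of yytext running the body
  ]);
}

// String.raw keeps the JSON escapes exactly as they appear in a .json file.
const spec = JSON.parse(String.raw`{
  "rules": [
    ["\\s+", "return 'WS'"],
    ["\\d+", "return 'NUMBER'"],
    ["\\w+", "return 'WORD'"]
  ]
}`);

const rules = compileJsonRules(spec.rules);
console.log(rules[1][0].source, rules[1][1]('255')); // \d+ NUMBER
```

The Function constructor evaluates arbitrary code, so specs in this format should come only from trusted sources.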

The Yacc/Lex format is supported as well:

%%

\s+    return 'WS'
\d+    return 'NUMBER'
\w+    return 'WORD'
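The rules section of such a file can be read line by line. parseLexRules is a hypothetical, deliberately simplified parser. It assumes each rule is a pattern containing no whitespace characters, followed by whitespace and an action body. It ignores Lex features such as definitions, start conditions, and braced multi-line actions.

```javascript
// Hypothetical, simplified reader for the Lex-style rules section (not the
// lex-js parser). Assumes: rules start after a "%%" line, each rule is
// "<pattern> <action>", and the pattern contains no whitespace characters.
function parseLexRules(text) {
  const lines = text.split('\n');
  const start = lines.findIndex(line => line.trim() === '%%');
  if (start === -1) {
    throw new SyntaxError('Missing "%%" separator');
  }
  const rules = [];
  for (const line of lines.slice(start + 1)) {
    const trimmed = line.trim();
    if (trimmed === '%%') break; // a second "%%" ends the rules section
    if (trimmed === '') continue; // skip blank lines
    // Split at the first run of whitespace: pattern, then action body.
    const match = /^(\S+)\s+(.+)$/.exec(trimmed);
    if (match === null) {
      throw new SyntaxError(`Cannot parse rule: ${trimmed}`);
    }
    rules.push([new RegExp(match[1]), new Function('yytext', match[2])]);
  }
  return rules;
}

const rules = parseLexRules(String.raw`%%
\s+    return 'WS'
\d+    return 'NUMBER'
\w+    return 'WORD'`);

console.log(rules.length, rules[1][1]('255')); // 3 NUMBER
```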

FAQs

Lexer generator from RegExp spec

The npm package @dmitrysoshnikov/lex-js receives a total of 230 weekly downloads. As such, @dmitrysoshnikov/lex-js popularity was classified as not popular.

We found that @dmitrysoshnikov/lex-js demonstrated a not healthy version release cadence and project activity because the last version was released a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

Security News

Open source dashboard CNAPulse tracks CVE Numbering Authorities’ publishing activity, highlighting trends and transparency across the CVE ecosystem.

Product

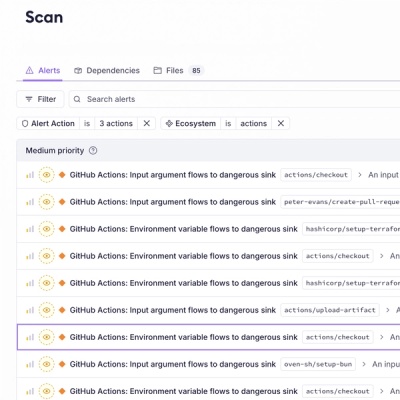

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.