Product

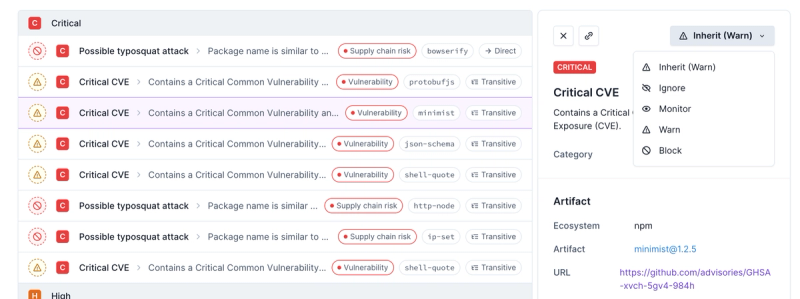

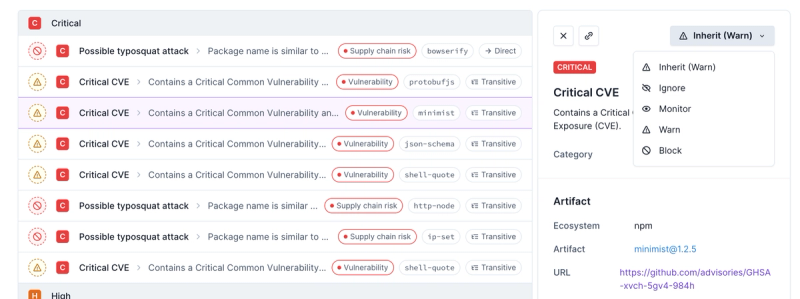

Introducing Enhanced Alert Actions and Triage Functionality

Socket now supports four distinct alert actions instead of the previous two, and alert triaging allows users to override the actions taken for all individual alerts.

@extractus/feed-extractor

To read & normalize RSS/ATOM/JSON feed data.

feed-extractor is part of a tool set for content builders.

You can use one or a combination of these tools to build news sites, create automated content systems for marketing campaigns, or gather datasets for NLP projects.

feed-reader was renamed to @extractus/feed-extractor as of v6.1.4.

    # npm
    npm i @extractus/feed-extractor

    # pnpm
    pnpm i @extractus/feed-extractor

    # yarn
    yarn add @extractus/feed-extractor

    // es6 module
    import { read } from '@extractus/feed-extractor'

    // CommonJS
    const { read } = require('@extractus/feed-extractor')

    // or specify the exact path to the CommonJS variant
    const { read } = require('@extractus/feed-extractor/dist/cjs/feed-extractor.js')

    // deno < 1.28
    import { read } from 'https://esm.sh/@extractus/feed-extractor'

    // deno >= 1.28
    import { read } from 'npm:@extractus/feed-extractor'

    // browser
    import { read } from 'https://unpkg.com/@extractus/feed-extractor@latest/dist/feed-extractor.esm.js'

Please check the examples for reference.

Deta developers, please refer to the source code and guideline here.

read()

Loads and extracts feed data from the given RSS/ATOM/JSON source. Returns a Promise object.

read(String url)

read(String url, Object options)

read(String url, Object options, Object fetchOptions)
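Since read() returns a Promise, callers usually want to decide what happens when a feed cannot be loaded or parsed. The sketch below is one way to do that, assuming such failures surface as a rejected Promise. `tryRead` is a hypothetical helper, not part of the package; it receives the reader function as an argument so the same wrapper works with `read` or with a stub.

```javascript
// Hypothetical helper, not part of @extractus/feed-extractor.
// `reader` is expected to be a function like `read` that returns a Promise.
const tryRead = async (reader, url) => {
  try {
    // Success: hand back the feed and no error.
    return { feed: await reader(url), error: null }
  } catch (error) {
    // Failure: hand back the error instead of rejecting.
    return { feed: null, error }
  }
}
```

With the package installed, this could be called as `const { feed, error } = await tryRead(read, 'https://news.google.com/atom')`.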

url (required)

URL of a valid feed source.

Feed content must be accessible and conform to one of the following standards: RSS, ATOM, or JSON Feed.

For example:

    import { read } from '@extractus/feed-extractor'

    const result = await read('https://news.google.com/atom')
    console.log(result)

Without any options, the result should have the following structure:

    {
      title: String,
      link: String,
      description: String,
      generator: String,
      language: String,
      published: ISO Date String,
      entries: Array[
        {
          title: String,
          link: String,
          description: String,
          published: ISO Datetime String
        },
        // ...
      ]
    }
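To illustrate how the normalized result might be consumed, here is a minimal sketch that keeps only entries published on or after a given date and orders them newest first. `entriesSince` and `sampleFeed` are hypothetical names, and the sample values are made up; only the field names come from the structure above.

```javascript
// Hypothetical helper, not part of the package.
// Works on an object shaped like the normalized result described above.
const entriesSince = (feed, since) => {
  const threshold = new Date(since).getTime()
  return (feed.entries || [])
    // entries with a missing or unparsable date are dropped (NaN >= n is false)
    .filter((entry) => new Date(entry.published).getTime() >= threshold)
    // filter() returned a new array, so sorting does not touch feed.entries
    .sort((a, b) => new Date(b.published) - new Date(a.published))
}

// Made-up sample data using the documented field names.
const sampleFeed = {
  title: 'Example feed',
  link: 'https://example.com/',
  entries: [
    { title: 'Old', link: 'https://example.com/old', description: '', published: '2023-01-01T00:00:00.000Z' },
    { title: 'Newest', link: 'https://example.com/newest', description: '', published: '2023-03-01T00:00:00.000Z' },
    { title: 'Newer', link: 'https://example.com/newer', description: '', published: '2023-02-01T00:00:00.000Z' }
  ]
}

const recent = entriesSince(sampleFeed, '2023-01-15T00:00:00.000Z')
console.log(recent.map((entry) => entry.title))
// → [ 'Newest', 'Newer' ]
```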

options (optional)

Object with all or several of the following properties:

- normalization: Boolean, normalize feed data or keep original. Default true.
- useISODateFormat: Boolean, convert datetime to ISO format. Default true.
- descriptionMaxLen: Number, to truncate description. Default 210 (characters).
- xmlParserOptions: Object, used by xml parser, view fast-xml-parser's docs
- getExtraFeedFields: Function, to get more fields from feed data
- getExtraEntryFields: Function, to get more fields from feed entry data

For example:

    import { read } from '@extractus/feed-extractor'

    // keep the original date strings instead of converting them to ISO format
    await read('https://news.google.com/atom', {
      useISODateFormat: false
    })

    await read('https://news.google.com/rss', {
      useISODateFormat: false,
      // add a field taken from the raw feed data
      getExtraFeedFields: (feedData) => {
        return {
          subtitle: feedData.subtitle || ''
        }
      },
      // add fields taken from each raw feed entry
      getExtraEntryFields: (feedEntry) => {
        const {
          enclosure,
          category
        } = feedEntry
        return {
          enclosure: {
            url: enclosure['@_url'],
            type: enclosure['@_type'],
            length: enclosure['@_length']
          },
          // a plain-text category is kept as-is; one with attributes is unpacked
          category: typeof category === 'string' ? category : {
            text: category['@_text'],
            domain: category['@_domain']
          }
        }
      }
    })
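Not every entry carries an enclosure or a category, and reading attributes from a missing element throws a TypeError. The following is a sketch of a more defensive callback for the getExtraEntryFields option. `pickExtraEntryFields` is a hypothetical name, and the `@_`-prefixed attribute keys are taken from the example above rather than verified against every feed.

```javascript
// Hypothetical callback for the getExtraEntryFields option.
// Only adds a field when the raw entry actually contains it.
const pickExtraEntryFields = (feedEntry) => {
  const { enclosure, category } = feedEntry
  const extra = {}

  if (enclosure) {
    extra.enclosure = {
      url: enclosure['@_url'],
      type: enclosure['@_type'],
      length: enclosure['@_length']
    }
  }

  if (typeof category === 'string') {
    // plain-text category
    extra.category = category
  } else if (category) {
    // category element with attributes
    extra.category = {
      text: category['@_text'],
      domain: category['@_domain']
    }
  }

  return extra
}
```

It would be passed as `read(url, { getExtraEntryFields: pickExtraEntryFields })`.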

fetchOptions (optional)

You can use this parameter to set the request headers used when fetching the feed.

For example:

    import { read } from '@extractus/feed-extractor'

    const url = 'https://news.google.com/rss'
    await read(url, null, {
      headers: {
        'user-agent': 'Opera/9.60 (Windows NT 6.0; U; en) Presto/2.1.1'
      }
    })

You can also specify a proxy endpoint to load remote content, instead of fetching directly.

For example:

    import { read } from '@extractus/feed-extractor'

    const url = 'https://news.google.com/rss'
    await read(url, null, {
      headers: {
        'user-agent': 'Opera/9.60 (Windows NT 6.0; U; en) Presto/2.1.1'
      },
      proxy: {
        target: 'https://your-secret-proxy.io/loadXml?url=',
        headers: {
          'Proxy-Authorization': 'Bearer YWxhZGRpbjpvcGVuc2VzYW1l...'
        }
      }
    })

Passing requests through a proxy is useful when running @extractus/feed-extractor in the browser. See examples/browser-feed-reader for a reference example.
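The `?url=` suffix on the proxy target suggests that the feed address is appended to it as a query value. The sketch below shows that composition and how a proxy endpoint could recover the original address. It assumes the feed URL is percent-encoded before being appended; `toProxyUrl` and `fromProxyUrl` are hypothetical helpers, not functions exported by the package.

```javascript
// Assumption: the request URL is the proxy target followed by the encoded feed URL.
const toProxyUrl = (target, feedUrl) => target + encodeURIComponent(feedUrl)

// What a proxy endpoint could do to recover the feed URL from the incoming request.
const fromProxyUrl = (requestUrl) => new URL(requestUrl).searchParams.get('url')

const proxied = toProxyUrl('https://your-secret-proxy.io/loadXml?url=', 'https://news.google.com/rss')
console.log(proxied)
// → https://your-secret-proxy.io/loadXml?url=https%3A%2F%2Fnews.google.com%2Frss

console.log(fromProxyUrl(proxied))
// → https://news.google.com/rss
```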

    git clone https://github.com/extractus/feed-extractor.git
    cd feed-extractor
    npm install
    node eval.js --url=https://news.google.com/rss --normalization=y --useISODateFormat=y --includeEntryContent=n --includeOptionalElements=n

The MIT License (MIT)
