@juspay/zephyr-mind

Production-ready AI toolkit with multi-provider support, automatic fallback, and full TypeScript integration.

Zephyr-Mind provides a unified interface for AI providers (OpenAI, Amazon Bedrock, Google Vertex AI) with intelligent fallback, streaming support, and type-safe APIs. Extracted from production use at Juspay.

npm install zephyr-mind ai @ai-sdk/amazon-bedrock @ai-sdk/openai @ai-sdk/google-vertex zod

import { createBestAIProvider } from 'zephyr-mind';

// Auto-selects best available provider

const provider = createBestAIProvider();

const result = await provider.generateText({

prompt: "Hello, AI!"

});

console.log(result.text);

🔄 Multi-Provider Support - OpenAI, Amazon Bedrock, Google Vertex AI

⚡ Automatic Fallback - Seamless provider switching on failures

📡 Streaming & Non-Streaming - Real-time responses and standard generation

🎯 TypeScript First - Full type safety and IntelliSense support

🛡️ Production Ready - Extracted from proven production systems

🔧 Zero Config - Works out of the box with environment variables

# npm

npm install zephyr-mind ai @ai-sdk/amazon-bedrock @ai-sdk/openai @ai-sdk/google-vertex zod

# yarn

yarn add zephyr-mind ai @ai-sdk/amazon-bedrock @ai-sdk/openai @ai-sdk/google-vertex zod

# pnpm (recommended)

pnpm add zephyr-mind ai @ai-sdk/amazon-bedrock @ai-sdk/openai @ai-sdk/google-vertex zod

# Choose one or more providers

export OPENAI_API_KEY="sk-your-openai-key"

export AWS_ACCESS_KEY_ID="your-aws-key"

export AWS_SECRET_ACCESS_KEY="your-aws-secret"

export GOOGLE_APPLICATION_CREDENTIALS="path/to/service-account.json"

import { createBestAIProvider } from 'zephyr-mind';

const provider = createBestAIProvider();

// Basic generation

const result = await provider.generateText({

prompt: "Explain TypeScript generics",

temperature: 0.7,

maxTokens: 500

});

console.log(result.text);

console.log(`Used: ${result.provider}`);

import { createBestAIProvider } from 'zephyr-mind';

const provider = createBestAIProvider();

const result = await provider.streamText({

prompt: "Write a story about AI",

temperature: 0.8,

maxTokens: 1000

});

// Handle streaming chunks

for await (const chunk of result.textStream) {

process.stdout.write(chunk);

}
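
When the full text is needed after streaming finishes (for logging or caching, say), the chunks can be gathered into one string. The following is a minimal sketch, not part of the library: `collectText` and `demoStream` are hypothetical names, and the only assumption about `result.textStream` is that it is an `AsyncIterable<string>`, as the `for await` loop above implies.

```typescript
// Hypothetical helper: gathers an async stream of text chunks into one string.
async function collectText(textStream: AsyncIterable<string>): Promise<string> {
  let full = "";
  for await (const chunk of textStream) {
    full += chunk;
  }
  return full;
}

// Stand-in stream for demonstration; a real call would pass result.textStream.
async function* demoStream(): AsyncGenerator<string> {
  yield "Hello, ";
  yield "world";
}
```

In an application this would be called as `await collectText(result.textStream)`.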

import { AIProviderFactory } from 'zephyr-mind';

// Use specific provider

const openai = AIProviderFactory.createProvider('openai', 'gpt-4o');

const bedrock = AIProviderFactory.createProvider('bedrock', 'claude-3-7-sonnet');

// With fallback

const { primary, fallback } = AIProviderFactory.createProviderWithFallback(

'bedrock', 'openai'

);

src/routes/api/chat/+server.ts

import { createBestAIProvider } from 'zephyr-mind';

import type { RequestHandler } from './$types';

export const POST: RequestHandler = async ({ request }) => {

try {

const { message } = await request.json();

const provider = createBestAIProvider();

const result = await provider.streamText({

prompt: message,

temperature: 0.7,

maxTokens: 1000

});

return new Response(result.toReadableStream(), {

headers: {

'Content-Type': 'text/plain; charset=utf-8',

'Cache-Control': 'no-cache'

}

});

} catch (error) {

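// A caught value is typed `unknown` under strict TypeScript, so narrow it first

const message = error instanceof Error ? error.message : String(error);

return new Response(JSON.stringify({ error: message }), {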

status: 500,

headers: { 'Content-Type': 'application/json' }

});

}

};

src/routes/chat/+page.svelte

<script lang="ts">

let message = '';

let response = '';

let isLoading = false;

async function sendMessage() {

if (!message.trim()) return;

isLoading = true;

response = '';

try {

const res = await fetch('/api/chat', {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ message })

});

if (!res.body) throw new Error('No response');

const reader = res.body.getReader();

const decoder = new TextDecoder();

while (true) {

const { done, value } = await reader.read();

if (done) break;

response += decoder.decode(value, { stream: true });

}

} catch (error) {

response = `Error: ${error instanceof Error ? error.message : String(error)}`;

} finally {

isLoading = false;

}

}

</script>

<div class="chat">

<input bind:value={message} placeholder="Ask something..." />

<button on:click={sendMessage} disabled={isLoading}>

{isLoading ? 'Sending...' : 'Send'}

</button>

{#if response}

<div class="response">{response}</div>

{/if}

</div>

app/api/ai/route.ts

import { createBestAIProvider } from 'zephyr-mind';

import { NextRequest, NextResponse } from 'next/server';

export async function POST(request: NextRequest) {

try {

const { prompt, ...options } = await request.json();

const provider = createBestAIProvider();

const result = await provider.generateText({

prompt,

temperature: 0.7,

maxTokens: 1000,

...options

});

return NextResponse.json({

text: result.text,

provider: result.provider,

usage: result.usage

});

} catch (error) {

return NextResponse.json(

{ error: error instanceof Error ? error.message : String(error) },

{ status: 500 }

);

}

}

components/AIChat.tsx

'use client';

import { useState } from 'react';

export default function AIChat() {

const [prompt, setPrompt] = useState('');

const [result, setResult] = useState<string>('');

const [loading, setLoading] = useState(false);

const generate = async () => {

if (!prompt.trim()) return;

setLoading(true);

try {

const response = await fetch('/api/ai', {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ prompt })

});

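const data = await response.json();

// The route returns { error } with status 500 on failure

if (!response.ok) throw new Error(data.error ?? 'Request failed');

setResult(data.text);

} catch (error) {

setResult(`Error: ${error instanceof Error ? error.message : String(error)}`);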

} finally {

setLoading(false);

}

};

return (

<div className="space-y-4">

<div className="flex gap-2">

<input

value={prompt}

onChange={(e) => setPrompt(e.target.value)}

placeholder="Enter your prompt..."

className="flex-1 p-2 border rounded"

/>

<button

onClick={generate}

disabled={loading}

className="px-4 py-2 bg-blue-500 text-white rounded disabled:opacity-50"

>

{loading ? 'Generating...' : 'Generate'}

</button>

</div>

{result && (

<div className="p-4 bg-gray-100 rounded">

{result}

</div>

)}

</div>

);

}

import express from 'express';

import { createBestAIProvider, AIProviderFactory } from 'zephyr-mind';

const app = express();

app.use(express.json());

// Simple generation endpoint

app.post('/api/generate', async (req, res) => {

try {

const { prompt, options = {} } = req.body;

const provider = createBestAIProvider();

const result = await provider.generateText({

prompt,

...options

});

res.json({

success: true,

text: result.text,

provider: result.provider

});

} catch (error) {

res.status(500).json({

success: false,

error: error.message

});

}

});

// Streaming endpoint

app.post('/api/stream', async (req, res) => {

try {

const { prompt } = req.body;

const provider = createBestAIProvider();

const result = await provider.streamText({ prompt });

res.setHeader('Content-Type', 'text/plain');

res.setHeader('Cache-Control', 'no-cache');

for await (const chunk of result.textStream) {

res.write(chunk);

}

res.end();

} catch (error) {

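// Once streaming has begun the headers are already sent, so a JSON error can no longer be returned

if (res.headersSent) {

res.end();

} else {

res.status(500).json({ error: error instanceof Error ? error.message : String(error) });

}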

}

});

app.listen(3000, () => {

console.log('Server running on http://localhost:3000');

});

import { useState, useCallback } from 'react';

interface AIOptions {

temperature?: number;

maxTokens?: number;

provider?: string;

}

export function useAI() {

const [loading, setLoading] = useState(false);

const [error, setError] = useState<string | null>(null);

const generate = useCallback(async (

prompt: string,

options: AIOptions = {}

) => {

setLoading(true);

setError(null);

try {

const response = await fetch('/api/ai', {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ prompt, ...options })

});

if (!response.ok) {

throw new Error(`Request failed: ${response.statusText}`);

}

const data = await response.json();

return data.text;

} catch (err) {

const message = err instanceof Error ? err.message : 'Unknown error';

setError(message);

return null;

} finally {

setLoading(false);

}

}, []);

return { generate, loading, error };

}

// Usage

function MyComponent() {

const { generate, loading, error } = useAI();

const handleClick = async () => {

const result = await generate("Explain React hooks", {

temperature: 0.7,

maxTokens: 500

});

console.log(result);

};

return (

<button onClick={handleClick} disabled={loading}>

{loading ? 'Generating...' : 'Generate'}

</button>

);

}

createBestAIProvider(requestedProvider?, modelName?)

Creates the best available AI provider based on environment configuration.

const provider = createBestAIProvider();

const provider = createBestAIProvider('openai'); // Prefer OpenAI

const provider = createBestAIProvider('bedrock', 'claude-3-7-sonnet');

createAIProviderWithFallback(primary, fallback, modelName?)

Creates a provider with automatic fallback.

const { primary, fallback } = createAIProviderWithFallback('bedrock', 'openai');

let result;

try {

result = await primary.generateText({ prompt });

} catch {

// Primary failed; retry the same prompt on the fallback provider

result = await fallback.generateText({ prompt });

}

createProvider(providerName, modelName?)

Creates a specific provider instance.

const openai = AIProviderFactory.createProvider('openai', 'gpt-4o');

const bedrock = AIProviderFactory.createProvider('bedrock', 'claude-3-7-sonnet');

const vertex = AIProviderFactory.createProvider('vertex', 'gemini-2.5-flash');

All providers implement the same interface:

interface AIProvider {

generateText(options: GenerateTextOptions): Promise<GenerateTextResult>;

streamText(options: StreamTextOptions): Promise<StreamTextResult>;

}

interface GenerateTextOptions {

prompt: string;

temperature?: number;

maxTokens?: number;

systemPrompt?: string;

}

interface GenerateTextResult {

text: string;

provider: string;

model: string;

usage?: {

promptTokens: number;

completionTokens: number;

totalTokens: number;

};

}
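
Because every provider shares this shape, application code can be unit-tested against a stand-in instead of a live API. The sketch below is an assumption-laden illustration rather than anything shipped with the library: `EchoProvider`, the `"mock"` provider name, and the `"echo-1"` model name are all hypothetical, and only `generateText` is stubbed because the stream types are not shown above.

```typescript
// Shapes copied from the interface listing above.
interface GenerateTextOptions {
  prompt: string;
  temperature?: number;
  maxTokens?: number;
  systemPrompt?: string;
}

interface GenerateTextResult {
  text: string;
  provider: string;
  model: string;
  usage?: { promptTokens: number; completionTokens: number; totalTokens: number };
}

// Hypothetical test double: records each call and echoes the prompt back.
class EchoProvider {
  readonly calls: GenerateTextOptions[] = [];

  async generateText(options: GenerateTextOptions): Promise<GenerateTextResult> {
    this.calls.push(options);
    return { text: `echo: ${options.prompt}`, provider: "mock", model: "echo-1" };
  }
}
```

A test can then pass an `EchoProvider` wherever a real provider would go and inspect `calls` to check which options were sent.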

OpenAI: gpt-4o (default), gpt-4o-mini, gpt-4-turbo

Amazon Bedrock: claude-3-7-sonnet (default), claude-3-5-sonnet, claude-3-haiku

Google Vertex AI: gemini-2.5-flash (default), claude-4.0-sonnet

export OPENAI_API_KEY="sk-your-key-here"

export AWS_ACCESS_KEY_ID="your-access-key"

export AWS_SECRET_ACCESS_KEY="your-secret-key"

export AWS_REGION="us-east-1"

export GOOGLE_APPLICATION_CREDENTIALS="/path/to/service-account.json"

export GOOGLE_VERTEX_PROJECT="your-project-id"

export GOOGLE_VERTEX_LOCATION="us-central1"

# Provider selection (optional)

AI_DEFAULT_PROVIDER="bedrock"

AI_FALLBACK_PROVIDER="openai"

# Debug mode

ZEPHYR_MIND_DEBUG="true"
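
Before starting a server it can help to check which providers have credentials set. The following sketch is one way to do that check in application code; it is not the library's own selection logic. `configuredProviders` and `REQUIRED_ENV` are hypothetical names, and the assumption that these variables are sufficient for each provider comes only from the export lines above.

```typescript
type ProviderName = "openai" | "bedrock" | "vertex";

// Variables taken from the export lines above; whether the library
// checks exactly these is an assumption.
const REQUIRED_ENV: Record<ProviderName, string[]> = {
  openai: ["OPENAI_API_KEY"],
  bedrock: ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"],
  vertex: ["GOOGLE_APPLICATION_CREDENTIALS"],
};

// Returns the providers whose required variables are all set and non-empty.
function configuredProviders(env: Record<string, string | undefined>): ProviderName[] {
  return (Object.keys(REQUIRED_ENV) as ProviderName[]).filter((name) =>
    REQUIRED_ENV[name].every((key) => Boolean(env[key]))
  );
}
```

In Node.js this would typically be called as `configuredProviders(process.env)`, logging a warning when the returned list is empty.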

import { AIProviderFactory } from 'zephyr-mind';

// Environment-based provider selection

const isDev = process.env.NODE_ENV === 'development';

const provider = isDev

? AIProviderFactory.createProvider('openai', 'gpt-4o-mini') // Cheaper for dev

: AIProviderFactory.createProvider('bedrock', 'claude-3-7-sonnet'); // Production

// Multiple providers for different use cases

const providers = {

creative: AIProviderFactory.createProvider('openai', 'gpt-4o'),

analytical: AIProviderFactory.createProvider('bedrock', 'claude-3-7-sonnet'),

fast: AIProviderFactory.createProvider('vertex', 'gemini-2.5-flash')

};

async function generateCreativeContent(prompt: string) {

return await providers.creative.generateText({

prompt,

temperature: 0.9,

maxTokens: 2000

});

}

import { createBestAIProvider } from 'zephyr-mind';

// In-memory cache keyed by normalized prompt

const cache = new Map<string, { text: string; timestamp: number }>();

const CACHE_DURATION = 5 * 60 * 1000; // 5 minutes

async function cachedGenerate(prompt: string) {

const key = prompt.toLowerCase().trim();

const cached = cache.get(key);

if (cached && Date.now() - cached.timestamp < CACHE_DURATION) {

return { ...cached, fromCache: true };

}

const provider = createBestAIProvider();

const result = await provider.generateText({ prompt });

cache.set(key, { text: result.text, timestamp: Date.now() });

return { text: result.text, fromCache: false };

}

async function processBatch(prompts: string[]) {

const provider = createBestAIProvider();

const chunkSize = 5;

const results = [];

for (let i = 0; i < prompts.length; i += chunkSize) {

const chunk = prompts.slice(i, i + chunkSize);

const chunkResults = await Promise.allSettled(

chunk.map(prompt => provider.generateText({ prompt, maxTokens: 500 }))

);

results.push(...chunkResults);

// Rate limiting

if (i + chunkSize < prompts.length) {

await new Promise(resolve => setTimeout(resolve, 1000));

}

}

return results.map((result, index) => ({

prompt: prompts[index],

success: result.status === 'fulfilled',

result: result.status === 'fulfilled' ? result.value : result.reason

}));

}
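
The entries returned by `processBatch` mix successes and failures, so callers usually want to separate them, for example to retry only the failed prompts. A minimal sketch follows; `BatchEntry` and `splitBatch` are hypothetical names, and the entry shape mirrors the object built in the final `map` above.

```typescript
// Mirrors the objects returned by processBatch above.
interface BatchEntry {
  prompt: string;
  success: boolean;
  result: unknown;
}

// Hypothetical helper: separates successful entries from the prompts that failed.
function splitBatch(entries: BatchEntry[]): { succeeded: BatchEntry[]; failedPrompts: string[] } {
  const succeeded = entries.filter((entry) => entry.success);
  const failedPrompts = entries.filter((entry) => !entry.success).map((entry) => entry.prompt);
  return { succeeded, failedPrompts };
}
```

The `failedPrompts` array can be fed straight back into `processBatch` for a second pass.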

ValidationException: Your account is not authorized to invoke this API operation.

bedrock:InvokeModel permissions

Error: Cannot find API key for OpenAI provider

Cannot find package '@google-cloud/vertexai' imported from...

npm install @google-cloud/vertexai

The security token included in the request is expired

import { createBestAIProvider } from 'zephyr-mind';

async function robustGenerate(prompt: string, maxRetries = 3) {

let attempt = 0;

while (attempt < maxRetries) {

try {

const provider = createBestAIProvider();

return await provider.generateText({ prompt });

} catch (error) {

attempt++;

const message = error instanceof Error ? error.message : String(error);

console.error(`Attempt ${attempt} failed:`, message);

if (attempt >= maxRetries) {

throw new Error(`Failed after ${maxRetries} attempts: ${message}`);

}

// Exponential backoff

await new Promise(resolve =>

setTimeout(resolve, Math.pow(2, attempt) * 1000)

);

}

}

}
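
To see how long `robustGenerate` can wait in the worst case, the backoff schedule can be computed on its own. This sketch uses a hypothetical helper, `backoffDelays`, that reproduces the same `Math.pow(2, attempt) * 1000` rule: a delay follows every failed attempt except the last, which throws instead.

```typescript
// Hypothetical helper: lists the waits (in ms) between attempts,
// using the same 2^attempt * 1000 rule as robustGenerate above.
function backoffDelays(maxRetries: number): number[] {
  const delays: number[] = [];
  for (let attempt = 1; attempt < maxRetries; attempt++) {
    delays.push(Math.pow(2, attempt) * 1000);
  }
  return delays;
}

// Sum of all waits, i.e. the worst-case time spent sleeping.
function totalBackoff(maxRetries: number): number {
  return backoffDelays(maxRetries).reduce((sum, delay) => sum + delay, 0);
}
```

With the default `maxRetries = 3`, the waits are 2000 ms and 4000 ms, so a call that fails every time spends 6 seconds sleeping in addition to the request time itself.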

import { AIProviderFactory } from 'zephyr-mind';

async function generateWithFallback(prompt: string) {

const providers = ['bedrock', 'openai', 'vertex'];

for (const providerName of providers) {

try {

const provider = AIProviderFactory.createProvider(providerName);

return await provider.generateText({ prompt });

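} catch (error) {

const message = error instanceof Error ? error.message : String(error);

console.warn(`${providerName} failed:`, message);

if (message.includes('API key') || message.includes('credentials')) {

console.log(`${providerName} not configured, trying next...`);

}

// Any failure, configuration-related or not, falls through to the next provider

}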

}

throw new Error('All providers failed or are not configured');

}

// Provider not configured

if (error.message.includes('API key')) {

console.error('Provider API key not set');

}

// Rate limiting

if (error.message.includes('rate limit')) {

console.error('Rate limit exceeded, implement backoff');

}

// Model not available

if (error.message.includes('model')) {

console.error('Requested model not available');

}

// Network issues

if (error.message.includes('network') || error.message.includes('timeout')) {

console.error('Network connectivity issue');

}
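
The checks above can be folded into a single function so that callers branch on a category rather than on message text. This is a sketch under stated assumptions: `classifyError` and the `ErrorKind` names are hypothetical, the substrings are the ones used above, and matching is made case-insensitive here, which the original checks are not. The broad `model` check runs last so that more specific messages are not misclassified.

```typescript
type ErrorKind = "not_configured" | "rate_limited" | "network" | "model_unavailable" | "unknown";

// Hypothetical helper: maps an error to a coarse category by message substring.
function classifyError(error: unknown): ErrorKind {
  const message = (error instanceof Error ? error.message : String(error)).toLowerCase();
  if (message.includes("api key")) return "not_configured";
  if (message.includes("rate limit")) return "rate_limited";
  if (message.includes("network") || message.includes("timeout")) return "network";
  if (message.includes("model")) return "model_unavailable";
  return "unknown";
}
```

Substring matching is brittle, since providers can change their wording at any time, so an `unknown` result should still be logged in full.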

Choose the Right Model for Each Use Case

// Fast responses for simple tasks

const fast = AIProviderFactory.createProvider('vertex', 'gemini-2.5-flash');

// High quality for complex tasks

const quality = AIProviderFactory.createProvider('bedrock', 'claude-3-7-sonnet');

// Cost-effective for development

const dev = AIProviderFactory.createProvider('openai', 'gpt-4o-mini');

Streaming for Long Responses

// Use streaming for better UX on long content

const result = await provider.streamText({

prompt: "Write a detailed article...",

maxTokens: 2000

});

Appropriate Token Limits

// Set reasonable limits to control costs

const result = await provider.generateText({

prompt: "Summarize this text",

maxTokens: 150 // Just enough for a summary

});

We welcome contributions! Here's how to get started:

git clone https://github.com/juspay/zephyr-mind

cd zephyr-mind

pnpm install

pnpm test # Run all tests

pnpm test:watch # Watch mode

pnpm test:coverage # Coverage report

pnpm build # Build the library

pnpm check # Type checking

pnpm lint # Lint code

MIT © Juspay Technologies

Built with ❤️ by Juspay Technologies

FAQs

AI toolkit extracted from lighthouse with multi-provider support

We found that @juspay/zephyr-mind demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 7 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

Security News

Open source dashboard CNAPulse tracks CVE Numbering Authorities’ publishing activity, highlighting trends and transparency across the CVE ecosystem.

Product

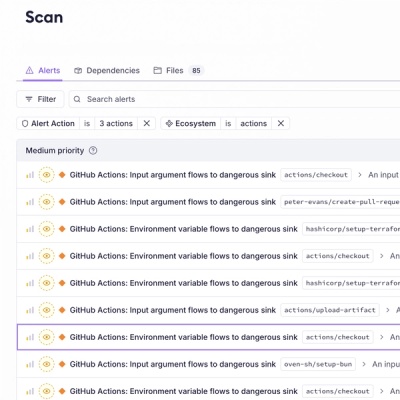

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.