Product

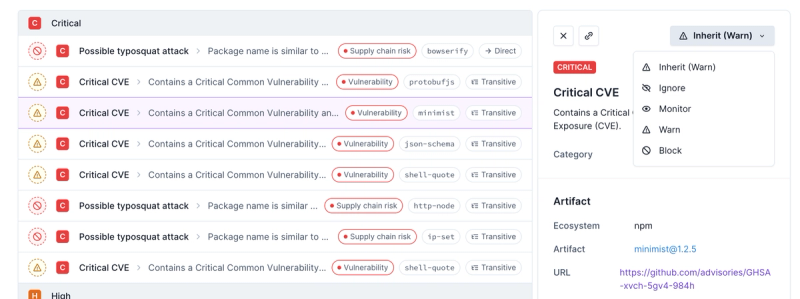

Introducing Enhanced Alert Actions and Triage Functionality

Socket now supports four distinct alert actions instead of the previous two, and alert triaging allows users to override the actions taken for all individual alerts.

stream-buffers


Package description

The stream-buffers npm package provides a way to use in-memory storage as a stream. This can be useful when you want to work with streams without actually reading from or writing to the file system. It allows you to use buffers as readable and writable streams.

WritableStreamBuffer

This feature allows you to create a writable stream that you can write data to. The data is stored in memory and can be retrieved as a Buffer.

const streamBuffers = require('stream-buffers');

const myWritableStreamBuffer = new streamBuffers.WritableStreamBuffer();

myWritableStreamBuffer.write('Some data');

const data = myWritableStreamBuffer.getContents();

ReadableStreamBuffer

This feature allows you to create a readable stream that you can push data into. The data can then be read from the stream as if it were being read from a file or other data source.

const streamBuffers = require('stream-buffers');

const myReadableStreamBuffer = new streamBuffers.ReadableStreamBuffer();

myReadableStreamBuffer.put('Some data');

myReadableStreamBuffer.on('data', (dataChunk) => {

console.log(dataChunk.toString());

});

This package provides similar functionality to stream-buffers by allowing you to create readable and writable streams that operate on in-memory data. It is a simple implementation that can be used for testing or simple stream manipulation.

Buffer List (bl) is a storage object for collections of Node.js Buffers, which can also be used as a duplex stream. It is similar to stream-buffers in that it allows you to work with data in memory, but it is more focused on collecting buffers and streaming them.

Concat-stream is a writable stream that concatenates data and calls a callback with the result. It is similar to the writable part of stream-buffers but is specifically designed for concatenating stream data into a single buffer.
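For comparison, the collect-then-concatenate pattern behind concat-stream (and bl's list of Buffers) can be approximated with Node's built-in `stream` module alone. This is a minimal sketch, not concat-stream's actual API: `concatSink` is a hypothetical helper that keeps every written chunk in an array and hands the joined Buffer to a callback when the writer finishes.

```javascript
const { Writable } = require('node:stream');

// Hypothetical helper (not the concat-stream API): collects every written
// chunk and passes the concatenated Buffer to `onDone` on 'finish'.
function concatSink(onDone) {
  const chunks = [];
  const sink = new Writable({
    write(chunk, encoding, callback) {
      // decodeStrings defaults to true, so string writes arrive as Buffers.
      chunks.push(chunk);
      callback();
    },
  });
  sink.on('finish', () => onDone(Buffer.concat(chunks)));
  return sink;
}

const sink = concatSink((all) => console.log(all.toString('utf8')));
sink.write('Some ');
sink.write(Buffer.from('data'));
sink.end(); // logs "Some data" once 'finish' fires
```

Keeping a list of chunks and joining once at the end avoids repeated copying, whereas stream-buffers copies into a single growing Buffer.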

Readme

!! Consider using Node 16+ Utility Consumers rather than this library. !!
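As a rough illustration of that recommendation: Node versions that ship the built-in `stream/consumers` module (added during the 16.x line) can drain a stream into memory directly. A minimal sketch; `buffer` and `text` are the module's own exports, while `readAll` is a hypothetical helper name used only here.

```javascript
const { Readable } = require('node:stream');
const { buffer, text } = require('node:stream/consumers');

// Hypothetical helper: drain a stream into a Buffer and into a string.
// A stream can only be consumed once, so a fresh one is built per consumer.
async function readAll(makeStream) {
  const asBuffer = await buffer(makeStream());
  const asText = await text(makeStream());
  return { asBuffer, asText };
}

readAll(() => Readable.from([Buffer.from('Some '), Buffer.from('data')]))
  .then(({ asBuffer, asText }) => {
    console.log(asBuffer.length); // 9
    console.log(asText); // Some data
  });
```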

Simple Readable and Writable Streams that use a Buffer to store received data, or for data to send out. Useful for test code, debugging, and a wide range of other utilities.

npm install stream-buffers --save

To use the stream buffers in your module, simply import it and away you go.

var streamBuffers = require('stream-buffers');

WritableStreamBuffer implements the standard stream.Writable interface. All writes to this stream will accumulate in an internal Buffer. If the internal buffer overflows, it will be resized automatically. The initial size of the Buffer and the amount by which it grows can be configured in the constructor.
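To make the growth behaviour concrete, here is a simplified sketch of an auto-growing, Buffer-backed Writable. It is not the stream-buffers source: the class name `GrowableBufferWritable` and its `contents()` method are hypothetical, and the rule "grow by the smallest whole number of increments that fits the incoming chunk" is an assumption about how `incrementAmount` is applied.

```javascript
const { Writable } = require('node:stream');

// Simplified sketch of an auto-growing, Buffer-backed Writable.
// Not the library's source; the growth rule below is an assumption.
class GrowableBufferWritable extends Writable {
  constructor({ initialSize = 8 * 1024, incrementAmount = 8 * 1024 } = {}) {
    super();
    this.incrementAmount = incrementAmount;
    this.store = Buffer.alloc(initialSize);
    this.used = 0; // bytes currently held
  }

  _write(chunk, encoding, callback) {
    const free = this.store.length - this.used;
    if (chunk.length > free) {
      // Grow by the smallest whole number of increments that fits the chunk.
      const steps = Math.ceil((chunk.length - free) / this.incrementAmount);
      const bigger = Buffer.alloc(this.store.length + steps * this.incrementAmount);
      this.store.copy(bigger, 0, 0, this.used);
      this.store = bigger;
    }
    chunk.copy(this.store, this.used);
    this.used += chunk.length;
    callback();
  }

  size() { return this.used; }              // bytes held
  maxSize() { return this.store.length; }   // current capacity

  // Returns a copy of the held bytes without consuming them.
  contents() { return Buffer.from(this.store.subarray(0, this.used)); }
}

const w = new GrowableBufferWritable({ initialSize: 4, incrementAmount: 4 });
w.write('ABCDEF'); // 6 bytes into a 4-byte buffer: grows by one increment
w.end(() => console.log(w.size(), w.maxSize())); // 6 8
```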

var myWritableStreamBuffer = new streamBuffers.WritableStreamBuffer({

initialSize: (100 * 1024), // start at 100 kilobytes.

incrementAmount: (10 * 1024) // grow by 10 kilobytes each time buffer overflows.

});

The default initial size and increment amount are stored in the following constants:

streamBuffers.DEFAULT_INITIAL_SIZE // (8 * 1024)

streamBuffers.DEFAULT_INCREMENT_AMOUNT // (8 * 1024)

Writing is standard Stream stuff:

myWritableStreamBuffer.write(myBuffer);

// - or -

myWritableStreamBuffer.write('\u00bd + \u00bc = \u00be', 'utf8');

You can query the size of the data being held in the Buffer, and also how big the Buffer's max capacity currently is:

myWritableStreamBuffer.write('ASDF');

myWritableStreamBuffer.size(); // 4

myWritableStreamBuffer.maxSize(); // Whatever was configured as initial size. In our example: (100 * 1024).

Retrieving the contents of the Buffer is simple.

// Gets all held data as a Buffer.

myWritableStreamBuffer.getContents();

// Gets all held data as a utf8 string.

myWritableStreamBuffer.getContentsAsString('utf8');

// Gets first 5 bytes as a Buffer.

myWritableStreamBuffer.getContents(5);

// Gets first 5 bytes as a utf8 string.

myWritableStreamBuffer.getContentsAsString('utf8', 5);

Take care when getting encoded strings from a WritableStreamBuffer: it treats its contents as raw bytes, so multi-byte characters are not respected, and a length limit such as getContentsAsString('utf8', 5) can cut a character in half.
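The effect can be reproduced with plain Buffers, assuming (as the warning implies) that a byte limit is applied before decoding. `firstBytesAsString` and `decodeInTwoPieces` are hypothetical helpers; the second shows how Node's built-in `StringDecoder` avoids the problem by holding back an incomplete sequence until the rest arrives.

```javascript
const { StringDecoder } = require('node:string_decoder');

// Hypothetical helper: decode only the first `n` bytes of a string's
// UTF-8 encoding, the way a byte-length limit would.
function firstBytesAsString(str, n) {
  return Buffer.from(str, 'utf8').subarray(0, n).toString('utf8');
}

// Hypothetical helper: decode a Buffer that arrives in two pieces.
// StringDecoder buffers an incomplete multi-byte sequence between writes.
function decodeInTwoPieces(buf, splitAt) {
  const decoder = new StringDecoder('utf8');
  return decoder.write(buf.subarray(0, splitAt)) +
    decoder.write(buf.subarray(splitAt)) +
    decoder.end();
}

const fractions = '\u00bd + \u00bc = \u00be'; // 9 characters
console.log(Buffer.byteLength(fractions, 'utf8')); // 12: each fraction takes 2 bytes
console.log(firstBytesAsString(fractions, 2)); // the whole first character
console.log(firstBytesAsString(fractions, 1) === '\ufffd'); // true: half a character
console.log(decodeInTwoPieces(Buffer.from(fractions, 'utf8'), 1) === fractions); // true
```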

Destroying or ending the WritableStreamBuffer will not delete the contents of the Buffer, but will disallow any further writes.

myWritableStreamBuffer.write('ASDF');

myWritableStreamBuffer.end();

myWritableStreamBuffer.getContentsAsString(); // -> 'ASDF'
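Because WritableStreamBuffer builds on stream.Writable, the refusal of later writes is presumably the standard Writable behaviour, which can be observed with a plain built-in Writable. This sketch does not use stream-buffers; `sink` and `held` are illustrative names. The late write is rejected with an `ERR_STREAM_WRITE_AFTER_END` error, while the data collected earlier is untouched.

```javascript
const { Writable } = require('node:stream');

// A throwaway in-memory Writable (not stream-buffers) used only to show
// what stream.Writable does with a write after end().
const held = [];
const sink = new Writable({
  write(chunk, encoding, callback) {
    held.push(chunk);
    callback();
  },
});

sink.on('error', (err) => {
  console.log(err.code); // ERR_STREAM_WRITE_AFTER_END
  console.log(Buffer.concat(held).toString()); // ASDF: earlier data is kept
});

sink.write('ASDF');
sink.end();
sink.write('more'); // rejected: the stream has already ended
```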

ReadableStreamBuffer implements the standard stream.Readable interface, but can also have data inserted into it. This data is then pumped out in chunks as readable events. The data waiting to be sent out is held in a Buffer, which grows in much the same way as a WritableStreamBuffer's does if data is put into the Buffer faster than it is pumped out.

The frequency with which chunks are pumped out and the size of the chunks themselves can be configured in the constructor, as can the initial size and increment amount of the internal Buffer. In the following example, 2 KB (2048-byte) chunks will be output every 10 milliseconds:

var myReadableStreamBuffer = new streamBuffers.ReadableStreamBuffer({

frequency: 10, // in milliseconds.

chunkSize: 2048 // in bytes.

});

Default frequency and chunk size:

streamBuffers.DEFAULT_CHUNK_SIZE // (1024)

streamBuffers.DEFAULT_FREQUENCY // (1)

Putting data into the Buffer to be pumped out is easy:

myReadableStreamBuffer.put(aBuffer);

myReadableStreamBuffer.put('A String', 'utf8');

Chunks are pumped out via standard stream.Readable semantics. This means you can use the old streams1 way:

myReadableStreamBuffer.on('data', function(data) {

// streams1.x style data

assert.isTrue(data instanceof Buffer);

});

Or the streams2+ way:

myReadableStreamBuffer.on('readable', function() {

var chunk;

while((chunk = myReadableStreamBuffer.read()) !== null) {

assert.isTrue(chunk instanceof Buffer);

}

});
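To see the streams2 pull loop in isolation, the sketch below applies it to a plain built-in Readable rather than a ReadableStreamBuffer; `drainWithRead` is a hypothetical helper name. It keeps calling read() until it returns null, then resolves with the collected chunks once 'end' fires.

```javascript
const { Readable } = require('node:stream');

// Hypothetical helper: drain a Readable with the streams2
// 'readable' + read() pattern, resolving with every chunk on 'end'.
function drainWithRead(stream) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    stream.on('readable', () => {
      let chunk;
      // read() returns null once the internal buffer is empty.
      while ((chunk = stream.read()) !== null) {
        chunks.push(chunk);
      }
    });
    stream.on('end', () => resolve(chunks));
    stream.on('error', reject);
  });
}

// A stand-in source: push two chunks, then signal end-of-stream.
const source = new Readable({ read() {} });
source.push(Buffer.from('ab'));
source.push(Buffer.from('cd'));
source.push(null);

drainWithRead(source).then((chunks) => {
  console.log(Buffer.concat(chunks).toString()); // abcd
});
```

Note that read() without a size may return several pushed chunks merged into one Buffer, so code should not rely on chunk boundaries.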

Because ReadableStreamBuffer is simply an implementation of stream.Readable, it implements pause / resume / setEncoding / etc.

Once you're done putting data into a ReadableStreamBuffer, you can call stop() on it.

myReadableStreamBuffer.put('the last data this stream will ever see');

myReadableStreamBuffer.stop();

Once the ReadableStreamBuffer has pumped out all the data in its internal buffer, it will emit the usual end event. You cannot put any more data into the stream once you've called stop() on it.
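The put / pump / stop lifecycle described above can be sketched on top of stream.Readable. This is a simplified illustration, not the library's source: `TimedChunkReadable` and its `pump()` method are hypothetical, the defaults mirror the README's DEFAULT_FREQUENCY (1 ms) and DEFAULT_CHUNK_SIZE (1024 bytes), and the sketch ignores push()'s backpressure signal.

```javascript
const { Readable } = require('node:stream');

// Simplified sketch of a timer-driven, Buffer-backed Readable in the
// spirit of ReadableStreamBuffer. Names are hypothetical.
class TimedChunkReadable extends Readable {
  constructor({ frequency = 1, chunkSize = 1024 } = {}) {
    super();
    this.pending = Buffer.alloc(0);
    this.stopped = false;
    this.chunkSize = chunkSize;
    // Every `frequency` ms, push at most one chunk of `chunkSize` bytes.
    this.timer = setInterval(() => this.pump(), frequency);
  }

  put(data, encoding = 'utf8') {
    if (this.stopped) throw new Error('put() called after stop()');
    const bytes = Buffer.isBuffer(data) ? data : Buffer.from(data, encoding);
    this.pending = Buffer.concat([this.pending, bytes]);
  }

  stop() {
    this.stopped = true;
  }

  pump() {
    if (this.pending.length > 0) {
      const size = Math.min(this.chunkSize, this.pending.length);
      this.push(this.pending.subarray(0, size));
      this.pending = this.pending.subarray(size);
    } else if (this.stopped) {
      // Buffer drained after stop(): end the stream exactly once.
      clearInterval(this.timer);
      this.push(null);
    }
  }

  // Data is pushed on the timer, so there is nothing to do on demand.
  _read() {}

  _destroy(err, callback) {
    clearInterval(this.timer);
    callback(err);
  }
}

const r = new TimedChunkReadable({ frequency: 10, chunkSize: 4 });
r.put('the last data this stream will ever see');
r.stop();
r.on('data', (chunk) => console.log(chunk.toString())); // chunks of at most 4 bytes
r.on('end', () => console.log('end'));
```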

It's not supposed to be a speed demon; it's meant more for tests, debugging, or weird edge cases. It works with an internal buffer that it copies contents to, from, and around.

Thank you to all the wonderful contributors who have kept this package alive throughout the years.

node-stream-buffer is free and unencumbered public domain software. For more information, see the accompanying UNLICENSE file.

FAQs

Buffer-backed Streams for reading and writing.

The npm package stream-buffers receives a total of 3,643,301 weekly downloads. As such, the popularity of stream-buffers was classified as popular.

We found that stream-buffers demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.
