wink-nlp

Changelog

Version 1.1.0 September 18, 2020

its helpers. 🎉 its.stem helper to obtain stems of the words using Porter Stemmer Algorithm V2. 👏

Readme

winkNLP is a JavaScript library for Natural Language Processing (NLP). Designed specifically to make development of NLP solutions easier and faster, winkNLP is optimized for the right balance of performance and accuracy. The package can handle large amounts of raw text at speeds over 525,000 tokens/second. And with test coverage of ~100%, winkNLP is a tool for building production-grade systems with confidence.

It packs a rich feature set into a small-footprint codebase of under 1500 lines.

Use npm install:

npm install wink-nlp --save

To use winkNLP after installation, you also need to install a language model. The following command installs the latest version of the default language model, the lightweight English language model called wink-eng-lite-model.

node -e "require( 'wink-nlp/models/install' )"

Any required model can be installed by specifying its name as the last parameter in the above command. For example:

node -e "require( 'wink-nlp/models/install' )" wink-eng-lite-model

The "Hello World!" in winkNLP is given below. As the next step, we recommend a dive into winkNLP's concepts.

// Load wink-nlp package & helpers.

const winkNLP = require( 'wink-nlp' );

// Load "its" helper to extract item properties.

const its = require( 'wink-nlp/src/its.js' );

// Load "as" reducer helper to reduce a collection.

const as = require( 'wink-nlp/src/as.js' );

// Load English language model, light version.

const model = require( 'wink-eng-lite-model' );

// Instantiate winkNLP.

const nlp = winkNLP( model );

// NLP Code.

const text = 'Hello World🌎! How are you?';

const doc = nlp.readDoc( text );

console.log( doc.out() );

// -> Hello World🌎! How are you?

console.log( doc.sentences().out() );

// -> [ 'Hello World🌎!', 'How are you?' ]

console.log( doc.entities().out( its.detail ) );

// -> [ { value: '🌎', type: 'EMOJI' } ]

console.log( doc.tokens().out() );

// -> [ 'Hello', 'World', '🌎', '!', 'How', 'are', 'you', '?' ]

console.log( doc.tokens().out( its.type, as.freqTable ) );

// -> [ [ 'word', 5 ], [ 'punctuation', 2 ], [ 'emoji', 1 ] ]
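To make the last line of the example concrete, here is a minimal plain-JavaScript sketch of what a frequency-table reduction does. It is not winkNLP's implementation: `freqTableSketch` is a hypothetical name, and the alphabetical tie-breaking is an assumption made only for this illustration.

```javascript
// Plain-JavaScript sketch of a frequency-table reducer; NOT winkNLP's source.
// `freqTableSketch` is a hypothetical name used only for this illustration.
function freqTableSketch( values ) {
  const counts = new Map();
  for ( const v of values ) counts.set( v, ( counts.get( v ) || 0 ) + 1 );
  // Most frequent first; ties broken alphabetically (an assumption).
  return Array.from( counts.entries() ).sort(
    ( a, b ) => ( b[ 1 ] - a[ 1 ] ) || String( a[ 0 ] ).localeCompare( String( b[ 0 ] ) )
  );
}

// Token types of 'Hello World🌎! How are you?', in document order.
const types = [ 'word', 'word', 'emoji', 'punctuation', 'word', 'word', 'word', 'punctuation' ];

console.log( freqTableSketch( types ) );
// -> [ [ 'word', 5 ], [ 'punctuation', 2 ], [ 'emoji', 1 ] ]
```

In winkNLP itself, the same table comes from passing `its.type` and `as.freqTable` to `out()`, as in the example above.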

winkNLP processes raw text at ~525,000 tokens per second with its default language model, wink-eng-lite-model, when benchmarked using "Ch 13 of Ulysses by James Joyce" on a 2.2 GHz Intel Core i7 machine with 16GB RAM. The processing included the entire NLP pipeline: tokenization, sentence boundary detection, negation handling, sentiment analysis, part-of-speech tagging, and named entity extraction. This speed is well ahead of the prevailing speed benchmarks.

The benchmark was conducted on Node.js versions 14.8.0, 12.18.3 and 10.22.0.
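A tokens-per-second figure like the one above can be approximated with a small timing harness. The sketch below is not the benchmark script used for the published numbers: `tokensPerSecond` and `naiveTokenize` are hypothetical names, and the regex tokenizer is only a stand-in so that the snippet runs without any installed packages.

```javascript
// Stand-in tokenizer for illustration only; winkNLP's tokenizer is far richer.
function naiveTokenize( text ) {
  return text.match( /\w+|[^\w\s]/g ) || [];
}

// Returns tokens processed per second, measured over `runs` passes of `text`.
function tokensPerSecond( tokenize, text, runs = 5 ) {
  let tokenCount = 0;
  const start = process.hrtime.bigint();
  for ( let i = 0; i < runs; i += 1 ) tokenCount += tokenize( text ).length;
  const elapsedNs = Number( process.hrtime.bigint() - start );
  return tokenCount / ( elapsedNs / 1e9 );
}

const sample = 'Hello World! How are you? '.repeat( 10000 );
console.log( Math.round( tokensPerSecond( naiveTokenize, sample ) ), 'tokens/second' );
```

To time winkNLP instead of the stand-in, pass a function such as `( t ) => nlp.readDoc( t ).tokens().out()` as the first argument. Results will vary with hardware, Node.js version, and input text.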

It POS tags a subset of the WSJ corpus with an accuracy of ~94.7%, which includes tokenization of raw text prior to POS tagging. The current state of the art is ~97% accuracy, but at lower speeds, and it is generally computed using a gold-standard pre-tokenized corpus.

Its general-purpose sentiment analysis delivers an F-score of ~84.5% when validated using the Amazon Product Review Sentiment Labelled Sentences Data Set at the UCI Machine Learning Repository. The current benchmark accuracy for specifically trained models is around 95%.
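For readers unfamiliar with the metric, the sketch below shows how a balanced F-score (F1) is computed from classification counts. It assumes the balanced variant, which the text above does not specify; `f1Score` is a hypothetical helper, and the counts are made up for illustration rather than taken from the validation run.

```javascript
// F1 = 2PR / (P + R), which simplifies to 2*tp / (2*tp + fp + fn).
// tp, fp, fn are true positives, false positives, and false negatives.
function f1Score( { tp, fp, fn } ) {
  const denominator = ( 2 * tp ) + fp + fn;
  // With no positive predictions and no positive labels, report 0.
  return denominator === 0 ? 0 : ( 2 * tp ) / denominator;
}

// Illustrative counts only: precision = 8/10, recall = 8/10.
console.log( f1Score( { tp: 8, fp: 2, fn: 2 } ) );
// -> 0.8
```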

winkNLP delivers this performance with minimal load on RAM. For example, it processes the entire History of India Volume I with a total peak memory requirement of under 80MB. The book has around 350 pages, which translates to over 125,000 tokens.
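One way to observe peak memory for a processing run is Node's `process.resourceUsage()`, available from Node.js 12.6.0 onwards. The sketch below is not how the figure above was measured: `peakMemoryMB` is a hypothetical helper, and the repeated string is only a placeholder workload of comparable token count.

```javascript
// Peak resident set size of the current process, in megabytes.
// Node.js documents `maxRSS` as being reported in kilobytes.
function peakMemoryMB() {
  return process.resourceUsage().maxRSS / 1024;
}

// Placeholder workload standing in for processing a large document.
const words = 'lorem ipsum dolor sit amet '.repeat( 25000 ).trim().split( ' ' );

console.log( words.length, 'tokens; peak RSS (MB):', peakMemoryMB().toFixed( 1 ) );
```

The reading covers the whole process, including the Node.js runtime itself, so it is best compared against a baseline taken before the workload.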

Please ask at Stack Overflow or discuss it at Wink JS Gitter Lobby.

If you spot a bug and the same has not yet been reported, raise a new issue or consider fixing it and sending a PR.

Looking for a new feature? Request it via a new issue or consider becoming a contributor.

Wink is a family of open source packages for Natural Language Processing, Machine Learning, and Statistical Analysis in Node.js. The code is thoroughly documented for easy human comprehension and has a test coverage of ~100%, giving the reliability needed to build production-grade solutions.

Wink NLP is copyright 2017-20 GRAYPE Systems Private Limited.

It is licensed under the terms of the MIT License.

FAQs

Unknown package

We found that wink-nlp demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Research

Security News

Socket researchers unpack a typosquatting package with malicious code that logs keystrokes and exfiltrates sensitive data to a remote server.

Security News

The JavaScript community has launched the e18e initiative to improve ecosystem performance by cleaning up dependency trees, speeding up critical parts of the ecosystem, and documenting lighter alternatives to established tools.

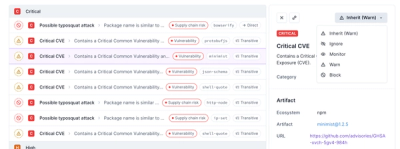

Product

Socket now supports four distinct alert actions instead of the previous two, and alert triaging allows users to override the actions taken for all individual alerts.