
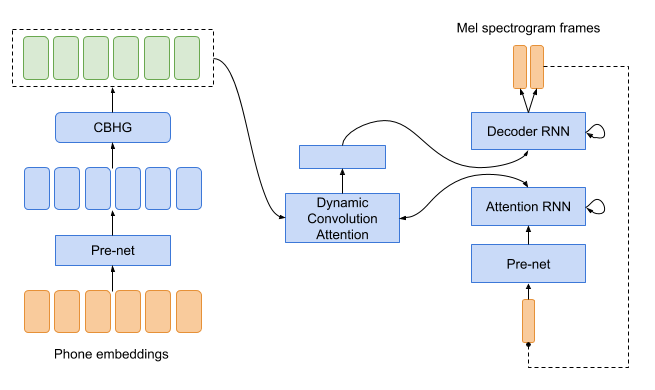

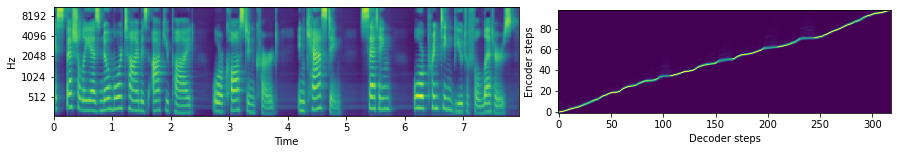


A PyTorch implementation of Location-Relative Attention Mechanisms For Robust Long-Form Speech Synthesis. Audio samples can be found here. Colab demo can be found here.
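The training script describes itself as using dynamic convolution attention (DCA), one of the location-relative mechanisms from the paper. One ingredient of DCA is a fixed causal "prior" filter: the previous alignment is convolved with a beta-binomial distribution so that attention can only stay put or move forward, and the log of the result is added to the attention energies. The sketch below computes that filter and the log-prior term in plain Python. The parameters (11 taps, alpha = 0.1, beta = 0.9) are assumed values from the paper. The code is an illustration, not this package's implementation, which presumably operates on batched PyTorch tensors.

```python
import math
from typing import List


def beta_binomial_pmf(n: int, alpha: float, beta: float) -> List[float]:
    """Probabilities P(k) for k = 0..n of a beta-binomial(n, alpha, beta) distribution."""

    def log_beta(a: float, b: float) -> float:
        return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

    pmf = []
    for k in range(n + 1):
        log_choose = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
        log_p = log_choose + log_beta(k + alpha, n - k + beta) - log_beta(alpha, beta)
        pmf.append(math.exp(log_p))
    return pmf


def causal_prior(prev_alignment: List[float], prior: List[float]) -> List[float]:
    """Spread each encoder position's weight forward by 0..len(prior)-1 steps.

    out[i] = sum_k prior[k] * prev_alignment[i - k], so attention mass can stay
    where it is or move forward, never backward.
    """
    out = [0.0] * len(prev_alignment)
    for i in range(len(prev_alignment)):
        for k, weight in enumerate(prior):
            if i - k >= 0:
                out[i] += weight * prev_alignment[i - k]
    return out


def log_prior_energies(
    prev_alignment: List[float], prior: List[float], floor: float = 1e-6
) -> List[float]:
    """Log of the causal prior, floored to avoid log(0); added to the attention energies."""
    return [math.log(max(p, floor)) for p in causal_prior(prev_alignment, prior)]


# Assumed parameters: 11 taps (n = 10), alpha = 0.1, beta = 0.9.
PRIOR = beta_binomial_pmf(n=10, alpha=0.1, beta=0.9)
```

With a one-hot previous alignment at encoder position 3, the prior term is floored for positions 0 to 2, peaks at position 3 (staying put is the most likely move under these parameters) and spreads the remaining mass over the next ten positions.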

Ensure you have Python 3.6 or newer and PyTorch 1.7 or newer installed. Then install this package (along with the univoc vocoder):

```sh
pip install tacotron univoc
```
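To confirm the minimum versions before installing, compare version numbers numerically. A plain string comparison would wrongly rank "1.10" below "1.7". The helper names below are hypothetical. The parser deliberately ignores local and pre-release suffixes such as `+cu110` or `a0`.

```python
import sys
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse 'MAJOR.MINOR.PATCH...' into a 3-tuple of ints.

    Local suffixes ('+cu110') are dropped and each component keeps only its
    leading digits, so '2.0.0a0' becomes (2, 0, 0). Missing parts count as 0.
    """
    core = version.split("+", 1)[0]
    parts = []
    for piece in core.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    while len(parts) < 3:
        parts.append(0)
    return (parts[0], parts[1], parts[2])


def meets_minimum(version: str, minimum: str) -> bool:
    """True if `version` is at least `minimum`, compared numerically."""
    return parse_version(version) >= parse_version(minimum)


def check_environment(min_python: str = "3.6", min_torch: str = "1.7") -> None:
    """Raise RuntimeError if Python or PyTorch is older than the stated minimum."""
    python_version = ".".join(str(n) for n in sys.version_info[:3])
    if not meets_minimum(python_version, min_python):
        raise RuntimeError(f"Python {min_python}+ required, found {python_version}")
    try:
        import torch  # imported lazily so the helpers above work without PyTorch
    except ImportError as err:
        raise RuntimeError("PyTorch is not installed") from err
    if not meets_minimum(str(torch.__version__), min_torch):
        raise RuntimeError(f"PyTorch {min_torch}+ required, found {torch.__version__}")
```

Calling `check_environment()` in the target environment raises an error with a readable message if either minimum is not met.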

```python
import torch
import soundfile as sf
from univoc import Vocoder
from tacotron import load_cmudict, text_to_id, Tacotron

# download pretrained weights for the vocoder (and optionally move to GPU)
vocoder = Vocoder.from_pretrained(
    "https://github.com/bshall/UniversalVocoding/releases/download/v0.2/univoc-ljspeech-7mtpaq.pt"
).cuda()

# download pretrained weights for tacotron (and optionally move to GPU)
tacotron = Tacotron.from_pretrained(
    "https://github.com/bshall/Tacotron/releases/download/v0.1/tacotron-ljspeech-yspjx3.pt"
).cuda()

# load cmudict and add pronunciation of PyTorch
cmudict = load_cmudict()
cmudict["PYTORCH"] = "P AY1 T AO2 R CH"

text = "A PyTorch implementation of Location-Relative Attention Mechanisms For Robust Long-Form Speech Synthesis."

# convert text to phone ids
x = torch.LongTensor(text_to_id(text, cmudict)).unsqueeze(0).cuda()

# synthesize audio
with torch.no_grad():
    mel, _ = tacotron.generate(x)
    wav, sr = vocoder.generate(mel.transpose(1, 2))

# save output
sf.write("location_relative_attention.wav", wav, sr)
```
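In the example, `text_to_id(text, cmudict)` turns the sentence into a sequence of integer phone ids. The custom `cmudict["PYTORCH"]` entry shows that pronunciations are space-separated ARPAbet phones with stress digits. The package's real symbol table, punctuation handling and out-of-vocabulary behaviour are not described here. The following is therefore a hypothetical toy version (`toy_text_to_id`, `SYMBOLS` and `LEXICON` are invented for illustration) that only shows the general dictionary-lookup idea.

```python
import string
from typing import Dict, List

# Hypothetical toy inventory and lexicon; the real package defines its own.
SYMBOLS = [" ", "AO2", "AY1", "CH", "IY1", "P", "R", "S", "T"]
LEXICON = {"PYTORCH": "P AY1 T AO2 R CH", "SPEECH": "S P IY1 CH"}


def toy_text_to_id(text: str, lexicon: Dict[str, str], symbols: List[str]) -> List[int]:
    """Map words to ARPAbet phones via `lexicon`, then phones to integer ids.

    Uses " " as a word-boundary symbol and raises KeyError for words that are
    missing from the lexicon (both are assumptions of this sketch).
    """
    symbol_to_id = {s: i for i, s in enumerate(symbols)}
    words = [w.strip(string.punctuation).upper() for w in text.split()]
    words = [w for w in words if w]
    ids: List[int] = []
    for n, word in enumerate(words):
        if word not in lexicon:
            raise KeyError(f"no pronunciation for {word!r}")
        if n > 0:
            ids.append(symbol_to_id[" "])
        ids.extend(symbol_to_id[phone] for phone in lexicon[word].split())
    return ids
```

In the real example the resulting list is wrapped with `torch.LongTensor(...).unsqueeze(0)`, which adds a leading batch dimension of size 1 before the ids are passed to `tacotron.generate`.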

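To train a model from scratch on LJSpeech:

```sh
# clone the repository and install its dependencies
git clone https://github.com/bshall/Tacotron
cd ./Tacotron
pipenv install

# download and extract the LJSpeech dataset
wget https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2
tar -xvjf LJSpeech-1.1.tar.bz2

# preprocess the dataset
pipenv run python preprocess.py path/to/LJSpeech-1.1 datasets/LJSpeech-1.1
```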

```
usage: preprocess.py [-h] in_dir out_dir

Preprocess an audio dataset.

positional arguments:
  in_dir      Path to the dataset directory
  out_dir     Path to the output directory

optional arguments:
  -h, --help  show this help message and exit
```
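The `in_dir` passed to `preprocess.py` is the extracted `LJSpeech-1.1` directory. That directory also contains `metadata.csv`, the transcript file later given to `train.py`. In LJSpeech, each line of that file holds three `|`-separated fields: the utterance id (matching a file name under `wavs/`), the raw transcript, and a normalized transcript with numbers and abbreviations spelled out. The reader below is a sketch with hypothetical function names. Which column `train.py` actually uses is not stated here, so preferring the normalized one is an assumption.

```python
from pathlib import Path
from typing import Dict, Iterable, Union


def parse_ljspeech_metadata(lines: Iterable[str]) -> Dict[str, str]:
    """Return {utterance_id: transcript} from LJSpeech-style metadata lines.

    Lines are split on '|' by hand rather than with the csv module, because
    transcripts can contain quote characters that csv would try to interpret.
    The normalized transcript is preferred, falling back to the raw one.
    """
    transcripts: Dict[str, str] = {}
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines
        utt_id, raw, normalized = line.split("|", 2)
        transcripts[utt_id] = normalized or raw
    return transcripts


def read_ljspeech_metadata(path: Union[str, Path]) -> Dict[str, str]:
    """Read and parse a metadata.csv file (UTF-8 encoded)."""
    with open(path, encoding="utf-8") as f:
        return parse_ljspeech_metadata(f)
```

Keeping the parser separate from the file reader makes it easy to test on a few in-memory lines without the full dataset on disk.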

Then train the model:

```sh
pipenv run python train.py ljspeech path/to/LJSpeech-1.1/metadata.csv datasets/LJSpeech-1.1
```

```
usage: train.py [-h] [--resume RESUME] checkpoint_dir text_path dataset_dir

Train Tacotron with dynamic convolution attention.

positional arguments:
  checkpoint_dir   Path to the directory where model checkpoints will be saved
  text_path        Path to the dataset transcripts
  dataset_dir      Path to the preprocessed data directory

optional arguments:
  -h, --help       show this help message and exit
  --resume RESUME  Path to the checkpoint to resume from
```
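All three positional arguments are plain paths, so argparse assigns them purely by position. The parser below is reconstructed from the help text above, not copied from the repository; in particular, `type=Path` is an assumption. Parsing an example command line shows which path ends up in which argument.

```python
import argparse
from pathlib import Path


def build_train_parser() -> argparse.ArgumentParser:
    """Parser reconstructed from the `train.py --help` output (not the original source)."""
    parser = argparse.ArgumentParser(
        description="Train Tacotron with dynamic convolution attention."
    )
    parser.add_argument(
        "checkpoint_dir", type=Path,
        help="Path to the directory where model checkpoints will be saved",
    )
    parser.add_argument("text_path", type=Path, help="Path to the dataset transcripts")
    parser.add_argument("dataset_dir", type=Path, help="Path to the preprocessed data directory")
    parser.add_argument("--resume", type=Path, help="Path to the checkpoint to resume from")
    return parser


# transcripts come before the preprocessed directory, matching the usage line
args = build_train_parser().parse_args(
    ["ljspeech", "path/to/LJSpeech-1.1/metadata.csv", "datasets/LJSpeech-1.1"]
)
print(args.text_path, args.dataset_dir)
```

Swapping the last two paths would still parse without error, since argparse only checks how many positionals were given. The mix-up would surface only when the script tries to read the files.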

Pretrained weights for the LJSpeech model are available here.
