@a_kawashiro/jendeley


@a_kawashiro/jendeley - npm Package Compare versions

Comparing version 0.0.22 to 0.0.23

| "use strict"; | ||

| var __createBinding = (this && this.__createBinding) || (Object.create ? (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| var desc = Object.getOwnPropertyDescriptor(m, k); | ||

| if (!desc || ("get" in desc ? !m.__esModule : desc.writable || desc.configurable)) { | ||

| desc = { enumerable: true, get: function() { return m[k]; } }; | ||

| } | ||

| Object.defineProperty(o, k2, desc); | ||

| }) : (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| o[k2] = m[k]; | ||

| })); | ||

| var __setModuleDefault = (this && this.__setModuleDefault) || (Object.create ? (function(o, v) { | ||

| Object.defineProperty(o, "default", { enumerable: true, value: v }); | ||

| }) : function(o, v) { | ||

| o["default"] = v; | ||

| }); | ||

| var __importStar = (this && this.__importStar) || function (mod) { | ||

| if (mod && mod.__esModule) return mod; | ||

| var result = {}; | ||

| if (mod != null) for (var k in mod) if (k !== "default" && Object.prototype.hasOwnProperty.call(mod, k)) __createBinding(result, mod, k); | ||

| __setModuleDefault(result, mod); | ||

| return result; | ||

| }; | ||

| var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) { | ||

@@ -49,3 +26,2 @@ function adopt(value) { return value instanceof P ? value : new P(function (resolve) { resolve(value); }); } | ||

| const gen_1 = require("./gen"); | ||

| const E = __importStar(require("fp-ts/lib/Either")); | ||

| const load_db_1 = require("./load_db"); | ||

@@ -338,7 +314,7 @@ const path_util_1 = require("./path_util"); | ||

| const newDBOrError = (0, gen_1.registerWeb)(jsonDB, req.url, title, req.comments, tags); | ||

| if (E.isRight(newDBOrError)) { | ||

| (0, load_db_1.saveDB)(E.toUnion(newDBOrError), dbPath); | ||

| if (newDBOrError._tag === "right") { | ||

| (0, load_db_1.saveDB)(newDBOrError.right, dbPath); | ||

| const r = { | ||

| isSucceeded: true, | ||

| message: "addPdfFromUrl succeeded", | ||

| message: "addWebFromUrl succeeded", | ||

| }; | ||

@@ -348,3 +324,3 @@ response.status(200).json(r); | ||

| else { | ||

| const err = E.toUnion(newDBOrError); | ||

| const err = newDBOrError.left; | ||

| const r = { | ||

@@ -410,8 +386,10 @@ isSucceeded: false, | ||

| const date = new Date(); | ||

| const date_tag = date.toISOString().split("T")[0]; | ||

| const date_tag = new Date(date.getTime() - date.getTimezoneOffset() * 60000) | ||

| .toISOString() | ||

| .split("T")[0]; | ||

| const tags = req.tags; | ||

| tags.push(date_tag); | ||

| const idEntryOrError = yield (0, gen_1.registerNonBookPDF)(dbPath.slice(0, dbPath.length - 1), [filename], jsonDB, undefined, req.comments, tags, req.filename == undefined, req.url); | ||

| if (E.isRight(idEntryOrError)) { | ||

| const t = E.toUnion(idEntryOrError); | ||

| if (idEntryOrError._tag === "right") { | ||

| const t = idEntryOrError.right; | ||

| if (t[0] in jsonDB) { | ||

@@ -437,3 +415,3 @@ fs_1.default.rmSync(fullpathOfDownloadFile); | ||

| fs_1.default.rmSync(fullpathOfDownloadFile); | ||

| const err = E.toUnion(idEntryOrError); | ||

| const err = idEntryOrError.left; | ||

| const r = { | ||

@@ -440,0 +418,0 @@ isSucceeded: false, |
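This file also changes how 0.0.23 computes the date tag for a newly downloaded PDF. `toISOString()` formats the instant in UTC, so the old code could tag a paper with the wrong calendar day for users far from UTC. The new code first shifts the timestamp by `getTimezoneOffset()`. The sketch below compares the two variants; the function names `utcDateTag` and `localDateTag` are hypothetical and not part of jendeley.

```javascript
// Date tag as computed in 0.0.22: the calendar date in UTC.
function utcDateTag(date) {
  return date.toISOString().split("T")[0];
}

// Date tag as computed in 0.0.23: the calendar date in the local timezone.
function localDateTag(date) {
  // getTimezoneOffset() is (UTC - local) in minutes, e.g. -540 in JST.
  // Subtracting it moves the instant so that the UTC fields of `shifted`
  // equal the local fields of `date`.
  const shifted = new Date(date.getTime() - date.getTimezoneOffset() * 60000);
  return shifted.toISOString().split("T")[0];
}

// 23:30 local time on 15 January 2023.
const lateEvening = new Date(2023, 0, 15, 23, 30);
console.log(localDateTag(lateEvening)); // 2023-01-15 in every timezone
console.log(utcDateTag(lateEvening)); // may be 2023-01-16, depending on the timezone
```

`toISOString()` then formats the shifted instant as UTC, so it prints the local date.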

@@ -38,3 +38,3 @@ "use strict"; | ||

| exports.ENTRY_DATA_FROM_ARXIV = ENTRY_DATA_FROM_ARXIV; | ||

| const JENDELEY_VERSION = "0.0.22"; | ||

| const JENDELEY_VERSION = "0.0.23"; | ||

| exports.JENDELEY_VERSION = JENDELEY_VERSION; | ||

@@ -41,0 +41,0 @@ const JENDELEY_DIR = ".jendeley"; |

| "use strict"; | ||

| var __createBinding = (this && this.__createBinding) || (Object.create ? (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| var desc = Object.getOwnPropertyDescriptor(m, k); | ||

| if (!desc || ("get" in desc ? !m.__esModule : desc.writable || desc.configurable)) { | ||

| desc = { enumerable: true, get: function() { return m[k]; } }; | ||

| } | ||

| Object.defineProperty(o, k2, desc); | ||

| }) : (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| o[k2] = m[k]; | ||

| })); | ||

| var __setModuleDefault = (this && this.__setModuleDefault) || (Object.create ? (function(o, v) { | ||

| Object.defineProperty(o, "default", { enumerable: true, value: v }); | ||

| }) : function(o, v) { | ||

| o["default"] = v; | ||

| }); | ||

| var __importStar = (this && this.__importStar) || function (mod) { | ||

| if (mod && mod.__esModule) return mod; | ||

| var result = {}; | ||

| if (mod != null) for (var k in mod) if (k !== "default" && Object.prototype.hasOwnProperty.call(mod, k)) __createBinding(result, mod, k); | ||

| __setModuleDefault(result, mod); | ||

| return result; | ||

| }; | ||

| var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) { | ||

@@ -45,3 +22,3 @@ function adopt(value) { return value instanceof P ? value : new P(function (resolve) { resolve(value); }); } | ||

| const pdf_js_extract_1 = require("pdf.js-extract"); | ||

| const E = __importStar(require("fp-ts/lib/Either")); | ||

| const either_1 = require("./either"); | ||

| const error_messages_1 = require("./error_messages"); | ||

@@ -146,5 +123,5 @@ const path_util_1 = require("./path_util"); | ||

| for (const f of foundArxiv) { | ||

| return E.right({ docIDType: "arxiv", arxiv: f[1] }); | ||

| return (0, either_1.genRight)({ docIDType: "arxiv", arxiv: f[1] }); | ||

| } | ||

| return E.left(error_messages_1.ERROR_GET_DOCID_FROM_URL); | ||

| return (0, either_1.genLeft)(error_messages_1.ERROR_GET_DOCID_FROM_URL); | ||

| } | ||

@@ -165,3 +142,3 @@ exports.getDocIDFromUrl = getDocIDFromUrl; | ||

| d = d.replaceAll("_", "."); | ||

| return E.right({ docIDType: "doi", doi: d }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: d }); | ||

| } | ||

@@ -179,3 +156,3 @@ const regexpDOI2 = new RegExp("\\[\\s*jendeley\\s+doi\\s+(10_[0-9]{4}_[A-Z]{1,3}[0-9]+[0-9X])\\s*\\]", "g"); | ||

| d = d.replaceAll("_", "."); | ||

| return E.right({ docIDType: "doi", doi: d }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: d }); | ||

| } | ||

@@ -193,3 +170,3 @@ const regexpDOI3 = new RegExp("\\[\\s*jendeley\\s+doi\\s+(10_[0-9]{4}_[a-zA-z]+_[0-9]+_[0-9]+)\\s*\\]", "g"); | ||

| d = d.replaceAll("_", "."); | ||

| return E.right({ docIDType: "doi", doi: d }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: d }); | ||

| } | ||

@@ -206,3 +183,3 @@ const regexpDOI4 = new RegExp("\\[\\s*jendeley\\s+doi\\s+(10_[0-9]{4}_[0-9X-]+_[0-9]{1,})\\s*\\]", "g"); | ||

| d.substring(3 + 4 + 1); | ||

| return E.right({ docIDType: "doi", doi: d }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: d }); | ||

| } | ||

@@ -220,3 +197,3 @@ const regexpDOI6 = new RegExp("\\[\\s*jendeley\\s+doi\\s+(10_[0-9]{4}_[a-zA-z]+-[0-9]+-[0-9]+)\\s*\\]", "g"); | ||

| d = d.replaceAll("_", "."); | ||

| return E.right({ docIDType: "doi", doi: d }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: d }); | ||

| } | ||

@@ -234,3 +211,3 @@ const regexpDOI7 = new RegExp("\\[\\s*jendeley\\s+doi\\s+(10_[0-9]{4}_978-[0-9-]+)\\s*\\]", "g"); | ||

| d = d.replaceAll("_", "."); | ||

| return E.right({ docIDType: "doi", doi: d }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: d }); | ||

| } | ||

@@ -242,3 +219,3 @@ const regexpArxiv = new RegExp("\\[\\s*jendeley\\s+arxiv\\s+([0-9]{4}_[0-9v]+)\\s*\\]", "g"); | ||

| d = d.substring(0, 4) + "." + d.substring(5); | ||

| return E.right({ docIDType: "arxiv", arxiv: d }); | ||

| return (0, either_1.genRight)({ docIDType: "arxiv", arxiv: d }); | ||

| } | ||

@@ -249,8 +226,8 @@ const regexpISBN = new RegExp(".*\\[\\s*jendeley\\s+isbn\\s+([0-9]{10,})\\s*\\]", "g"); | ||

| let d = f[1]; | ||

| return E.right({ docIDType: "isbn", isbn: d }); | ||

| return (0, either_1.genRight)({ docIDType: "isbn", isbn: d }); | ||

| } | ||

| if (filename.includes(constants_1.JENDELEY_NO_ID)) { | ||

| return E.right({ docIDType: "path", path: pdf }); | ||

| return (0, either_1.genRight)({ docIDType: "path", path: pdf }); | ||

| } | ||

| return E.left("Failed getDocIDManuallyWritten."); | ||

| return (0, either_1.genLeft)("Failed getDocIDManuallyWritten."); | ||

| } | ||

@@ -336,11 +313,12 @@ exports.getDocIDManuallyWritten = getDocIDManuallyWritten; | ||

| const doi = data["message"]["items"][i]["DOI"]; | ||

| return E.right({ docIDType: "doi", doi: doi }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: doi }); | ||

| } | ||

| } | ||

| } | ||

| catch (_a) { | ||

| catch (error) { | ||

| logger_1.logger.warn("error = " + error); | ||

| logger_1.logger.warn("Failed to get information from doi: " + URL); | ||

| } | ||

| } | ||

| return E.left("Failed to get DocID in getDocIDFromTitle"); | ||

| return (0, either_1.genLeft)("Failed to get DocID in getDocIDFromTitle"); | ||

| }); | ||

@@ -375,3 +353,3 @@ } | ||

| const manuallyWrittenDocID = getDocIDManuallyWritten(pdf); | ||

| if (E.isRight(manuallyWrittenDocID)) { | ||

| if (manuallyWrittenDocID._tag === "right") { | ||

| return manuallyWrittenDocID; | ||

@@ -382,3 +360,3 @@ } | ||

| const docIDFromUrl = getDocIDFromUrl(downloadUrl); | ||

| if (E.isRight(docIDFromUrl)) { | ||

| if (docIDFromUrl._tag === "right") { | ||

| return docIDFromUrl; | ||

@@ -391,3 +369,3 @@ } | ||

| const docIDFromTitle = yield getDocIDFromTitle(pdf, papersDir); | ||

| if (E.isRight(docIDFromTitle)) { | ||

| if (docIDFromTitle._tag === "right") { | ||

| return docIDFromTitle; | ||

@@ -404,3 +382,3 @@ } | ||

| logger_1.logger.warn(msg); | ||

| return E.left(msg); | ||

| return (0, either_1.genLeft)(msg); | ||

| } | ||

@@ -416,3 +394,3 @@ let texts = []; | ||

| logger_1.logger.warn(err.message); | ||

| return E.left("Failed to extract text from " + pdfFullpath); | ||

| return (0, either_1.genLeft)("Failed to extract text from " + pdfFullpath); | ||

| } | ||

@@ -427,3 +405,3 @@ const ids = sortDocIDs(getDocIDFromTexts(texts), num_pages); | ||

| if (i.docIDType == "isbn") { | ||

| return E.right(i); | ||

| return (0, either_1.genRight)(i); | ||

| } | ||

@@ -434,3 +412,3 @@ } | ||

| if (ids.length >= 1) { | ||

| return E.right(ids[0]); | ||

| return (0, either_1.genRight)(ids[0]); | ||

| } | ||

@@ -440,3 +418,3 @@ else { | ||

| logger_1.logger.warn(error_message); | ||

| return E.left(error_message); | ||

| return (0, either_1.genLeft)(error_message); | ||

| } | ||

@@ -446,3 +424,3 @@ } | ||

| logger_1.logger.warn("Cannot decide docID of " + pdf); | ||

| return E.left(error_messages_1.ERROR_GET_DOC_ID + pdf); | ||

| return (0, either_1.genLeft)(error_messages_1.ERROR_GET_DOC_ID + pdf); | ||

| }); | ||

@@ -449,0 +427,0 @@ } |
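In docid.js, 0.0.23 replaces the fp-ts `E.right` and `E.left` calls with `genRight` and `genLeft` from a new `./either` module. It also replaces `E.isRight(x)` with `x._tag === "right"`. That module's diff is not shown on this page. The following is therefore only a sketch of the shape implied by the call sites: a lowercase `_tag` plus a `right` or `left` payload. The helper `arxivFromFilename` is hypothetical. It reuses the arXiv filename pattern and the dot-restoring step from `getDocIDManuallyWritten`.

```javascript
// Assumed shape of the ./either helpers, inferred from the call sites only.
function genRight(value) {
  return { _tag: "right", right: value };
}

function genLeft(error) {
  return { _tag: "left", left: error };
}

// Hypothetical helper in the style of getDocIDManuallyWritten: read an
// arXiv ID written as "[jendeley arxiv 2106_09685v1]" in a filename.
function arxivFromFilename(filename) {
  const re = /\[\s*jendeley\s+arxiv\s+([0-9]{4}_[0-9v]+)\s*\]/;
  const m = filename.match(re);
  if (m === null) {
    return genLeft("Failed getDocIDManuallyWritten.");
  }
  const d = m[1];
  // Restore the dot after the four-digit prefix, as docid.js does.
  return genRight({ docIDType: "arxiv", arxiv: d.substring(0, 4) + "." + d.substring(5) });
}

const r = arxivFromFilename("paper [jendeley arxiv 2106_09685v1].pdf");
if (r._tag === "right") {
  console.log(r.right.arxiv); // 2106.09685v1
} else {
  console.log(r.left);
}
```

A plain tagged object like this needs no runtime dependency. That fits the removal of `fp-ts` from `dependencies` in package.json.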


dist/gen.js

| "use strict"; | ||

| var __createBinding = (this && this.__createBinding) || (Object.create ? (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| var desc = Object.getOwnPropertyDescriptor(m, k); | ||

| if (!desc || ("get" in desc ? !m.__esModule : desc.writable || desc.configurable)) { | ||

| desc = { enumerable: true, get: function() { return m[k]; } }; | ||

| } | ||

| Object.defineProperty(o, k2, desc); | ||

| }) : (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| o[k2] = m[k]; | ||

| })); | ||

| var __setModuleDefault = (this && this.__setModuleDefault) || (Object.create ? (function(o, v) { | ||

| Object.defineProperty(o, "default", { enumerable: true, value: v }); | ||

| }) : function(o, v) { | ||

| o["default"] = v; | ||

| }); | ||

| var __importStar = (this && this.__importStar) || function (mod) { | ||

| if (mod && mod.__esModule) return mod; | ||

| var result = {}; | ||

| if (mod != null) for (var k in mod) if (k !== "default" && Object.prototype.hasOwnProperty.call(mod, k)) __createBinding(result, mod, k); | ||

| __setModuleDefault(result, mod); | ||

| return result; | ||

| }; | ||

| var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) { | ||

@@ -48,3 +25,3 @@ function adopt(value) { return value instanceof P ? value : new P(function (resolve) { resolve(value); }); } | ||

| const validate_db_1 = require("./validate_db"); | ||

| const E = __importStar(require("fp-ts/lib/Either")); | ||

| const either_1 = require("./either"); | ||

| const load_db_1 = require("./load_db"); | ||

@@ -124,7 +101,12 @@ const path_util_1 = require("./path_util"); | ||

| const data = (yield got(URL, options).json()); | ||

| return data; | ||

| logger_1.logger.info("data = " + JSON.stringify(data)); | ||

| return (0, either_1.genRight)(data); | ||

| } | ||

| catch (_a) { | ||

| logger_1.logger.warn("Failed to get information from doi: " + URL); | ||

| return new Object(); | ||

| catch (error) { | ||

| logger_1.logger.warn("error = " + error); | ||

| const error_message = "Failed to get information from DOI: " + | ||

| URL + | ||

| " in getDoiJSON. This DOI is not registered."; | ||

| logger_1.logger.warn(error_message); | ||

| return (0, either_1.genLeft)(error_message); | ||

| } | ||

@@ -184,8 +166,6 @@ }); | ||

| return __awaiter(this, void 0, void 0, function* () { | ||

| let json_r = undefined; | ||

| let db_id = undefined; | ||

| if (docID.docIDType == "arxiv") { | ||

| let dataFromArxiv = yield getArxivJson(docID.arxiv); | ||

| const dataFromArxiv = yield getArxivJson(docID.arxiv); | ||

| if (dataFromArxiv != undefined) { | ||

| let json = { | ||

| const json = { | ||

| path: pathPDF, | ||

@@ -197,17 +177,20 @@ idType: constants_1.ID_TYPE_ARXIV, | ||

| dataFromArxiv: dataFromArxiv, | ||

| reservedForUser: undefined, | ||

| }; | ||

| json_r = json; | ||

| db_id = "arxiv_" + docID.arxiv; | ||

| const dbID = "arxiv_" + docID.arxiv; | ||

| return (0, either_1.genRight)({ dbID: dbID, dbEntry: json }); | ||

| } | ||

| else { | ||

| logger_1.logger.warn("Failed to get information of " + | ||

| const error_message = "Failed to get information of " + | ||

| JSON.stringify(docID) + | ||

| " using arxiv " + | ||

| pathPDF); | ||

| " using arxiv. Path: " + | ||

| pathPDF; | ||

| logger_1.logger.warn(error_message); | ||

| return (0, either_1.genLeft)(error_message); | ||

| } | ||

| } | ||

| if (docID.docIDType == "doi" && json_r == undefined) { | ||

| let dataFromCrossref = yield getDoiJSON(docID.doi); | ||

| if (dataFromCrossref != undefined) { | ||

| let json = { | ||

| else if (docID.docIDType == "doi") { | ||

| const dataFromCrossref = yield getDoiJSON(docID.doi); | ||

| if (dataFromCrossref._tag === "right") { | ||

| const json = { | ||

| path: pathPDF, | ||

@@ -218,18 +201,16 @@ idType: constants_1.ID_TYPE_DOI, | ||

| userSpecifiedTitle: undefined, | ||

| dataFromCrossref: dataFromCrossref, | ||

| dataFromCrossref: dataFromCrossref.right, | ||

| reservedForUser: undefined, | ||

| }; | ||

| json_r = json; | ||

| db_id = "doi_" + docID.doi; | ||

| const dbID = "doi_" + docID.doi; | ||

| return (0, either_1.genRight)({ dbID: dbID, dbEntry: json }); | ||

| } | ||

| else { | ||

| logger_1.logger.warn("Failed to get information of " + | ||

| JSON.stringify(docID) + | ||

| " using doi " + | ||

| pathPDF); | ||

| return dataFromCrossref; | ||

| } | ||

| } | ||

| if (docID.docIDType == "isbn" && json_r == undefined) { | ||

| let dataFromNodeIsbn = yield getIsbnJson(docID.isbn); | ||

| else if (docID.docIDType == "isbn") { | ||

| const dataFromNodeIsbn = yield getIsbnJson(docID.isbn); | ||

| if (dataFromNodeIsbn != undefined) { | ||

| let json = { | ||

| const json = { | ||

| path: pathPDF, | ||

@@ -241,15 +222,17 @@ idType: constants_1.ID_TYPE_ISBN, | ||

| dataFromNodeIsbn: dataFromNodeIsbn, | ||

| reservedForUser: undefined, | ||

| }; | ||

| json_r = json; | ||

| db_id = "isbn_" + docID.isbn; | ||

| const dbID = "isbn_" + docID.isbn; | ||

| return (0, either_1.genRight)({ dbID: dbID, dbEntry: json }); | ||

| } | ||

| else { | ||

| logger_1.logger.warn("Failed to get information of " + | ||

| const error_message = "Failed to get information of " + | ||

| JSON.stringify(docID) + | ||

| " using isbn " + | ||

| pathPDF); | ||

| " using isbn. Path: " + | ||

| pathPDF; | ||

| return (0, either_1.genLeft)(error_message); | ||

| } | ||

| } | ||

| if (docID.docIDType == "path" && json_r == undefined) { | ||

| let json = { | ||

| else if (docID.docIDType == "path") { | ||

| const json = { | ||

| path: pathPDF, | ||

@@ -261,19 +244,10 @@ title: docID.path.join(path_1.default.sep), | ||

| userSpecifiedTitle: undefined, | ||

| reservedForUser: undefined, | ||

| }; | ||

| json_r = json; | ||

| db_id = "path_" + docID.path.join("_"); | ||

| const dbID = "path_" + docID.path.join("_"); | ||

| return (0, either_1.genRight)({ dbID: dbID, dbEntry: json }); | ||

| } | ||

| if (json_r == undefined || db_id == undefined) { | ||

| logger_1.logger.warn("Failed to get information of " + | ||

| JSON.stringify(docID) + | ||

| " path = " + | ||

| pathPDF + | ||

| " json_r = " + | ||

| JSON.stringify(json_r) + | ||

| " db_id = " + | ||

| JSON.stringify(db_id)); | ||

| return undefined; | ||

| } | ||

| else { | ||

| return [json_r, db_id]; | ||

| logger_1.logger.fatal("Invalid docID.docIDType = " + docID.docIDType + "for getJson."); | ||

| process.exit(1); | ||

| } | ||

@@ -298,2 +272,3 @@ }); | ||

| comments: crypto_1.default.randomBytes(40).toString("hex"), | ||

| reservedForUser: undefined, | ||

| }; | ||

@@ -323,2 +298,3 @@ jsonDB[id] = e; | ||

| idType: constants_1.ID_TYPE_URL, | ||

| reservedForUser: undefined, | ||

| }; | ||

@@ -328,3 +304,3 @@ if (isValidJsonEntry(json)) { | ||

| if (id in jsonDB) { | ||

| return E.left("Failed to register url_" + | ||

| return (0, either_1.genLeft)("Failed to register url_" + | ||

| url + | ||

@@ -338,7 +314,7 @@ ". Because " + | ||

| logger_1.logger.info("Register url_" + url); | ||

| return E.right(jsonDB); | ||

| return (0, either_1.genRight)(jsonDB); | ||

| } | ||

| } | ||

| else { | ||

| return E.left("Failed to register url_" + url + ". Because got JSON is not valid."); | ||

| return (0, either_1.genLeft)("Failed to register url_" + url + ". Because got JSON is not valid."); | ||

| } | ||

@@ -380,3 +356,3 @@ } | ||

| const docID = yield (0, docid_1.getDocID)(pdf, papersDir, false, downloadUrl); | ||

| if (E.isLeft(docID)) { | ||

| if (docID._tag === "left") { | ||

| logger_1.logger.warn("Cannot get docID of " + pdf); | ||

@@ -386,8 +362,8 @@ return docID; | ||

| logger_1.logger.info("docID = " + JSON.stringify(docID)); | ||

| const t = yield getJson(E.toUnion(docID), JSON.parse(JSON.stringify(pdf))); | ||

| if (t == undefined) { | ||

| return E.left(pdf + " is not valid."); | ||

| const t = yield getJson(docID.right, JSON.parse(JSON.stringify(pdf))); | ||

| if (t._tag === "left") { | ||

| return t; | ||

| } | ||

| const json = t[0]; | ||

| const dbID = t[1]; | ||

| const json = t.right.dbEntry; | ||

| const dbID = t.right.dbID; | ||

| json.comments = comments; | ||

@@ -398,3 +374,3 @@ json.tags = tags; | ||

| console.warn("mv ", '"' + pdf + '" duplicated'); | ||

| return E.left(pdf + | ||

| return (0, either_1.genLeft)(pdf + | ||

| " is duplicated. You can find another file in " + | ||

@@ -422,3 +398,3 @@ jsonDB[dbID][constants_1.ENTRY_PATH] + | ||

| logger_1.logger.warn(err); | ||

| return E.left(err); | ||

| return (0, either_1.genLeft)(err); | ||

| } | ||

@@ -428,3 +404,3 @@ fs_1.default.renameSync((0, path_util_1.concatDirs)(papersDir.concat(oldFilename)), (0, path_util_1.concatDirs)(papersDir.concat(newFilename))); | ||

| } | ||

| return E.right([dbID, json]); | ||

| return (0, either_1.genRight)([dbID, json]); | ||

| }); | ||

@@ -522,10 +498,10 @@ } | ||

| bookChapters[(0, path_util_1.concatDirs)(papersDir.concat(bd))].pdfs.push(p); | ||

| if (E.isRight(docID)) { | ||

| const i = E.toUnion(docID); | ||

| if (docID._tag === "right") { | ||

| const i = docID.right; | ||

| const t = yield getJson(i, p); | ||

| if (t != undefined && | ||

| t[0].idType == constants_1.ID_TYPE_ISBN && | ||

| if (t._tag === "right" && | ||

| t.right.dbEntry.idType == constants_1.ID_TYPE_ISBN && | ||

| i.docIDType == "isbn") { | ||

| bookChapters[(0, path_util_1.concatDirs)(papersDir.concat(bd))].isbnEntry = [ | ||

| t[0], | ||

| t.right.dbEntry, | ||

| i.isbn, | ||

@@ -539,4 +515,4 @@ ]; | ||

| const idEntryOrError = yield registerNonBookPDF(papersDir, p, jsonDB, undefined, "", [], false, undefined); | ||

| if (E.isRight(idEntryOrError)) { | ||

| const t = E.toUnion(idEntryOrError); | ||

| if (idEntryOrError._tag === "right") { | ||

| const t = idEntryOrError.right; | ||

| jsonDB[t[0]] = t[1]; | ||

@@ -576,2 +552,3 @@ } | ||

| dataFromNodeIsbn: isbnEntry.dataFromNodeIsbn, | ||

| reservedForUser: undefined, | ||

| }; | ||

@@ -578,0 +555,0 @@ const chapterID = "book_" + isbn + "_" + path_1.default.basename(pdf[pdf.length - 1]); |
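In gen.js, `getJson` no longer accumulates `json_r` and `db_id` and then returns a `[json, dbID]` tuple or `undefined`. Instead, each `docIDType` branch returns an Either of `{ dbID, dbEntry }` directly, and callers read `t.right.dbEntry` and `t.right.dbID`. The sketch below shows that control flow and assumes the same `_tag`-based Either shape as above. It omits the metadata lookups, and `toDbRecord`, its `metadata` argument and the entry fields are hypothetical. Only the key prefixes come from the diff.

```javascript
const genRight = (value) => ({ _tag: "right", right: value });
const genLeft = (error) => ({ _tag: "left", left: error });

// `metadata` stands in for the arXiv, Crossref or ISBN lookup result.
function toDbRecord(docID, pathPDF, metadata) {
  let dbID;
  if (docID.docIDType === "arxiv") {
    dbID = "arxiv_" + docID.arxiv;
  } else if (docID.docIDType === "doi") {
    dbID = "doi_" + docID.doi;
  } else if (docID.docIDType === "isbn") {
    dbID = "isbn_" + docID.isbn;
  } else if (docID.docIDType === "path") {
    // Path IDs need no remote lookup; path segments are joined with "_".
    return genRight({ dbID: "path_" + docID.path.join("_"), dbEntry: { path: pathPDF } });
  } else {
    // 0.0.23 logs a fatal error and exits here; this sketch returns a Left instead.
    return genLeft("Invalid docID.docIDType = " + docID.docIDType);
  }
  if (metadata === undefined) {
    return genLeft("Failed to get information of " + JSON.stringify(docID) + ". Path: " + pathPDF);
  }
  return genRight({ dbID: dbID, dbEntry: { path: pathPDF, metadata: metadata } });
}

const pdf = ["DistributedLearning", "paper.pdf"];
const r = toDbRecord({ docIDType: "path", path: pdf }, pdf, undefined);
console.log(r.right.dbID); // path_DistributedLearning_paper.pdf
```

Because every branch returns early, 0.0.23 no longer needs the `json_r == undefined || db_id == undefined` check that 0.0.22 ran after all branches.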

| "use strict"; | ||

| var __createBinding = (this && this.__createBinding) || (Object.create ? (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| var desc = Object.getOwnPropertyDescriptor(m, k); | ||

| if (!desc || ("get" in desc ? !m.__esModule : desc.writable || desc.configurable)) { | ||

| desc = { enumerable: true, get: function() { return m[k]; } }; | ||

| } | ||

| Object.defineProperty(o, k2, desc); | ||

| }) : (function(o, m, k, k2) { | ||

| if (k2 === undefined) k2 = k; | ||

| o[k2] = m[k]; | ||

| })); | ||

| var __setModuleDefault = (this && this.__setModuleDefault) || (Object.create ? (function(o, v) { | ||

| Object.defineProperty(o, "default", { enumerable: true, value: v }); | ||

| }) : function(o, v) { | ||

| o["default"] = v; | ||

| }); | ||

| var __importStar = (this && this.__importStar) || function (mod) { | ||

| if (mod && mod.__esModule) return mod; | ||

| var result = {}; | ||

| if (mod != null) for (var k in mod) if (k !== "default" && Object.prototype.hasOwnProperty.call(mod, k)) __createBinding(result, mod, k); | ||

| __setModuleDefault(result, mod); | ||

| return result; | ||

| }; | ||

| var __awaiter = (this && this.__awaiter) || function (thisArg, _arguments, P, generator) { | ||

@@ -37,14 +14,14 @@ function adopt(value) { return value instanceof P ? value : new P(function (resolve) { resolve(value); }); } | ||

| const docid_1 = require("./docid"); | ||

| const E = __importStar(require("fp-ts/lib/Either")); | ||

| const either_1 = require("./either"); | ||

| function rightDoi(doi) { | ||

| return E.right({ docIDType: "doi", doi: doi }); | ||

| return (0, either_1.genRight)({ docIDType: "doi", doi: doi }); | ||

| } | ||

| function rightIsbn(isbn) { | ||

| return E.right({ docIDType: "isbn", isbn: isbn }); | ||

| return (0, either_1.genRight)({ docIDType: "isbn", isbn: isbn }); | ||

| } | ||

| function rightPath(path) { | ||

| return E.right({ docIDType: "path", path: path }); | ||

| return (0, either_1.genRight)({ docIDType: "path", path: path }); | ||

| } | ||

| function rightArxiv(arxiv) { | ||

| return E.right({ docIDType: "arxiv", arxiv: arxiv }); | ||

| return (0, either_1.genRight)({ docIDType: "arxiv", arxiv: arxiv }); | ||

| } | ||

@@ -70,12 +47,10 @@ test.skip("DOI from title", () => __awaiter(void 0, void 0, void 0, function* () { | ||

| const docID = yield (0, docid_1.getDocID)(pdf, ["hoge"], false, undefined); | ||

| if (E.isRight(docID)) { | ||

| const t = yield (0, gen_1.getJson)(E.toUnion(docID), pdf); | ||

| expect(t).toBeTruthy(); | ||

| if (t == undefined) | ||

| if (docID._tag === "right") { | ||

| const t = yield (0, gen_1.getJson)(docID.right, pdf); | ||

| if (t._tag === "left") | ||

| return; | ||

| const json = t[0]; | ||

| expect(json).toBeTruthy(); | ||

| if (json == undefined) | ||

| const json = t.right.dbEntry; | ||

| if (json.idType !== "path") | ||

| return; | ||

| expect(json["title"]).toBe("DistributedLearning/[Jeffrey Dean] Large Scale Distributed Deep Networks [jendeley no id].pdf"); | ||

| expect(json.title).toBe("DistributedLearning/[Jeffrey Dean] Large Scale Distributed Deep Networks [jendeley no id].pdf"); | ||

| } | ||

@@ -82,0 +57,0 @@ })); |

@@ -6,3 +6,3 @@ { | ||

| }, | ||

| "version": "0.0.22", | ||

| "version": "0.0.23", | ||

| "description": "", | ||

@@ -37,3 +37,3 @@ "main": "index.js", | ||

| }, | ||

| "homepage": "https://github.com/akawashiro/jendeley/tree/main/jendeley-backend#readme", | ||

| "homepage": "https://akawashiro.github.io/jendeley/", | ||

| "devDependencies": { | ||

@@ -54,3 +54,2 @@ "@types/base-64": "^1.0.0", | ||

| "dependencies": { | ||

| "fp-ts": "^2.13.1", | ||

| "base-64": "^1.0.0", | ||

@@ -57,0 +56,0 @@ "body-parser": "^1.20.1", |

@@ -16,62 +16,1 @@ # This software is still experimental. Please wait until 1.0.0 for heavy use. | ||

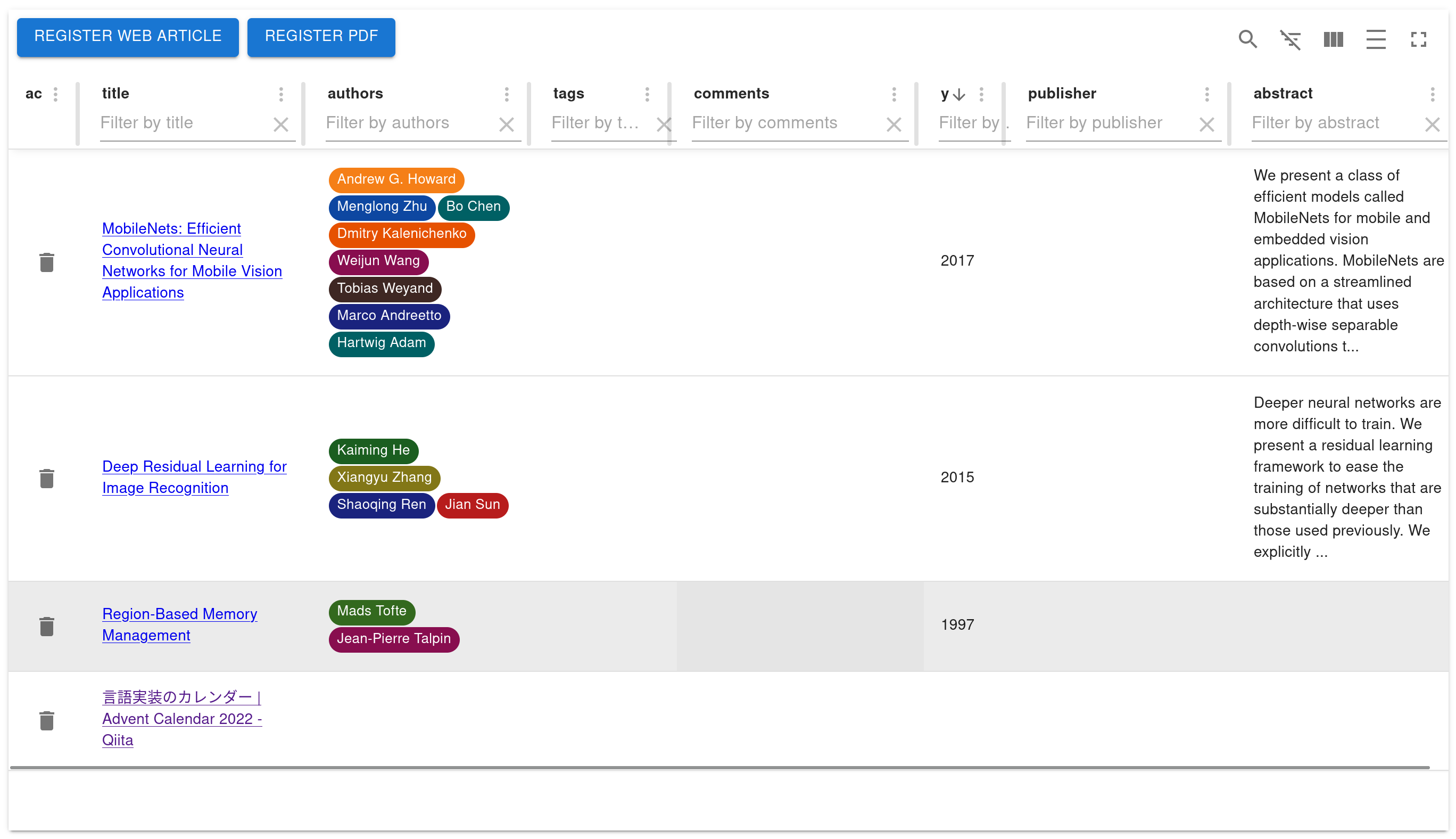

|  | ||

| ## How to install | ||

| ``` | ||

| npm install @a_kawashiro/jendeley -g | ||

| ``` | ||

| ## How to scan your PDFs | ||

| This command emits the database to `<YOUR PDFs DIR>/jendeley_db.json`. When `jendeley` failed to scan some PDFs, it emit a shellscript `edit_and_run.sh`. Please read the next subsection and rename files using it. | ||

| ``` | ||

| jendeley scan --papers_dir <YOUR PDFs DIR> | ||

| ``` | ||

| #### Recommended filename style | ||

| `jendeley` use filename also to find the document ID (e.g. [DOI](https://www.doi.org/) or [ISBN](https://en.wikipedia.org/wiki/ISBN))). `jendeley` recognizes the filename other than surrounded by `[` and `]` as the title of the file. So I recommend you to name file such way. For example, | ||

| - `Register Allocation and Optimal Spill Code Scheduling in Software Pipelined Loops Using 0-1 Integer Linear Programming Formulation.pdf` | ||

| - When the title of document includes spaces, the filename should also includes spaces. | ||

| - `RustHorn CHC-based Verification for Rust Programs [matushita].pdf` | ||

| - If you want to write additional information in the filename, please surround by `[` and `]`. | ||

| #### When failed to scan your PDFs | ||

| `jendeley` is heavily dependent on [DOI](https://www.doi.org/) or [ISBN](https://en.wikipedia.org/wiki/ISBN) to find title, authors and published year of PDFs. So `jendeley` try to find DOI of given PDFs in many ways. But sometimes all of them fails to find DOI. In that case, you can specify DOI of PDF manually using filename. | ||

| - To specify DOI, change the filename to include `[jendeley doi <DOI replaced all delimiters with underscore>]`. For example, `cyclone [jendeley doi 10_1145_512529_512563].pdf`. | ||

| - To specify ISBN, change the filename to include `[jendeley isbn <ISBN>]`. For example, `hoge [jendeley isbn 9781467330763].pdf`. | ||

| ## Launch jendeley UI | ||

| ``` | ||

| jendeley launch --db <YOUR PDFs DIR>/jendeley_db.json | ||

| ``` | ||

| You can use `--port` option to change the default port. | ||

| #### If you want to launch `jendeley` automatically | ||

| When you are using Linux, you can launch `jendeley` automatically using `systemd`. Please make `~/.config/systemd/user/jendeley.service` with the following contents, run `systemctl --user enable jendeley && systemctl --user start jendeley` and access [http://localhost:5000](http://localhost:5000). You can check log with `journalctl --user -f -u jendeley.service`. | ||

| ``` | ||

| [Unit] | ||

| Description=jendeley JSON based document organization software | ||

| [Service] | ||

| ExecStart=jendeley launch --db <FILL PATH TO THE YOUR DATABASE JSON FILE> --no_browser | ||

| [Install] | ||

| WantedBy=default.target | ||

| ``` | ||

| ## Check your database | ||

| Because `jendeley` is fully JSON-based, you can check the contents of the | ||

| database easily. For example, you can use `jq` command to list up all titles in | ||

| your database with the following command. | ||

| ``` | ||

| > cat jendeley_db.json | jq '.' | head | ||

| { | ||

| "jendeley_meta": { | ||

| "idType": "meta", | ||

| "version": "0.0.17" | ||

| }, | ||

| "doi_10.1145/1122445.1122456": { | ||

| "path": "/A Comprehensive Survey of Neural Architecture Search.pdf", | ||

| "idType": "doi", | ||

| "tags": [], | ||

| "comments": "", | ||

| ``` |
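The removed README lines explain how to embed a DOI or ISBN in a filename when automatic detection fails. The sketch below builds such filenames. It assumes that only `.` and `/` count as DOI delimiters, which matches the README example; hyphens are kept because several DOI patterns in docid.js match them literally. It also assumes ISBNs should be written as digits only, as the `isbn` pattern `[0-9]{10,}` in docid.js expects. `withDoiTag` and `withIsbnTag` are hypothetical helpers, not jendeley commands.

```javascript
const path = require("path");

// Builds "<name> [jendeley doi <DOI with . and / replaced by _>].<ext>".
// Returns a bare filename: any directory part of `filename` is dropped.
function withDoiTag(filename, doi) {
  const ext = path.extname(filename);
  const encoded = doi.replace(/[./]/g, "_");
  return path.basename(filename, ext) + " [jendeley doi " + encoded + "]" + ext;
}

// Builds "<name> [jendeley isbn <digits>].<ext>". Hyphens are stripped
// because the isbn pattern in docid.js accepts digits only.
function withIsbnTag(filename, isbn) {
  const ext = path.extname(filename);
  const digits = isbn.replace(/-/g, "");
  return path.basename(filename, ext) + " [jendeley isbn " + digits + "]" + ext;
}

console.log(withDoiTag("cyclone.pdf", "10.1145/512529.512563"));
// cyclone [jendeley doi 10_1145_512529_512563].pdf
console.log(withIsbnTag("hoge.pdf", "978-1-4673-3076-3"));
// hoge [jendeley isbn 9781467330763].pdf
```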

Sorry, the diffs of 4 further files are not supported yet.

New alerts

License Policy Violation

License: This package is not allowed per your license policy. Review the package's license to ensure compliance.

Found 1 instance in 1 package

Fixed alerts

License Policy Violation

License: This package is not allowed per your license policy. Review the package's license to ensure compliance.

Found 1 instance in 1 package

Major refactor

Supply chain risk: The package has recently undergone a major refactor. It may be unstable or indicate significant internal changes. Use caution when updating to versions that include significant changes.

Found 1 instance in 1 package

Improved metrics

- Dependency count: 16 (decreased by 5.88%)
- Number of package files: 56 (increased by 3.7%)
- Number of medium supply chain risk alerts: 11 (decreased by 8.33%)

Worsened metrics

- Total package byte size: 4268869 (decreased by 0.13%)
- Lines of code: 6172 (decreased by 1.23%)
- Number of lines in readme file: 16 (decreased by 79.22%)

Dependency changes

+ Added nanoid@3.3.7 (transitive)
- Removed fp-ts@^2.13.1
- Removed fp-ts@2.16.9 (transitive)
- Removed nanoid@3.3.8 (transitive)