Product

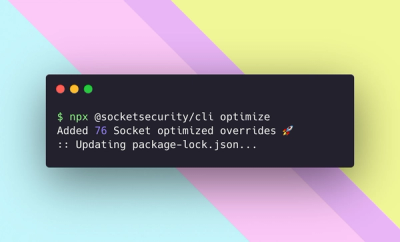

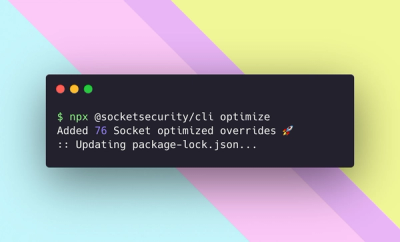

Introducing Socket Optimize

We're excited to introduce Socket Optimize, a powerful CLI command to secure open source dependencies with tested, optimized package overrides.

compromise

Advanced tools

npm install compromise

compromise tries its best to turn text into data.

compromise tries its best to turn text into data.

it makes limited and sensible decisions.

it makes limited and sensible decisions.

it is not as smart as you'd think.

it is not as smart as you'd think.

import nlp from 'compromise'

let doc = nlp('she sells seashells by the seashore.')

doc.verbs().toPastTense()

doc.text()

// 'she sold seashells by the seashore.'

if (doc.has('simon says #Verb')) {

return true

}

let doc = nlp(entireNovel)

doc.match('the #Adjective of times').text()

// "the blurst of times?"

compute metadata, and grab it:

import plg from 'compromise-speech'

nlp.extend(plg)

let doc = nlp('Milwaukee has certainly had its share of visitors..')

doc.compute('syllables')

doc.places().json()

/*

[{

"text": "Milwaukee",

"terms": [{

"normal": "milwaukee",

"syllables": ["mil", "waukee"]

}]

}]

*/

quickly flip between parsed and unparsed forms:

let doc = nlp('soft and yielding like a nerf ball')

doc.out({ '#Adjective': (m)=>`<i>${m.text()}</i>` })

// '<i>soft</i> and <i>yielding</i> like a nerf ball'

avoid idiomatic problems, and brittle parsers:

let doc = nlp("we're not gonna take it..")

doc.has('gonna') // true

doc.has('going to') // true (implicit)

// transform

doc.contractions().expand()

dox.text()

// 'we are not going to take it..'

whip stuff around like it's data:

let doc = nlp('ninety five thousand and fifty two')

doc.numbers().add(20)

doc.text()

// 'ninety five thousand and seventy two'

because it actually is:

let doc = nlp('the purple dinosaur')

doc.nouns().toPlural()

doc.text()

// 'the purple dinosaurs'

Use it on the client-side:

<script src="https://unpkg.com/compromise"></script>

<script>

var doc = nlp('two bottles of beer')

doc.numbers().minus(1)

document.body.innerHTML = doc.text()

// 'one bottle of beer'

</script>

or likewise:

import nlp from 'compromise'

var doc = nlp('London is calling')

doc.verbs().toNegative()

// 'London is not calling'

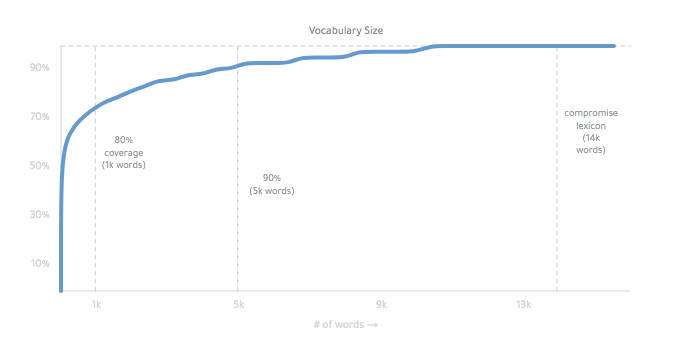

compromise is ~200kb (minified):

it's pretty fast. It can run on keypress:

it works mainly by conjugating all forms of a basic word list.

The final lexicon is ~14,000 words:

you can read more about how it works, here. it's weird.

okay,

compromise/one

A tokenizer of words, sentences, and punctuation.

import nlp from 'compromise/one'

let doc = nlp("Wayne's World, party time")

let data = doc.json()

/* [{

normal:"wayne's world party time",

terms:[{ text: "Wayne's", normal: "wayne" },

...

]

}]

*/

one splits your text up, wraps it in a handy API,

(match methods use the match-syntax.)

one is fast - most sentences take a 10th of a millisecond.

It can do ~1mb of text a second - or 10 wikipedia pages.

Infinite jest is takes 3s.

You can also paralellize, or stream text to it with compromise-speed.

compromise/two

A part-of-speech tagger, and grammar-interpreter.

import nlp from 'compromise/two'

let doc = nlp("Wayne's World, party time")

let str = doc.match('#Possessive #Noun').text()

// "Wayne's World"

this is more useful than people sometimes realize.

Really light grammar helps you write cleaner templates, and get closer to the information.

Part-of-speech tagging is profoundly-difficult task to get 100% on. It is also a profoundly easy task to get 85% on.

you can see the grammar of each word by running doc.debug(), and the reasoning for each tag with nlp.verbose('tagger').

compromise has 83 tags, arranged in a handsome graph.

#FirstName → #Person → #ProperNoun → #Noun

if you prefer Penn tags, you can derive them with:

let doc = nlp('welcome thrillho')

doc.compute('penn')

doc.json()

compromise/three

Phrase and sentence tooling.

import nlp from 'compromise/three'

let doc = nlp("Wayne's World, party time")

let str = doc.people().normalize().text()

// "wayne"

three is a set of tooling to zoom into and operate on parts of a text.

.numbers() grabs all the numbers in a document, for example - and extends it with new methods, like .subtract().

'football captain' → 'football captains''turnovers' → 'turnover''s to the end, in a safe manner.'will go' → 'went''walked' → 'walks''walked' → 'will walk''walks' → 'walk''walks' → 'walking''drive' → 'driven' - otherwise simple-past ('walked')'went' → 'did not go'"didn't study" → 'studied'25 kilos'1/3rdfive or fifth5 or 5thfifth or 5thfive or 5'$2.50'

he walks -> he walkedhe walked -> he walkshe walks -> he will walkhe walks -> he didn't walkhe doesn't walk -> he walks?!? or !!?.'wash-out''(939) 555-0113''#nlp''hi@compromise.cool':)💋'@nlp_compromise''compromise.cool''quickly''he''but''of''Mrs.'people() + places() + organizations()"Spencer's"'FBI''eats, shoots, and leaves'

compromise comes with a considerate, common-sense baseline for english grammar. You're free to change, or lay-waste to any settings - which is the fun part actually.

the easiest part is just to suggest tags for any given words:

let myWords = {

kermit: 'FirstName',

fozzie: 'FirstName',

}

let doc = nlp(muppetText, myWords)

or make heavier changes with a compromise-plugin.

import nlp from 'compromise'

nlp.extend({

// add new tags

tags: {

Character: {

isA: 'Person',

notA: 'Adjective',

},

},

// add or change words in the lexicon

words: {

kermit: 'Character',

gonzo: 'Character',

},

// add new methods to compromise

api: (View) => {

View.prototype.kermitVoice = function () {

this.sentences().prepend('well,')

this.match('i [(am|was)]').prepend('um,')

return this

}

}

})

(these methods are on the nlp object)

These are some helpful extensions:

npm install compromise-adjectives

quick

quick to quickestquick to quickerquick to quicklyquick to quickenquick to quicknessnpm install compromise-dates

June 8th or 03/03/18

2 weeks or 5mins

4:30pm or half past five

npm install compromise-export

npm install compromise-html

npm install compromise-hash

npm install compromise-keypress

npm install compromise-plugin-stats

npm install compromise-paragraphs

this plugin creates a wrapper around the default sentence objects.

npm install compromise-syllables

npm install compromise-penn-tags

we're committed to typescript/deno support, both in main and in the official-plugins:

import nlp from 'compromise'

import stats from 'compromise-stats'

const nlpEx = nlp.extend(stats)

nlpEx('This is type safe!').ngrams({ min: 1 })

slash-support:

We currently split slashes up as different words, like we do for hyphens. so things like this don't work:

nlp('the koala eats/shoots/leaves').has('koala leaves') //false

inter-sentence match:

By default, sentences are the top-level abstraction.

Inter-sentence, or multi-sentence matches aren't supported without a plugin:

nlp("that's it. Back to Winnipeg!").has('it back')//false

nested match syntax:

the danger beauty of regex is that you can recurse indefinitely.

Our match syntax is much weaker. Things like this are not (yet) possible:

doc.match('(modern (major|minor))? general')

complex matches must be achieved with successive .match() statements.

dependency parsing: Proper sentence transformation requires understanding the syntax tree of a sentence, which we don't currently do. We should! Help wanted with this.

en-pos - very clever javascript pos-tagger by Alex Corvi

naturalNode - fancier statistical nlp in javascript

dariusk/pos-js - fastTag fork in javascript

compendium-js - POS and sentiment analysis in javascript

nodeBox linguistics - conjugation, inflection in javascript

reText - very impressive text utilities in javascript

superScript - conversation engine in js

jsPos - javascript build of the time-tested Brill-tagger

spaCy - speedy, multilingual tagger in C/python

Prose - quick tagger in Go by Joseph Kato

MIT

FAQs

modest natural language processing

The npm package compromise receives a total of 41,136 weekly downloads. As such, compromise popularity was classified as popular.

We found that compromise demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 3 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

We're excited to introduce Socket Optimize, a powerful CLI command to secure open source dependencies with tested, optimized package overrides.

Product

We're excited to announce that Socket now supports the Java programming language.

Security News

Socket detected a malicious Python package impersonating a popular browser cookie library to steal passwords, screenshots, webcam images, and Discord tokens.