
crawler-url-parser


A URL parser for crawling purposes.


```sh
npm install crawler-url-parser
```

```javascript
const cup = require('crawler-url-parser');

//// parse(current_url, base_url)
let url = cup.parse("../ddd", "http://question.stackoverflow.com/aaa/bbb/ccc/");

console.log(url.normalized); // http://question.stackoverflow.com/aaa/bbb/ddd
console.log(url.host);       // question.stackoverflow.com
console.log(url.domain);     // stackoverflow.com
console.log(url.subdomain);  // question
console.log(url.protocol);   // http:
console.log(url.path);       // /aaa/bbb/ddd
```
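To see where the `parse` values above come from, here is a minimal sketch using Node's built-in WHATWG `URL` class rather than crawler-url-parser itself. The `splitHost` helper is hypothetical and naively assumes the registrable domain is the last two host labels, which is wrong for public suffixes such as `co.uk`; the library's own domain logic may differ.

```javascript
// Sketch only: built-in URL class, not crawler-url-parser's implementation.
// splitHost is a hypothetical helper: it treats the last two labels as the
// domain, which breaks for multi-label suffixes such as "co.uk".
function splitHost(host) {
  const labels = host.split(".");
  const domain = labels.slice(-2).join(".");
  const subdomain = labels.slice(0, -2).join(".");
  return { domain, subdomain };
}

// Resolve a relative link against the page it was found on.
const resolved = new URL("../ddd", "http://question.stackoverflow.com/aaa/bbb/ccc/");
const { domain, subdomain } = splitHost(resolved.hostname);

console.log(resolved.href);     // http://question.stackoverflow.com/aaa/bbb/ddd
console.log(resolved.host);     // question.stackoverflow.com
console.log(domain);            // stackoverflow.com
console.log(subdomain);         // question
console.log(resolved.protocol); // http:
console.log(resolved.pathname); // /aaa/bbb/ddd
```

Because the base URL ends with a slash, `ccc/` is treated as a directory, so `../ddd` climbs to `/aaa/bbb/` before appending `ddd`.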

```javascript
const cup = require('crawler-url-parser');

//// extract(html_str, current_url);
let htmlStr = `<html>
<body>
<a href="http://www.stackoverflow.com/internal-1">test-link-4</a><br />
<a href="http://www.stackoverflow.com/internal-2">test-link-5</a><br />
<a href="http://www.stackoverflow.com/internal-2">test-link-6</a><br />
<a href="http://faq.stackoverflow.com/subdomain-1">test-link-7</a><br />
<a href="http://faq.stackoverflow.com/subdomain-2">test-link-8</a><br />
<a href="http://faq.stackoverflow.com/subdomain-2">test-link-9</a><br />
<a href="http://www.google.com/external-1">test-link-10</a><br />
<a href="http://www.google.com/external-2">test-link-11</a><br />
<a href="http://www.google.com/external-2">test-link-12</a><br />
</body>
</html>`;

let currentUrl = "http://www.stackoverflow.com/aaa/bbb/ccc";

let urls = cup.extract(htmlStr, currentUrl);

console.log(urls.length); // 6 (nine links, six distinct URLs)
```
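The `extract` example yields 6 for nine anchors because three hrefs repeat. A rough sketch of that resolve-and-de-duplicate idea follows; it assumes nothing about the library's internals. `extractLinksSketch` is a hypothetical name, dropping the `#fragment` is a design choice of this sketch, and the regular expression only stands in for a real HTML parser.

```javascript
// Rough sketch, not crawler-url-parser's implementation.
// extractLinksSketch is hypothetical; a production crawler should use an
// HTML parser instead of a regular expression.
function extractLinksSketch(html, pageUrl) {
  const hrefPattern = /<a\s[^>]*href\s*=\s*["']([^"']+)["']/gi;
  const seen = new Set();
  for (const match of html.matchAll(hrefPattern)) {
    try {
      const url = new URL(match[1], pageUrl); // resolve relative hrefs
      url.hash = "";                          // "page#a" and "page" count once
      seen.add(url.href);                     // the Set drops duplicates
    } catch {
      // skip hrefs that cannot be parsed as URLs
    }
  }
  return [...seen];
}

const html = `<html><body>
  <a href="http://www.stackoverflow.com/internal-1">test-link-4</a>
  <a href="http://www.stackoverflow.com/internal-2">test-link-5</a>
  <a href="http://www.stackoverflow.com/internal-2">test-link-6</a>
  <a href="/relative">relative</a>
</body></html>`;

const links = extractLinksSketch(html, "http://www.stackoverflow.com/aaa/bbb/ccc");
console.log(links.length); // 3
```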

```javascript
const cup = require('crawler-url-parser');

//// getlevel(current_url, base_url);
let level = cup.getlevel("sub.domain.com/aaa/bbb/", "sub.domain.com/aaa/bbb/ccc");
console.log(level); // sublevel

level = cup.getlevel("sub.domain.com/aaa/bbb/ccc/ddd", "sub.domain.com/aaa/bbb/ccc");
console.log(level); // uplevel

level = cup.getlevel("sub.domain.com/aaa/bbb/eee", "sub.domain.com/aaa/bbb/ccc");
console.log(level); // samelevel

level = cup.getlevel("sub.domain.com/aaa/bbb/eee", "sub.anotherdomain.com/aaa/bbb/ccc");
console.log(level); // null
```
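The sketch below reproduces the four `getlevel` outputs above under a rule inferred only from those examples: the host must match, a shorter current path that is a prefix of the base is `sublevel`, a deeper current path under the base is `uplevel`, and a sibling path is `samelevel`. `getLevelSketch`, `withScheme` and `segments` are hypothetical helpers, and anything beyond those four cases (for example an unrelated path on the same host, which returns `null` here) is an assumption rather than documented library behavior.

```javascript
// Sketch only, not crawler-url-parser's implementation: the rule is
// inferred from the four README examples. All names are hypothetical.

// The README passes scheme-less strings such as "sub.domain.com/aaa";
// the WHATWG URL class needs a scheme, so assume http when none is given.
function withScheme(u) {
  return /^[a-z][a-z0-9+.-]*:\/\//i.test(u) ? u : "http://" + u;
}

// Non-empty path segments, so a trailing slash is ignored.
function segments(url) {
  return url.pathname.split("/").filter(Boolean);
}

function getLevelSketch(currentUrl, baseUrl) {
  const cur = new URL(withScheme(currentUrl));
  const base = new URL(withScheme(baseUrl));
  if (cur.host !== base.host) return null; // different host: no level

  const a = segments(cur);
  const b = segments(base);

  if (a.length === b.length) {
    // Same depth: "samelevel" when every segment except the last matches.
    const sameParent = a.slice(0, -1).every((seg, i) => seg === b[i]);
    return sameParent ? "samelevel" : null;
  }

  // Different depth: the shorter path must be a prefix of the longer one.
  const shorter = a.length < b.length ? a : b;
  if (!shorter.every((_, i) => a[i] === b[i])) return null; // assumption
  // Labels follow the README: shorter current path -> "sublevel", deeper -> "uplevel".
  return a.length < b.length ? "sublevel" : "uplevel";
}

console.log(getLevelSketch("sub.domain.com/aaa/bbb/", "sub.domain.com/aaa/bbb/ccc"));           // sublevel
console.log(getLevelSketch("sub.domain.com/aaa/bbb/ccc/ddd", "sub.domain.com/aaa/bbb/ccc"));    // uplevel
console.log(getLevelSketch("sub.domain.com/aaa/bbb/eee", "sub.domain.com/aaa/bbb/ccc"));        // samelevel
console.log(getLevelSketch("sub.domain.com/aaa/bbb/eee", "sub.anotherdomain.com/aaa/bbb/ccc")); // null
```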

```javascript
const cup = require('crawler-url-parser');

//// querycount(url)
let count = cup.querycount("sub.domain.com/aaa/bbb?q1=data1&q2=data2&q3=data3");

console.log(count); // 3
```
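A minimal sketch of query counting with the built-in `URL` and `URLSearchParams` classes. `countQueryParams` is a hypothetical name; it counts every key=value pair, so `?a=1&a=2` gives 2, and whether `querycount` treats repeated keys the same way is not stated in this README.

```javascript
// Sketch only, not crawler-url-parser's implementation.
// countQueryParams is hypothetical and counts every key=value pair,
// including repeated keys.
function countQueryParams(rawUrl) {
  // The URL class requires a scheme, so assume http for scheme-less input.
  const withScheme = /^[a-z][a-z0-9+.-]*:\/\//i.test(rawUrl) ? rawUrl : "http://" + rawUrl;
  const params = new URL(withScheme).searchParams;
  return Array.from(params.keys()).length;
}

console.log(countQueryParams("sub.domain.com/aaa/bbb?q1=data1&q2=data2&q3=data3")); // 3
console.log(countQueryParams("sub.domain.com/aaa/bbb"));                             // 0
```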

To run the tests, use `mocha` or `npm test`.

There are more than 200 unit test cases. Check the test folder and QUICKSTART.js for further usage examples.



Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Ransomware costs victims an estimated $30 billion per year and has gotten so out of control that global support for banning payments is gaining momentum.

Application Security

New SEC disclosure rules aim to enforce timely cyber incident reporting, but fear of job loss and inadequate resources lead to significant underreporting.

Security News

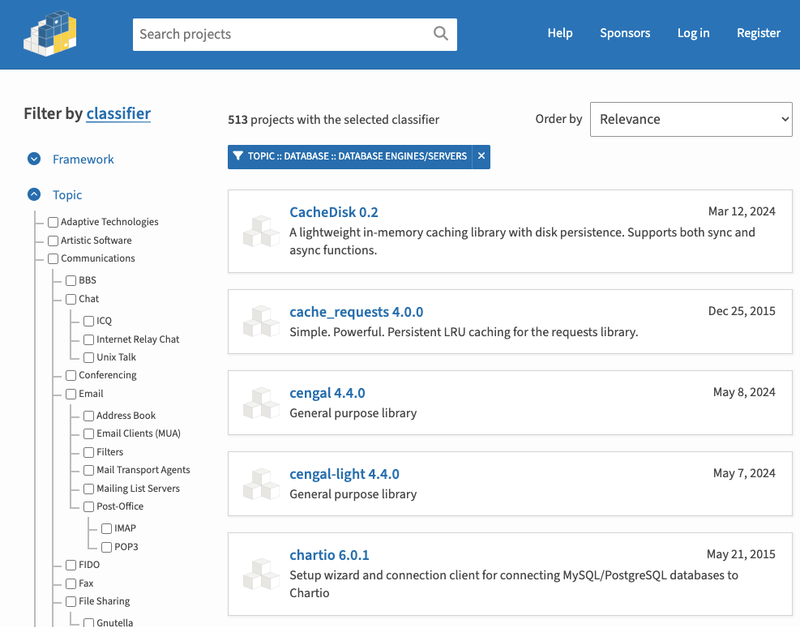

The Python Software Foundation has secured a 5-year sponsorship from Fastly that supports PSF's activities and events, most notably the security and reliability of the Python Package Index (PyPI).