
llm-interface


A simple, unified interface for integrating and interacting with multiple Large Language Model (LLM) APIs, including OpenAI, AI21 Studio, Anthropic, Cloudflare AI, Cohere, Fireworks AI, Google Gemini, Goose AI, Groq, Hugging Face, Mistral AI, Perplexity, Reka AI, and LLaMA.cpp.

The LLM Interface project is a wrapper for interacting with multiple Large Language Model (LLM) APIs. It simplifies integrating LLM providers, including OpenAI, AI21 Studio, Anthropic, Cloudflare AI, Cohere, Fireworks AI, Google Gemini, Goose AI, Groq, Hugging Face, Mistral AI, Perplexity, Reka AI, and LLaMA.cpp, into your applications. It provides a unified interface for sending messages to and receiving responses from these services, so developers can work with multiple LLMs without handling the specifics of each API.
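To make the idea of a single interface concrete, here is a minimal sketch of the general adapter pattern such a wrapper can use: one entry point looks up a provider-specific adapter by name, so callers use the same call shape for every provider. The names `sendMessage`, `resolveProvider`, `adapters`, and `LEGACY_PROVIDER_NAMES` are hypothetical and are not llm-interface's internals; the adapters only echo text instead of calling real APIs. The only detail taken from the project is the set of provider renames listed in its v2.0.2 notes.

```javascript
// Hypothetical sketch of a unified entry point that routes each request to a
// provider-specific adapter. This is NOT llm-interface's internal code.

// Provider names renamed in v2.0.2, per the project's release notes.
const LEGACY_PROVIDER_NAMES = new Map([
  ['reka', 'rekaai'],
  ['goose', 'gooseai'],
  ['mistral', 'mistralai'],
]);

// Return the content of the last message in a message object.
function lastContent(message) {
  return message.messages[message.messages.length - 1].content;
}

// Hypothetical adapters. Real ones would translate the request into each
// provider's HTTP or SDK format; these just echo the last message.
const adapters = new Map([
  ['openai', async (apiKey, message) => ({ results: `[openai] ${lastContent(message)}` })],
  ['rekaai', async (apiKey, message) => ({ results: `[rekaai] ${lastContent(message)}` })],
]);

// Map a legacy provider name to its current name; pass other names through.
function resolveProvider(name) {
  return LEGACY_PROVIDER_NAMES.get(name) ?? name;
}

// Single entry point: the call shape is identical for every provider.
async function sendMessage(provider, apiKey, message) {
  const adapter = adapters.get(resolveProvider(provider));
  if (!adapter) {
    throw new Error(`Unsupported provider: ${provider}`);
  }
  return adapter(apiKey, message);
}

sendMessage('reka', 'dummy-key', { messages: [{ role: 'user', content: 'Hi' }] })
  .then((response) => console.log(response.results)); // prints "[rekaai] Hi"
```

Keeping the provider-specific logic inside adapters means adding a provider only touches the adapter table, not the call sites.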

LLMInterfaceSendMessage is a single, consistent interface for interacting with fourteen different LLM APIs.

v2.0.3

New functions: LLMInterface.getAllModelNames() and LLMInterface.getModelConfigValue(provider, configValueKey).

v2.0.2

interfaceOptions.attemptJsonRepair attempts to repair invalid JSON responses when they occur.

Provider names changed: reka becomes rekaai, goose becomes gooseai, mistral becomes mistralai.

handlers has been removed.

small models for various providers.

The project relies on several npm packages and APIs. The primary dependencies are:

axios: for making HTTP requests (used for various HTTP AI APIs).

@anthropic-ai/sdk: SDK for interacting with the Anthropic API.

@google/generative-ai: SDK for interacting with the Google Gemini API.

groq-sdk: SDK for interacting with the Groq API.

openai: SDK for interacting with the OpenAI API.

dotenv: for managing environment variables. Used by the test cases.

flat-cache: for caching API responses to improve performance and reduce redundant requests.

jsonrepair: used to repair invalid JSON responses.

jest: for running the test cases.

To install the llm-interface package, use npm:

npm install llm-interface

First, import LLMInterfaceSendMessage, using either the CommonJS require syntax:

const { LLMInterfaceSendMessage } = require('llm-interface');

or the ES6 import syntax:

import { LLMInterfaceSendMessage } from 'llm-interface';

Then send your prompt to the LLM provider of your choice:

const message = {
  model: 'gpt-3.5-turbo',
  messages: [
    { role: 'system', content: 'You are a helpful assistant.' },
    { role: 'user', content: 'Explain the importance of low latency LLMs.' },
  ],
};

LLMInterfaceSendMessage('openai', process.env.OPENAI_API_KEY, message, {
  max_tokens: 150,
})
  .then((response) => {
    console.log(response.results);
  })
  .catch((error) => {
    console.error(error);
  });
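When you ask a model for JSON, the text it returns does not always parse cleanly (for example, it may be wrapped in a Markdown code fence or contain trailing commas). The v2.0.2 notes describe interfaceOptions.attemptJsonRepair for this case, and the dependency list names jsonrepair as the package used to repair invalid JSON. The sketch below is a hypothetical, much simpler stand-in (`parseModelJson` is an illustrative name) that shows the idea of "parse, and if that fails, clean up and retry"; it is not what llm-interface or jsonrepair actually do.

```javascript
// Hypothetical, simplified stand-in for JSON repair. llm-interface itself
// relies on the jsonrepair package, which handles far more cases.
function parseModelJson(text) {
  try {
    return JSON.parse(text);
  } catch (firstError) {
    let cleaned = text.trim();
    // Unwrap a Markdown code fence (three backticks, optional "json" tag).
    const fence = cleaned.match(/^`{3}(?:json)?\s*([\s\S]*?)\s*`{3}$/);
    if (fence) {
      cleaned = fence[1];
    }
    // Drop trailing commas before a closing brace or bracket.
    // Caveat: this naive regex would also alter such text inside string values.
    cleaned = cleaned.replace(/,\s*([}\]])/g, '$1');
    try {
      return JSON.parse(cleaned);
    } catch {
      // Still invalid: surface the original parse error.
      throw firstError;
    }
  }
}

console.log(parseModelJson('{"ok": true,}')); // prints { ok: true }
```

A dedicated repair library is the safer choice in practice; hand-written regex fixes like these only cover a few predictable failure shapes.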

Or, to keep things simple, pass the prompt as a plain string:

LLMInterfaceSendMessage(
  'openai',
  process.env.OPENAI_API_KEY,
  'Explain the importance of low latency LLMs.',
)
  .then((response) => {
    console.log(response.results);
  })
  .catch((error) => {
    console.error(error);
  });
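With the string form, the wrapper has to expand the prompt into a full request like the message object in the first example. A minimal sketch of one way to do that, assuming the bare string becomes a single user message: `toMessageObject` and its `defaults` argument are hypothetical, and how llm-interface actually chooses a model for string prompts is not covered here.

```javascript
// Hypothetical helper: expand the shorthand string form into the message-object
// form from the first example. Not llm-interface's own implementation; in
// particular, how the library picks a default model is not shown here.
function toMessageObject(prompt, defaults = {}) {
  if (typeof prompt === 'string') {
    // A bare string becomes a single user message.
    return { ...defaults, messages: [{ role: 'user', content: prompt }] };
  }
  // Already a message object: fields set by the caller override the defaults.
  return { ...defaults, ...prompt };
}

console.log(
  JSON.stringify(
    toMessageObject('Explain the importance of low latency LLMs.', { model: 'gpt-3.5-turbo' }),
  ),
);
// {"model":"gpt-3.5-turbo","messages":[{"role":"user","content":"Explain the importance of low latency LLMs."}]}
```

Normalizing both forms into one shape early lets the rest of the request path handle a single data structure.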

If you need API keys, the project documentation provides a starting point for obtaining them. Additional usage examples and an API reference are also available, and the test cases contain further examples.
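The examples read the key from process.env.OPENAI_API_KEY. A small helper can make a missing key fail early with a clear message instead of surfacing as a provider error. This is a hypothetical sketch: `getApiKey` is an illustrative name, and the `<PROVIDER>_API_KEY` naming scheme is an assumption generalized from OPENAI_API_KEY, not a convention the project documents here. Since dotenv is listed as a dependency used by the test cases, note that calling `require('dotenv').config()` loads variables from a `.env` file into process.env before such a lookup.

```javascript
// Hypothetical helper: look up a provider's API key and fail fast if it is missing.
// Assumption: keys follow a <PROVIDER>_API_KEY naming scheme, generalized from
// the OPENAI_API_KEY variable used in the examples; other providers may differ.
function getApiKey(provider, env = process.env) {
  const variable = `${provider.toUpperCase()}_API_KEY`;
  const key = env[variable];
  if (!key) {
    throw new Error(`Missing API key: set the ${variable} environment variable`);
  }
  return key;
}

// Passing an explicit object instead of process.env keeps the helper easy to test.
console.log(getApiKey('openai', { OPENAI_API_KEY: 'sk-test' })); // prints "sk-test"
```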

The project includes tests for each LLM handler. To run the tests, use the following command:

npm test

Test Suites: 43 passed, 43 total

Tests: 172 passed, 172 total

Contributions to this project are welcome. Please fork the repository and submit a pull request with your changes or improvements.

This project is licensed under the MIT License - see the LICENSE file for details.


The npm package llm-interface receives a total of 71 weekly downloads. As such, llm-interface popularity was classified as not popular.

We found that llm-interface demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 0 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

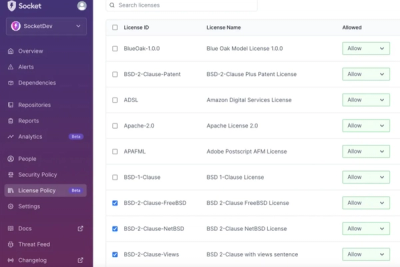

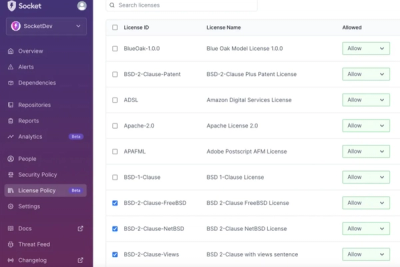

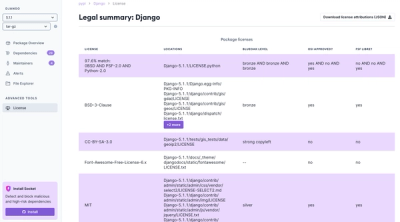

Ensure open-source compliance with Socket’s License Enforcement Beta. Set up your License Policy and secure your software!

Product

We're launching a new set of license analysis and compliance features for analyzing, managing, and complying with licenses across a range of supported languages and ecosystems.

Product

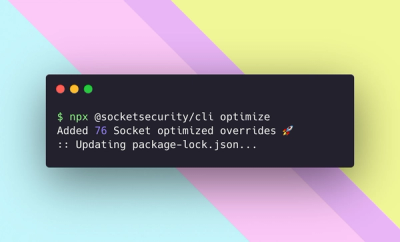

We're excited to introduce Socket Optimize, a powerful CLI command to secure open source dependencies with tested, optimized package overrides.