smartcrop - npm Package Compare versions

Comparing version 2.0.1 to 2.0.2

| { | ||

| "name": "smartcrop", | ||

| "version": "2.0.1", | ||

| "version": "2.0.2", | ||

| "description": "Content aware image cropping.", | ||

@@ -8,21 +8,20 @@ "homepage": "https://github.com/jwagner/smartcrop.js", | ||

| "main": "./smartcrop", | ||

| "files": [ | ||

| "smartcrop.js" | ||

| ], | ||

| "files": ["smartcrop.js"], | ||

| "devDependencies": { | ||

| "500px": "~0.3.2", | ||

| "benchmark": "~1.0.0", | ||

| "chai": "^3.5.0", | ||

| "grunt": "^0.4.4", | ||

| "benchmark": "^2.1.4", | ||

| "chai": "^4.1.2", | ||

| "eslint": "^4.17.0", | ||

| "grunt": "^1.0.1", | ||

| "grunt-cli": "^1.2.0", | ||

| "grunt-contrib-connect": "~0.7.1", | ||

| "grunt-contrib-watch": "~0.6.1", | ||

| "grunt-rsync": "~0.5.0", | ||

| "karma": "^1.1.0", | ||

| "karma-chrome-launcher": "^1.0.1", | ||

| "karma-mocha": "^1.1.1", | ||

| "karma-sauce-launcher": "^1.0.0", | ||

| "microtime": "^2.0.0", | ||

| "mocha": "^3.0.2", | ||

| "promise-polyfill": "^6.0.2" | ||

| "grunt-contrib-connect": "~1.0.2", | ||

| "grunt-contrib-watch": "~1.0.0", | ||

| "grunt-rsync": "~2.0.1", | ||

| "karma": "^2.0.0", | ||

| "karma-chrome-launcher": "^2.2.0", | ||

| "karma-mocha": "^1.3.0", | ||

| "karma-sauce-launcher": "^1.2.0", | ||

| "microtime": "^2.1.7", | ||

| "mocha": "^5.0.0", | ||

| "promise-polyfill": "^7.0.0" | ||

| }, | ||

@@ -36,4 +35,9 @@ "license": "MIT", | ||

| "start": "grunt", | ||

| "test": "./node_modules/.bin/karma start karma.conf.js" | ||

| "test": "npm run lint && karma start karma.conf.js", | ||

| "lint": | ||

| "eslint smartcrop.js test examples/slideshow.js examples/testbed.js examples/testsuite.js s examples/smartcrop-debug.js" | ||

| }, | ||

| "dependencies": { | ||

| "prettier-eslint": "^8.8.1" | ||

| } | ||

| } |

121

README.md

| # smartcrop.js | ||

| [](https://travis-ci.org/jwagner/smartcrop.js) | ||

| [](https://travis-ci.org/jwagner/smartcrop.js) | ||

@@ -8,31 +8,26 @@ Smartcrop.js implements an algorithm to find good crops for images. | ||

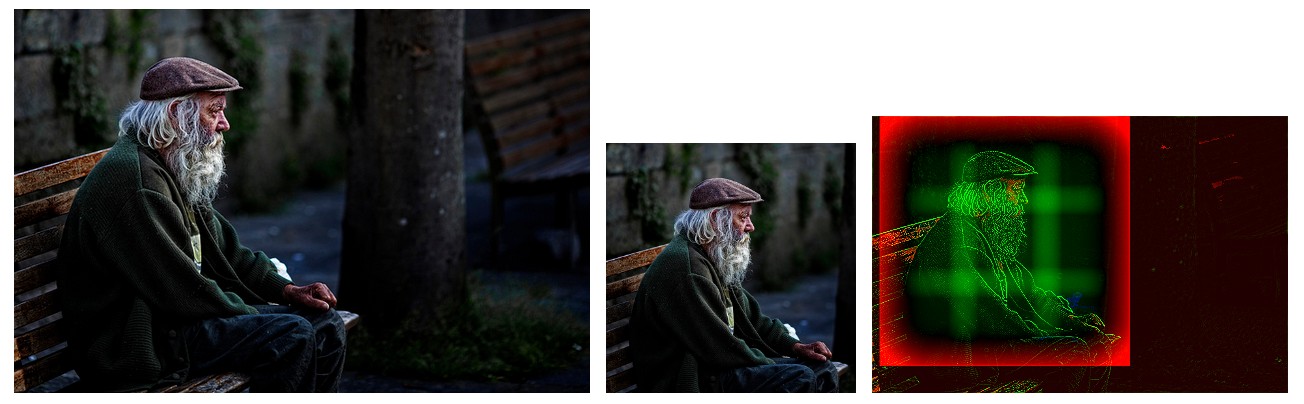

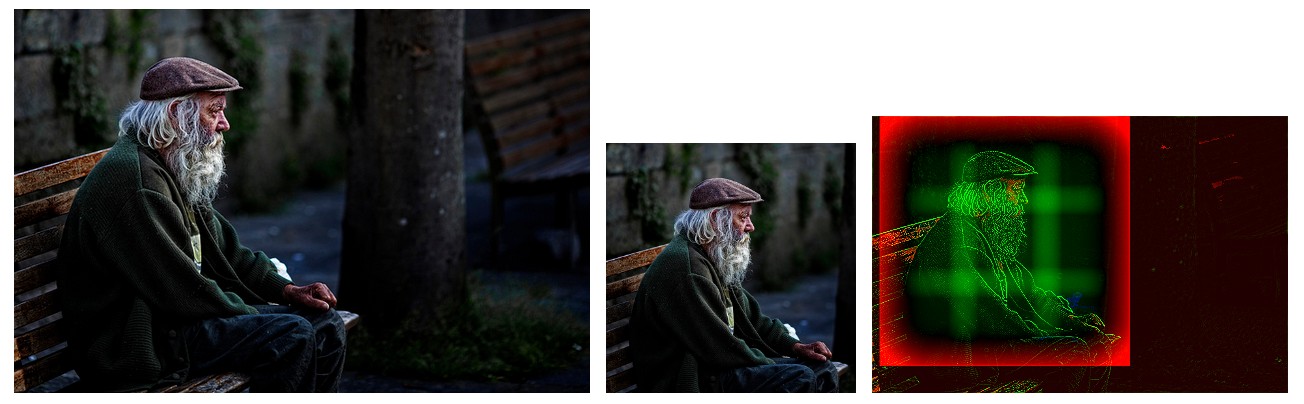

| Image: [https://www.flickr.com/photos/endogamia/5682480447/](https://www.flickr.com/photos/endogamia/5682480447) by N. Feans | ||

| ## Demos | ||

| * [Test Suite](http://29a.ch/sandbox/2014/smartcrop/examples/testsuite.html), contains over 100 images, **heavy**. | ||

| * [Test Bed](http://29a.ch/sandbox/2014/smartcrop/examples/testbed.html), allows you to test smartcrop with your own images and different face detection libraries. | ||

| * [Photo transitions](http://29a.ch/sandbox/2014/smartcrop/examples/slideshow.html), automatically creates Ken Burns transitions for a slide show. | ||

| ## Algorithm Overview | ||

| Smartcrop.js works using fairly dumb image processing. In short: | ||

| * [Smartcrop.js Test Suite](https://29a.ch/sandbox/2014/smartcrop/examples/testsuite.html), contains over 100 images, **heavy**. | ||

| * [Smartcrop.js Test Bed](https://29a.ch/sandbox/2014/smartcrop/examples/testbed.html), allows you to test smartcrop with your own images and different face detection libraries. | ||

| * [Automatic Photo transitions](https://29a.ch/sandbox/2014/smartcrop/examples/slideshow.html), automatically creates Ken Burns transitions for a slide show. | ||

| 1. Find edges using laplace | ||

| 1. Find regions with a color like skin | ||

| 1. Find regions high in saturation | ||

| 1. Boost regions as specified by options (for example detected faces) | ||

| 1. Generate a set of candidate crops using a sliding window | ||

| 1. Rank them using an importance function to focus the detail in the center | ||

| and avoid it in the edges. | ||

| 1. Output the candidate crop with the highest rank | ||

| ## Simple Example | ||

| ## Simple Example | ||

| ```javascript | ||

| smartcrop.crop(image, {width: 100, height: 100}).then(function(result){ | ||

| // you pass in an image as well as the width & height of the crop you | ||

| // want to optimize. | ||

| smartcrop.crop(image, { width: 100, height: 100 }).then(function(result) { | ||

| console.log(result); | ||

| }); | ||

| ``` | ||

| Output: | ||

| ```javascript | ||

| // smartcrop will output you it's best guess for a crop | ||

| // you can now use this data to crop the image. | ||

| {topCrop: {x: 300, y: 200, height: 200, width: 200}} | ||
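As a rough illustration of how the `topCrop` rectangle from the output above might be consumed, the sketch below turns it into the nine-argument form of `ctx.drawImage()`. The helper name `drawImageArgs`, the placeholder image value and the 100x100 target size are assumptions made for this example; none of them are part of smartcrop.js.

```javascript
// Hypothetical helper (not part of smartcrop.js): map a crop rectangle onto
// the argument list of ctx.drawImage(image, sx, sy, sw, sh, dx, dy, dw, dh).
function drawImageArgs(image, crop, targetWidth, targetHeight) {
  return [
    image,
    crop.x, crop.y, crop.width, crop.height, // source rectangle
    0, 0, targetWidth, targetHeight // destination rectangle
  ];
}

// Example using the output shown above; the string stands in for a real image.
var result = { topCrop: { x: 300, y: 200, height: 200, width: 200 } };
var args = drawImageArgs('image-placeholder', result.topCrop, 100, 100);
console.log(args.slice(1)); // the eight numeric arguments

// In a browser one could then call:
// ctx.drawImage.apply(ctx, drawImageArgs(img, result.topCrop, 100, 100));
```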

@@ -42,5 +37,4 @@ ``` | ||

| ## Download/ Installation | ||

| ```npm install smartcrop``` | ||

| or | ||

| ```bower install smartcrop``` | ||

| `npm install smartcrop` | ||

| or just download [smartcrop.js](https://raw.githubusercontent.com/jwagner/smartcrop.js/master/smartcrop.js) from the git repository. | ||

@@ -53,8 +47,53 @@ | ||

| ## Command Line Interface | ||

| The [smartcrop-cli](https://github.com/jwagner/smartcrop-cli) offers command line interface to smartcrop.js. | ||

| ## Node | ||

| You can use smartcrop from nodejs via either [smartcrop-gm](https://github.com/jwagner/smartcrop-gm) (which is using image magick via gm) or [smartcrop-sharp](https://github.com/jwagner/smartcrop-sharp) (which is using libvips via sharp). | ||

| The [smartcrop-cli](https://github.com/jwagner/smartcrop-cli) can be used as an example of using smartcrop from node. | ||

| ## Stability | ||

| While _smartcrop.js_ is a small personal project it is currently being used on high traffic production sites. | ||

| It has a basic set of automated tests and a test coverage of close to 100%. | ||

| The tests are ran in all modern browsers thanks to [saucelabs](https://saucelabs.com/). | ||

| If in any doubt the code is short enough to perform a quick review yourself. | ||

| ## Algorithm Overview | ||

| Smartcrop.js works using fairly dumb image processing. In short: | ||

| 1. Find edges using laplace | ||

| 1. Find regions with a color like skin | ||

| 1. Find regions high in saturation | ||

| 1. Boost regions as specified by options (for example detected faces) | ||

| 1. Generate a set of candidate crops using a sliding window | ||

| 1. Rank them using an importance function to focus the detail in the center | ||

| and avoid it in the edges. | ||

| 1. Output the candidate crop with the highest rank | ||
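The last three steps of the list above can be pictured with a toy example. This is only a sketch: it scores each window by a plain sum over a made-up grid of "interest" values, whereas smartcrop.js ranks candidates with its importance function. The function name `bestWindow` and all numbers are assumptions for illustration.

```javascript
// Toy version of steps 5-7: slide a fixed-size window over a grid of
// per-pixel "interest" values and keep the window with the highest sum.
function bestWindow(grid, cropWidth, cropHeight, step) {
  var height = grid.length;
  var width = grid[0].length;
  var best = null;
  for (var y = 0; y + cropHeight <= height; y += step) {
    for (var x = 0; x + cropWidth <= width; x += step) {
      var total = 0;
      for (var cy = y; cy < y + cropHeight; cy++) {
        for (var cx = x; cx < x + cropWidth; cx++) {
          total += grid[cy][cx];
        }
      }
      if (best === null || total > best.score) {
        best = { x: x, y: y, width: cropWidth, height: cropHeight, score: total };
      }
    }
  }
  return best;
}

// Made-up interest map with the interesting content on the right-hand side.
var grid = [
  [0, 0, 0, 0],
  [0, 0, 5, 9],
  [0, 0, 7, 8],
  [0, 0, 0, 0]
];
var best = bestWindow(grid, 2, 2, 1);
console.log(best); // the 2x2 window covering the values 5, 9, 7, 8
```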

| ## Face detection | ||

| The smartcrop algorithm itself is designed to be simple, relatively fast, small and generic. | ||

| In many cases it does make sense to add face detection to it to ensure faces get the priority they deserve. | ||

| There are multiple javascript libraries which can be easily integrated into smartcrop.js. | ||

| * [ccv js](https://github.com/liuliu/ccv) / [jquery.facedetection](http://facedetection.jaysalvat.com/) | ||

| * [tracking.js](https://trackingjs.com/examples/face_hello_world.html) | ||

| * [opencv.js](https://docs.opencv.org/3.3.1/d5/d10/tutorial_js_root.html) | ||

| * [node-opencv](https://github.com/peterbraden/node-opencv) | ||

| You can experiment with all of these in the [smartcrop.js testbed](https://29a.ch/sandbox/2014/smartcrop/examples/testbed.html) | ||

| On the client side I would recommend using tracking.js because it's small and simple. Opencv.js is compiled from c++ and very heavy (~7.6MB of javascript + 900kb of data). | ||

| jquery.facedetection has dependency on jquery and from my limited experience seems to perform worse than the others. | ||

| On the server side node-opencv can be quicker but comes with some [annoying issues](https://github.com/peterbraden/node-opencv/issues/415) as well. | ||

| It's also worth noting that all of these libraries are based on the now dated [viola-jones](https://en.wikipedia.org/wiki/Viola%E2%80%93Jones_object_detection_framework) object detection framework. | ||

| It would be interesting to see how more [state of the art](http://mmlab.ie.cuhk.edu.hk/projects/WIDERFace/WiderFace_Results.html) techniques could be implemented in browser friendly javascript. | ||
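A minimal sketch of how detector output might be handed to smartcrop as boost regions. It assumes the detector reports faces as `{x, y, width, height}` rectangles in image pixels (the actual format differs between the libraries listed above) and uses a weight of 1.0 purely as an example value. `facesToBoosts` is a hypothetical helper; the `x`, `y`, `width`, `height` and `weight` fields are the ones smartcrop.js reads from `options.boost` in the source diff further down.

```javascript
// Hypothetical helper: convert face rectangles into smartcrop boost regions.
function facesToBoosts(faces, weight) {
  return faces.map(function(face) {
    return {
      x: face.x,
      y: face.y,
      width: face.width,
      height: face.height,
      weight: weight
    };
  });
}

// Made-up detection result for illustration.
var faces = [{ x: 120, y: 40, width: 64, height: 64 }];
var options = { width: 100, height: 100, boost: facesToBoosts(faces, 1.0) };
console.log(options.boost);

// In a browser one could then call:
// smartcrop.crop(image, options).then(function(result) { console.log(result); });
```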

| ## Supported Module Formats | ||

@@ -70,11 +109,10 @@ | ||

| A [polyfill](https://github.com/taylorhakes/promise-polyfill) for | ||

| [Promises](http://caniuse.com/#feat=promises) is recommended. | ||

| [Promises](http://caniuse.com/#feat=promises) is recommended if you need to support old browsers. | ||

| ## API | ||

| The API is not yet finalized, expect changes. | ||

| ### smartcrop.crop(image, options) | ||

| Find the best crop for *image* using *options*. | ||

| Find the best crop for _image_ using _options_. | ||

| **image:** anything ctx.drawImage() accepts, usually HTMLImageElement, HTMLCanvasElement or HTMLVideoElement. | ||

@@ -101,3 +139,3 @@ | ||

| **debug *(internal)*:** if true, cropResults will contain a debugCanvas and the complete results array. | ||

| **debug _(internal)_:** if true, cropResults will contain a debugCanvas and the complete results array. | ||

@@ -108,6 +146,8 @@ There are many more (for now undocumented) options available. | ||

| ### cropResult | ||

| Result of the promise returned by smartcrop.crop. | ||

| ```javascript | ||

| { | ||

| topCrop: crop | ||

| topCrop: crop; | ||

| } | ||

@@ -117,4 +157,5 @@ ``` | ||

| ### crop | ||

| An invididual crop. | ||

| An individual crop. | ||

| ```javascript | ||

@@ -130,2 +171,3 @@ { | ||

| ### boost | ||

| Describes a region to boost. A usage example of this is to take | ||

@@ -144,13 +186,13 @@ into account faces in the image. See [smartcrop-cli](https://github.com/jwagner/smartcrop-cli) for an example on how to integrate face detection. | ||

| ## Tests | ||

| You can run the tests using grunt test. Alternatively you can also just run grunt (the default task) and open http://localhost:8000/test/. | ||

| The test coverage for smartcrop.js is very limited at the moment. I expect to improve this as the code matures and the concepts solidify. | ||

| You can run the tests using `grunt test`. Alternatively you can also just run grunt (the default task) and open http://localhost:8000/test/. | ||

| ## Benchmark | ||

| There are benchmarks for both the browser (test/benchmark.html) and node (node test/benchmark-node.js [requires node-canvas]) | ||

| both powered by [benchmark.js](http://benchmarkjs.com). | ||

| If you just want some rough numbers: It takes **< 100 ms** to find a **square crop** of a **640x427px** picture on an i7. | ||

| In other words, it's fine to run it on one image, it's not cool to run it on an entire gallery on page load. | ||

| If you just want some rough numbers: It takes **< 20 ms** to find a **square crop** of a **640x427px** picture on an i7. | ||

| In other words, it's fine to run it on one image, it's suboptimal to run it on an entire gallery on page load. | ||

@@ -164,2 +206,4 @@ ## Contributors | ||

| * [connect-thumbs](https://github.com/inadarei/connect-thumbs) Middleware for connect.js that supports smartcrop.js by [Irakli Nadareishvili](https://github.com/inadarei/connect-thumbs) | ||

| * [smartcrop-java](https://github.com/QuadFlask/smartcrop-java) by [QuadFlask](https://github.com/QuadFlask/) | ||

| * [smartcrop-android](https://github.com/QuadFlask/smartcrop-android) by [QuadFlask](https://github.com/QuadFlask/) | ||

| * [smartcrop.go](https://github.com/muesli/smartcrop) by [Christian Muehlhaeuser](https://github.com/muesli) | ||

@@ -172,13 +216,17 @@ * [smartcrop.py](https://github.com/hhatto/smartcrop.py) by [Hideo Hattori](http://www.hexacosa.net/about/) | ||

| ### 2.0.0 (beta) | ||

| In short: It's a lot faster when calculating bigger crops. | ||

| The quality of the crops should be comparable but the could be radically different, | ||

| so this will be a major release. | ||

| The quality of the crops should be comparable but the results | ||

| are going to be different so this will be a major release. | ||

| ### 1.1.1 | ||

| Removed useless files from npm package. | ||

| ### 1.1 | ||

| Creating github releases. Added options.input which is getting passed along to iop.open. | ||

| ### 1.0 | ||

| Refactoring/cleanup to make it easier to use with node.js (dropping the node-canvas dependency) and enable support for boosts which can be used to do face detection. | ||

@@ -189,2 +237,3 @@ This is a 1.0 in the semantic meaning (denoting backwards incompatible API changes). | ||

| ## License | ||

| Copyright (c) 2016 Jonas Wagner, licensed under the MIT License (enclosed) | ||

| Copyright (c) 2018 Jonas Wagner, licensed under the MIT License (enclosed) |

912

smartcrop.js

@@ -5,3 +5,3 @@ /** | ||

| * | ||

| * Copyright (C) 2016 Jonas Wagner | ||

| * Copyright (C) 2018 Jonas Wagner | ||

| * | ||

@@ -29,156 +29,166 @@ * Permission is hereby granted, free of charge, to any person obtaining | ||

| (function() { | ||

| 'use strict'; | ||

| 'use strict'; | ||

| var smartcrop = {}; | ||

| // Promise implementation to use | ||

| smartcrop.Promise = typeof Promise !== 'undefined' ? Promise : function() { | ||

| throw new Error('No native promises and smartcrop.Promise not set.'); | ||

| }; | ||

| var smartcrop = {}; | ||

| // Promise implementation to use | ||

| smartcrop.Promise = | ||

| typeof Promise !== 'undefined' | ||

| ? Promise | ||

| : function() { | ||

| throw new Error('No native promises and smartcrop.Promise not set.'); | ||

| }; | ||

| smartcrop.DEFAULTS = { | ||

| width: 0, | ||

| height: 0, | ||

| aspect: 0, | ||

| cropWidth: 0, | ||

| cropHeight: 0, | ||

| detailWeight: 0.2, | ||

| skinColor: [0.78, 0.57, 0.44], | ||

| skinBias: 0.01, | ||

| skinBrightnessMin: 0.2, | ||

| skinBrightnessMax: 1.0, | ||

| skinThreshold: 0.8, | ||

| skinWeight: 1.8, | ||

| saturationBrightnessMin: 0.05, | ||

| saturationBrightnessMax: 0.9, | ||

| saturationThreshold: 0.4, | ||

| saturationBias: 0.2, | ||

| saturationWeight: 0.3, | ||

| // Step * minscale rounded down to the next power of two should be good | ||

| scoreDownSample: 8, | ||

| step: 8, | ||

| scaleStep: 0.1, | ||

| minScale: 1.0, | ||

| maxScale: 1.0, | ||

| edgeRadius: 0.4, | ||

| edgeWeight: -20.0, | ||

| outsideImportance: -0.5, | ||

| boostWeight: 100.0, | ||

| ruleOfThirds: true, | ||

| prescale: true, | ||

| imageOperations: null, | ||

| canvasFactory: defaultCanvasFactory, | ||

| // Factory: defaultFactories, | ||

| debug: false, | ||

| }; | ||

| smartcrop.DEFAULTS = { | ||

| width: 0, | ||

| height: 0, | ||

| aspect: 0, | ||

| cropWidth: 0, | ||

| cropHeight: 0, | ||

| detailWeight: 0.2, | ||

| skinColor: [0.78, 0.57, 0.44], | ||

| skinBias: 0.01, | ||

| skinBrightnessMin: 0.2, | ||

| skinBrightnessMax: 1.0, | ||

| skinThreshold: 0.8, | ||

| skinWeight: 1.8, | ||

| saturationBrightnessMin: 0.05, | ||

| saturationBrightnessMax: 0.9, | ||

| saturationThreshold: 0.4, | ||

| saturationBias: 0.2, | ||

| saturationWeight: 0.1, | ||

| // Step * minscale rounded down to the next power of two should be good | ||

| scoreDownSample: 8, | ||

| step: 8, | ||

| scaleStep: 0.1, | ||

| minScale: 1.0, | ||

| maxScale: 1.0, | ||

| edgeRadius: 0.4, | ||

| edgeWeight: -20.0, | ||

| outsideImportance: -0.5, | ||

| boostWeight: 100.0, | ||

| ruleOfThirds: true, | ||

| prescale: true, | ||

| imageOperations: null, | ||

| canvasFactory: defaultCanvasFactory, | ||

| // Factory: defaultFactories, | ||

| debug: false | ||

| }; | ||

| smartcrop.crop = function(inputImage, options_, callback) { | ||

| var options = extend({}, smartcrop.DEFAULTS, options_); | ||

| if (options.aspect) { | ||

| options.width = options.aspect; | ||

| options.height = 1; | ||

| } | ||

| smartcrop.crop = function(inputImage, options_, callback) { | ||

| var options = extend({}, smartcrop.DEFAULTS, options_); | ||

| if (options.imageOperations === null) { | ||

| options.imageOperations = canvasImageOperations(options.canvasFactory); | ||

| } | ||

| if (options.aspect) { | ||

| options.width = options.aspect; | ||

| options.height = 1; | ||

| } | ||

| var iop = options.imageOperations; | ||

| if (options.imageOperations === null) { | ||

| options.imageOperations = canvasImageOperations(options.canvasFactory); | ||

| } | ||

| var scale = 1; | ||

| var prescale = 1; | ||

| var iop = options.imageOperations; | ||

| // open the image | ||

| return iop | ||

| .open(inputImage, options.input) | ||

| .then(function(image) { | ||

| // calculate desired crop dimensions based on the image size | ||

| if (options.width && options.height) { | ||

| scale = min( | ||

| image.width / options.width, | ||

| image.height / options.height | ||

| ); | ||

| options.cropWidth = ~~(options.width * scale); | ||

| options.cropHeight = ~~(options.height * scale); | ||

| // Img = 100x100, width = 95x95, scale = 100/95, 1/scale > min | ||

| // don't set minscale smaller than 1/scale | ||

| // -> don't pick crops that need upscaling | ||

| options.minScale = min( | ||

| options.maxScale, | ||

| max(1 / scale, options.minScale) | ||

| ); | ||

| var scale = 1; | ||

| var prescale = 1; | ||

| // open the image | ||

| return iop.open(inputImage, options.input).then(function(image) { | ||

| // calculate desired crop dimensions based on the image size | ||

| if (options.width && options.height) { | ||

| scale = min(image.width / options.width, image.height / options.height); | ||

| options.cropWidth = ~~(options.width * scale); | ||

| options.cropHeight = ~~(options.height * scale); | ||

| // Img = 100x100, width = 95x95, scale = 100/95, 1/scale > min | ||

| // don't set minscale smaller than 1/scale | ||

| // -> don't pick crops that need upscaling | ||

| options.minScale = min(options.maxScale, max(1 / scale, options.minScale)); | ||

| // prescale if possible | ||

| if (options.prescale !== false) { | ||

| prescale = min(max(256 / image.width, 256 / image.height), 1); | ||

| if (prescale < 1) { | ||

| image = iop.resample(image, image.width * prescale, image.height * prescale); | ||

| options.cropWidth = ~~(options.cropWidth * prescale); | ||

| options.cropHeight = ~~(options.cropHeight * prescale); | ||

| if (options.boost) { | ||

| options.boost = options.boost.map(function(boost) { | ||

| return { | ||

| x: ~~(boost.x * prescale), | ||

| y: ~~(boost.y * prescale), | ||

| width: ~~(boost.width * prescale), | ||

| height: ~~(boost.height * prescale), | ||

| weight: boost.weight | ||

| }; | ||

| }); | ||

| // prescale if possible | ||

| if (options.prescale !== false) { | ||

| prescale = min(max(256 / image.width, 256 / image.height), 1); | ||

| if (prescale < 1) { | ||

| image = iop.resample( | ||

| image, | ||

| image.width * prescale, | ||

| image.height * prescale | ||

| ); | ||

| options.cropWidth = ~~(options.cropWidth * prescale); | ||

| options.cropHeight = ~~(options.cropHeight * prescale); | ||

| if (options.boost) { | ||

| options.boost = options.boost.map(function(boost) { | ||

| return { | ||

| x: ~~(boost.x * prescale), | ||

| y: ~~(boost.y * prescale), | ||

| width: ~~(boost.width * prescale), | ||

| height: ~~(boost.height * prescale), | ||

| weight: boost.weight | ||

| }; | ||

| }); | ||

| } | ||

| } else { | ||

| prescale = 1; | ||

| } | ||

| } | ||

| } | ||

| else { | ||

| prescale = 1; | ||

| } | ||

| } | ||

| } | ||

| return image; | ||

| }) | ||

| .then(function(image) { | ||

| return iop.getData(image).then(function(data) { | ||

| var result = analyse(options, data); | ||

| return image; | ||

| }) | ||

| .then(function(image) { | ||

| return iop.getData(image).then(function(data) { | ||

| var result = analyse(options, data); | ||

| var crops = result.crops || [result.topCrop]; | ||

| for (var i = 0, iLen = crops.length; i < iLen; i++) { | ||

| var crop = crops[i]; | ||

| crop.x = ~~(crop.x / prescale); | ||

| crop.y = ~~(crop.y / prescale); | ||

| crop.width = ~~(crop.width / prescale); | ||

| crop.height = ~~(crop.height / prescale); | ||

| } | ||

| if (callback) callback(result); | ||

| return result; | ||

| }); | ||

| }); | ||

| }; | ||

| var crops = result.crops || [result.topCrop]; | ||

| for (var i = 0, iLen = crops.length; i < iLen; i++) { | ||

| var crop = crops[i]; | ||

| crop.x = ~~(crop.x / prescale); | ||

| crop.y = ~~(crop.y / prescale); | ||

| crop.width = ~~(crop.width / prescale); | ||

| crop.height = ~~(crop.height / prescale); | ||

| } | ||

| if (callback) callback(result); | ||

| return result; | ||

| }); | ||

| }); | ||

| }; | ||
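The size arithmetic in `smartcrop.crop` can be followed with concrete numbers. The sketch below copies only the `scale`, `prescale` and crop-size calculations from the function above into a standalone helper; `cropDimensions` and the 1024x512 example image are assumptions for illustration, and boosts, `minScale` and the image resampling are left out.

```javascript
var min = Math.min;
var max = Math.max;

// Mirrors the size arithmetic of smartcrop.crop for a given image size.
function cropDimensions(imageWidth, imageHeight, width, height) {
  var scale = min(imageWidth / width, imageHeight / height);
  var cropWidth = ~~(width * scale);
  var cropHeight = ~~(height * scale);
  // Shrink the image so its shorter side becomes 256px; never enlarge it.
  var prescale = min(max(256 / imageWidth, 256 / imageHeight), 1);
  return {
    scale: scale,
    prescale: prescale,
    cropWidth: ~~(cropWidth * prescale),
    cropHeight: ~~(cropHeight * prescale)
  };
}

var d = cropDimensions(1024, 512, 128, 128);
console.log(d); // scale 4, prescale 0.5, crop size 256x256 in the prescaled image

// A crop found at x = 100 in the prescaled image maps back to the original as:
var originalX = ~~(100 / d.prescale);
console.log(originalX); // 200
```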

| // Check if all the dependencies are there | ||

| // todo: | ||

| smartcrop.isAvailable = function(options) { | ||

| if (!smartcrop.Promise) return false; | ||

| // Check if all the dependencies are there | ||

| // todo: | ||

| smartcrop.isAvailable = function(options) { | ||

| if (!smartcrop.Promise) return false; | ||

| var canvasFactory = options ? options.canvasFactory : defaultCanvasFactory; | ||

| var canvasFactory = options ? options.canvasFactory : defaultCanvasFactory; | ||

| if (canvasFactory === defaultCanvasFactory) { | ||

| var c = document.createElement('canvas'); | ||

| if (!c.getContext('2d')) { | ||

| return false; | ||

| if (canvasFactory === defaultCanvasFactory) { | ||

| var c = document.createElement('canvas'); | ||

| if (!c.getContext('2d')) { | ||

| return false; | ||

| } | ||

| } | ||

| } | ||

| return true; | ||

| }; | ||

| return true; | ||

| }; | ||

| function edgeDetect(i, o) { | ||

| var id = i.data; | ||

| var od = o.data; | ||

| var w = i.width; | ||

| var h = i.height; | ||

| function edgeDetect(i, o) { | ||

| var id = i.data; | ||

| var od = o.data; | ||

| var w = i.width; | ||

| var h = i.height; | ||

| for (var y = 0; y < h; y++) { | ||

| for (var x = 0; x < w; x++) { | ||

| var p = (y * w + x) * 4; | ||

| var lightness; | ||

| for (var y = 0; y < h; y++) { | ||

| for (var x = 0; x < w; x++) { | ||

| var p = (y * w + x) * 4; | ||

| var lightness; | ||

| if (x === 0 || x >= w - 1 || y === 0 || y >= h - 1) { | ||

| lightness = sample(id, p); | ||

| } | ||

| else { | ||

| lightness = sample(id, p) * 4 - | ||

| if (x === 0 || x >= w - 1 || y === 0 || y >= h - 1) { | ||

| lightness = sample(id, p); | ||

| } else { | ||

| lightness = | ||

| sample(id, p) * 4 - | ||

| sample(id, p - w * 4) - | ||

@@ -188,369 +198,401 @@ sample(id, p - 4) - | ||

| sample(id, p + w * 4); | ||

| } | ||

| od[p + 1] = lightness; | ||

| } | ||

| od[p + 1] = lightness; | ||

| } | ||

| } | ||

| } | ||
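To see what the Laplace step produces, here is a simplified sketch on a single-channel image. It is not the library code: `edgeDetect` works on RGBA data through a `sample` helper that is not shown in this diff, while this version assumes a plain array of lightness values and the standard four-neighbour kernel. The name `laplace` and the 3x3 inputs are made up for the example.

```javascript
// Laplace filter on a single-channel image stored row by row.
// Border pixels keep their input value, as in edgeDetect above.
function laplace(gray, w, h) {
  var out = new Array(w * h);
  for (var y = 0; y < h; y++) {
    for (var x = 0; x < w; x++) {
      var p = y * w + x;
      if (x === 0 || x >= w - 1 || y === 0 || y >= h - 1) {
        out[p] = gray[p];
      } else {
        out[p] =
          gray[p] * 4 - gray[p - w] - gray[p - 1] - gray[p + 1] - gray[p + w];
      }
    }
  }
  return out;
}

var flat = [10, 10, 10, 10, 10, 10, 10, 10, 10];
var spot = [10, 10, 10, 10, 50, 10, 10, 10, 10];
console.log(laplace(flat, 3, 3)[4]); // 0: no edge in a uniform area
console.log(laplace(spot, 3, 3)[4]); // 160: strong response at the bright pixel
```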

| function skinDetect(options, i, o) { | ||

| var id = i.data; | ||

| var od = o.data; | ||

| var w = i.width; | ||

| var h = i.height; | ||

| function skinDetect(options, i, o) { | ||

| var id = i.data; | ||

| var od = o.data; | ||

| var w = i.width; | ||

| var h = i.height; | ||

| for (var y = 0; y < h; y++) { | ||

| for (var x = 0; x < w; x++) { | ||

| var p = (y * w + x) * 4; | ||

| var lightness = cie(id[p], id[p + 1], id[p + 2]) / 255; | ||

| var skin = skinColor(options, id[p], id[p + 1], id[p + 2]); | ||

| var isSkinColor = skin > options.skinThreshold; | ||

| var isSkinBrightness = lightness >= options.skinBrightnessMin && lightness <= options.skinBrightnessMax; | ||

| if (isSkinColor && isSkinBrightness) { | ||

| od[p] = (skin - options.skinThreshold) * (255 / (1 - options.skinThreshold)); | ||

| for (var y = 0; y < h; y++) { | ||

| for (var x = 0; x < w; x++) { | ||

| var p = (y * w + x) * 4; | ||

| var lightness = cie(id[p], id[p + 1], id[p + 2]) / 255; | ||

| var skin = skinColor(options, id[p], id[p + 1], id[p + 2]); | ||

| var isSkinColor = skin > options.skinThreshold; | ||

| var isSkinBrightness = | ||

| lightness >= options.skinBrightnessMin && | ||

| lightness <= options.skinBrightnessMax; | ||

| if (isSkinColor && isSkinBrightness) { | ||

| od[p] = | ||

| (skin - options.skinThreshold) * | ||

| (255 / (1 - options.skinThreshold)); | ||

| } else { | ||

| od[p] = 0; | ||

| } | ||

| } | ||

| else { | ||

| od[p] = 0; | ||

| } | ||

| } | ||

| } | ||

| } | ||

| function saturationDetect(options, i, o) { | ||

| var id = i.data; | ||

| var od = o.data; | ||

| var w = i.width; | ||

| var h = i.height; | ||

| for (var y = 0; y < h; y++) { | ||

| for (var x = 0; x < w; x++) { | ||

| var p = (y * w + x) * 4; | ||

| function saturationDetect(options, i, o) { | ||

| var id = i.data; | ||

| var od = o.data; | ||

| var w = i.width; | ||

| var h = i.height; | ||

| for (var y = 0; y < h; y++) { | ||

| for (var x = 0; x < w; x++) { | ||

| var p = (y * w + x) * 4; | ||

| var lightness = cie(id[p], id[p + 1], id[p + 2]) / 255; | ||

| var sat = saturation(id[p], id[p + 1], id[p + 2]); | ||

| var lightness = cie(id[p], id[p + 1], id[p + 2]) / 255; | ||

| var sat = saturation(id[p], id[p + 1], id[p + 2]); | ||

| var acceptableSaturation = sat > options.saturationThreshold; | ||

| var acceptableLightness = lightness >= options.saturationBrightnessMin && | ||

| var acceptableSaturation = sat > options.saturationThreshold; | ||

| var acceptableLightness = | ||

| lightness >= options.saturationBrightnessMin && | ||

| lightness <= options.saturationBrightnessMax; | ||

| if (acceptableLightness && acceptableLightness) { | ||

| od[p + 2] = (sat - options.saturationThreshold) * (255 / (1 - options.saturationThreshold)); | ||

| if (acceptableLightness && acceptableSaturation) { | ||

| od[p + 2] = | ||

| (sat - options.saturationThreshold) * | ||

| (255 / (1 - options.saturationThreshold)); | ||

| } else { | ||

| od[p + 2] = 0; | ||

| } | ||

| } | ||

| else { | ||

| od[p + 2] = 0; | ||

| } | ||

| } | ||

| } | ||

| } | ||
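The condition change in `saturationDetect` (from `acceptableLightness && acceptableLightness` to `acceptableLightness && acceptableSaturation`) matters because the value formula is only meaningful above the threshold. The sketch below isolates that formula; `saturationValue` is a hypothetical name, and the threshold is the `saturationThreshold` default shown earlier in the diff.

```javascript
// Maps a saturation in [threshold, 1] linearly onto [0, 255].
function saturationValue(sat, threshold) {
  return (sat - threshold) * (255 / (1 - threshold));
}

var threshold = 0.4; // saturationThreshold from smartcrop.DEFAULTS
console.log(saturationValue(1.0, threshold)); // about 255
console.log(saturationValue(0.4, threshold)); // 0
console.log(saturationValue(0.1, threshold)); // negative: below the threshold
```

Without the saturation check, pixels below the threshold would be passed through this formula as well instead of being set to 0 directly.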

| function applyBoosts(options, output) { | ||

| if (!options.boost) return; | ||

| var od = output.data; | ||

| for (var i = 0; i < output.width; i += 4) { | ||

| od[i + 3] = 0; | ||

| function applyBoosts(options, output) { | ||

| if (!options.boost) return; | ||

| var od = output.data; | ||

| for (var i = 0; i < output.width; i += 4) { | ||

| od[i + 3] = 0; | ||

| } | ||

| for (i = 0; i < options.boost.length; i++) { | ||

| applyBoost(options.boost[i], options, output); | ||

| } | ||

| } | ||

| for (i = 0; i < options.boost.length; i++) { | ||

| applyBoost(options.boost[i], options, output); | ||

| } | ||

| } | ||

| function applyBoost(boost, options, output) { | ||

| var od = output.data; | ||

| var w = output.width; | ||

| var x0 = ~~boost.x; | ||

| var x1 = ~~(boost.x + boost.width); | ||

| var y0 = ~~boost.y; | ||

| var y1 = ~~(boost.y + boost.height); | ||

| var weight = boost.weight * 255; | ||

| for (var y = y0; y < y1; y++) { | ||

| for (var x = x0; x < x1; x++) { | ||

| var i = (y * w + x) * 4; | ||

| od[i + 3] += weight; | ||

| function applyBoost(boost, options, output) { | ||

| var od = output.data; | ||

| var w = output.width; | ||

| var x0 = ~~boost.x; | ||

| var x1 = ~~(boost.x + boost.width); | ||

| var y0 = ~~boost.y; | ||

| var y1 = ~~(boost.y + boost.height); | ||

| var weight = boost.weight * 255; | ||

| for (var y = y0; y < y1; y++) { | ||

| for (var x = x0; x < x1; x++) { | ||

| var i = (y * w + x) * 4; | ||

| od[i + 3] += weight; | ||

| } | ||

| } | ||

| } | ||

| } | ||

| function generateCrops(options, width, height) { | ||

| var results = []; | ||

| var minDimension = min(width, height); | ||

| var cropWidth = options.cropWidth || minDimension; | ||

| var cropHeight = options.cropHeight || minDimension; | ||

| for (var scale = options.maxScale; scale >= options.minScale; scale -= options.scaleStep) { | ||

| for (var y = 0; y + cropHeight * scale <= height; y += options.step) { | ||

| for (var x = 0; x + cropWidth * scale <= width; x += options.step) { | ||

| results.push({ | ||

| x: x, | ||

| y: y, | ||

| width: cropWidth * scale, | ||

| height: cropHeight * scale, | ||

| }); | ||

| function generateCrops(options, width, height) { | ||

| var results = []; | ||

| var minDimension = min(width, height); | ||

| var cropWidth = options.cropWidth || minDimension; | ||

| var cropHeight = options.cropHeight || minDimension; | ||

| for ( | ||

| var scale = options.maxScale; | ||

| scale >= options.minScale; | ||

| scale -= options.scaleStep | ||

| ) { | ||

| for (var y = 0; y + cropHeight * scale <= height; y += options.step) { | ||

| for (var x = 0; x + cropWidth * scale <= width; x += options.step) { | ||

| results.push({ | ||

| x: x, | ||

| y: y, | ||

| width: cropWidth * scale, | ||

| height: cropHeight * scale | ||

| }); | ||

| } | ||

| } | ||

| } | ||

| return results; | ||

| } | ||

| return results; | ||

| } | ||

| function score(options, output, crop) { | ||

| var result = { | ||

| detail: 0, | ||

| saturation: 0, | ||

| skin: 0, | ||

| boost: 0, | ||

| total: 0, | ||

| }; | ||

| function score(options, output, crop) { | ||

| var result = { | ||

| detail: 0, | ||

| saturation: 0, | ||

| skin: 0, | ||

| boost: 0, | ||

| total: 0 | ||

| }; | ||

| var od = output.data; | ||

| var downSample = options.scoreDownSample; | ||

| var invDownSample = 1 / downSample; | ||

| var outputHeightDownSample = output.height * downSample; | ||

| var outputWidthDownSample = output.width * downSample; | ||

| var outputWidth = output.width; | ||

| var od = output.data; | ||

| var downSample = options.scoreDownSample; | ||

| var invDownSample = 1 / downSample; | ||

| var outputHeightDownSample = output.height * downSample; | ||

| var outputWidthDownSample = output.width * downSample; | ||

| var outputWidth = output.width; | ||

| for (var y = 0; y < outputHeightDownSample; y += downSample) { | ||

| for (var x = 0; x < outputWidthDownSample; x += downSample) { | ||

| var p = (~~(y * invDownSample) * outputWidth + ~~(x * invDownSample)) * 4; | ||

| var i = importance(options, crop, x, y); | ||

| var detail = od[p + 1] / 255; | ||

| for (var y = 0; y < outputHeightDownSample; y += downSample) { | ||

| for (var x = 0; x < outputWidthDownSample; x += downSample) { | ||

| var p = | ||

| (~~(y * invDownSample) * outputWidth + ~~(x * invDownSample)) * 4; | ||

| var i = importance(options, crop, x, y); | ||

| var detail = od[p + 1] / 255; | ||

| result.skin += od[p] / 255 * (detail + options.skinBias) * i; | ||

| result.detail += detail * i; | ||

| result.saturation += od[p + 2] / 255 * (detail + options.saturationBias) * i; | ||

| result.boost += od[p + 3] / 255 * i; | ||

| result.skin += od[p] / 255 * (detail + options.skinBias) * i; | ||

| result.detail += detail * i; | ||

| result.saturation += | ||

| od[p + 2] / 255 * (detail + options.saturationBias) * i; | ||

| result.boost += od[p + 3] / 255 * i; | ||

| } | ||

| } | ||

| result.total = | ||

| (result.detail * options.detailWeight + | ||

| result.skin * options.skinWeight + | ||

| result.saturation * options.saturationWeight + | ||

| result.boost * options.boostWeight) / | ||

| (crop.width * crop.height); | ||

| return result; | ||

| } | ||

| result.total = (result.detail * options.detailWeight + | ||

| result.skin * options.skinWeight + | ||

| result.saturation * options.saturationWeight + | ||

| result.boost * options.boostWeight) / (crop.width * crop.height); | ||

| return result; | ||

| } | ||
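The `result.total` expression combines the four accumulated sums with their weights and normalises by the crop area. As a worked example, the sketch below evaluates it once with the 2.0.1 default for `saturationWeight` (0.3) and once with the 2.0.2 default (0.1). The accumulated sums and the crop size are made-up values, and `totalScore` is a hypothetical standalone copy of the expression.

```javascript
// Standalone copy of the result.total expression from score().
function totalScore(parts, weights, crop) {
  return (
    (parts.detail * weights.detailWeight +
      parts.skin * weights.skinWeight +
      parts.saturation * weights.saturationWeight +
      parts.boost * weights.boostWeight) /
    (crop.width * crop.height)
  );
}

var parts = { detail: 10, skin: 5, saturation: 20, boost: 0 }; // made-up sums
var crop = { width: 10, height: 10 };
var v201 = { detailWeight: 0.2, skinWeight: 1.8, saturationWeight: 0.3, boostWeight: 100 };
var v202 = { detailWeight: 0.2, skinWeight: 1.8, saturationWeight: 0.1, boostWeight: 100 };

console.log(totalScore(parts, v201, crop)); // about 0.17
console.log(totalScore(parts, v202, crop)); // about 0.13
```

With the lower weight, saturated regions contribute less to the ranking relative to detail and skin tones.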

| function importance(options, crop, x, y) { | ||

| if ( | ||

| crop.x > x || | ||

| x >= crop.x + crop.width || | ||

| crop.y > y || | ||

| y >= crop.y + crop.height | ||

| ) { | ||

| return options.outsideImportance; | ||

| } | ||

| x = (x - crop.x) / crop.width; | ||

| y = (y - crop.y) / crop.height; | ||

| var px = abs(0.5 - x) * 2; | ||

| var py = abs(0.5 - y) * 2; | ||

| // Distance from edge | ||

| var dx = Math.max(px - 1.0 + options.edgeRadius, 0); | ||

| var dy = Math.max(py - 1.0 + options.edgeRadius, 0); | ||

| var d = (dx * dx + dy * dy) * options.edgeWeight; | ||

| var s = 1.41 - sqrt(px * px + py * py); | ||

| if (options.ruleOfThirds) { | ||

| s += Math.max(0, s + d + 0.5) * 1.2 * (thirds(px) + thirds(py)); | ||

| } | ||

| return s + d; | ||

| } | ||

| smartcrop.importance = importance; | ||

| function importance(options, crop, x, y) { | ||

| if (crop.x > x || x >= crop.x + crop.width || crop.y > y || y >= crop.y + crop.height) { | ||

| return options.outsideImportance; | ||

| function skinColor(options, r, g, b) { | ||

| var mag = sqrt(r * r + g * g + b * b); | ||

| var rd = r / mag - options.skinColor[0]; | ||

| var gd = g / mag - options.skinColor[1]; | ||

| var bd = b / mag - options.skinColor[2]; | ||

| var d = sqrt(rd * rd + gd * gd + bd * bd); | ||

| return 1 - d; | ||

| } | ||

| x = (x - crop.x) / crop.width; | ||

| y = (y - crop.y) / crop.height; | ||

| var px = abs(0.5 - x) * 2; | ||

| var py = abs(0.5 - y) * 2; | ||

| // Distance from edge | ||

| var dx = Math.max(px - 1.0 + options.edgeRadius, 0); | ||

| var dy = Math.max(py - 1.0 + options.edgeRadius, 0); | ||

| var d = (dx * dx + dy * dy) * options.edgeWeight; | ||

| var s = 1.41 - sqrt(px * px + py * py); | ||

| if (options.ruleOfThirds) { | ||

| s += (Math.max(0, s + d + 0.5) * 1.2) * (thirds(px) + thirds(py)); | ||

| } | ||

| return s + d; | ||

| } | ||

| smartcrop.importance = importance; | ||

| function skinColor(options, r, g, b) { | ||

| var mag = sqrt(r * r + g * g + b * b); | ||

| var rd = (r / mag - options.skinColor[0]); | ||

| var gd = (g / mag - options.skinColor[1]); | ||

| var bd = (b / mag - options.skinColor[2]); | ||

| var d = sqrt(rd * rd + gd * gd + bd * bd); | ||

| return 1 - d; | ||

| } | ||

| function analyse(options, input) { | ||

| var result = {}; | ||

| var output = new ImgData(input.width, input.height); | ||

| function analyse(options, input) { | ||

| var result = {}; | ||

| var output = new ImgData(input.width, input.height); | ||

| edgeDetect(input, output); | ||

| skinDetect(options, input, output); | ||

| saturationDetect(options, input, output); | ||

| applyBoosts(options, output); | ||

| edgeDetect(input, output); | ||

| skinDetect(options, input, output); | ||

| saturationDetect(options, input, output); | ||

| applyBoosts(options, output); | ||

| var scoreOutput = downSample(output, options.scoreDownSample); | ||

| var scoreOutput = downSample(output, options.scoreDownSample); | ||

| var topScore = -Infinity; | ||

| var topCrop = null; | ||

| var crops = generateCrops(options, input.width, input.height); | ||

| var topScore = -Infinity; | ||

| var topCrop = null; | ||

| var crops = generateCrops(options, input.width, input.height); | ||

| for (var i = 0, iLen = crops.length; i < iLen; i++) { | ||

| var crop = crops[i]; | ||

| crop.score = score(options, scoreOutput, crop); | ||

| if (crop.score.total > topScore) { | ||

| topCrop = crop; | ||

| topScore = crop.score.total; | ||

| for (var i = 0, iLen = crops.length; i < iLen; i++) { | ||

| var crop = crops[i]; | ||

| crop.score = score(options, scoreOutput, crop); | ||

| if (crop.score.total > topScore) { | ||

| topCrop = crop; | ||

| topScore = crop.score.total; | ||

| } | ||

| } | ||

| } | ||

| result.topCrop = topCrop; | ||

| result.topCrop = topCrop; | ||

| if (options.debug && topCrop) { | ||

| result.crops = crops; | ||

| result.debugOutput = output; | ||

| result.debugOptions = options; | ||

| // Create a copy which will not be adjusted by the post scaling of smartcrop.crop | ||

| result.debugTopCrop = extend({}, result.topCrop); | ||

| if (options.debug && topCrop) { | ||

| result.crops = crops; | ||

| result.debugOutput = output; | ||

| result.debugOptions = options; | ||

| // Create a copy which will not be adjusted by the post scaling of smartcrop.crop | ||

| result.debugTopCrop = extend({}, result.topCrop); | ||

| } | ||

| return result; | ||

| } | ||

| return result; | ||

| } | ||

| function ImgData(width, height, data) { | ||

| this.width = width; | ||

| this.height = height; | ||

| if (data) { | ||

| this.data = new Uint8ClampedArray(data); | ||

| function ImgData(width, height, data) { | ||

| this.width = width; | ||

| this.height = height; | ||

| if (data) { | ||

| this.data = new Uint8ClampedArray(data); | ||

| } else { | ||

| this.data = new Uint8ClampedArray(width * height * 4); | ||

| } | ||

| } | ||

| else { | ||

| this.data = new Uint8ClampedArray(width * height * 4); | ||

| } | ||

| } | ||

| smartcrop.ImgData = ImgData; | ||

| smartcrop.ImgData = ImgData; | ||

| function downSample(input, factor) { | ||

| var idata = input.data; | ||

| var iwidth = input.width; | ||

| var width = Math.floor(input.width / factor); | ||

| var height = Math.floor(input.height / factor); | ||

| var output = new ImgData(width, height); | ||

| var data = output.data; | ||

| var ifactor2 = 1 / (factor * factor); | ||

| for (var y = 0; y < height; y++) { | ||

| for (var x = 0; x < width; x++) { | ||

| var i = (y * width + x) * 4; | ||

| function downSample(input, factor) { | ||

| var idata = input.data; | ||

| var iwidth = input.width; | ||

| var width = Math.floor(input.width / factor); | ||

| var height = Math.floor(input.height / factor); | ||

| var output = new ImgData(width, height); | ||

| var data = output.data; | ||

| var ifactor2 = 1 / (factor * factor); | ||

| for (var y = 0; y < height; y++) { | ||

| for (var x = 0; x < width; x++) { | ||

| var i = (y * width + x) * 4; | ||

| var r = 0; | ||

| var g = 0; | ||

| var b = 0; | ||

| var a = 0; | ||

| var r = 0; | ||

| var g = 0; | ||

| var b = 0; | ||

| var a = 0; | ||

| var mr = 0; | ||

| var mg = 0; | ||

| var mb = 0; | ||

| var mr = 0; | ||

| var mg = 0; | ||

| for (var v = 0; v < factor; v++) { | ||

| for (var u = 0; u < factor; u++) { | ||

| var j = ((y * factor + v) * iwidth + (x * factor + u)) * 4; | ||

| r += idata[j]; | ||

| g += idata[j + 1]; | ||

| b += idata[j + 2]; | ||

| a += idata[j + 3]; | ||

| mr = Math.max(mr, idata[j]); | ||

| mg = Math.max(mg, idata[j + 1]); | ||

| mb = Math.max(mb, idata[j + 2]); | ||

| for (var v = 0; v < factor; v++) { | ||

| for (var u = 0; u < factor; u++) { | ||

| var j = ((y * factor + v) * iwidth + (x * factor + u)) * 4; | ||

| r += idata[j]; | ||

| g += idata[j + 1]; | ||

| b += idata[j + 2]; | ||

| a += idata[j + 3]; | ||

| mr = Math.max(mr, idata[j]); | ||

| mg = Math.max(mg, idata[j + 1]); | ||

| // unused | ||

| // mb = Math.max(mb, idata[j + 2]); | ||

| } | ||

| } | ||

| // this is some funky magic to preserve detail a bit more for | ||

| // skin (r) and detail (g). Saturation (b) does not get this boost. | ||

| data[i] = r * ifactor2 * 0.5 + mr * 0.5; | ||

| data[i + 1] = g * ifactor2 * 0.7 + mg * 0.3; | ||

| data[i + 2] = b * ifactor2; | ||

| data[i + 3] = a * ifactor2; | ||

| } | ||

| // this is some funky magic to preserve detail a bit more for | ||

| // skin (r) and detail (g). Saturation (b) does not get this boost. | ||

| data[i] = r * ifactor2 * 0.5 + mr * 0.5; | ||

| data[i + 1] = g * ifactor2 * 0.7 + mg * 0.3; | ||

| data[i + 2] = b * ifactor2; | ||

| data[i + 3] = a * ifactor2; | ||

| } | ||

| return output; | ||

| } | ||

| return output; | ||

| } | ||

| smartcrop._downSample = downSample; | ||

| smartcrop._downSample = downSample; | ||

| function defaultCanvasFactory(w, h) { | ||

| var c = document.createElement('canvas'); | ||

| c.width = w; | ||

| c.height = h; | ||

| return c; | ||

| } | ||

| function defaultCanvasFactory(w, h) { | ||

| var c = document.createElement('canvas'); | ||

| c.width = w; | ||

| c.height = h; | ||

| return c; | ||

| } | ||

| function canvasImageOperations(canvasFactory) { | ||

| return { | ||

| // Takes imageInput as argument | ||

| // returns an object which has at least | ||

| // {width: n, height: n} | ||

| open: function(image) { | ||

| // Work around images scaled in css by drawing them onto a canvas | ||

| var w = image.naturalWidth || image.width; | ||

| var h = image.naturalHeight || image.height; | ||

| var c = canvasFactory(w, h); | ||

| var ctx = c.getContext('2d'); | ||

| if (image.naturalWidth && (image.naturalWidth != image.width || image.naturalHeight != image.height)) { | ||

| c.width = image.naturalWidth; | ||

| c.height = image.naturalHeight; | ||

| } | ||

| else { | ||

| c.width = image.width; | ||

| c.height = image.height; | ||

| } | ||

| ctx.drawImage(image, 0, 0); | ||

| return smartcrop.Promise.resolve(c); | ||

| }, | ||

| // Takes an image (as returned by open), and changes it's size by resampling | ||

| resample: function(image, width, height) { | ||

| return Promise.resolve(image).then(function(image) { | ||

| var c = canvasFactory(~~width, ~~height); | ||

| function canvasImageOperations(canvasFactory) { | ||

| return { | ||

| // Takes imageInput as argument | ||

| // returns an object which has at least | ||

| // {width: n, height: n} | ||

| open: function(image) { | ||

| // Work around images scaled in css by drawing them onto a canvas | ||

| var w = image.naturalWidth || image.width; | ||

| var h = image.naturalHeight || image.height; | ||

| var c = canvasFactory(w, h); | ||

| var ctx = c.getContext('2d'); | ||

| ctx.drawImage(image, 0, 0, image.width, image.height, 0, 0, c.width, c.height); | ||

| if ( | ||

| image.naturalWidth && | ||

| (image.naturalWidth != image.width || | ||

| image.naturalHeight != image.height) | ||

| ) { | ||

| c.width = image.naturalWidth; | ||

| c.height = image.naturalHeight; | ||

| } else { | ||

| c.width = image.width; | ||

| c.height = image.height; | ||

| } | ||

| ctx.drawImage(image, 0, 0); | ||

| return smartcrop.Promise.resolve(c); | ||

| }); | ||

| }, | ||

| getData: function(image) { | ||

| return Promise.resolve(image).then(function(c) { | ||

| var ctx = c.getContext('2d'); | ||

| var id = ctx.getImageData(0, 0, c.width, c.height); | ||

| return new ImgData(c.width, c.height, id.data); | ||

| }); | ||

| }, | ||

| }; | ||

| } | ||

| smartcrop._canvasImageOperations = canvasImageOperations; | ||

| }, | ||

| // Takes an image (as returned by open), and changes it's size by resampling | ||

| resample: function(image, width, height) { | ||

| return Promise.resolve(image).then(function(image) { | ||

| var c = canvasFactory(~~width, ~~height); | ||

| var ctx = c.getContext('2d'); | ||

| // Aliases and helpers | ||

| var min = Math.min; | ||

| var max = Math.max; | ||

| var abs = Math.abs; | ||

| var ceil = Math.ceil; | ||

| var sqrt = Math.sqrt; | ||

| ctx.drawImage( | ||

| image, | ||

| 0, | ||

| 0, | ||

| image.width, | ||

| image.height, | ||

| 0, | ||

| 0, | ||

| c.width, | ||

| c.height | ||

| ); | ||

| return smartcrop.Promise.resolve(c); | ||

| }); | ||

| }, | ||

| getData: function(image) { | ||

| return Promise.resolve(image).then(function(c) { | ||

| var ctx = c.getContext('2d'); | ||

| var id = ctx.getImageData(0, 0, c.width, c.height); | ||

| return new ImgData(c.width, c.height, id.data); | ||

| }); | ||

| } | ||

| }; | ||

| } | ||

| smartcrop._canvasImageOperations = canvasImageOperations; | ||

| function extend(o) { | ||

| for (var i = 1, iLen = arguments.length; i < iLen; i++) { | ||

| var arg = arguments[i]; | ||

| if (arg) { | ||

| for (var name in arg) { | ||

| o[name] = arg[name]; | ||

| // Aliases and helpers | ||

| var min = Math.min; | ||

| var max = Math.max; | ||

| var abs = Math.abs; | ||

| var sqrt = Math.sqrt; | ||

| function extend(o) { | ||

| for (var i = 1, iLen = arguments.length; i < iLen; i++) { | ||

| var arg = arguments[i]; | ||

| if (arg) { | ||

| for (var name in arg) { | ||

| o[name] = arg[name]; | ||

| } | ||

| } | ||

| } | ||

| return o; | ||

| } | ||

| return o; | ||

| } | ||

| // Gets value in the range of [0, 1] where 0 is the center of the pictures | ||

| // returns weight of rule of thirds [0, 1] | ||

| function thirds(x) { | ||

| x = ((x - (1 / 3) + 1.0) % 2.0 * 0.5 - 0.5) * 16; | ||

| return Math.max(1.0 - x * x, 0.0); | ||

| } | ||

| // Gets value in the range of [0, 1] where 0 is the center of the pictures | ||

| // returns weight of rule of thirds [0, 1] | ||

| function thirds(x) { | ||

| x = (((x - 1 / 3 + 1.0) % 2.0) * 0.5 - 0.5) * 16; | ||

| return Math.max(1.0 - x * x, 0.0); | ||

| } | ||

| function cie(r, g, b) { | ||

| return 0.5126 * b + 0.7152 * g + 0.0722 * r; | ||

| } | ||

| function sample(id, p) { | ||

| return cie(id[p], id[p + 1], id[p + 2]); | ||

| } | ||

| function saturation(r, g, b) { | ||

| var maximum = max(r / 255, g / 255, b / 255); | ||

| var minumum = min(r / 255, g / 255, b / 255); | ||

| if (maximum === minumum) { | ||

| return 0; | ||

| function cie(r, g, b) { | ||

| return 0.5126 * b + 0.7152 * g + 0.0722 * r; | ||

| } | ||

| function sample(id, p) { | ||

| return cie(id[p], id[p + 1], id[p + 2]); | ||

| } | ||

| function saturation(r, g, b) { | ||

| var maximum = max(r / 255, g / 255, b / 255); | ||

| var minumum = min(r / 255, g / 255, b / 255); | ||

| var l = (maximum + minumum) / 2; | ||

| var d = maximum - minumum; | ||

| if (maximum === minumum) { | ||

| return 0; | ||

| } | ||

| return l > 0.5 ? d / (2 - maximum - minumum) : d / (maximum + minumum); | ||

| } | ||

| var l = (maximum + minumum) / 2; | ||

| var d = maximum - minumum; | ||

| // Amd | ||

| if (typeof define !== 'undefined' && define.amd) define(function() {return smartcrop;}); | ||

| // Common js | ||

| if (typeof exports !== 'undefined') exports.smartcrop = smartcrop; | ||

| // Browser | ||

| else if (typeof navigator !== 'undefined') window.SmartCrop = window.smartcrop = smartcrop; | ||

| // Nodejs | ||

| if (typeof module !== 'undefined') { | ||

| module.exports = smartcrop; | ||

| } | ||

| return l > 0.5 ? d / (2 - maximum - minumum) : d / (maximum + minumum); | ||

| } | ||

| // Amd | ||

| if (typeof define !== 'undefined' && define.amd) | ||

| define(function() { | ||

| return smartcrop; | ||

| }); | ||

| // Common js | ||

| if (typeof exports !== 'undefined') exports.smartcrop = smartcrop; | ||

| else if (typeof navigator !== 'undefined') | ||

| // Browser | ||

| window.SmartCrop = window.smartcrop = smartcrop; | ||

| // Nodejs | ||

| if (typeof module !== 'undefined') { | ||

| module.exports = smartcrop; | ||

| } | ||

| })(); |
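The `thirds` helper in the diff above is easier to follow with a few sample values. The sketch below copies the function body as shown in the diff and evaluates it at a handful of normalized distances from the crop centre. The sample points and variable names are chosen here for illustration and are not part of the package.

```javascript
// Standalone copy of the `thirds` helper as it appears in the diff above.
// Input: distance from the crop centre, normalized to [0, 1].
// Output: rule-of-thirds weight in [0, 1].
function thirds(x) {
  x = (((x - 1 / 3 + 1.0) % 2.0) * 0.5 - 0.5) * 16;
  return Math.max(1.0 - x * x, 0.0);
}

// Illustrative sample points (an assumption, not taken from the package).
var samples = [0, 0.25, 1 / 3, 0.4, 1];

samples.forEach(function (d) {
  console.log(d.toFixed(3) + ' -> ' + thirds(d).toFixed(3));
});
```

For inputs in [0, 1] the weight is an inverted parabola that peaks at 1 when the input equals 1/3 and drops to 0 once the input is more than 0.125 away from 1/3, so both the centre (0) and the edge (1) receive no rule-of-thirds bonus.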

New alerts

License Policy Violation

License: This package is not allowed per your license policy. Review the package's license to ensure compliance.

Found 1 instance in 1 package

Fixed alerts

License Policy Violation

License: This package is not allowed per your license policy. Review the package's license to ensure compliance.

Found 1 instance in 1 package

Improved metrics

- Total package byte size: increased by 15.46%, to 29108

- Lines of code: increased by 9.57%, to 538

- Number of lines in readme file: increased by 27.37%, to 228

Worsened metrics

- Dependency count: increased by Infinity%, to 1

- Dev dependency count: increased by 6.67%, to 16
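The `Infinity%` figure is most likely what a plain relative-change formula produces when the previous count is zero, which fits a package that gained its first runtime dependency in this release. The sketch below assumes that formula. `percentIncrease` is a hypothetical helper written for illustration, not the comparison tool's actual code, and the previous dev dependency count of 15 is inferred from the reported 6.67%.

```javascript
// Hypothetical helper: relative change in percent.
// This is an assumption about how the figures are derived.
function percentIncrease(previous, current) {
  return ((current - previous) / previous) * 100;
}

// Dependency count: 0 -> 1. Dividing by a previous value of zero yields Infinity.
console.log(percentIncrease(0, 1)); // Infinity

// Dev dependency count: 15 -> 16 (15 is inferred from the reported percentage).
console.log(percentIncrease(15, 16).toFixed(2)); // "6.67"
```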

Dependency changes

+ Added prettier-eslint@^8.8.1
+ Added acorn@3.3.0, 5.7.4 (transitive)
+ Added acorn-jsx@3.0.1 (transitive)
+ Added ajv@5.5.2 (transitive)
+ Added ajv-keywords@2.1.1 (transitive)
+ Added ansi-escapes@3.2.0 (transitive)
+ Added ansi-regex@2.1.1, 3.0.1 (transitive)
+ Added ansi-styles@2.2.1, 3.2.1 (transitive)
+ Added argparse@1.0.10 (transitive)
+ Added babel-code-frame@6.26.0 (transitive)
+ Added babel-runtime@6.26.0 (transitive)
+ Added balanced-match@1.0.2 (transitive)
+ Added brace-expansion@1.1.11 (transitive)
+ Added buffer-from@1.1.2 (transitive)
+ Added caller-path@0.1.0 (transitive)
+ Added callsites@0.2.0 (transitive)
+ Added chalk@1.1.3, 2.4.2 (transitive)
+ Added chardet@0.4.2 (transitive)
+ Added circular-json@0.3.3 (transitive)
+ Added cli-cursor@2.1.0 (transitive)
+ Added cli-width@2.2.1 (transitive)
+ Added co@4.6.0 (transitive)
+ Added color-convert@1.9.3 (transitive)
+ Added color-name@1.1.3 (transitive)
+ Added common-tags@1.8.2 (transitive)
+ Added concat-map@0.0.1 (transitive)
+ Added concat-stream@1.6.2 (transitive)
+ Added core-js@2.6.12 (transitive)
+ Added core-util-is@1.0.3 (transitive)
+ Added cross-spawn@5.1.0 (transitive)
+ Added debug@3.2.7 (transitive)
+ Added deep-is@0.1.4 (transitive)
+ Added dlv@1.1.3 (transitive)
+ Added doctrine@2.1.0 (transitive)
+ Added escape-string-regexp@1.0.5 (transitive)
+ Added eslint@4.19.1 (transitive)
+ Added eslint-scope@3.7.3 (transitive)
+ Added eslint-visitor-keys@1.3.0 (transitive)
+ Added espree@3.5.4 (transitive)
+ Added esprima@4.0.1 (transitive)
+ Added esquery@1.6.0 (transitive)
+ Added esrecurse@4.3.0 (transitive)
+ Added estraverse@4.3.0, 5.3.0 (transitive)
+ Added esutils@2.0.3 (transitive)
+ Added external-editor@2.2.0 (transitive)
+ Added fast-deep-equal@1.1.0 (transitive)
+ Added fast-json-stable-stringify@2.1.0 (transitive)
+ Added fast-levenshtein@2.0.6 (transitive)
+ Added figures@2.0.0 (transitive)
+ Added file-entry-cache@2.0.0 (transitive)
+ Added flat-cache@1.3.4 (transitive)
+ Added fs.realpath@1.0.0 (transitive)
+ Added functional-red-black-tree@1.0.1 (transitive)
+ Added glob@7.2.3 (transitive)
+ Added globals@11.12.0 (transitive)
+ Added graceful-fs@4.2.11 (transitive)
+ Added has-ansi@2.0.0 (transitive)
+ Added has-flag@3.0.0 (transitive)
+ Added iconv-lite@0.4.24 (transitive)
+ Added ignore@3.3.10 (transitive)
+ Added imurmurhash@0.1.4 (transitive)
+ Added indent-string@3.2.0 (transitive)
+ Added inflight@1.0.6 (transitive)
+ Added inherits@2.0.4 (transitive)
+ Added inquirer@3.3.0 (transitive)
+ Added is-fullwidth-code-point@2.0.0 (transitive)
+ Added is-resolvable@1.1.0 (transitive)
+ Added isarray@1.0.0 (transitive)
+ Added isexe@2.0.0 (transitive)
+ Added js-tokens@3.0.2 (transitive)
+ Added js-yaml@3.14.1 (transitive)
+ Added json-schema-traverse@0.3.1 (transitive)
+ Added json-stable-stringify-without-jsonify@1.0.1 (transitive)
+ Added levn@0.3.0 (transitive)
+ Added lodash@4.17.21 (transitive)
+ Added lodash.merge@4.6.2 (transitive)
+ Added lodash.unescape@4.0.1 (transitive)
+ Added loglevel@1.9.2 (transitive)
+ Added loglevel-colored-level-prefix@1.0.0 (transitive)
+ Added lru-cache@4.1.5 (transitive)
+ Added mimic-fn@1.2.0 (transitive)
+ Added minimatch@3.1.2 (transitive)
+ Added minimist@1.2.8 (transitive)
+ Added mkdirp@0.5.6 (transitive)
+ Added ms@2.1.3 (transitive)
+ Added mute-stream@0.0.7 (transitive)
+ Added natural-compare@1.4.0 (transitive)
+ Added object-assign@4.1.1 (transitive)
+ Added once@1.4.0 (transitive)
+ Added onetime@2.0.1 (transitive)
+ Added optionator@0.8.3 (transitive)
+ Added os-tmpdir@1.0.2 (transitive)
+ Added path-is-absolute@1.0.1 (transitive)
+ Added path-is-inside@1.0.2 (transitive)
+ Added pluralize@7.0.0 (transitive)
+ Added prelude-ls@1.1.2 (transitive)
+ Added prettier@1.19.1 (transitive)
+ Added prettier-eslint@8.8.2 (transitive)
+ Added pretty-format@23.6.0 (transitive)
+ Added process-nextick-args@2.0.1 (transitive)
+ Added progress@2.0.3 (transitive)
+ Added pseudomap@1.0.2 (transitive)
+ Added readable-stream@2.3.8 (transitive)
+ Added regenerator-runtime@0.11.1 (transitive)
+ Added regexpp@1.1.0 (transitive)
+ Added require-relative@0.8.7 (transitive)
+ Added require-uncached@1.0.3 (transitive)
+ Added resolve-from@1.0.1 (transitive)
+ Added restore-cursor@2.0.0 (transitive)
+ Added rimraf@2.6.3 (transitive)
+ Added run-async@2.4.1 (transitive)
+ Added rx-lite@4.0.8 (transitive)
+ Added rx-lite-aggregates@4.0.8 (transitive)
+ Added safe-buffer@5.1.2 (transitive)
+ Added safer-buffer@2.1.2 (transitive)
+ Added semver@5.5.0, 5.7.2 (transitive)
+ Added shebang-command@1.2.0 (transitive)
+ Added shebang-regex@1.0.0 (transitive)
+ Added signal-exit@3.0.7 (transitive)
+ Added slice-ansi@1.0.0 (transitive)
+ Added sprintf-js@1.0.3 (transitive)
+ Added string-width@2.1.1 (transitive)
+ Added string_decoder@1.1.1 (transitive)
+ Added strip-ansi@3.0.1, 4.0.0 (transitive)
+ Added strip-json-comments@2.0.1 (transitive)
+ Added supports-color@2.0.0, 5.5.0 (transitive)
+ Added table@4.0.2 (transitive)
+ Added text-table@0.2.0 (transitive)
+ Added through@2.3.8 (transitive)
+ Added tmp@0.0.33 (transitive)
+ Added type-check@0.3.2 (transitive)
+ Added typedarray@0.0.6 (transitive)
+ Added typescript@2.9.2 (transitive)
+ Added typescript-eslint-parser@16.0.1 (transitive)
+ Added util-deprecate@1.0.2 (transitive)
+ Added vue-eslint-parser@2.0.3 (transitive)
+ Added which@1.3.1 (transitive)
+ Added word-wrap@1.2.5 (transitive)
+ Added wrappy@1.0.2 (transitive)
+ Added write@0.2.1 (transitive)
+ Added yallist@2.1.2 (transitive)