Product

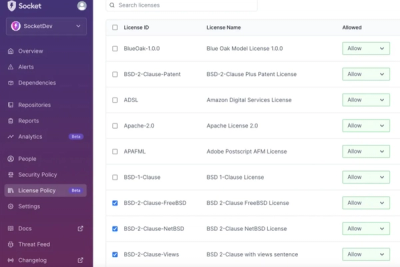

Introducing License Enforcement in Socket

Ensure open-source compliance with Socket’s License Enforcement Beta. Set up your License Policy and secure your software!

This repository contains a list of HTTP user-agents used by robots, crawlers, and spiders, in a single JSON file.

Each pattern is a regular expression. It should work out-of-the-box with your favorite regex library.
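Because every entry is a plain regular expression, a few lines of standard-library Python are enough to use the JSON file directly. The sketch below assumes a hypothetical three-entry excerpt containing only the "pattern" key (the real file has many more entries and fields), and the helper name looks_like_crawler is made up for illustration; it is not part of any published package. It uses re.search rather than re.match because a pattern may occur anywhere in the User-Agent string.

```python
import json
import re

# Hypothetical excerpt shaped like crawler-user-agents.json (a JSON array of
# objects with a "pattern" key). In practice, load the downloaded file:
#   with open("crawler-user-agents.json", encoding="utf-8") as f:
#       entries = json.load(f)
SAMPLE_JSON = r"""
[
  {"pattern": "Googlebot\\/"},
  {"pattern": "bingbot"},
  {"pattern": "rogerbot"}
]
"""

entries = json.loads(SAMPLE_JSON)

# Compile each pattern once; they are ordinary regular expressions.
compiled = [re.compile(entry["pattern"]) for entry in entries]


def looks_like_crawler(user_agent: str) -> bool:
    """Hypothetical helper: True if any pattern matches somewhere in the UA."""
    return any(p.search(user_agent) for p in compiled)


print(looks_like_crawler("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))  # → True
print(looks_like_crawler("Mozilla/5.0 (X11; Linux x86_64; rv:120.0) Gecko/20100101 Firefox/120.0"))  # → False
```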

If you use this project in a commercial product, please sponsor it.

Download the crawler-user-agents.json file from this repository directly.

crawler-user-agents is deployed on npmjs.com: https://www.npmjs.com/package/crawler-user-agents

To install it with npm or yarn:

```shell
npm install --save crawler-user-agents
# OR
yarn add crawler-user-agents
```

In Node.js, you can require the package to get an array of crawler user agents.

```javascript
const crawlers = require('crawler-user-agents');
console.log(crawlers);
```

Install with `pip install crawler-user-agents`.

Then:

```python
import crawleruseragents

if crawleruseragents.is_crawler("Googlebot/"):
    # do something
    pass
```

or:

```python
import crawleruseragents

indices = crawleruseragents.matching_crawlers("bingbot/2.0")
print("crawlers' indices:", indices)
print(
    "crawler's URL:",
    crawleruseragents.CRAWLER_USER_AGENTS_DATA[indices[0]]["url"]
)
```

Note that matching_crawlers is much slower than is_crawler when the given User-Agent does match a crawler.
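One plausible reason for the difference (an assumption, not taken from the package's source) is that a yes/no check can run a single combined regular expression that stops at the first match, while listing the matching entries requires trying every pattern individually. A non-matching User-Agent can be rejected by the combined check before the per-pattern loop runs, which would explain why the gap only appears when there is a match. The function names and the four sample patterns below are hypothetical.

```python
import re

# Hypothetical sample patterns, not the real list.
PATTERNS = ["Googlebot\\/", "bingbot", "rogerbot", "Discordbot"]

# One alternation regex: a single search answers "is this any crawler?"
COMBINED = re.compile("|".join(f"(?:{p})" for p in PATTERNS))

# Individual regexes are needed to report *which* entries matched.
INDIVIDUAL = [re.compile(p) for p in PATTERNS]


def is_crawler_sketch(user_agent: str) -> bool:
    return COMBINED.search(user_agent) is not None


def matching_crawlers_sketch(user_agent: str) -> list[int]:
    # Fast path: if the combined regex finds nothing, no single pattern matches.
    if not is_crawler_sketch(user_agent):
        return []
    # Slow path: one search per entry, even after a match has been found.
    return [i for i, p in enumerate(INDIVIDUAL) if p.search(user_agent)]


ua = "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"
print(is_crawler_sketch(ua))         # → True
print(matching_crawlers_sketch(ua))  # → [1]
```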

Go: use this package. It provides the global variable Crawlers (kept in sync with crawler-user-agents.json) and the functions IsCrawler and MatchingCrawlers.

Example of a Go program:

```go
package main

import (
	"fmt"

	// Referred to as agents below; the alias makes that name explicit.
	agents "github.com/monperrus/crawler-user-agents"
)

func main() {
	userAgent := "Mozilla/5.0 (compatible; Discordbot/2.0; +https://discordapp.com)"

	isCrawler := agents.IsCrawler(userAgent)
	fmt.Println("isCrawler:", isCrawler)

	indices := agents.MatchingCrawlers(userAgent)
	fmt.Println("crawlers' indices:", indices)
	fmt.Println("crawler's URL:", agents.Crawlers[indices[0]].URL)
}
```

Output:

```
isCrawler: true
crawlers' indices: [237]
crawler's URL: https://discordapp.com
```

I do welcome additions contributed as pull requests.

The pull requests should:

Example:

```json
{
  "pattern": "rogerbot",
  "addition_date": "2014/02/28",
  "url": "http://moz.com/help/pro/what-is-rogerbot-",
  "instances" : ["rogerbot/2.3 example UA"]
}
```
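Before opening a pull request, a contributor could sanity-check a new entry locally. The following is a hypothetical check, not the repository's own test suite. It assumes the keys shown in the example above, that addition_date follows the year/month/day layout of "2014/02/28", and that every string in "instances" should be matched by "pattern".

```python
import json
import re
from datetime import datetime


def check_entry(entry: dict) -> list[str]:
    """Hypothetical check: return the problems found in one entry (empty if none)."""
    problems = []
    for key in ("pattern", "addition_date", "url"):
        if key not in entry:
            problems.append(f"missing key: {key}")
    try:
        regex = re.compile(entry.get("pattern", ""))
    except (re.error, TypeError) as exc:
        return problems + [f"invalid regex: {exc}"]
    # addition_date is assumed to use the year/month/day layout of the example.
    try:
        datetime.strptime(entry.get("addition_date", ""), "%Y/%m/%d")
    except ValueError:
        problems.append("addition_date is not YYYY/MM/DD")
    # Each example User-Agent in "instances" should be matched by the pattern.
    for ua in entry.get("instances", []):
        if not regex.search(ua):
            problems.append(f"pattern does not match instance: {ua!r}")
    return problems


example = json.loads("""
{
  "pattern": "rogerbot",
  "addition_date": "2014/02/28",
  "url": "http://moz.com/help/pro/what-is-rogerbot-",
  "instances" : ["rogerbot/2.3 example UA"]
}
""")
print(check_entry(example))  # → []
```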

The list is under an MIT License. Versions prior to Nov 7, 2016 were under a CC-SA license.

There are a few wrapper libraries that use this data to detect bots:

Other systems for spotting robots, crawlers, and spiders that you may want to consider are:


Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Ensure open-source compliance with Socket’s License Enforcement Beta. Set up your License Policy and secure your software!

Product

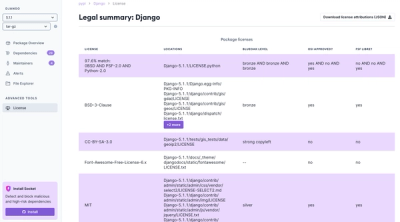

We're launching a new set of license analysis and compliance features for analyzing, managing, and complying with licenses across a range of supported languages and ecosystems.

Product

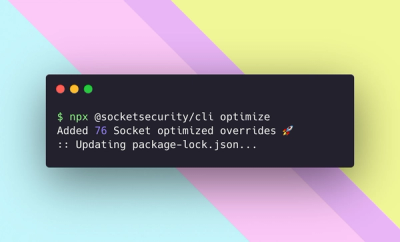

We're excited to introduce Socket Optimize, a powerful CLI command to secure open source dependencies with tested, optimized package overrides.