Unlock the Value of GenAI with Iso!

The isoai package is designed to facilitate the integration, transformation, deployment, and tracing of AI models. It provides a suite of tools that make it easy to work with different types of neural network architectures and deploy them in a containerized environment.

To install the isoai package, use the following command:

pip install isoai

The GPT_bitnet module contains implementations of transformers using the BitNet architecture.
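To make the idea of low-bit weights concrete, here is a minimal sketch of absmean ternary quantization, the scheme described for BitNet b1.58. It is an illustration in plain Python, not isoai's code: the function name `absmean_ternary` is hypothetical, and which BitNet variant isoai implements is not stated in this document.

```python
def absmean_ternary(weights, eps=1e-8):
    """Quantize a flat list of floats to {-1, 0, +1} with one shared scale.

    Hypothetical helper for illustration; not part of the isoai API.
    """
    # The scale is the mean absolute value of the weights.
    scale = sum(abs(w) for w in weights) / len(weights)
    # Divide by the scale, round to the nearest integer, clip to [-1, 1].
    quantized = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return quantized, scale


weights = [0.9, -0.05, -1.2, 0.4, 0.02, -0.6]
q, scale = absmean_ternary(weights)

# Approximate reconstruction of the original weights.
dequantized = [v * scale for v in q]
print(q, round(scale, 4))
```

Each weight is stored as one of three values, and the single floating-point scale is kept alongside them so the layer output can be rescaled.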

The torch_bitnet module provides implementations for RMSNorm and TorchBitNet layers.
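For readers unfamiliar with RMSNorm, the following sketch shows the standard computation on a single vector: divide by the root mean square of the elements, then apply a per-element gain. It uses plain Python lists rather than tensors, and the function name `rms_norm` is an assumption for illustration, not the isoai implementation.

```python
import math


def rms_norm(x, gain=None, eps=1e-5):
    """Normalize x by its root mean square, then scale by an optional gain.

    Hypothetical helper for illustration; eps mirrors norm_eps = 1e-5
    used in the usage examples.
    """
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    if gain is None:
        gain = [1.0] * len(x)  # identity gain
    return [v * inv_rms * g for v, g in zip(x, gain)]


y = rms_norm([3.0, 4.0])
# After normalization the mean of the squared outputs is close to 1.
mean_sq_out = sum(v * v for v in y) / len(y)
print(y, mean_sq_out)
```

Unlike LayerNorm, this does not subtract the mean, so it needs only one reduction over the vector.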

The replace_linear_layers module provides functionality to replace traditional linear layers in a model with TorchBitNet layers.
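The general technique behind this kind of replacement is a recursive walk over the model's submodules that swaps each matching layer in place. The sketch below shows that walk on a framework-free stand-in; every class here (`Module`, `Linear`, `BitLinear`, `Block`, `Model`) and the function `replace_linears` are hypothetical, and the real isoai function may work differently.

```python
class Module:
    """Minimal stand-in for a framework module: children are plain attributes."""

    def children(self):
        # Return a fresh dict so callers can reassign attributes while iterating.
        return {k: v for k, v in vars(self).items() if isinstance(v, Module)}


class Linear(Module):
    def __init__(self, in_features, out_features):
        self.in_features = in_features
        self.out_features = out_features


class BitLinear(Linear):
    """Hypothetical low-bit drop-in with the same shape as Linear."""


class Block(Module):
    def __init__(self):
        self.attn_proj = Linear(8, 8)
        self.ffn = Linear(8, 32)


class Model(Module):
    def __init__(self):
        self.block = Block()
        self.head = Linear(8, 100)


def replace_linears(module):
    """Recursively swap every plain Linear child for a BitLinear of equal shape."""
    replaced = 0
    for name, child in module.children().items():
        if type(child) is Linear:
            setattr(module, name, BitLinear(child.in_features, child.out_features))
            replaced += 1
        else:
            replaced += replace_linears(child)
    return replaced


model = Model()
count = replace_linears(model)
print(count, type(model.block.ffn).__name__)
```

In PyTorch the same pattern is usually written with `named_children()` and `setattr`, which is why the replacement can happen in place without rebuilding the model.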

The LLama_bitnet module includes the BitNetLLAMA model, which leverages the BitNet architecture.

The MoE_bitnet module implements the Mixture of Experts (MoE) architecture using BitNet.
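As a rough picture of how a Mixture of Experts layer routes its input, the sketch below implements generic top-k gating: pick the k experts with the highest gate scores, renormalize those scores with a softmax, and mix the chosen experts' outputs. The gate scores are hard-coded here (in a real layer they come from a learned projection), the experts are toy functions, and all names are assumptions rather than the isoai API.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k=2):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts."""
    order = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    top = order[:k]
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x, experts, gate_logits, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))


# Four toy experts, matching num_experts = 4 in the usage example.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: -x, lambda x: x ** 2]
gate_logits = [0.1, 2.0, -1.0, 2.0]

routes = route_top_k(gate_logits, k=2)
y = moe_forward(3.0, experts, gate_logits, k=2)
print(routes, y)
```

Only the selected experts are evaluated, which is what lets an MoE model grow its parameter count without a proportional increase in compute per token.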

The containerize module helps in containerizing models into Docker containers.
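What the generated Dockerfile contains is not documented here. As a sketch of the general idea only, the function below renders a small Dockerfile for a model directory; the function name, the base image, and the `serve.py` entry point are all hypothetical placeholders, not output of the isoai `Containerize` class.

```python
def render_dockerfile(model_dir, base_image="python:3.11-slim", entrypoint="serve.py"):
    """Build Dockerfile text for a model directory (illustrative placeholders only)."""
    lines = [
        f"FROM {base_image}",
        "WORKDIR /app",
        # Copy the model directory into the image.
        f"COPY {model_dir}/ /app/{model_dir}/",
        "RUN pip install --no-cache-dir isoai",
        # serve.py is a hypothetical entry point, not a file shipped by isoai.
        f'CMD ["python", "{model_dir}/{entrypoint}"]',
    ]
    return "\n".join(lines) + "\n"


dockerfile_text = render_dockerfile("isoai")
print(dockerfile_text)
```

Returning the text instead of writing it directly keeps the rendering step easy to inspect before anything is saved to disk.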

The oscontainerization module extends containerization functionality with automatic OS detection.
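One plausible way to detect the operating system is the standard library's `platform.system()`, which returns names such as `Linux`, `Darwin`, or `Windows`. The sketch below maps that value to a base image; the mapping, the image tags, and the function name are illustrative assumptions, and the isoai module may use a different mechanism.

```python
import platform

# Illustrative mapping only; the tags are placeholders to adapt as needed.
DEFAULT_IMAGE = "python:3.11-slim"
BASE_IMAGES = {
    "Linux": "python:3.11-slim",
    "Darwin": "python:3.11-slim",  # macOS hosts run Linux containers
    "Windows": "python:3.11-windowsservercore-ltsc2022",
}


def pick_base_image(system=None):
    """Choose a base image for the given OS name, defaulting to the host OS."""
    system = system or platform.system()
    return BASE_IMAGES.get(system, DEFAULT_IMAGE)


print(platform.system(), "->", pick_base_image())
```

Accepting the OS name as an optional argument makes the choice easy to override, for example when building an image for a different target than the host.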

The isotrace module provides tools for tracing and logging variables within the code.
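To illustrate one way variable tracing can work, the sketch below uses the standard library's `sys.settrace` hook to capture a function's local variables at the moment it returns, then serializes them to JSON. The helper `trace_locals` and the sample function `scale` are hypothetical; how isoai's `Autotrace` gathers its data is not described in this document.

```python
import json
import sys


def trace_locals(func, *args, **kwargs):
    """Call func and capture its local variables at the moment it returns."""
    captured = {}

    def tracer(frame, event, arg):
        if frame.f_code is not func.__code__:
            return None  # ignore frames other than func's own
        if event == "return":
            captured.update(dict(frame.f_locals))
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args, **kwargs)
    finally:
        sys.settrace(previous)  # always restore the earlier trace hook
    return result, captured


def scale(values, factor):
    total = sum(values)
    scaled = total * factor
    return scaled


result, variables = trace_locals(scale, [1, 2, 3], factor=2)
report = json.dumps(variables, sort_keys=True)
print(report)
```

The captured values must be JSON-serializable for the final step to succeed; a fuller tool would fall back to `repr()` for objects that are not.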

# Example: build a Transformer, then replace its linear layers with TorchBitNet layers.
import torch
from isoai.isobits.GPT_bitnet import Transformer
from isoai.isobits.replace_linear_layers import replace_linears_with_torchbitnet

class ModelArgs:
    def __init__(self):
        self.vocab_size = 30522
        self.dim = 768
        self.n_heads = 12
        self.n_kv_heads = 12
        self.max_seq_len = 512
        self.norm_eps = 1e-5
        self.multiple_of = 64
        self.ffn_dim_multiplier = 4
        self.n_layers = 12
        self.max_batch_size = 32

args = ModelArgs()

# Random token IDs: a batch of 2 sequences, each max_seq_len tokens long.
tokens = torch.randint(0, args.vocab_size, (2, args.max_seq_len))

transformer = Transformer(args)
output = transformer(tokens, start_pos=0)
print("Original Transformer Description: ", transformer)

# Replace the linear layers with TorchBitNet layers and print the result.
replace_linears_with_torchbitnet(transformer, norm_dim=10)
print("Bitnet Transformer Description: ", transformer)

# Example: run a forward pass through the BitNetLLAMA model.
import torch
from isoai.isobits.LLama_bitnet import BitNetLLAMA

class ModelArgs:
    def __init__(self):
        self.vocab_size = 30522
        self.dim = 768
        self.n_heads = 12
        self.n_kv_heads = 12
        self.max_seq_len = 512
        self.norm_eps = 1e-5
        self.multiple_of = 64
        self.ffn_dim_multiplier = 4
        self.max_batch_size = 32
        self.n_layers = 12

args = ModelArgs()

# Random token IDs: a batch of 2 sequences, each max_seq_len tokens long.
tokens = torch.randint(0, args.vocab_size, (2, args.max_seq_len))

bitnet_llama = BitNetLLAMA(args)
output = bitnet_llama(tokens, start_pos=0)
print(output.shape)

# Example: run a forward pass through the Mixture of Experts transformer.
import torch
from isoai.isobits.MoE_bitnet import MoETransformer

class ModelArgs:
    def __init__(self):
        self.vocab_size = 30522
        self.dim = 768
        self.n_heads = 12
        self.n_kv_heads = 12
        self.max_seq_len = 512
        self.norm_eps = 1e-5
        self.multiple_of = 64
        self.ffn_dim_multiplier = 4
        self.max_batch_size = 32
        self.n_layers = 12
        self.num_experts = 4  # Number of experts in MoE layers

args = ModelArgs()

# Random token IDs: a batch of 2 sequences, each max_seq_len tokens long.
tokens = torch.randint(0, args.vocab_size, (2, args.max_seq_len))

moe_transformer = MoETransformer(args)
output = moe_transformer(tokens, start_pos=0)
print(output.shape)

# Example: containerize the model at model_path and write the result to output_path.
from isoai.isodeploy.containerize import Containerize

model_path = "isoai"
output_path = "Dockerfile"

containerize = Containerize(model_path)
containerize.run(output_path)

# Example: containerize the model with automatic OS detection.
from isoai.isodeploy.oscontainerization import OSContainerize

model_path = "isoai"
output_path = "Dockerfile"

containerize = OSContainerize(model_path)
containerize.run(output_path)

# Example: trace the code under search_path and write the result to output_file.
from isoai.isotrace.autotrace import Autotrace

search_path = "isoai"
output_file = "output.json"

autotrace = Autotrace(search_path)
autotrace.run(output_file)

FAQs

Compress, Trace and Deploy Your Models with Iso!

We found that isoai demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

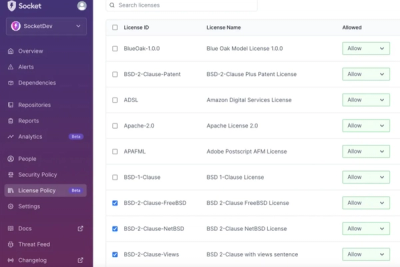

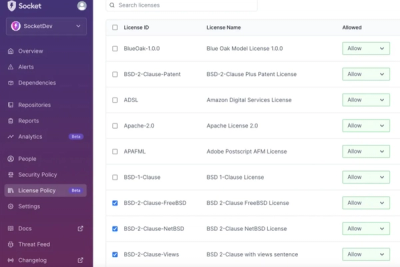

Ensure open-source compliance with Socket’s License Enforcement Beta. Set up your License Policy and secure your software!

Product

We're launching a new set of license analysis and compliance features for analyzing, managing, and complying with licenses across a range of supported languages and ecosystems.

Product

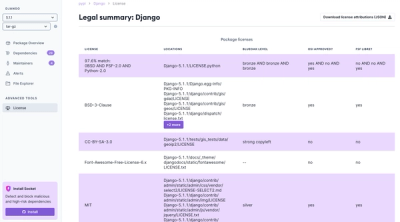

We're excited to introduce Socket Optimize, a powerful CLI command to secure open source dependencies with tested, optimized package overrides.