github.com/vsantosalmeida/csv-parser

A Go library that processes CSV files and normalizes the data into an employee entity.

First, build the binary.

make build

Copy your CSV files to the bin directory.

cp *.csv ./bin

Run the binary with the files parameter (-f in the command below), separating each file name with a comma.

cd bin

./csv-parser.bin -f=roster1.csv,roster2.csv

For each file, the program prompts for the names of its columns. If your file doesn't have a given column, just press Enter.
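The prompt step above can be pictured as a lookup from the names you type to positions in the CSV header. The following is only a minimal sketch of that idea, not the library's actual code: the ColumnMapping and ColumnIndexes types, the resolveColumns function, and the name/email/salary field set are all hypothetical.

```go
package main

import "strings"

// ColumnMapping holds the header names typed at the prompt for one file.
// An empty string means the user pressed Enter (the file lacks that column).
// The field set (name, email, salary) is hypothetical.
type ColumnMapping struct {
	Name   string
	Email  string
	Salary string
}

// ColumnIndexes stores the position of each field in a CSV row,
// or -1 when the column is absent or was not found in the header.
type ColumnIndexes struct {
	Name, Email, Salary int
}

// resolveColumns looks up each user-supplied column name in the header row.
// Matching ignores case and surrounding whitespace (an assumption).
func resolveColumns(header []string, m ColumnMapping) ColumnIndexes {
	find := func(want string) int {
		want = strings.TrimSpace(want)
		if want == "" {
			return -1
		}
		for i, h := range header {
			if strings.EqualFold(strings.TrimSpace(h), want) {
				return i
			}
		}
		return -1
	}
	return ColumnIndexes{
		Name:   find(m.Name),
		Email:  find(m.Email),
		Salary: find(m.Salary),
	}
}
```

A caller would read the first row of the file with encoding/csv, pass it as header, and then use the returned indexes to pick values out of each following row, skipping any field whose index is -1.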


After execution, if the files are processed successfully, one or both of the following files are created with the results.

employee-{timestamp}.json

badData-{timestamp}.json

Check test coverage (this opens the coverage report in your browser).

make test cover-html

For this project, I chose a simple way to process the files: the program receives the structure of each CSV file from user input, which makes it more likely that the parser interprets each file correctly. For the architecture, I used Clean Architecture, a pattern for developing software that is independent of frameworks, UI, or any external technology. In my opinion, it gives the project a better structure and makes the code easier to extend with new features.

Clean Architecture by Elton Minetto
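To make the layering idea concrete, here is a minimal sketch of how an entity, a use case, and an outer adapter could be separated in Go. It is not the project's actual layout: the Employee fields, the validation rule, the Writer interface, NormalizeService, and memoryWriter are all hypothetical names chosen for illustration.

```go
package main

import (
	"errors"
	"strings"
)

// Employee is the entity layer: plain data plus its own rule.
// The fields and the rule below are illustrative only.
type Employee struct {
	Name  string
	Email string
}

// Validate reports whether the record is usable.
func (e Employee) Validate() error {
	if strings.TrimSpace(e.Name) == "" || !strings.Contains(e.Email, "@") {
		return errors.New("invalid employee")
	}
	return nil
}

// Writer is the boundary the use case depends on.
// Outer layers (files, databases, tests) implement it.
type Writer interface {
	Write(valid, invalid []Employee) error
}

// NormalizeService is the use case layer. It knows nothing about
// CSV, JSON, or the file system.
type NormalizeService struct {
	out Writer
}

// NewNormalizeService wires the use case to an output adapter.
func NewNormalizeService(out Writer) *NormalizeService {
	return &NormalizeService{out: out}
}

// Process splits records into valid and invalid and hands both to the Writer.
func (s *NormalizeService) Process(records []Employee) error {
	var valid, invalid []Employee
	for _, r := range records {
		if r.Validate() != nil {
			invalid = append(invalid, r)
			continue
		}
		valid = append(valid, r)
	}
	return s.out.Write(valid, invalid)
}

// memoryWriter is an in-memory adapter, handy for tests.
type memoryWriter struct {
	valid, invalid []Employee
}

// Write keeps the records in memory instead of writing files.
func (m *memoryWriter) Write(valid, invalid []Employee) error {
	m.valid, m.invalid = valid, invalid
	return nil
}
```

Because the use case only sees the Writer interface, swapping the in-memory adapter for one that writes JSON files would not require touching NormalizeService, which is the kind of independence from external technologies the pattern aims for.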

FAQs

Unknown package

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

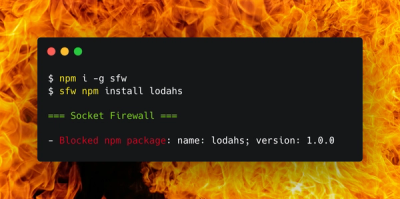

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.

Research

Socket uncovers malicious Rust crates impersonating fast_log to steal Solana and Ethereum wallet keys from source code.