Security News

Package Maintainers Call for Improvements to GitHub’s New npm Security Plan

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

@biopassid/face-sdk-react-native


Key Features • Customizations • Quick Start Guide • Prerequisites • Installation • How to use • LicenseKey • FaceConfig • Something else • Changelog • Support

Increase the security of your applications without harming your user experience.

All customization available:

Enable or disable components:

Change all colors:

Check out our official documentation for more in-depth information on BioPass ID.

| | Android | iOS |
|---|---|---|
| Support | SDK 24+ | iOS 15+ |

- A device with a camera

- License key

- Internet connection is required to verify the license

npm install @biopassid/face-sdk-react-native

Change the minimum Android SDK version to 24 (or higher) in your android/app/build.gradle file.

minSdkVersion 24

Requires iOS 15.0 or higher.

Add to the ios/Info.plist:

Add the Privacy - Camera Usage Description key with a usage description. If editing Info.plist as text, add:

<key>NSCameraUsageDescription</key>

<string>Your camera usage description</string>

Then go into your project's ios folder and run pod install.

# Go into ios folder

$ cd ios

# Install dependencies

$ pod install

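The following privacy manifest (PrivacyInfo.xcprivacy) declares the collected data types and the accessed API types:

<?xml version="1.0" encoding="UTF-8"?>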

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>NSPrivacyCollectedDataTypes</key>

<array>

<dict>

<key>NSPrivacyCollectedDataType</key>

<string>NSPrivacyCollectedDataTypeOtherUserContent</string>

<key>NSPrivacyCollectedDataTypeLinked</key>

<false/>

<key>NSPrivacyCollectedDataTypeTracking</key>

<false/>

<key>NSPrivacyCollectedDataTypePurposes</key>

<array>

<string>NSPrivacyCollectedDataTypePurposeAppFunctionality</string>

</array>

</dict>

<dict>

<key>NSPrivacyCollectedDataType</key>

<string>NSPrivacyCollectedDataTypeDeviceID</string>

<key>NSPrivacyCollectedDataTypeLinked</key>

<false/>

<key>NSPrivacyCollectedDataTypeTracking</key>

<false/>

<key>NSPrivacyCollectedDataTypePurposes</key>

<array>

<string>NSPrivacyCollectedDataTypePurposeAppFunctionality</string>

</array>

</dict>

</array>

<key>NSPrivacyTracking</key>

<false/>

<key>NSPrivacyAccessedAPITypes</key>

<array>

<dict>

<key>NSPrivacyAccessedAPITypeReasons</key>

<array>

<string>CA92.1</string>

</array>

<key>NSPrivacyAccessedAPIType</key>

<string>NSPrivacyAccessedAPICategoryUserDefaults</string>

</dict>

</array>

</dict>

</plist>

You can use the library in Expo via a development build.

You will need to use the expo-build-properties plugin.

npx expo install expo-build-properties

Add the configuration in the app.json file.

{

"plugins" : [

[

"expo-build-properties",

{

"android": {

"extraMavenRepos": [

{

"url": "https://packagecloud.io/biopassid/FaceSDKAndroid/maven2"

},

{

"url": "https://packagecloud.io/biopassid/dlibwrapper/maven2"

}

]

}

}

]

]

}

Calling @biopassid/face-sdk-react-native in your React Native project is as easy as follows:

import React from 'react';

import {StyleSheet, View, Button} from 'react-native';

import {useFace} from '@biopassid/face-sdk-react-native';

export default function App() {

const {takeFace} = useFace();

async function onPress() {

await takeFace({

config: {

licenseKey: 'your-license-key',

// If you want to use Liveness, uncomment this line

// liveness: {enabled: true},

},

onFaceCapture: (

image,

faceAttributes /* Only available on Liveness */,

) => {

console.log('onFaceCapture:', image.substring(0, 20));

// Only available on Liveness

console.log('onFaceCapture:', faceAttributes);

},

// Only available on Liveness

onFaceDetected: faceAttributes => {

console.log('onFaceDetected:', faceAttributes);

},

});

}

return (

<View style={styles.container}>

<Button onPress={onPress} title="Capture Face" />

</View>

);

}

const styles = StyleSheet.create({

container: {

flex: 1,

alignItems: 'center',

justifyContent: 'center',

backgroundColor: '#FFFFFF',

},

});

In this example we use facial detection in liveness mode to perform automatic facial capture and send it to the BioPass ID API.

This example uses Liveness from the Multibiometrics plan.

For this, you will need an API key to make requests to the BioPass ID API. To get your API key, contact us through our website, BioPass ID.

import React from 'react';

import {StyleSheet, View, Button} from 'react-native';

import {useFace} from '@biopassid/face-sdk-react-native';

export default function App() {

const {takeFace} = useFace();

async function onPress() {

await takeFace({

config: {

licenseKey: 'your-license-key',

liveness: {enabled: true},

},

onFaceCapture: async (image, faceAttributes) => {

// Create headers passing your api key

const headers = {

'Content-Type': 'application/json',

'Ocp-Apim-Subscription-Key': 'your-api-key',

};

// Create json body

const body = JSON.stringify({

Spoof: {Image: image},

});

// Execute request to BioPass ID API

const response = await fetch(

'https://api.biopassid.com/multibiometrics/v2/liveness',

{

method: 'POST',

headers,

body,

},

);

const data = await response.json();

// Handle API response

console.log('Response status: ', response.status);

console.log('Response body: ', data);

},

onFaceDetected: faceAttributes => {

console.log('onFaceDetected:', faceAttributes);

},

});

}

return (

<View style={styles.container}>

<Button onPress={onPress} title="Capture Face" />

</View>

);

}

const styles = StyleSheet.create({

container: {

flex: 1,

alignItems: 'center',

justifyContent: 'center',

backgroundColor: '#FFFFFF',

},

});
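The request built inside onFaceCapture above can be factored into a small pure function, which makes it easier to test and keeps the API key out of inline literals. This is a sketch under our own assumptions: `buildLivenessRequest` and `LivenessRequest` are hypothetical names, not part of the SDK; only the endpoint, the header name and the body shape are copied from the example.

```typescript
// Endpoint copied from the liveness example above.
const LIVENESS_URL = 'https://api.biopassid.com/multibiometrics/v2/liveness';

// Hypothetical shape: the URL plus the options object passed to fetch().
interface LivenessRequest {
  url: string;
  init: {
    method: 'POST';
    headers: Record<string, string>;
    body: string;
  };
}

// Hypothetical helper: builds the same request as the example, without sending it.
function buildLivenessRequest(
  apiKey: string,
  base64Image: string,
): LivenessRequest {
  if (!apiKey) {
    throw new Error('An API key is required to call the BioPass ID API');
  }
  return {
    url: LIVENESS_URL,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Ocp-Apim-Subscription-Key': apiKey,
      },
      // Same JSON body as the example: the captured image under Spoof.Image.
      body: JSON.stringify({Spoof: {Image: base64Image}}),
    },
  };
}
```

Inside onFaceCapture you would then call `const {url, init} = buildLivenessRequest(apiKey, image);` followed by `await fetch(url, init);`, with `apiKey` loaded from wherever your app keeps its secrets.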

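In this example we use face recognition to extract a template from each of two captured faces and verify whether they match.

import React, {useEffect, useState} from 'react';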

import {

StyleSheet,

View,

ScrollView,

Text,

TouchableOpacity,

Image,

} from 'react-native';

import {

FaceConfig,

FaceExtract,

useFace,

useRecognition,

} from '@biopassid/face-sdk-react-native';

export default function App() {

const [imageA, setImageA] = useState<string | null>();

const [imageB, setImageB] = useState<string | null>();

const [faceExtractA, setFaceExtractA] = useState<FaceExtract | null>();

const [faceExtractB, setFaceExtractB] = useState<FaceExtract | null>();

const {takeFace} = useFace();

const {closeRecognition, extract, startRecognition, verify} =

useRecognition();

const config: FaceConfig = {licenseKey: 'your-license-key'};

useEffect(() => {

startRecognition('your-license-key');

return () => closeRecognition();

}, [closeRecognition, startRecognition]);

async function extractA() {

await takeFace({

config: config,

onFaceCapture: async (image, faceAttributes) => {

setImageA(image);

const faceExtractA = await extract(image);

setFaceExtractA(faceExtractA);

console.log('ExtractA:', faceExtractA);

},

});

}

async function extractB() {

await takeFace({

config: config,

onFaceCapture: async (image, faceAttributes) => {

setImageB(image);

const faceExtractB = await extract(image);

setFaceExtractB(faceExtractB);

console.log('ExtractB:', faceExtractB);

},

});

}

async function verifyTemplates() {

if (faceExtractA && faceExtractB) {

const faceVerify = await verify(

faceExtractA!.template,

faceExtractB!.template,

);

console.log('Verify:', faceVerify);

}

}

return (

<ScrollView style={styles.container}>

<Text style={styles.title}>Face Recognition</Text>

{imageA && (

<View style={styles.imageContainer}>

<Image

style={styles.image}

source={{

uri: `data:image/png;base64,${imageA}`,

}}

/>

</View>

)}

<TouchableOpacity style={styles.button} onPress={extractA}>

<Text style={styles.buttonText}>Extract A</Text>

</TouchableOpacity>

{imageB && (

<View style={styles.imageContainer}>

<Image

style={styles.image}

source={{

uri: `data:image/png;base64,${imageB}`,

}}

/>

</View>

)}

<TouchableOpacity style={styles.button} onPress={extractB}>

<Text style={styles.buttonText}>Extract B</Text>

</TouchableOpacity>

<TouchableOpacity style={styles.button} onPress={verifyTemplates}>

<Text style={styles.buttonText}>Verify Templates</Text>

</TouchableOpacity>

</ScrollView>

);

}

const styles = StyleSheet.create({

container: {

flex: 1,

paddingHorizontal: 30,

backgroundColor: '#FFF',

},

title: {

marginTop: 54,

marginBottom: 15,

color: '#000',

fontSize: 32,

fontWeight: 'bold',

textAlign: 'center',

},

imageContainer: {

marginTop: 12,

alignItems: 'center',

justifyContent: 'center',

},

image: {

width: 150,

height: 150,

resizeMode: 'contain',

marginBottom: 20,

},

button: {

marginBottom: 15,

alignItems: 'center',

backgroundColor: '#D6A262',

padding: 10,

},

buttonText: {

fontSize: 25,

fontWeight: 'bold',

color: '#FFF',

},

});

In this example we use react-native-sqlite-storage to save templates locally.

First, add the react-native-sqlite-storage package.

npm install react-native-sqlite-storage

Because we’re using TypeScript, we can install @types/react-native-sqlite-storage to use the included types. If you stick to JavaScript, you don’t have to install this library.

Define the Template data model:

export interface Template {

id: number;

image: string;

template: string;

}

Create the DBHelper to handle DB operations:

import {

enablePromise,

openDatabase,

SQLiteDatabase,

} from 'react-native-sqlite-storage';

import {Template} from './Template';

const tableName = 'templates';

enablePromise(true);

export async function getDBConnection() {

return openDatabase({name: 'template.db', location: 'default'});

}

export async function createTable(db: SQLiteDatabase) {

const query = `CREATE TABLE IF NOT EXISTS ${tableName} (id INTEGER PRIMARY KEY AUTOINCREMENT, image TEXT, template TEXT)`;

await db.executeSql(query);

}

export async function getTemplates(db: SQLiteDatabase): Promise<Template[]> {

try {

const templates: Template[] = [];

const results = await db.executeSql(`SELECT * FROM ${tableName}`);

results.forEach(result => {

for (let index = 0; index < result.rows.length; index++) {

templates.push(result.rows.item(index));

}

});

return templates;

} catch (error) {

console.error(error);

throw Error('Failed to get templates');

}

}

export async function saveTemplate(

db: SQLiteDatabase,

image: string,

template: string,

) {

const insertQuery = `INSERT INTO ${tableName} (image, template) values (?, ?)`;

return db.executeSql(insertQuery, [image, template]);

}

export async function deleteTemplate(db: SQLiteDatabase, id: number) {

const deleteQuery = `DELETE FROM ${tableName} WHERE id = ?`;

await db.executeSql(deleteQuery, [id]);

}

In your App.tsx:

import React, {useCallback, useEffect, useState} from 'react';

import {

StyleSheet,

View,

ScrollView,

Text,

TouchableOpacity,

Image,

Button,

Alert,

} from 'react-native';

import {

FaceConfig,

useFace,

useRecognition,

} from '@biopassid/face-sdk-react-native';

import {Template} from './Template';

import {

createTable,

deleteTemplate,

getDBConnection,

getTemplates,

saveTemplate,

} from './DBHelper';

export default function App() {

const [templates, setTemplates] = useState<Template[]>([]);

const {takeFace} = useFace();

const {closeRecognition, extract, startRecognition, verify} =

useRecognition();

const config: FaceConfig = {licenseKey: 'your-license-key'};

const loadDataCallback = useCallback(async () => {

try {

const storedTemplates = await getAll();

setTemplates(storedTemplates);

} catch (error) {

console.error(error);

}

}, []);

useEffect(() => {

loadDataCallback();

startRecognition('your-license-key');

return () => closeRecognition();

}, [closeRecognition, loadDataCallback, startRecognition]);

async function getAll() {

const db = await getDBConnection();

await createTable(db);

return await getTemplates(db);

}

async function extractTemplate() {

await takeFace({

config: config,

onFaceCapture: async (image, faceAttributes) => {

try {

const faceExtract = await extract(image);

if (faceExtract) {

const db = await getDBConnection();

await saveTemplate(db, image, faceExtract!.template);

loadDataCallback();

}

} catch (error) {

console.error(error);

}

},

});

}

async function verifyTemplate() {

await takeFace({

config: config,

onFaceCapture: async (image, faceAttributes) => {

try {

const faceExtract = await extract(image);

if (faceExtract) {

const templates = await getAll();

const filteredTemplates: Template[] = [];

for (const template of templates) {

const faceVerify = await verify(

faceExtract!.template,

template.template,

);

if (faceVerify?.isGenuine) {

filteredTemplates.push(template);

}

}

if (filteredTemplates.length) {

setTemplates(filteredTemplates);

} else {

Alert.alert(

'Template not found',

'No template was found compatible with the captured image.',

[{text: 'OK', onPress: () => {}}],

);

}

}

} catch (error) {

console.error(error);

}

},

});

}

async function removeTemplate(template: Template) {

try {

const db = await getDBConnection();

await deleteTemplate(db, template.id);

loadDataCallback();

} catch (error) {

console.error(error);

}

}

return (

<View style={styles.container}>

<Text style={styles.title}>Face Recognition</Text>

<ScrollView style={styles.templateList}>

<View>

{templates.map(template => (

<TouchableOpacity

key={template.id}

onLongPress={() => removeTemplate(template)}>

<View style={styles.templateItem}>

<Image

style={styles.image}

source={{

uri: `data:image/png;base64,${template.image}`,

}}

/>

<Text style={styles.templateItemTitle}>{template.id}</Text>

</View>

</TouchableOpacity>

))}

</View>

</ScrollView>

<View style={styles.buttonContainer}>

<Button onPress={extractTemplate} title="Add template" />

</View>

<View style={styles.buttonContainer}>

<Button onPress={verifyTemplate} title="Verify Template" />

</View>

<View style={styles.buttonContainer}>

<Button onPress={loadDataCallback} title="Get All" />

</View>

</View>

);

}

const styles = StyleSheet.create({

container: {

flex: 1,

paddingHorizontal: 30,

backgroundColor: '#FFF',

},

title: {

marginTop: 54,

marginBottom: 15,

color: '#000',

fontSize: 32,

fontWeight: 'bold',

textAlign: 'center',

},

buttonContainer: {

marginBottom: 15,

},

templateList: {

marginBottom: 15,

},

templateItem: {

flex: 1,

flexDirection: 'row',

alignItems: 'center',

marginBottom: 15,

},

image: {

height: 40,

width: 40,

borderRadius: 40,

},

templateItemTitle: {

marginLeft: 20,

color: '#000',

},

});

To use @biopassid/face-sdk-react-native you need a license key. Setting it is as simple as setting another attribute:

// Face Detection

import { useFace } from '@biopassid/face-sdk-react-native';

const { takeFace } = useFace();

await takeFace({

config: { licenseKey: 'your-license-key' },

onFaceCapture: (image, faceAttributes) => {},

});

// Face Recognition

import { useRecognition } from '@biopassid/face-sdk-react-native';

const { startRecognition } = useRecognition();

startRecognition('your-license-key');

You can set a custom callback to receive the captured image. Note: FaceAttributes will only be available if liveness mode is enabled. You can write your own callback following this example:

import { useFace } from '@biopassid/face-sdk-react-native';

const { takeFace } = useFace();

await takeFace({

config: { licenseKey: 'your-license-key' },

onFaceCapture: (image, faceAttributes) => {

console.log('onFaceCapture:', image.substring(0, 20));

console.log('onFaceCapture:', faceAttributes);

},

onFaceDetected: (faceAttributes) => {

console.log('onFaceDetected:', faceAttributes);

},

});

| Name | Type | Description |
|---|---|---|
| faceProp | number | Proportion of the area occupied by the face in the image, in percentage |
| faceWidth | number | Face width, in pixels |
| faceHeight | number | Face height, in pixels |
| ied | number | Distance between left eye and right eye, in pixels |
| bbox | FaceRect | Face bounding box |
| rollAngle | number | The Euler angle Z of the head. Indicates the rotation of the face about the axis pointing out of the image. Positive z euler angle is a counter-clockwise rotation within the image plane |
| pitchAngle | number | The Euler angle X of the head. Indicates the rotation of the face about the horizontal axis of the image. Positive x euler angle is when the face is turned upward in the image that is being processed |
| yawAngle | number | The Euler angle Y of the head. Indicates the rotation of the face about the vertical axis of the image. Positive y euler angle is when the face is turned towards the right side of the image that is being processed |
| averageLightIntensity | number | The average intensity of the pixels in the image |
| leftEyeOpenProbability? // Android Only | number | Probability that the face’s left eye is open, in percentage |
| rightEyeOpenProbability? // Android Only | number | Probability that the face’s right eye is open, in percentage |
| smilingProbability? // Android Only | number | Probability that the face is smiling, in percentage |

| Name | Type |
|---|---|
| left | number |
| top | number |
| right | number |
| bottom | number |
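Because onFaceDetected receives these attributes in liveness mode, you might want a client-side check of your own, for example to log how far a face is from frontal. The sketch below uses local interfaces that mirror only the fields it needs; `bboxSize` and `isFaceAligned` are hypothetical helpers, and it assumes bbox coordinates are image pixels with `right > left` and `bottom > top`. The SDK's own alignment logic is internal and may compare angles differently.

```typescript
// Local mirrors of the documented shapes (only the fields used here).
interface FaceRect {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

interface FaceAttributesSubset {
  bbox: FaceRect;
  rollAngle: number;
  pitchAngle: number;
  yawAngle: number;
}

interface AngleThresholds {
  rollThresh: number;
  pitchThresh: number;
  yawThresh: number;
}

// Width and height of a bounding box, assuming pixel coordinates.
function bboxSize(rect: FaceRect): {width: number; height: number} {
  return {width: rect.right - rect.left, height: rect.bottom - rect.top};
}

// Hypothetical check: true when every Euler angle lies within
// plus or minus its threshold.
function isFaceAligned(
  attrs: FaceAttributesSubset,
  t: AngleThresholds,
): boolean {
  return (
    Math.abs(attrs.rollAngle) <= t.rollThresh &&
    Math.abs(attrs.pitchAngle) <= t.pitchThresh &&
    Math.abs(attrs.yawAngle) <= t.yawThresh
  );
}
```

In an onFaceDetected callback you could pass the received faceAttributes together with the same rollThresh, pitchThresh and yawThresh values you configured for liveness.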

The SDK supports two types of facial detection. Their descriptions and usage are shown below:

Face detection is a simpler facial detection mode that supports face centering and proximity, and it is the SDK's default detection. See FaceDetectionOptions for the available settings. Note: for this functionality to work, liveness mode and continuous capture must be disabled. See below how to use it:

const config: FaceConfig = {

licenseKey: 'your-license-key',

liveness: { enabled: false }, // liveness mode must be disabled. This is the default value

continuousCapture: { enabled: false }, // continuous capture must be disabled. This is the default value

faceDetection: {

enabled: true,

autoCapture: true,

multipleFacesEnabled: false,

timeToCapture: 3000, // time in milliseconds

maxFaceDetectionTime: 60000, // time in milliseconds

scoreThreshold: 0.5,

},

};
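The notes in this section imply an order between the modes: face detection needs both liveness and continuous capture disabled, and continuous capture needs liveness disabled. The hypothetical helper below encodes that reading to predict which mode a config is expected to use, filling missing `enabled` values with the documented defaults (liveness false, continuous capture false, face detection true). It is our interpretation of this README, not an SDK function.

```typescript
// Minimal local mirror of the FaceConfig fields involved (hypothetical subset).
interface DetectionConfigSubset {
  liveness?: {enabled?: boolean};
  continuousCapture?: {enabled?: boolean};
  faceDetection?: {enabled?: boolean};
}

type DetectionMode = 'liveness' | 'continuousCapture' | 'faceDetection' | 'none';

function expectedDetectionMode(config: DetectionConfigSubset): DetectionMode {
  // Documented defaults: liveness off, continuous capture off, face detection on.
  const liveness = config.liveness?.enabled ?? false;
  const continuous = config.continuousCapture?.enabled ?? false;
  const faceDetection = config.faceDetection?.enabled ?? true;

  // Both other modes require liveness to be disabled.
  if (liveness) {
    return 'liveness';
  }
  // Face detection requires continuous capture to be disabled.
  if (continuous) {
    return 'continuousCapture';
  }
  return faceDetection ? 'faceDetection' : 'none';
}
```

For example, a config that only sets a license key is expected to run the default face detection, while enabling liveness takes precedence over the faceDetection options.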

Liveness detection is a more accurate facial detection mode that supports features beyond face centering and proximity, ideal for those who want more control over facial detection. See FaceLivenessDetectionOptions for the available settings. Note: this feature only works with the front camera. See below how to use it:

const config: FaceConfig = {

licenseKey: 'your-license-key',

liveness: {

enabled: true,

debug: false,

timeToCapture: 3000, // time in milliseconds

maxFaceDetectionTime: 60000, // time in milliseconds

minFaceProp: 0.1,

maxFaceProp: 0.4,

minFaceWidth: 150,

minFaceHeight: 150,

ied: 90,

bboxPad: 20,

faceDetectionThresh: 0.5,

rollThresh: 4.0,

pitchThresh: 4.0,

yawThresh: 4.0,

closedEyesThresh: 0.7,

smilingThresh: 0.7,

tooDarkThresh: 50,

tooLightThresh: 170,

faceCentralizationThresh: 0.05,

},

};
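Several liveness options only make sense relative to each other. The hypothetical check below flags values that contradict the option descriptions in this README: `minFaceProp` above `maxFaceProp`, a `faceDetectionThresh` outside 0 to 1, or a `tooDarkThresh` that is not below `tooLightThresh`. The last rule is our own assumption based on the option names; the SDK does not document such a check.

```typescript
// Hypothetical subset of FaceLivenessDetectionOptions used by this check.
interface LivenessRanges {
  minFaceProp: number;
  maxFaceProp: number;
  faceDetectionThresh: number;
  tooDarkThresh: number;
  tooLightThresh: number;
}

// Returns a list of problems; an empty list means the values look consistent.
function checkLivenessRanges(o: LivenessRanges): string[] {
  const problems: string[] = [];
  if (o.minFaceProp > o.maxFaceProp) {
    problems.push('minFaceProp must not exceed maxFaceProp');
  }
  // The options table states this must be a number between 0 and 1.
  if (o.faceDetectionThresh < 0 || o.faceDetectionThresh > 1) {
    problems.push('faceDetectionThresh must be between 0 and 1');
  }
  // Assumption: the minimum brightness bound should sit below the maximum.
  if (o.tooDarkThresh >= o.tooLightThresh) {
    problems.push('tooDarkThresh should be lower than tooLightThresh');
  }
  return problems;
}
```

The values used in the example above (0.1, 0.4, 0.5, 50 and 170) pass this check with no problems reported.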

You can use continuous capture to capture multiple frames in a single session. You can set the maximum number of frames to capture with maxNumberFrames and the capture time with timeToCapture. Note: facial detection does not work with continuous capture. Enabling continuous capture is as simple as setting another attribute:

const config: FaceConfig = {

licenseKey: 'your-license-key',

liveness: { enabled: false }, // liveness mode must be disabled. This is the default value

continuousCapture: {

enabled: true,

timeToCapture: 1000, // capture time, in milliseconds

maxNumberFrames: 40,

},

};

Extract is a functionality that extracts a template from a given biometric image. The operation returns a FaceExtract object containing the resulting template as a base64 string and a status.

import { useRecognition } from '@biopassid/face-sdk-react-native';

const { extract, startRecognition } = useRecognition();

startRecognition('your-license-key');

const faceExtract = await extract(base64Image);

| Name | Type | Description |
|---|---|---|
| status | number | The resulting status |
| template | string | The resulting template as a base64 string |

Verify is a functionality that compares two biometric templates. The operation returns a FaceVerify object containing the resulting score and whether the templates match.

import { useRecognition } from '@biopassid/face-sdk-react-native';

const { startRecognition, verify } = useRecognition();

startRecognition('your-license-key');

const faceVerify = await verify(base64TemplateA, base64TemplateB);

| Name | Type | Description |
|---|---|---|
| isGenuine | boolean | Indicates whether the informed biometric templates are correspondent or not |
| score | number | Indicates the level of similarity between the two given biometrics. Its value may vary from 0 to 100 |
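The SQLite example earlier loops over the stored templates and keeps every genuine match. If you only need the single closest match, a hypothetical helper like the one below can rank FaceVerify results by score. `Candidate` and `bestMatch` are illustrative names, and the local `FaceVerify` interface only mirrors the table above.

```typescript
// Local mirror of the documented FaceVerify shape.
interface FaceVerify {
  isGenuine: boolean;
  score: number; // 0 to 100
}

// Pairs any stored item (for example a Template row) with its verify result.
interface Candidate<T> {
  item: T;
  result: FaceVerify;
}

// Hypothetical helper: keeps only genuine matches and returns the one with the
// highest score, or undefined when nothing matched. The input is not mutated.
function bestMatch<T>(candidates: Candidate<T>[]): Candidate<T> | undefined {
  return candidates
    .filter(c => c.result.isGenuine)
    .sort((a, b) => b.result.score - a.result.score)[0];
}
```

The SQLite example guards the result with `faceVerify?.isGenuine`, so when collecting candidates in that loop, add one only when verify actually returned a result.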

You can also use pre-built configurations in your application, so you can automatically start using multiple services and features that better suit your application. You can instantiate each one and use its default properties or, if you prefer, change any of the available configs. Here are the types that are supported right now:

| Name | Type |
|---|---|
| licenseKey | string |
| resolutionPreset | FaceResolutionPreset |
| lensDirection | FaceCameraLensDirection |
| imageFormat | FaceImageFormat |
| flashEnabled | boolean |
| fontFamily | string |
| liveness | FaceLivenessDetectionOptions |
| continuousCapture | FaceContinuousCaptureOptions |
| faceDetection | FaceDetectionOptions |
| mask | FaceMaskOptions |
| titleText | FaceTextOptions |
| loadingText | FaceTextOptions |
| helpText | FaceTextOptions |
| feedbackText | FaceFeedbackTextOptions |
| backButton | FaceButtonOptions |
| flashButton | FaceFlashButtonOptions |
| switchCameraButton | FaceButtonOptions |
| captureButton | FaceButtonOptions |

Default configs:

const defaultConfig: FaceConfig = {

licenseKey: '',

resolutionPreset: FaceResolutionPreset.VERYHIGH,

lensDirection: FaceCameraLensDirection.FRONT,

imageFormat: FaceImageFormat.JPEG,

flashEnabled: false,

fontFamily: 'facesdk_opensans_bold',

liveness: {

enabled: false,

debug: false,

timeToCapture: 3000,

maxFaceDetectionTime: 60000,

minFaceProp: 0.1,

maxFaceProp: 0.4,

minFaceWidth: 150,

minFaceHeight: 150,

ied: 90,

bboxPad: 20,

faceDetectionThresh: 0.5,

rollThresh: 4.0,

pitchThresh: 4.0,

yawThresh: 4.0,

closedEyesThresh: 0.7,

smilingThresh: 0.7,

tooDarkThresh: 50,

tooLightThresh: 170,

faceCentralizationThresh: 0.05,

},

continuousCapture: {

enabled: false,

timeToCapture: 1000,

maxNumberFrames: 40,

},

faceDetection: {

enabled: true,

autoCapture: true,

multipleFacesEnabled: false,

timeToCapture: 3000,

maxFaceDetectionTime: 40000,

scoreThreshold: 0.7,

},

mask: {

enabled: true,

type: FaceMaskFormat.FACE,

backgroundColor: '#CC000000',

frameColor: '#FFFFFF',

frameEnabledColor: '#16AC81',

frameErrorColor: '#E25353',

},

titleText: {

enabled: true,

content: 'Capturing Face',

textColor: '#FFFFFF',

textSize: 20,

},

loadingText: {

enabled: true,

content: 'Processing...',

textColor: '#FFFFFF',

textSize: 14,

},

helpText: {

enabled: true,

content: 'Fit your face into the shape below',

textColor: '#FFFFFF',

textSize: 14,

},

feedbackText: {

enabled: true,

messages: {

noDetection: 'No faces detected',

multipleFaces: 'Multiple faces detected',

faceCentered: 'Face centered. Do not move',

tooClose: 'Turn your face away',

tooFar: 'Bring your face closer',

tooLeft: 'Move your face to the right',

tooRight: 'Move your face to the left',

tooUp: 'Move your face down',

tooDown: 'Move your face up',

invalidIED: 'Invalid inter-eye distance',

faceAngleMisaligned: 'Misaligned face angle',

closedEyes: 'Open your eyes',

smiling: 'Do not smile',

tooDark: 'Too dark',

tooLight: 'Too light',

},

textColor: '#FFFFFF',

textSize: 14,

},

backButton: {

enabled: true,

backgroundColor: '#00000000',

buttonPadding: 0,

buttonSize: { width: 56, height: 56 },

iconOptions: {

enabled: true,

iconFile: 'facesdk_ic_close',

iconColor: '#FFFFFF',

iconSize: { width: 32, height: 32 },

},

labelOptions: {

enabled: false,

content: 'Back',

textColor: '#FFFFFF',

textSize: 14,

},

},

flashButton: {

enabled: false,

backgroundColor: '#FFFFFF',

buttonPadding: 0,

buttonSize: { width: 56, height: 56 },

flashOnIconOptions: {

enabled: true,

iconFile: 'facesdk_ic_flash_on',

iconColor: '#FFCC01',

iconSize: { width: 32, height: 32 },

},

flashOnLabelOptions: {

enabled: false,

content: 'Flash On',

textColor: '#323232',

textSize: 14,

},

flashOffIconOptions: {

enabled: true,

iconFile: 'facesdk_ic_flash_off',

iconColor: '#323232',

iconSize: { width: 32, height: 32 },

},

flashOffLabelOptions: {

enabled: false,

content: 'Flash Off',

textColor: '#323232',

textSize: 14,

},

},

switchCameraButton: {

enabled: true,

backgroundColor: '#FFFFFF',

buttonPadding: 0,

buttonSize: { width: 56, height: 56 },

iconOptions: {

enabled: true,

iconFile: 'facesdk_ic_switch_camera',

iconColor: '#323232',

iconSize: { width: 32, height: 32 },

},

labelOptions: {

enabled: false,

content: 'Switch Camera',

textColor: '#323232',

textSize: 14,

},

},

captureButton: {

enabled: true,

backgroundColor: '#FFFFFF',

buttonPadding: 0,

buttonSize: { width: 56, height: 56 },

iconOptions: {

enabled: true,

iconFile: 'facesdk_ic_capture',

iconColor: '#323232',

iconSize: { width: 32, height: 32 },

},

labelOptions: {

enabled: false,

content: 'Capture',

textColor: '#323232',

textSize: 14,

},

},

};

| Name | Type |
|---|---|
| enabled | boolean |
| timeToCapture | number // time in milliseconds |
| maxNumberFrames | number |

| Name | Type | Description |
|---|---|---|
| enabled | boolean | Activates facial detection |
| autoCapture | boolean | Activates automatic capture |
| multipleFacesEnabled | boolean | Allows the capture of photos with two or more faces |
| timeToCapture | number | Time it takes to perform an automatic capture, in milliseconds |
| maxFaceDetectionTime | number | Maximum facial detection attempt time, in milliseconds |
| scoreThreshold | number | Minimum trust score for a detection to be considered valid. Must be a number between 0 and 1, where 0.1 would be a lower face detection trust level and 0.9 would be a higher trust level |

| Name | Type | Description |
|---|---|---|
| enabled | boolean | Activates liveness mode |
| debug | boolean | If activated, a red rectangle will be drawn around the detected faces; in addition, the feedback message will show which attribute caused an invalid face |
| timeToCapture | number | Time it takes to perform an automatic capture, in milliseconds |
| maxFaceDetectionTime | number | Maximum facial detection attempt time, in milliseconds |
| minFaceProp | number | Minimum limit on the proportion of the area occupied by the face in the image, in percentage |
| maxFaceProp | number | Maximum limit on the proportion of the area occupied by the face in the image, in percentage |
| minFaceWidth | number | Minimum face width, in pixels |
| minFaceHeight | number | Minimum face height, in pixels |
| ied | number | Minimum distance between left eye and right eye, in pixels |
| bboxPad | number | Padding between the face's bounding box and the edges of the image, in pixels |
| faceDetectionThresh | number | Minimum trust score for a detection to be considered valid. Must be a number between 0 and 1, where 0.1 would be a lower face detection trust level and 0.9 would be a higher trust level |
| rollThresh | number | Threshold for the roll angle (Euler angle Z) of the head, the rotation of the face about the axis pointing out of the image. A positive z Euler angle is a counter-clockwise rotation within the image plane |
| pitchThresh | number | Threshold for the pitch angle (Euler angle X) of the head, the rotation of the face about the horizontal axis of the image. A positive x Euler angle means the face is turned upward in the image being processed |
| yawThresh | number | Threshold for the yaw angle (Euler angle Y) of the head, the rotation of the face about the vertical axis of the image. A positive y Euler angle means the face is turned toward the right side of the image being processed |
| closedEyesThresh // Android Only | number | Minimum probability threshold that the left eye and right eye of the face are closed, in percentage. A value less than 0.7 indicates that the eyes are likely closed |
| smilingThresh // Android Only | number | Minimum threshold for the probability that the face is smiling, in percentage. A value of 0.7 or more indicates that a person is likely to be smiling |
| tooDarkThresh | number | Minimum threshold for the average intensity of the pixels in the image |
| tooLightThresh | number | Maximum threshold for the average intensity of the pixels in the image |
| faceCentralizationThresh | number | Threshold to consider the face centered, in percentage |

| Name | Type |
|---|---|
| enabled | boolean |
| type | FaceMaskFormat |
| backgroundColor | string |
| frameColor | string |
| frameEnabledColor | string |
| frameErrorColor | string |

| Name | Type |
|---|---|
| enabled | boolean |
| messages | FaceFeedbackTextMessages |
| textColor | string |
| textSize | number |

| Name | Type |
|---|---|
| noDetection | string |
| multipleFaces | string |
| faceCentered | string |
| tooClose | string |
| tooFar | string |
| tooLeft | string |
| tooRight | string |
| tooUp | string |
| tooDown | string |
| invalidIED | string |
| faceAngleMisaligned | string |
| closedEyes // Android Only | string |
| smiling // Android Only | string |
| tooDark | string |
| tooLight | string |

| Name | Type |
|---|---|
| enabled | boolean |
| backgroundColor | string |
| buttonPadding | number |
| buttonSize | FaceSize |
| flashOnIconOptions | FaceIconOptions |
| flashOnLabelOptions | FaceTextOptions |
| flashOffIconOptions | FaceIconOptions |
| flashOffLabelOptions | FaceTextOptions |

| Name | Type |
|---|---|
| enabled | boolean |
| backgroundColor | string |
| buttonPadding | number |
| buttonSize | FaceSize |
| iconOptions | FaceIconOptions |
| labelOptions | FaceTextOptions |

| Name | Type |
|---|---|
| enabled | boolean |
| iconFile | string |
| iconColor | string |
| iconSize | FaceSize |

| Name | Type |
|---|---|
| enabled | boolean |
| content | string |
| textColor | string |
| textSize | number |

| Name |
|---|
| FaceCameraLensDirection.FRONT |
| FaceCameraLensDirection.BACK |

| Name |
|---|
| FaceImageFormat.JPEG |
| FaceImageFormat.PNG |

| Name |
|---|
| FaceMaskFormat.FACE |
| FaceMaskFormat.SQUARE |
| FaceMaskFormat.ELLIPSE |

| Name | Resolution |
|---|---|
| FaceResolutionPreset.LOW | 240p (352x288 on iOS, 320x240 on Android) |
| FaceResolutionPreset.MEDIUM | 480p (640x480 on iOS, 720x480 on Android) |
| FaceResolutionPreset.HIGH | 720p (1280x720) |
| FaceResolutionPreset.VERYHIGH | 1080p (1920x1080) |
| FaceResolutionPreset.ULTRAHIGH | 2160p (3840x2160) |
| FaceResolutionPreset.MAX | The highest resolution available |

You can use the default font family or set your own. To set a font, create a folder named font inside android/app/src/main/res. Download the font you want and paste it inside the font folder. All font file names must contain only lowercase a-z, 0-9, or underscore. The structure should be something like below.
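The naming rule can be checked before copying files into the project. This sketch applies only the rule stated above, to the file name without its extension (as Android does for resource files); `resourceBaseName` and `isValidResourceName` are hypothetical helpers, and the same rule applies to the icon files described further down.

```typescript
// Allowed characters per the rule above: lowercase a-z, 0-9 and underscore.
const RESOURCE_NAME = /^[a-z0-9_]+$/;

// Strips the extension, e.g. "roboto_mono_bold_italic.ttf" becomes
// "roboto_mono_bold_italic". Names without a dot are returned unchanged.
function resourceBaseName(fileName: string): string {
  const dot = fileName.lastIndexOf('.');
  return dot > 0 ? fileName.slice(0, dot) : fileName;
}

// Hypothetical validator: checks only the character rule, nothing else.
function isValidResourceName(fileName: string): boolean {
  return RESOURCE_NAME.test(resourceBaseName(fileName));
}
```

The base name is also the value you later pass as fontFamily (or iconFile), so `resourceBaseName('roboto_mono_bold_italic.ttf')` gives the string used in FaceConfig.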

To add the font files to your Xcode project:

Then, add the "Fonts provided by application" key to your app’s Info.plist file. For the key’s value, provide an array of strings containing the relative paths to any added font files.

In the following example, the font file is inside the fonts directory, so you use fonts/roboto_mono_bold_italic.ttf as the string value in the Info.plist file.

Finally, just set the font passing the name of the font file when instantiating FaceConfig in your React Native app.

const config: FaceConfig = {

licenseKey: "your-license-key",

fontFamily: "roboto_mono_bold_italic",

};

You can use the default icons or define your own. To set an icon, download the icon you want and paste it inside the drawable folder in android/app/src/main/res. All icon file names must contain only lowercase a-z, 0-9, or underscore. The structure should be something like below.


To add icon files to your Xcode project:


Finally, just set the icon passing the name of the icon file when instantiating FaceConfig in your React Native app.

const config: FaceConfig = {

licenseKey: "your-license-key",

// Changing back button icon

backButton: { iconOptions: { iconFile: "ic_baseline_camera" } },

// Changing switch camera button icon

switchCameraButton: { iconOptions: { iconFile: "ic_baseline_camera" } },

// Changing capture button icon

captureButton: { iconOptions: { iconFile: "ic_baseline_camera" } },

// Changing flash button icon

flashButton: {

flashOnIconOptions: { iconFile: 'ic_baseline_camera' },

flashOffIconOptions: { iconFile: 'ic_baseline_camera' },

},

};

Do you like the Face SDK and would you like to know about our other products? We have solutions for fingerprint detection and digital signature capture.

// Before

const config: FaceConfig = {

licenseKey: 'your-license-key',

feedbackText: {

messages: {

noFaceDetectedMessage: 'No faces detected',

multipleFacesDetectedMessage: 'Multiple faces detected',

detectedFaceIsCenteredMessage: 'Face centered. Do not move',

detectedFaceIsTooCloseMessage: 'Turn your face away',

detectedFaceIsTooFarMessage: 'Bring your face closer',

detectedFaceIsOnTheLeftMessage: 'Move your face to the right',

detectedFaceIsOnTheRightMessage: 'Move your face to the left',

detectedFaceIsTooUpMessage: 'Move your face down',

detectedFaceIsTooDownMessage: 'Move your face up',

},

},

};

// Now

const config: FaceConfig = {

licenseKey: 'your-license-key',

feedbackText: {

messages: {

noDetection: 'No faces detected',

multipleFaces: 'Multiple faces detected',

faceCentered: 'Face centered. Do not move',

tooClose: 'Turn your face away',

tooFar: 'Bring your face closer',

tooLeft: 'Move your face to the right',

tooRight: 'Move your face to the left',

tooUp: 'Move your face down',

tooDown: 'Move your face up',

},

},

};
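If you have many configs written against the old message keys, the rename shown in the Before and Now examples can be applied mechanically. The mapping below is taken directly from those two examples; `migrateFeedbackMessages` is a hypothetical helper, and keys that are not in the mapping pass through unchanged.

```typescript
// Old key -> new key, copied from the "Before" and "Now" examples above.
const FEEDBACK_KEY_RENAMES = new Map<string, string>([
  ['noFaceDetectedMessage', 'noDetection'],
  ['multipleFacesDetectedMessage', 'multipleFaces'],
  ['detectedFaceIsCenteredMessage', 'faceCentered'],
  ['detectedFaceIsTooCloseMessage', 'tooClose'],
  ['detectedFaceIsTooFarMessage', 'tooFar'],
  ['detectedFaceIsOnTheLeftMessage', 'tooLeft'],
  ['detectedFaceIsOnTheRightMessage', 'tooRight'],
  ['detectedFaceIsTooUpMessage', 'tooUp'],
  ['detectedFaceIsTooDownMessage', 'tooDown'],
]);

// Hypothetical helper: renames old keys and keeps every other key as-is.
function migrateFeedbackMessages(
  messages: Record<string, string>,
): Record<string, string> {
  const migrated: Record<string, string> = {};
  for (const [key, value] of Object.entries(messages)) {
    migrated[FEEDBACK_KEY_RENAMES.get(key) ?? key] = value;
  }
  return migrated;
}
```

Using a Map rather than a plain object for the lookup avoids accidentally matching inherited properties such as `constructor`.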

FAQs

BioPass ID Face React Native module.

We found that @biopassid/face-sdk-react-native demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 6 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

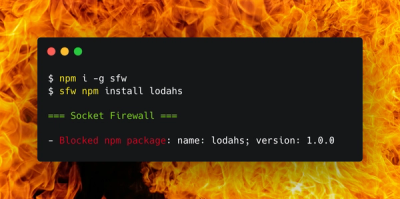

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.

Research

Socket uncovers malicious Rust crates impersonating fast_log to steal Solana and Ethereum wallet keys from source code.