@vivocha/bot-sdk

JavaScript / TypeScript SDK to create Bot Agents and Filters for the Vivocha platform

This SDK lets you write Vivocha Bot Agents that integrate existing bots, built and trained using your preferred bot / NLP platform, e.g., Dialogflow, IBM Watson Assistant (formerly Conversation), Wit.ai, etc. By creating a BotManager it is possible to register multi-platform bot implementations and let Vivocha communicate with them through a well-defined and uniform message-based API.

The Vivocha platform provides out-of-the-box support for chat bots built using the IBM Watson Assistant (formerly Conversation) and Dialogflow platforms. This means that these particular bot implementations can be integrated with Vivocha simply by using the Vivocha configuration app to specify a few settings, such as authentication tokens, and by following some very simple, mandatory guidelines when designing the bot. The first sections of this documentation focus on building custom Bot Agents using the Bot SDK, which makes it easy to integrate them with the Vivocha system and also provides a library to write bots using the Wit.ai NLP platform.

The last three sections of this guide are dedicated to the integration guidelines for bots built with the three supported platforms: IBM Watson Assistant (formerly Conversation), Dialogflow and Wit.ai.

The following picture shows a high-level overview of the Vivocha Bot SDK and its software components.

FIGURE 1 - Overview of the main modules of the Bot SDK

The examples folder contains some samples of Bot Managers, a Wit.ai Bot implementation and a Filter, along with some related HTTP requests to show how to call their APIs.

See:

- sample: dead simple bot Agent and Manager plus a Bot Filter; read and use the examples/http-requests/sample.http file to learn more and to run them;
- dummy-bot: a simple bot (Agent and Manager) able to understand some simple "commands" to return several types of messages, including quick replies and templates. Read and use the examples/http-requests/dummy-bot.http file to learn more and to run and interact with them.
- sample-wit: a simple bot using the Wit.ai platform.

TIP: For a quick start learning about the format of requests, responses and messages body, including quick replies and templates, just read the Dummy Bot code.

A BotAgent represents and communicates with a particular Bot implementation platform.

A BotManager exposes a Web API acting as a gateway to registered BotAgents.

Usually, the steps to use agents and managers are:

1. write a BotAgent for every Bot/NLP platform you need to support, handling / wrapping / transforming messages of type BotRequest and BotResponse;
2. create a BotAgentManager instance;
3. register the BotAgents defined in step 1) to the BotAgentManager, through the registerAgent(key, botAgent) method, where key (string) is the chosen bot engine (e.g., Dialogflow, Watson, ...) and botAgent is a BotAgent instance;
4. run the BotAgentManager service through its listen() method; it exposes a Web API;
5. send requests to the Web API: each one is forwarded to the right BotAgent thanks to the engine.type message property, used as key in step 3). The API is fully described by its Swagger specification, available at http://<BotAgentManager-Host>:<port>/swagger.json.

A BotFilter is a Web service to filter/manipulate/enrich/transform BotRequests and/or BotResponses.

For example, a BotFilter can enrich a request calling an external API to get additional data before sending it to a BotAgent, or it can filter a response coming from a BotAgent to transform data before forwarding it to the user chat.

Basically, to write a filter you have to:

1. create a BotFilter specifying a BotRequestFilter or a BotResponseFilter. These are the functions containing your logic to manipulate/filter/enrich requests to bots and responses from them. Inside them you can, for example, call external web services, access DBs, transform data and do whatever you need to achieve your application-specific goal. A BotFilter can provide a filter only for requests, only for responses, or for both;
2. run the BotFilter service through its listen() method; it exposes a Web API. The API is fully described by its Swagger specification, available at http://<BotFilter-Host>:<port>/swagger.json.

A BotAgent represents an abstract Bot implementation and it directly communicates with a particular Bot / NLP platform (like Dialogflow, IBM Watson Assistant, formerly Conversation, and so on).

In the Vivocha model, a Bot is represented by a function with the following signature:

In TypeScript:

```typescript
(request: BotRequest): Promise<BotResponse>
```

In JavaScript:

```javascript
let botAgent = async (request) => {
  // the logic to interact with the particular bot implementation
  // goes here, then produce a BotResponse message...
  ...
  return response;
}
```
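
As a minimal sketch of the signature above, the following TypeScript agent echoes the user's text back. The BotRequest and BotResponse interfaces are simplified local stand-ins written for this example (only a few of the documented properties), not the SDK's own type definitions, and echoAgent is a hypothetical name.

```typescript
// Simplified local stand-ins for the SDK message types (assumption: only
// the few properties used by this example are modelled here).
interface BotMessage {
  code: "message";
  type: "text" | "postback";
  body: string;
}

interface BotRequest {
  event: string;
  message?: BotMessage;
}

interface BotResponse {
  event: "continue" | "end";
  messages?: BotMessage[];
}

// A hypothetical agent: greets on "start", closes on "end", echoes otherwise.
const echoAgent = async (request: BotRequest): Promise<BotResponse> => {
  if (request.event === "end") {
    return { event: "end" };
  }
  const body =
    request.event === "start" || !request.message
      ? "Hello, how can I help you?"
      : `You said: ${request.message.body}`;
  return {
    event: "continue",
    messages: [{ code: "message", type: "text", body }],
  };
};
```

A real agent would replace the echo logic with calls to the chosen Bot / NLP platform and map its reply to a BotResponse.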

Requests are sent to BotAgents, BotManagers and BotFilters. A BotRequest is a JSON object with the following properties (required properties in bold):

| PROPERTY | VALUE | DESCRIPTION |
|---|---|---|
| **event** | string: start or continue or end or a custom string | start event is sent to wake up the Bot; continue tells the Bot to continue the conversation; end sets the conversation as finished; a custom string can be set for specific custom internal Bot functionalities. |
| message | (optional) object, see BotMessage below | the message to send to the BotAgent |
| language | (optional) string. E.g., en, it, ... | language string, mandatory for some Bot platforms. |
| data | (optional) object | an object containing data to send to the Bot. Its properties must be of basic type. E.g., {"firstname":"Antonio", "lastname": "Smith", "code": 12345} |
| context | (optional) object | Opaque, Bot specific context data |
| tempContext | (optional) object | Temporary context, useful to store volatile data; e.g., in bot filters chains. |
| settings | (optional) BotSettings object (see below) | Bot platform settings. |

A BotMessage has the following properties:

| PROPERTY | VALUE | DESCRIPTION |
|---|---|---|
| **code** | string, value is always message | Vivocha code type for Bot messages. |
| **type** | string: text or postback | Vivocha Bot message type. |
| **body** | string | the message text body. |
| quick_replies | (optional) only in case of type === text messages, an array of MessageQuickReply objects (see below) | an array of quick replies |
| template | (optional) only in case of type === text messages, an object with a required type string property and an optional elements object array property | a template object |

Bot platform settings object (BotSettings). Along with the engine property (see the table below), it is possible to set an arbitrary number of properties. In that case, it is the responsibility of the specific Bot implementation / platform to handle them.

| PROPERTY | VALUE | DESCRIPTION |
|---|---|---|
| engine | (optional) BotEngineSettings object (see below) | Specific Bot/NLP Platform settings. |

A BotEngineSettings object has the following properties:

| PROPERTY | VALUE | DESCRIPTION |
|---|---|---|
| **type** | string | Unique bot engine identifier, i.e., the platform name, like: Watson, Dialogflow, WitAi, ... |
| settings | (optional) object | Specific settings to send to the Bot/NLP platform. E.g., for Watson Assistant (formerly Conversation) it is an object like {"workspaceId": "<id>", "username": "<usrname>", "password": "<passwd>"}; for a Dialogflow bot it is something like: {"token": "<token>", "startEvent": "MyCustomStartEvent"}, and so on. Refer to the documentation of the specific Bot Platform used. |

A MessageQuickReply has the following properties:

| PROPERTY | VALUE | DESCRIPTION |
|---|---|---|
| **content_type** | string, accepted values: text or location | Type of the content of the Quick Reply |
| title | (optional) string | title of the message |
| payload | (optional) a string or a number | string or number related to the content_type property value |
| image_url | (optional) string | a URL of an image |

Example of a request sent to provide the name in a conversation with a Wit.ai based Bot.

```json
{
  "language": "en",
  "event": "continue",
  "message": {
    "code": "message",
    "type": "text",
    "body": "my name is Antonio Watson"
  },
  "settings": {
    "engine": {
      "type": "WitAi",
      "settings": {
        "token": "abcd-123"
      }
    }
  },
  "context": {
    "contexts": [
      "ask_for_name"
    ]
  }
}
```
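
The request properties described above can be summarized as TypeScript types. This is a sketch transcribed from the tables in this document, not the SDK's actual declarations; isBotRequest is a hypothetical helper that only checks the required properties of a parsed JSON body.

```typescript
// Types transcribed from the property tables in this document (assumption:
// the SDK's real declarations may differ in detail).
interface MessageQuickReply {
  content_type: "text" | "location";
  title?: string;
  payload?: string | number;
  image_url?: string;
}

interface BotMessage {
  code: "message";
  type: "text" | "postback";
  body: string;
  quick_replies?: MessageQuickReply[];
  template?: { type: string; elements?: object[] };
}

interface BotEngineSettings {
  type: string;
  settings?: object;
}

interface BotSettings {
  engine?: BotEngineSettings;
  [key: string]: unknown; // arbitrary extra, platform-specific properties
}

interface BotRequest {
  event: string;
  message?: BotMessage;
  language?: string;
  data?: Record<string, unknown>;
  context?: object;
  tempContext?: object;
  settings?: BotSettings;
}

// Hypothetical helper: checks only the required parts of a parsed JSON body.
function isBotRequest(value: unknown): value is BotRequest {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as { event?: unknown; message?: unknown };
  if (typeof candidate.event !== "string") return false;
  if (candidate.message === undefined) return true;
  const msg = candidate.message as Partial<BotMessage> | null;
  return (
    typeof msg === "object" &&
    msg !== null &&
    msg.code === "message" &&
    (msg.type === "text" || msg.type === "postback") &&
    typeof msg.body === "string"
  );
}
```

A check like this can be useful at the boundary of a custom agent or filter, before trusting an incoming payload.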

Responses are sent back by BotAgents, BotManagers and BotFilters to convey a Bot platform reply back to the Vivocha platform.

A BotResponse is a JSON object with the following properties; it is similar to a BotRequest, except for some fields (required properties in bold):

| PROPERTY | VALUE | DESCRIPTION |
|---|---|---|
| **event** | string: continue or end | continue is sent back to Vivocha to continue the conversation; in other words, the bot is waiting for the next user message. end is sent back to signal that the Bot has finished its task. |
| messages | (optional) an array of BotMessage objects (same as BotRequest) | the messages sent back by the BotAgent |
| language | (optional) string. E.g., en, it, ... | language string code |
| data | (optional) object | an object containing data collected or computed by the Bot. Its properties must be of simple type. E.g., {"firstname":"Antonio", "lastname": "Smith", "code": 12345, "availableAgents": 5} |
| context | (optional) object | Opaque, Bot specific context data. The Vivocha platform will send it unchanged to the Bot in the next iteration. |
| tempContext | (optional) object | Temporary context, useful to store volatile data, e.g., in bot filters chains |
| settings | (optional) BotSettings object | Bot platform settings |
| raw | (optional) object | raw, platform specific, unparsed bot response. |

An example of response sent back by a Wit.ai based Bot. It is related to the request in the BotRequest sample above in this document.

```json
{
  "event": "continue",
  "messages": [
    {
      "code": "message",
      "type": "text",
      "body": "Thank you Antonio Watson, do you prefer to be contacted by email or by phone?"
    }
  ],
  "data": {
    "name": "Antonio Watson"
  },
  "settings": {
    "engine": {
      "type": "WitAi",
      "settings": {
        "token": "abcd-123"
      }
    }
  },
  "context": {
    "contexts": [
      "recontact_by_email_or_phone"
    ]
  },
  "raw": {
    "_text": "my name is Antonio Watson",
    "entities": {
      "contact": [
        {
          "suggested": true,
          "confidence": 0.9381,
          "value": "Antonio Watson",
          "type": "value"
        }
      ],
      "intent": [
        {
          "confidence": 0.9950627479,
          "value": "provide_name"
        }
      ]
    },
    "msg_id": "0ZUymTwNbUPLh6xp6"
  }
}
```
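
Since the context of a response is meant to travel back unchanged with the next request, the round trip can be sketched as follows. The types are simplified local stand-ins and nextRequest is a hypothetical helper, shown only to illustrate how a caller might chain a response into the following request.

```typescript
// Minimal local types (assumption: simplified from the tables above).
interface BotMessage {
  code: "message";
  type: "text" | "postback";
  body: string;
}

interface BotRequest {
  event: string;
  message?: BotMessage;
  language?: string;
  context?: object;
  settings?: object;
}

interface BotResponse {
  event: "continue" | "end";
  messages?: BotMessage[];
  language?: string;
  context?: object;
  settings?: object;
}

// Hypothetical helper: builds the next request of a conversation from the
// previous response, or returns null when the bot has ended it.
function nextRequest(previous: BotResponse, userText: string): BotRequest | null {
  if (previous.event === "end") return null;
  return {
    event: "continue",
    message: { code: "message", type: "text", body: userText },
    language: previous.language,
    context: previous.context, // passed back untouched
    settings: previous.settings,
  };
}
```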

A BotManager is a bot registry microservice, which basically provides two main functionalities:

- it registers BotAgents;
- it exposes a Web API to exchange messages with the registered BotAgents, acting as a gateway using a normalized interface.

In the code contained in the examples directory it is possible to read in detail how to create and register Bot Agents.

Briefly, to register a BotAgent, BotManager provides a registerAgent() method:

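```typescript
// Assumption: these names are exported by the @vivocha/bot-sdk package.
import { BotAgentManager, BotRequest, BotResponse } from '@vivocha/bot-sdk';

const manager = new BotAgentManager();
manager.registerAgent('custom', async (msg: BotRequest): Promise<BotResponse> => {
  // Bot Agent application logic goes here
  // I.e., call the specific Bot implementation APIs (e.g., Watson, Dialogflow, etc...)
  // adapting requests and responses.
  ...
});
```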

The BotManager allows registering several BotAgents by specifying different type parameters (the first parameter of the registerAgent() method, e.g., Watson, Dialogflow, WitAi, custom, etc.).

In this way it is possible to have a multi-bot application instance: the BotManager will forward the requests to the correct registered bot, matching the registered BotAgent type with the settings.engine.type property in incoming BotRequests.
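
The matching rule can be illustrated with a small, self-contained registry. AgentRegistry below is a hypothetical class written for this example, not the SDK's BotAgentManager, and the types are simplified stand-ins; it only shows how a request could be routed by its settings.engine.type value.

```typescript
// Minimal local types (assumption: simplified stand-ins for the SDK types).
interface BotRequest {
  event: string;
  settings?: { engine?: { type: string } };
}

interface BotResponse {
  event: "continue" | "end";
}

type BotAgent = (request: BotRequest) => Promise<BotResponse>;

// Hypothetical registry illustrating the dispatch rule, not BotAgentManager.
class AgentRegistry {
  private agents = new Map<string, BotAgent>();

  registerAgent(key: string, agent: BotAgent): void {
    this.agents.set(key, agent);
  }

  // Forwards the request to the agent registered under settings.engine.type.
  async dispatch(request: BotRequest): Promise<BotResponse> {
    const type = request.settings?.engine?.type;
    const agent = type === undefined ? undefined : this.agents.get(type);
    if (!agent) {
      throw new Error(`No agent registered for engine type: ${String(type)}`);
    }
    return agent(request);
  }
}

const registry = new AgentRegistry();
registry.registerAgent("custom", async () => ({ event: "continue" }));
registry.registerAgent("WitAi", async () => ({ event: "end" }));
```

In this sketch a request with no engine type, or with an unregistered one, is rejected with an error; how the real manager reports that case is not covered here.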

The BotManager listen() method starts a Web server microservice, exposing the following API endpoint:

POST /bot/message - Sends a BotRequest and replies with a BotResponse.

After launching a BotManager service, the detailed info, and a Swagger based API description, are always available at URL:

http(s)://<Your-BotAgentManager-Host>:<port>/swagger.json
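
As a rough illustration of calling the endpoint above, the helper below only describes the HTTP call rather than sending it. buildBotMessageCall and the localhost URL are hypothetical, and the JSON content type is an assumption; the Swagger description of a running service remains the reference for the exact contract.

```typescript
interface BotRequest {
  event: string;
  language?: string;
}

interface HttpCall {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical helper: describes the HTTP call for POST /bot/message.
// Assumption: the endpoint accepts a JSON body with a JSON content type.
function buildBotMessageCall(baseUrl: string, request: BotRequest): HttpCall {
  return {
    url: `${baseUrl.replace(/\/+$/, "")}/bot/message`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(request),
  };
}

const call = buildBotMessageCall("http://localhost:8080/", { event: "start", language: "en" });
// With a fetch-capable runtime the call could then be sent as:
//   const response = await fetch(call.url, call);
//   const botResponse = await response.json();
```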

BotFilters are Web (micro)services to augment, adapt or transform BotRequests before they reach a Bot, and/or to augment, adapt or transform BotResponses coming from a Bot before returning them to the Vivocha platform. It is also possible to chain several BotFilters in order to have specialized filters related to the application domain.

The next picture shows an example of a BotFilters chain:

FIGURE 2 - An example of a BotFilters chain configured using Vivocha

The same BotFilter instance can act as a filter for requests, as a filter for responses or both.

See BotFilter class constructor to configure it as you prefer.

Figure 2 shows an example of a BotFilter chain: BotFilters A, B and C are configured to act as request filters; in other words, they receive a BotRequest and return the same BotRequest, possibly augmented with more data or transformed as a particular application requires. For example, BotFilter A may add data after reading from a DB, BotFilter B may call an API or external service to see if a given user has a premium account (consequently setting an isPremium boolean property in the request), and so on.

When it's time to send a request to a BotAgent (through a BotManager), the Vivocha platform will sequentially call all the filters in the request chain before forwarding the resulting request to the Bot.

BotFilter D is a response filter; notice that BotFilter A is also configured to be a response filter. Thus, when a response comes from the Bot, Vivocha sequentially calls all the response BotFilters in the response chain before sending the resulting response back to the chat. For example, a response BotFilter can hide or encrypt data coming from a Bot, convert currencies on the fly, format dates, or call external services and APIs to get useful additional data to send back to users.

As an example, refer to the examples/sample.ts(.js) files, where a simple, runnable BotFilter is defined.
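
The sequential behavior of a request chain can be sketched in a few lines. In the real setup each filter is a separate Web service called by the Vivocha platform; here, as an assumption made for illustration, filters are plain local functions, and addCustomerData, addPremiumFlag and applyRequestChain are hypothetical names.

```typescript
interface BotRequest {
  event: string;
  data?: Record<string, unknown>;
}

// Local stand-in for a request filter function (assumption: simplified shape).
type BotRequestFilter = (request: BotRequest) => Promise<BotRequest>;

// Hypothetical filter A: adds data as if it had been read from a DB.
const addCustomerData: BotRequestFilter = async (request) => ({
  ...request,
  data: { ...request.data, firstname: "Antonio" },
});

// Hypothetical filter B: flags premium users (the lookup is faked here).
const addPremiumFlag: BotRequestFilter = async (request) => ({
  ...request,
  data: { ...request.data, isPremium: request.data?.firstname === "Antonio" },
});

// Calls the filters one after the other, feeding each one the previous result.
async function applyRequestChain(
  filters: BotRequestFilter[],
  request: BotRequest
): Promise<BotRequest> {
  let current = request;
  for (const filter of filters) {
    current = await filter(current);
  }
  return current;
}
```

Order matters in this sketch: addPremiumFlag relies on the data added by addCustomerData, which is why the chain is applied sequentially rather than in parallel.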

The BotFilter listen() method runs a Web server microservice, exposing the following API endpoints:

POST /filter/request - For a request BotFilter, it receives a BotRequest and returns a BotRequest.

POST /filter/response - For a response BotFilter, it receives a BotResponse and returns a BotResponse.

After launching a BotFilter service, the detailed info, and a Swagger based API description, are always available at URL:

http(s)://<Your-BotFilter-Host>:<port>/swagger.json

The next sections briefly provide some guidelines to integrate Bots built with the three supported platforms, using the default drivers / settings.

N.B.: Vivocha can be integrated with any Bot Platform; if you're using a platform other than the supported ones, you need to write a driver and a BotManager to use BotRequest / BotResponse messages and communicate with the particular, chosen Bot Platform.

The Dialogflow Bot Platform allows the creation of conversation flows using its Intents feature. Feel free to build your conversation flow as you prefer, according to the specific Bot application domain, BUT, in order to work properly with Vivocha and take advantage of its out-of-the-box support, it is mandatory to follow some guidelines:

An intent configured to be triggered by a start event must exist in Dialogflow. The start event name configured in the Dialogflow intent must exactly match the start event configured in Vivocha; the default is: start.

At the end of each conversation branch designed in Dialogflow, the bot MUST set a special context named (exactly) end, to tell Vivocha that the Bot's task is complete and to terminate the chat conversation.

Data passed to the Bot through Vivocha drivers are always contained inside a special context named SESSION_MESSAGE_DATA_PAYLOAD. Thus, the Dialogflow bot can access the data "stored" in that particular context in each intent that needs to get information, e.g., to extract real-time data coming from BotFilters. If the bot implementation needs to extract passed data/parameters, it can access that context through (for example) the expression #SESSION_MESSAGE_DATA_PAYLOAD.my_parameter_name (see the Dialogflow documentation).

In the Dialogflow console:

- use followUp events to jump to a particular intent node in your bot;

Watson Assistant (formerly Conversation) provides a tool to create conversation flows: Dialogs.

Watson Assistant doesn't handle events, only messages; thus you must create an intent trained to understand the word start (simulating an event, in this case).

To communicate that a conversation flow/branch is complete, in each leaf node of the Dialog set to true a specific context parameter, named as specified by the endEventKey property in the module constructor. Important: in order to use the default Vivocha driver, just set the dataCollectionComplete context parameter to true in each Watson Assistant Dialog leaf node; it can be set using the Watson Assistant JSON Editor for a particular dialog node, as in:

```
...
"context": {
  "dataCollectionComplete": true
}
...
```
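
How a driver might read that flag can be sketched as follows. This only illustrates the convention described above and is not the code of the default Vivocha driver; eventFromContext is a hypothetical name, and treating only a strict true value as "complete" is an assumption.

```typescript
// Hypothetical helper: derives the Vivocha event from a Watson Assistant
// context. Assumption: the conversation ends when the context parameter named
// by endEventKey is exactly true.
function eventFromContext(
  context: Record<string, unknown>,
  endEventKey: string = "dataCollectionComplete"
): "continue" | "end" {
  return context[endEventKey] === true ? "end" : "continue";
}
```

With a custom endEventKey, the same check applies to whatever context parameter name was configured.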

Using the IBM Watson Assistant workspace:

Slot-filling and parameters can be defined for every node in the Dialog tab;

a slot-filling can be specified for every Dialog node and the JSON output can be configured using the related JSON Editor;

An Entity can be of type pattern: this allows defining regex-based entities. To save in the context the value entered for a pattern entity, use the following syntax: @NAME_OF_THE_ENTITY.literal.

E.g., for slot filling containing a pattern entity like:

Check for: @ContactInfo - Save it as: $email

configure the particular slot through Edit Slot > ... > Open JSON Editor as:

```
...
"context": {
  "email": "@ContactInfo.literal"
}
...
```

An expression can also check the user input against a list of values, for example:

```
"milan,cagliari,london,rome,berlin".split(",").contains(input.text.toLowerCase())
```

Wit.ai is a pure Natural Language Processing (NLP) platform. Using its Web console it is no longer possible to design a Bot's dialog flows or conversations. Therefore, all the bot application logic, conversation flows, contexts and so on (in other words: the Bot itself) must be coded outside, calling the Wit.ai APIs (mainly) to process natural language messages coming from the users. By creating an App in Wit.ai and training the system for the specific application domain, it is possible to let it process messages and extract information from them, such as (but not only) user intents and entities, along with their confidence values.

Skipping platform-specific details, in order to create Wit.ai Chat Bots and integrate them with the Vivocha Platform you have to:

1. Create and train a Wit.ai App, naming intents that will be used by the coded Bot;
2. Write the code of your Bot subclassing the WitAiBot class provided by this SDK, mapping intents defined in 1) to handler functions;
3. Run the coded Bot (Agent) using a BotManager and configure it using the Vivocha web console.

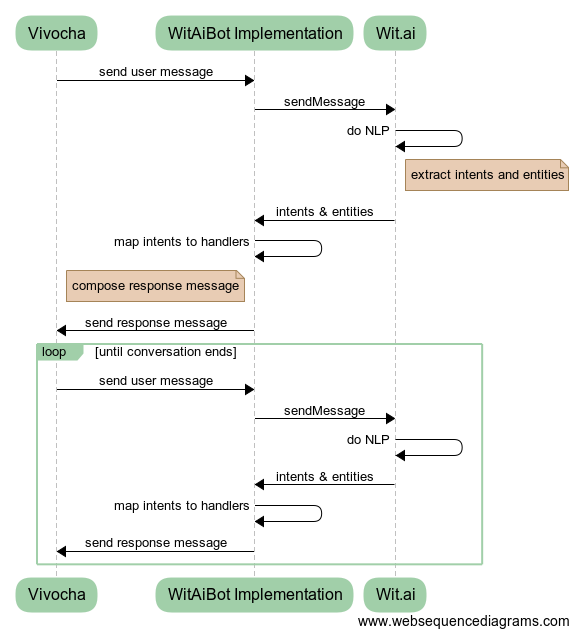

The next picture shows how this integration works:

FIGURE 3 - The Vivocha - Wit.ai integration model: by subclassing the provided WitAiBot class it is possible to quickly code bots using the Wit.ai NLP tool without writing specific API calls.

Subclassing the WitAiBot allows writing Bots using Wit.ai NLP.

Subclassing that class implies:

- defining an IntentsMap: it maps intent names, as coming from Wit.ai, to custom intent handler functions. E.g., the following (TypeScript) snippet defines the intent mappings required to handle a simple customer info collection:

```typescript
export class SimpleWitBot extends WitAiBot {
  protected intents: IntentsMap = {
    provide_name: (data, request) => this.askEmailorPhone(data.entities, request),
    by_email: (data, request) => this.contactMeByEmail(data, request),
    by_phone: (data, request) => this.contactMeByPhone(data, request),
    provide_phone: (data, request) => this.providePhoneNumber(data.entities, request),
    provide_email: (data, request) => this.provideEmailAddress(data.entities, request),
    unknown: (data, request) => this.unknown(data, request)
  };
  ...
  // write intent handlers here...
}
```

Note that the unknown mapping is needed to handle all the cases when Wit.ai isn't able to extract an intent. For example, the associated handler function could reply with a message like the popular "Sorry I didn’t get that!" text ;)

- implementing the getStartMessage(request: BotRequest) method, which is called by Vivocha to start a bot instance only at the very beginning of a conversation with a user.

More details can be found in the dedicated examples/sample-wit.ts(.js) sample files.

- use the BotRequest/BotResponse context.contexts array property to set contexts, in order to drive your bot in deciding which conversation flow branch to follow and what to reply to the user. To check contexts, the WitAiBot class provides the inContext() method. See the example to discover more;
- in each intent mapping handler which decides to terminate the conversation, remember to send back a response with the event property set to end.
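
The routing idea behind an intents map, including the unknown fallback and the use of contexts, can be sketched without the SDK. Everything below is a local illustration under simplifying assumptions: the types are stand-ins, handlers receive only the current contexts, and reply, inContext and handleIntent are hypothetical functions, not members of WitAiBot.

```typescript
// Local stand-ins (assumption: simplified; the SDK's WitAiBot and IntentsMap
// types are richer than this).
interface BotResponse {
  event: "continue" | "end";
  messages: { code: "message"; type: "text"; body: string }[];
  context?: { contexts: string[] };
}

type IntentHandler = (contexts: string[]) => BotResponse;
type IntentsMap = Record<string, IntentHandler>;

const reply = (
  event: "continue" | "end",
  body: string,
  contexts: string[] = []
): BotResponse => ({
  event,
  messages: [{ code: "message", type: "text", body }],
  context: { contexts },
});

// Hypothetical check, in the spirit of the inContext() method mentioned above.
const inContext = (current: string[], expected: string[]): boolean =>
  expected.every((name) => current.includes(name));

const intents: IntentsMap = {
  provide_name: () => reply("continue", "Email or phone?", ["recontact_by_email_or_phone"]),
  by_email: (contexts) =>
    inContext(contexts, ["recontact_by_email_or_phone"])
      ? reply("end", "Thank you, we will email you.")
      : reply("continue", "Please tell me your name first.", ["ask_for_name"]),
  unknown: (contexts) => reply("continue", "Sorry I didn't get that!", contexts),
};

// Routes an intent name to its handler, falling back to "unknown".
function handleIntent(intent: string | undefined, contexts: string[]): BotResponse {
  const name =
    intent !== undefined && Object.prototype.hasOwnProperty.call(intents, intent)
      ? intent
      : "unknown";
  return intents[name](contexts);
}
```

The by_email handler ends the conversation only when the expected context is present, which mirrors the two points above: contexts drive the branch taken, and a terminating handler replies with the event set to end.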
