Research

Security News

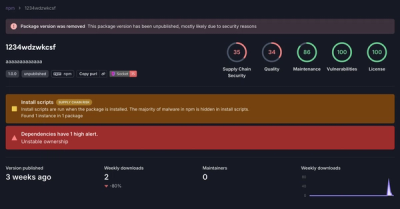

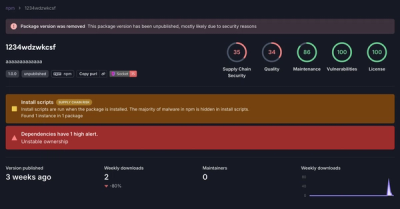

Threat Actor Exposes Playbook for Exploiting npm to Build Blockchain-Powered Botnets

A threat actor's playbook for exploiting the npm ecosystem was exposed on the dark web, detailing how to build a blockchain-powered botnet.

apache-arrow

The apache-arrow npm package provides a cross-language development platform for in-memory data. It is designed to improve the performance and efficiency of data processing and analytics by using a columnar memory format. This package is particularly useful for handling large datasets and performing complex data manipulations.

Reading and Writing Arrow Files

This feature allows you to read and write Arrow files, which are efficient for storing and transferring large datasets. The code sample demonstrates how to read an Arrow file into a table and how to write a new table to an Arrow file.

const arrow = require('apache-arrow');

const fs = require('fs');

// Reading an Arrow file

const arrowFile = fs.readFileSync('data.arrow');

const table = arrow.Table.from([arrowFile]);

console.log(table.toString());

// Writing an Arrow file (Table.new takes column vectors and their names)

const newTable = arrow.Table.new(

[arrow.Utf8Vector.from(['Alice', 'Bob']), arrow.Int32Vector.from([30, 25])],

['name', 'age']

);

const arrowBuffer = newTable.serialize();

fs.writeFileSync('newData.arrow', arrowBuffer);

DataFrame Operations

This feature provides DataFrame-like operations, such as creating tables, selecting columns, and filtering rows. The code sample shows how to create a DataFrame, select a column, and filter rows based on a condition.

const arrow = require('apache-arrow');

// Creating a DataFrame

const df = arrow.Table.new(

[arrow.Utf8Vector.from(['Alice', 'Bob']), arrow.Int32Vector.from([30, 25])],

['name', 'age']

);

// Selecting a column

const names = df.getColumn('name');

console.log(names.toArray());

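// Filtering rows (in this version, Table.filter takes a predicate rather than a callback)

const { col } = arrow.predicate;

const filtered = df.filter(col('age').gt(25));

// Count the rows that match the predicate

console.log(filtered.count());

Interoperability with Other Languages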

Apache Arrow supports interoperability with other languages such as Python, R, and Java. The code sample demonstrates how to create a table in JavaScript and hand it to Python by writing it to a file in the Arrow IPC format, which the pyarrow library can read.

const arrow = require('apache-arrow');

const fs = require('fs');

// Create a table in JavaScript

const table = arrow.Table.new(

[arrow.Utf8Vector.from(['Alice', 'Bob']), arrow.Int32Vector.from([30, 25])],

['name', 'age']

);

// Serialize the table and write it where a Python process can read it

// ('table.arrow' is a placeholder file name)

const arrowBuffer = table.serialize();

fs.writeFileSync('table.arrow', arrowBuffer);

// On the Python side (a separate process; assumes pyarrow is installed

// and that serialize() produced the Arrow IPC stream format):

// import pyarrow as pa

// py_table = pa.ipc.open_stream(open('table.arrow', 'rb')).read_all()

// print(py_table)

Pandas is a powerful data manipulation and analysis library for Python. It provides DataFrame and Series data structures for handling tabular and time series data. While pandas is highly efficient for in-memory data manipulation, it does not use a columnar memory format like Apache Arrow, and this can make it less efficient for certain types of operations.

Dask is a parallel computing library in Python that scales the existing Python ecosystem, including pandas and NumPy. It provides advanced parallelism for analytics, enabling the processing of large datasets that do not fit into memory. Unlike Apache Arrow, Dask focuses on parallel computing and distributed data processing.

Arrow is a set of technologies that enable big data systems to process and transfer data quickly.

Install apache-arrow from NPM

npm install apache-arrow or yarn add apache-arrow

(read about how we package apache-arrow below)

Apache Arrow is a columnar memory layout specification for encoding vectors and table-like containers of flat and nested data. The Arrow spec aligns columnar data in memory to minimize cache misses and take advantage of the latest SIMD (Single Instruction, Multiple Data) and GPU operations on modern processors.
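To make the columnar idea concrete, here is a minimal sketch in plain JavaScript. It is not the apache-arrow API and does not follow the Arrow spec in full; it only mirrors the general shape the spec describes: one contiguous typed array for a column's values, plus a validity bitmap with one bit per row (1 means the value is present). The helper names `buildFloatColumn`, `isValid`, `getValue`, and `sumColumn` are hypothetical.

```javascript
// Plain-JS illustration of a columnar layout; NOT the apache-arrow API.
// buildFloatColumn, isValid, getValue, and sumColumn are hypothetical helpers.

// Store a column as contiguous Float32 values plus a validity bitmap.
function buildFloatColumn(values) {
  const data = new Float32Array(values.length);
  const validity = new Uint8Array(Math.ceil(values.length / 8));
  let nullCount = 0;
  values.forEach((v, i) => {
    if (v === null || v === undefined) {
      nullCount++; // slot stays 0 in data, bit stays 0 in validity
    } else {
      data[i] = v;
      validity[i >> 3] |= 1 << (i & 7); // least-significant bit first
    }
  });
  return { data, validity, nullCount, length: values.length };
}

// Check the validity bit for row i.
function isValid(column, i) {
  return (column.validity[i >> 3] & (1 << (i & 7))) !== 0;
}

// Read row i, returning null where the validity bit is unset.
function getValue(column, i) {
  return isValid(column, i) ? column.data[i] : null;
}

// Scan the contiguous value buffer, skipping nulls.
function sumColumn(column) {
  let total = 0;
  for (let i = 0; i < column.length; i++) {
    if (isValid(column, i)) total += column.data[i];
  }
  return total;
}

const foo = buildFloatColumn([1, null, 3, 4, 5]);
console.log(getValue(foo, 1)); // null
console.log(sumColumn(foo)); // 13
```

Because the values sit next to each other in one buffer, a scan such as `sumColumn` touches memory sequentially, which is the access pattern that caches and SIMD units favor.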

Apache Arrow is the emerging standard for large in-memory columnar data (Spark, Pandas, Drill, Graphistry, ...). By standardizing on a common binary interchange format, big data systems can reduce the costs and friction associated with cross-system communication.
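The benefit of a shared binary layout can be sketched in plain JavaScript. The example below is only an illustration and is not the Arrow IPC format: the layout (a 4-byte row count followed by two Float32 columns) and the names `packColumns` and `viewColumns` are invented for this sketch. The point it tries to show is that a consumer who knows the layout can read columns as views over the received bytes, without parsing or copying them.

```javascript
// Plain-JS sketch of a shared binary layout; NOT the Arrow IPC format.
// packColumns and viewColumns are hypothetical names, and the layout
// (Uint32 row count, then two Float32 columns) is invented for illustration.

// Producer: write two equal-length columns into one ArrayBuffer.
function packColumns(lat, lon) {
  const n = lat.length;
  const buffer = new ArrayBuffer(4 + n * 4 * 2);
  new Uint32Array(buffer, 0, 1)[0] = n; // header: row count
  new Float32Array(buffer, 4, n).set(lat); // first column
  new Float32Array(buffer, 4 + n * 4, n).set(lon); // second column
  return buffer;
}

// Consumer: create typed-array views over the same bytes (no copy).
function viewColumns(buffer) {
  const n = new Uint32Array(buffer, 0, 1)[0];
  return {
    lat: new Float32Array(buffer, 4, n),
    lon: new Float32Array(buffer, 4 + n * 4, n),
  };
}

const packed = packColumns([35.5, 29.25], [-97.5, -98.75]);
const cols = viewColumns(packed);
console.log(Array.from(cols.lat)); // [ 35.5, 29.25 ]
console.log(cols.lat.buffer === packed); // true
```

A text format such as JSON would instead require the consumer to parse every value into new objects before use.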

Check out our API documentation to learn more about how to use Apache Arrow's JS implementation. You can also learn by example by checking out some of the following resources:

import { readFileSync } from 'fs';

import { Table } from 'apache-arrow';

const arrow = readFileSync('simple.arrow');

const table = Table.from([arrow]);

console.log(table.toString());

/*

foo, bar, baz

1, 1, aa

null, null, null

3, null, null

4, 4, bbb

5, 5, cccc

*/

import { readFileSync } from 'fs';

import { Table } from 'apache-arrow';

const table = Table.from([

'latlong/schema.arrow',

'latlong/records.arrow'

].map((file) => readFileSync(file)));

console.log(table.toString());

/*

origin_lat, origin_lon

35.393089294433594, -97.6007308959961

35.393089294433594, -97.6007308959961

35.393089294433594, -97.6007308959961

29.533695220947266, -98.46977996826172

29.533695220947266, -98.46977996826172

*/

import {

Table,

FloatVector,

DateVector

} from 'apache-arrow';

const LENGTH = 2000;

const rainAmounts = Float32Array.from(

{ length: LENGTH },

() => Number((Math.random() * 20).toFixed(1)));

const rainDates = Array.from(

{ length: LENGTH },

(_, i) => new Date(Date.now() - 1000 * 60 * 60 * 24 * i));

const rainfall = Table.new(

[FloatVector.from(rainAmounts), DateVector.from(rainDates)],

['precipitation', 'date']

);

Load data with fetch

import { Table } from "apache-arrow";

const table = await Table.from(fetch("/simple.arrow"));

console.log(table.toString());

import assert from 'assert';

import { readFileSync } from 'fs';

import { Table } from 'apache-arrow';

const table = Table.from([

'latlong/schema.arrow',

'latlong/records.arrow'

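// Wrap readFileSync so the array index from map() is not passed as its options argument

].map((file) => readFileSync(file)));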

const column = table.getColumn('origin_lat');

// Copy the data into a TypedArray

const typed = column.toArray();

assert(typed instanceof Float32Array);

for (let i = -1, n = column.length; ++i < n;) {

assert(column.get(i) === typed[i]);

}

import MapD from 'rxjs-mapd';

import { Table } from 'apache-arrow';

const port = 9091;

const host = `localhost`;

const db = `mapd`;

const user = `mapd`;

const password = `HyperInteractive`;

MapD.open(host, port)

.connect(db, user, password)

.flatMap((session) =>

// queryDF returns Arrow buffers

session.queryDF(`

SELECT origin_city

FROM flights

WHERE dest_city ILIKE 'dallas'

LIMIT 5`

).disconnect()

)

.map(([schema, records]) =>

// Create Arrow Table from results

Table.from([schema, records]))

.map((table) =>

// Stringify the table to CSV with row numbers

table.toString({ index: true }))

.subscribe((csvStr) =>

console.log(csvStr));

/*

Index, origin_city

0, Oklahoma City

1, Oklahoma City

2, Oklahoma City

3, San Antonio

4, San Antonio

*/

See DEVELOP.md

Even if you do not plan to contribute to Apache Arrow itself or Arrow integrations in other projects, we'd be happy to have you involved.

We prefer to receive contributions in the form of GitHub pull requests. Please send pull requests against the github.com/apache/arrow repository.

If you are looking for some ideas on what to contribute, check out the JIRA issues for the Apache Arrow project. Comment on the issue and/or contact dev@arrow.apache.org with your questions and ideas.

If you’d like to report a bug but don’t have time to fix it, you can still post it on JIRA, or email the mailing list at dev@arrow.apache.org.

apache-arrow is written in TypeScript, but the project is compiled to multiple JS versions and common module formats.

The base apache-arrow package includes all the compilation targets for convenience, but if you're conscientious about your node_modules footprint, we got you.

The targets are also published under the @apache-arrow namespace:

npm install apache-arrow # <-- combined es5/UMD, es2015/CommonJS/ESModules/UMD, and TypeScript package

npm install @apache-arrow/ts # standalone TypeScript package

npm install @apache-arrow/es5-cjs # standalone es5/CommonJS package

npm install @apache-arrow/es5-esm # standalone es5/ESModules package

npm install @apache-arrow/es5-umd # standalone es5/UMD package

npm install @apache-arrow/es2015-cjs # standalone es2015/CommonJS package

npm install @apache-arrow/es2015-esm # standalone es2015/ESModules package

npm install @apache-arrow/es2015-umd # standalone es2015/UMD package

npm install @apache-arrow/esnext-cjs # standalone esNext/CommonJS package

npm install @apache-arrow/esnext-esm # standalone esNext/ESModules package

npm install @apache-arrow/esnext-umd # standalone esNext/UMD package

The JS community is a diverse group with a varied list of target environments and tool chains. Publishing multiple packages accommodates projects of all stripes.

If you think we missed a compilation target and it's a blocker for adoption, please open an issue.

Full list of broader Apache Arrow committers.

Full list of broader Apache Arrow projects & organizations.

Apache Arrow 2.0.0 (2020-10-19)

FAQs

Apache Arrow columnar in-memory format

The npm package apache-arrow receives a total of 169,342 weekly downloads. As such, apache-arrow is classified as a popular package.

We found that apache-arrow demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 7 open source maintainers collaborating on the project.
