Benchmark-lib

- A function for computing and logging benchmarks
- A React component for rendering computed benchmarks

```sh
npm install benchmark-lib --save
```


benchmark

```js
import { benchmark } from 'benchmark-lib'

// Compare two test functions
benchmark(foo, bar)

// Or pass any number of test functions as an array
benchmark([foo, bar, baz])
```

Other arguments (pass `null` to skip an argument):

```js
benchmark(
  foo, bar, 'benchName',
  ['test1Name', 'test2Name'], iterations,
  { beforeAll, afterAll, beforeEach, afterEach },
  logEach
)

// With an array of tests, skip the second test argument with null
benchmark([foo, bar, baz], null, 'benchName')
```
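A small normalizing step is one way to picture these positional, `null`-skippable arguments. The sketch below is hypothetical and is not benchmark-lib's source. `normalizeArgs` is an illustrative name. Only three things come from this README: the `iterations` default (500), the `logEach` default (0) and the default test names (`t1`, `t2`, ...).

```js
// Hypothetical sketch, not benchmark-lib's code. Only the defaults for
// iterations (500), logEach (0) and test names (t1, t2, ...) come from the docs.
function normalizeArgs(first, second, benchName, testNames, iterations, callbacks, logEach) {
  // Accept benchmark(foo, bar, ...) as well as benchmark([foo, bar, baz], null, ...)
  const tests = Array.isArray(first) ? first : [first, second]
  return {
    tests,
    benchName: benchName ?? null,
    // null or undefined falls back to the default value
    testNames: testNames ?? tests.map((_, i) => `t${i + 1}`),
    iterations: iterations ?? 500,
    callbacks: callbacks ?? {},
    logEach: logEach ?? 0,
  }
}

const noop = () => {}
const opts = normalizeArgs([noop, noop, noop], null, 'benchName')
console.log(opts.testNames, opts.iterations) // [ 't1', 't2', 't3' ] 500
```

`??` treats both `null` and `undefined` as missing. In this sketch, passing `null` and leaving the argument off entirely therefore behave the same.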

`benchmark` also returns an object with summary info, the options used, and the computed results:

```js
const b = benchmark([foo, bar, baz])
```

- `b.fastestTest` - string - test name
- `b.slowestTest` - string - test name
- `b.fastestMS` - number (ms)
- `b.slowestMS` - number (ms)
- `b.opt` - object
  - `b.opt.benchName` - string
  - `b.opt.benchStart` - Date
  - `b.opt.benchEnd` - Date
  - `b.opt.benchDuration` - number (ms)
  - `b.opt.iterations` - number
  - `b.opt.callbacks` - object - `{beforeAll, ...}`
- `b.res` - object - computed results
  - `b.res.t1` - number - test time in ms
  - `b.res.t2` - ...

Default test names are `t1`, `t2`, and so on.
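The summary fields can be derived from the per-test times in `b.res`. This is a hypothetical sketch, not the library's code. `summarize` is an illustrative name and the timings are made up.

```js
// Hypothetical sketch (not benchmark-lib's code): derive the summary fields
// from a results object that maps test names to times in ms.
function summarize(res) {
  const entries = Object.entries(res)
  // Seed both extremes with the first test, then scan all tests
  let [fastestTest, fastestMS] = entries[0]
  let [slowestTest, slowestMS] = entries[0]
  for (const [name, ms] of entries) {
    if (ms < fastestMS) {
      fastestTest = name
      fastestMS = ms
    }
    if (ms > slowestMS) {
      slowestTest = name
      slowestMS = ms
    }
  }
  return { fastestTest, slowestTest, fastestMS, slowestMS }
}

// Default test names t1, t2, t3 with made-up timings
const s = summarize({ t1: 12.4, t2: 9.8, t3: 15.1 })
console.log(s.fastestTest, s.fastestMS) // t2 9.8
console.log(s.slowestTest, s.slowestMS) // t3 15.1
```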

| Name | Type | Default | Description |
|---|---|---|---|
| iterations | number | 500 | Number of iterations to run |
| logEach | number | 0 | Log progress every `n` iterations; `0` logs only the final result |
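Here is a minimal reading of the `logEach` row as a hypothetical helper. `shouldLog` is not part of benchmark-lib. With `n > 0`, progress is logged on every `n`-th iteration. With `0`, no progress is logged and only the final result would be printed.

```js
// Hypothetical helper (not part of benchmark-lib): should progress be logged
// after this 1-based iteration? logEach = 0 disables progress logging, so only
// the final result would be printed.
function shouldLog(iteration, logEach) {
  return logEach > 0 && iteration % logEach === 0
}

// With iterations = 20 and logEach = 5, progress is logged 4 times
const logged = []
for (let i = 1; i <= 20; i++) {
  if (shouldLog(i, 5)) logged.push(i)
}
console.log(logged) // [ 5, 10, 15, 20 ]
```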

Callback arguments:

- `afterAll(result)`
- `beforeEach(testName, testCounter)`
- `afterEach(testName, ms)`
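The sketch below shows one possible order in which these callbacks could fire. It is a hypothetical runner, not benchmark-lib's implementation, and `runBench` is an illustrative name. It makes several assumptions:

- `beforeEach`/`afterEach` run once per test, with `testCounter` as the test's 0-based index.
- `ms` is the test's total time.
- `afterAll` receives the results object.
- The runtime has a global `performance` object (modern browsers, Node 16+).

```js
// Hypothetical sketch, not benchmark-lib's implementation. Only the callback
// names and argument lists come from the docs; runBench, the call order, and
// treating "each" as "each test" are assumptions.
function runBench(tests, testNames, iterations, callbacks) {
  const { beforeAll, afterAll, beforeEach, afterEach } = callbacks ?? {}
  const res = {}
  if (beforeAll) beforeAll()
  tests.forEach((test, counter) => {
    const name = testNames[counter]
    if (beforeEach) beforeEach(name, counter)
    const start = performance.now()
    for (let i = 0; i < iterations; i++) test()
    const ms = performance.now() - start
    res[name] = ms // total time for all iterations of this test
    if (afterEach) afterEach(name, ms)
  })
  if (afterAll) afterAll(res)
  return res
}

// Record the order in which the callbacks fire
const calls = []
const res = runBench(
  [() => Math.sqrt(2), () => Math.cbrt(2)],
  ['sqrt', 'cbrt'],
  500,
  {
    beforeAll: () => calls.push('beforeAll'),
    beforeEach: (name, counter) => calls.push(`beforeEach ${name} ${counter}`),
    afterEach: (name) => calls.push(`afterEach ${name}`),
    afterAll: () => calls.push('afterAll'),
  }
)
console.log(calls.join(' | '))
// beforeAll | beforeEach sqrt 0 | afterEach sqrt | beforeEach cbrt 1 | afterEach cbrt | afterAll
```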

Example

Comparing `for` and `forEach`

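```js
import { benchmark } from 'benchmark-lib'

let fiboSeq = []

// Build the first 99 Fibonacci numbers before the tests run
// (reset first so repeated runs don't keep appending)
const beforeAll = function () {
  fiboSeq = [1, 1]
  for (let i = 2; i < 99; i++) {
    fiboSeq.push(fiboSeq[i - 1] + fiboSeq[i - 2])
  }
}

// Halve every element of a copy using a classic for loop
const forLoop = function () {
  const clone = fiboSeq.slice()
  for (let i = 0; i < clone.length; i++) {
    clone[i] /= 2
  }
}

// Halve every element of a copy using Array.prototype.forEach
const forEachLoop = function () {
  const clone = fiboSeq.slice()
  clone.forEach((o, i) => {
    clone[i] = o / 2
  })
}

// null keeps the default iteration count; beforeAll must be passed in the
// callbacks object, otherwise fiboSeq is never filled
benchmark(forLoop, forEachLoop, 'for, forEach benchmark', ['for', 'forEach'], null, { beforeAll })
```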

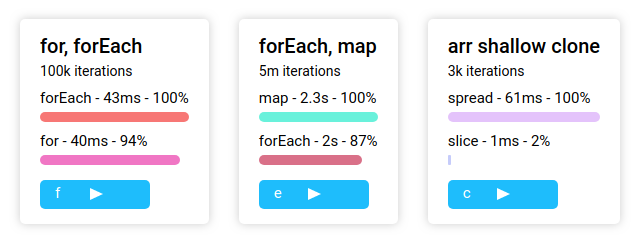

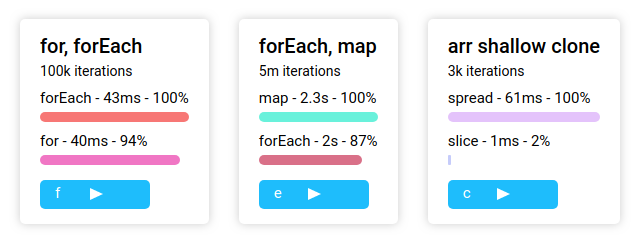

Benchmark component

```jsx
import { Bench } from 'benchmark-lib'
import 'benchmark-lib/dist/style.css'

// Two tests
<Bench
  firstTest={foo}
  secondTest={bar}
/>

// Any number of tests, with optional settings
<Bench
  tests={[foo, bar, baz]}
  benchName='benchmark'
  testNames={['foo', 'bar', 'baz']}
  iterations={500}
  callbacks={{ beforeAll, afterAll, beforeEach, afterEach }}
/>
```

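Additional props:

| Name | Type | Default | Description |
|---|---|---|---|
| hide | array | | Parts of the output to hide, e.g. `{['benchName', 'iterations', 'testNames', 'bars']}` |
| trigger | literal | `b` | Key that runs the benchmark from the keyboard |
| disableTrigger | bool | | Disables the keyboard trigger |
| runOnInit | bool | | Runs the benchmark on initialization |
| shortenIters | bool | | |
| convertMS | bool | | |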
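The `trigger` and `disableTrigger` props could combine as in the following hypothetical check. `shouldRunOnKey` is an illustrative name, not part of `Bench`. The `'b'` default mirrors the table above.

```js
// Hypothetical sketch, not Bench's actual code: decide whether a key press
// should start the benchmark, given the trigger and disableTrigger props.
function shouldRunOnKey(key, { trigger = 'b', disableTrigger = false } = {}) {
  return !disableTrigger && key === trigger
}

console.log(shouldRunOnKey('b')) // true
console.log(shouldRunOnKey('b', { disableTrigger: true })) // false
console.log(shouldRunOnKey('r', { trigger: 'r' })) // true
```

In a component, a check like this would typically be called from a `keydown` listener with `event.key`.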

License

Make sure you have donated to support the library's maintenance: