Security News

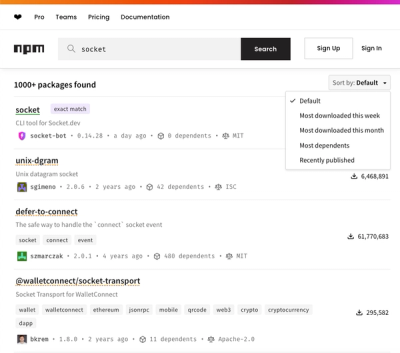

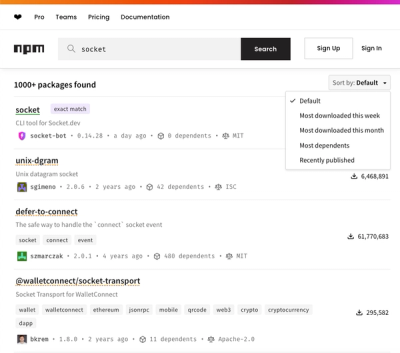

npm Updates Search Experience with New Objective Sorting Options

npm has a revamped search experience with new, more transparent sorting options—Relevance, Downloads, Dependents, and Publish Date.

LevelUP is a Node.js library that provides a simple interface for interacting with LevelDB, a fast key-value storage library. It allows for efficient storage and retrieval of data, making it suitable for applications that require high-performance data operations.

Basic Put and Get

This feature allows you to store and retrieve key-value pairs in the database. The `put` method is used to store a value with a specific key, and the `get` method is used to retrieve the value associated with a key.

```javascript
const level = require('level');

const db = level('./mydb');

async function main () {
  // Put a key-value pair
  await db.put('name', 'LevelUP');

  // Get the value for a key
  const value = await db.get('name');
  console.log(value); // 'LevelUP'
}

main().catch(console.error);
```

Batch Operations

Batch operations allow you to perform multiple put and delete operations in a single atomic action. This is useful for making multiple changes to the database efficiently.

```javascript
const level = require('level');

const db = level('./mydb');

async function main () {
  // Perform batch operations as a single write
  await db.batch()
    .put('name', 'LevelUP')
    .put('type', 'database')
    .del('oldKey')
    .write();
}

main().catch(console.error);
```

Streams

Streams provide a way to read and write data in a continuous flow. The `createReadStream` method allows you to read all key-value pairs in the database as a stream, which is useful for processing large datasets.

```javascript
const level = require('level');

const db = level('./mydb');

// Create a read stream over every key-value pair
const stream = db.createReadStream();

stream.on('data', ({ key, value }) => {
  console.log(`${key} = ${value}`);
});

stream.on('error', console.error);
```

Sublevel

Sublevel allows you to create isolated sub-databases within a LevelDB instance. This is useful for organizing data into different namespaces.

```javascript
const level = require('level');
const sublevel = require('subleveldown');

const db = level('./mydb');
const subdb = sublevel(db, 'sub');

async function main () {
  // Put and get in the sublevel
  await subdb.put('name', 'SubLevelUP');
  const value = await subdb.get('name');
  console.log(value); // 'SubLevelUP'
}

main().catch(console.error);
```

LevelDOWN is a lower-level binding for LevelDB, providing a more direct interface to the LevelDB library. It is used as a backend for LevelUP but can be used independently for more fine-grained control over LevelDB operations.

RocksDB is a high-performance key-value store developed by Facebook. It is similar to LevelDB but offers additional features like column families and more tunable performance options. It can be used as an alternative to LevelDB for applications requiring higher performance.

Redis is an in-memory key-value store known for its speed and support for various data structures like strings, hashes, lists, sets, and more. Unlike LevelDB, Redis operates entirely in memory, making it suitable for use cases where low-latency access is critical.

LevelDB is a simple key/value data store built by Google, inspired by BigTable. It's used in Google Chrome and many other products. LevelDB supports arbitrary byte arrays as both keys and values, singular get, put and delete operations, batched put and delete, forward and reverse iteration and simple compression using the Snappy algorithm which is optimised for speed over compression.
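LevelDB keeps keys sorted, and its default comparator orders them byte by byte, so forward and reverse iteration follow that order. The following sketch mimics this ordering in plain Node.js with `Buffer.compare`, assuming string keys are stored as UTF-8. `compareKeys` and `numericKey` are hypothetical helpers, not part of LevelUP.

```javascript
// Compare keys byte by byte, the way LevelDB's default comparator does.
// Assumes string keys are stored as UTF-8 bytes (the 'utf8' encoding).
function compareKeys(a, b) {
  return Buffer.compare(Buffer.from(a, 'utf8'), Buffer.from(b, 'utf8'));
}

// Hypothetical helper: left-pad numbers so byte order matches numeric order.
function numericKey(n, width = 10) {
  return String(n).padStart(width, '0');
}

// Byte order puts '10' before '9' and uppercase before lowercase.
const sorted = ['b', 'a', 'B', '10', '9'].sort(compareKeys);

// Zero-padded numeric keys iterate in numeric order.
const padded = [10, 9].map((n) => numericKey(n)).sort(compareKeys);

console.log(sorted, padded);
```

Padding numeric keys is a common way to make range reads over numbers behave as expected under byte ordering.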

LevelUP aims to expose the features of LevelDB in a Node.js-friendly way. Both keys and values are treated as Buffer objects and are automatically converted using a specified 'encoding'. LevelDB's iterators are exposed as a Node.js-style object ReadStream, and writing can be performed via an object WriteStream.
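The conversion described above boils down to Node's own `Buffer` API: strings become bytes on the way in and bytes become strings on the way out. This is a minimal sketch of that step. `encodeValue` and `decodeValue` are hypothetical names, not LevelUP functions.

```javascript
// Hypothetical sketch of the encode/decode step an 'encoding' option implies.
function encodeValue(value, encoding = 'utf8') {
  // Buffers pass through untouched; anything else is converted with Node's Buffer.
  return Buffer.isBuffer(value) ? value : Buffer.from(String(value), encoding);
}

function decodeValue(buffer, encoding = 'utf8') {
  return buffer.toString(encoding);
}

// The same bytes, supplied once as utf8 text and once as hex.
const stored = encodeValue('LevelUP');
const hexStored = encodeValue('4c6576656c5550', 'hex');

console.log(stored.equals(hexStored), decodeValue(stored, 'base64'));
```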

All operations are asynchronous, although they don't necessarily require a callback if you don't need to know when the operation was performed.

```javascript
var levelup = require('levelup')

// 1) Create our database, supply location and options.
//    This will create or open the underlying LevelDB store.
var options = { createIfMissing: true, errorIfExists: false }

levelup('./mydb', options, function (err, db) {
  if (err) return console.log('Ooops!', err)

  // 2) put a key & value
  db.put('name', 'LevelUP', function (err) {
    if (err) return console.log('Ooops!', err) // some kind of I/O error

    // 3) fetch by key
    db.get('name', function (err, value) {
      if (err) return console.log('Ooops!', err) // likely the key was not found

      // ta da!
      console.log('name=' + value)
    })
  })
})
```

levelup() takes an optional options object as its second argument; the following properties are accepted:

createIfMissing (boolean): If true, will initialise an empty database at the specified location if one doesn't already exist. If false and a database doesn't exist, you will receive an error in your open() callback and your database won't open. Defaults to false.

errorIfExists (boolean): If true, you will receive an error in your open() callback if the database exists at the specified location. Defaults to false.

encoding (string): The encoding of the keys and values passed through Node.js' Buffer implementation (see Buffer#toString())

'utf8' is the default encoding for both keys and values, so you can simply pass in strings and expect strings from your get() operations. You can also pass Buffer objects as keys and/or values and conversion will be performed.

Supported encodings are: hex, utf8, ascii, binary, base64, ucs2, utf16le.

'json' encoding is also supported, see below.

keyEncoding and valueEncoding (string): use these instead of encoding to specify the encoding of the keys and of the values separately.

Additionally, each of the main interface methods accepts an optional options object that can be used to override encoding (or keyEncoding & valueEncoding).
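One way to picture how database-wide and per-call options combine is a small resolver. This is a hypothetical sketch. `resolveEncodings` is not a LevelUP function, and the precedence it uses (call options over database options, a specific keyEncoding/valueEncoding over the general encoding within each level, 'utf8' as the fallback) is an assumption drawn from the description above.

```javascript
// Hypothetical resolver: the precedence order here is an assumption, not LevelUP's code.
function resolveEncodings(dbOptions = {}, callOptions = {}) {
  const pick = (specific) =>
    callOptions[specific] ||
    callOptions.encoding ||
    dbOptions[specific] ||
    dbOptions.encoding ||
    'utf8'; // default for both keys and values
  return { keyEncoding: pick('keyEncoding'), valueEncoding: pick('valueEncoding') };
}

const dbOptions = { valueEncoding: 'json' };

console.log(resolveEncodings(dbOptions));
console.log(resolveEncodings(dbOptions, { encoding: 'hex' }));
```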

For faster write operations, the batch() method can be used to submit an array of operations to be executed sequentially. Each operation is contained in an object having the following properties: type, key, value, where the type is either 'put' or 'del'. In the case of 'del' the 'value' property is ignored.

```javascript
var ops = [
    { type: 'del', key: 'father' }
  , { type: 'put', key: 'name', value: 'Yuri Irsenovich Kim' }
  , { type: 'put', key: 'dob', value: '16 February 1941' }
  , { type: 'put', key: 'spouse', value: 'Kim Young-sook' }
  , { type: 'put', key: 'occupation', value: 'Clown' }
]

db.batch(ops, function (err) {
  if (err) return console.log('Ooops!', err)
  console.log('Great success dear leader!')
})
```
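LevelDB applies a batch as a single atomic write, so either every operation takes effect or none does. The following in-memory sketch shows the put/del semantics, using a `Map` as the store. `applyBatch` is a hypothetical helper, not LevelUP's implementation. It gets all-or-nothing behaviour by validating every operation before touching the store.

```javascript
// Hypothetical in-memory sketch of batch semantics; not LevelUP's code.
function applyBatch(store, ops) {
  // Validate everything first so a bad operation leaves the store untouched.
  for (const op of ops) {
    if (op.type !== 'put' && op.type !== 'del') {
      throw new TypeError(`Unknown operation type: ${op.type}`);
    }
    if (op.key === undefined) {
      throw new TypeError('Every operation needs a key');
    }
  }
  for (const op of ops) {
    if (op.type === 'put') store.set(op.key, op.value);
    else store.delete(op.key); // 'value' is ignored for 'del'
  }
}

const store = new Map([['father', '(to be removed)']]);

applyBatch(store, [
  { type: 'del', key: 'father' },
  { type: 'put', key: 'name', value: 'Yuri Irsenovich Kim' },
  { type: 'put', key: 'occupation', value: 'Clown' },
]);

console.log([...store.entries()]);
```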

You can obtain a ReadStream of the full database by calling the readStream() method. The resulting stream is a complete Node.js-style Readable Stream where 'data' events emit objects with 'key' and 'value' pairs.

```javascript
db.readStream()
  .on('data', function (data) {
    console.log(data.key, '=', data.value)
  })
  .on('error', function (err) {
    console.log('Oh my!', err)
  })
  .on('close', function () {
    console.log('Stream closed')
  })
  .on('end', function () {
    console.log('Stream ended')
  })
```

The standard pause(), resume() and destroy() methods are implemented on the ReadStream, as is pipe() (see below). 'data', 'error', 'end' and 'close' events are emitted.

Additionally, you can supply an options object as the first parameter to readStream() with the following options:

'start': the key you wish to start the read at. By default it will start at the beginning of the store. Note that start doesn't have to be an actual key that exists; LevelDB will simply start at the first key greater than or equal to the one you provide.

'end': the key you wish to end the read on. By default it will continue until the end of the store. Again, the end doesn't have to be an actual key as an (inclusive) <=-type operation is performed to detect the end. You can also use the destroy() method instead of supplying an 'end' parameter to achieve the same effect.

'reverse': a boolean, set to true if you want the stream to go in reverse order. Beware that due to the way LevelDB works, a reverse seek will be slower than a forward seek.

'keys': a boolean (defaults to true) to indicate whether the 'data' event should contain keys. If set to true and 'values' set to false then 'data' events will simply be keys, rather than objects with a 'key' property. Used internally by the keyStream() method.

'values': a boolean (defaults to true) to indicate whether the 'data' event should contain values. If set to true and 'keys' set to false then 'data' events will simply be values, rather than objects with a 'value' property. Used internally by the valueStream() method.
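The range and projection options above can be modelled over an in-memory `Map`. This is a sketch under stated assumptions. `rangeRead` is a hypothetical function, not LevelUP's ReadStream. It treats 'start' as the lower bound and 'end' as the upper bound and applies 'reverse' after filtering, which may differ from how LevelUP itself handles reverse ranges.

```javascript
// Byte-wise key comparison, as LevelDB's default comparator orders keys.
function compareKeys(a, b) {
  return Buffer.compare(Buffer.from(a, 'utf8'), Buffer.from(b, 'utf8'));
}

// Hypothetical sketch of readStream() options over a Map; assumes start <= end.
function rangeRead(store, { start, end, reverse = false, keys = true, values = true } = {}) {
  let entries = [...store.entries()].sort(([a], [b]) => compareKeys(a, b));
  // 'start' need not exist: keep every key that sorts at or after it.
  if (start !== undefined) entries = entries.filter(([k]) => compareKeys(k, start) >= 0);
  // 'end' is inclusive: keep every key that sorts at or before it.
  if (end !== undefined) entries = entries.filter(([k]) => compareKeys(k, end) <= 0);
  if (reverse) entries.reverse();
  // keys + values -> { key, value }; keys only -> key; values only -> value.
  return entries.map(([key, value]) => {
    if (keys && values) return { key, value };
    return keys ? key : value;
  });
}

const store = new Map([['a', '1'], ['b', '2'], ['c', '3'], ['d', '4']]);

console.log(rangeRead(store, { start: 'b', end: 'c' }));
console.log(rangeRead(store, { start: 'bb', values: false }));
```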

A KeyStream is a ReadStream where the 'data' events are simply the keys from the database so it can be used like a traditional stream rather than an object stream.

You can obtain a KeyStream either by calling the keyStream() method on a LevelUP object or by passing an options object to readStream() with keys set to true and values set to false.

```javascript
db.keyStream()
  .on('data', function (data) {
    console.log('key=', data)
  })

// same as:
db.readStream({ keys: true, values: false })
  .on('data', function (data) {
    console.log('key=', data)
  })
```

A ValueStream is a ReadStream where the 'data' events are simply the values from the database so it can be used like a traditional stream rather than an object stream.

You can obtain a ValueStream either by calling the valueStream() method on a LevelUP object or by passing an options object to readStream() with values set to true and keys set to false.

```javascript
db.valueStream()
  .on('data', function (data) {
    console.log('value=', data)
  })

// same as:
db.readStream({ keys: false, values: true })
  .on('data', function (data) {
    console.log('value=', data)
  })
```

A WriteStream can be obtained by calling the writeStream() method. The resulting stream is a complete Node.js-style Writable Stream which accepts objects with 'key' and 'value' pairs on its write() method. The WriteStream will buffer writes and submit them as a batch() operation where the writes occur on the same event loop tick; otherwise they are treated as simple put() operations.

```javascript
var ws = db.writeStream()

ws.on('error', function (err) {
  console.log('Oh my!', err)
})
ws.on('close', function () {
  console.log('Stream closed')
})

// write() returns a boolean, not the stream, so the calls are not chained
ws.write({ key: 'name', value: 'Yuri Irsenovich Kim' })
ws.write({ key: 'dob', value: '16 February 1941' })
ws.write({ key: 'spouse', value: 'Kim Young-sook' })
ws.write({ key: 'occupation', value: 'Clown' })
ws.end()
```

The standard write(), end(), destroy() and destroySoon() methods are implemented on the WriteStream. 'drain', 'error', 'close' and 'pipe' events are emitted.
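Grouping writes issued on the same tick into one batch() can be sketched with `process.nextTick`, which runs the flush once the current synchronous code has finished. `TickBatcher` and its `batch`/`put` callbacks are hypothetical names for illustration. This is not LevelUP's WriteStream implementation.

```javascript
// Hypothetical sketch of tick-based write buffering; not LevelUP's WriteStream.
class TickBatcher {
  constructor({ batch, put }) {
    this.batch = batch; // receives an array of { type: 'put', key, value }
    this.put = put;     // receives (key, value) for a lone write
    this.pending = [];
    this.scheduled = false;
  }

  write({ key, value }) {
    this.pending.push({ type: 'put', key, value });
    if (!this.scheduled) {
      this.scheduled = true;
      // Flush once the current synchronous code (this tick) has finished.
      process.nextTick(() => this.flush());
    }
  }

  flush() {
    const ops = this.pending;
    this.pending = [];
    this.scheduled = false;
    if (ops.length === 1) this.put(ops[0].key, ops[0].value);
    else if (ops.length > 1) this.batch(ops);
  }
}

const batches = [];
const puts = [];
const writer = new TickBatcher({
  batch: (ops) => batches.push(ops),
  put: (key, value) => puts.push([key, value]),
});

// Both writes happen in the same tick, so they are flushed as one batch.
writer.write({ key: 'name', value: 'Yuri Irsenovich Kim' });
writer.write({ key: 'dob', value: '16 February 1941' });
```

A write made alone on a later tick would be flushed through `put` instead.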

A ReadStream can be piped directly to a WriteStream, allowing for easy copying of an entire database. A simple copy() operation is included in LevelUP that performs exactly this on two open databases:

```javascript
function copy (srcdb, dstdb, callback) {
  srcdb.readStream().pipe(dstdb.writeStream().on('close', callback))
}
```
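The copy above pipes an object ReadStream into an object WriteStream. You can reproduce the same shape with Node's built-in `stream` module, using two `Map`s as hypothetical stand-ins for databases. `mapReadStream`, `mapWriteStream` and this `copy` are illustrative, not LevelUP code. The sketch uses `stream.pipeline()` instead of a 'close' listener so that an error on either side also reaches the callback.

```javascript
const { Readable, Writable, pipeline } = require('stream');

// Hypothetical stand-in for a ReadStream: emits { key, value } objects in key order.
function mapReadStream(map) {
  const entries = [...map.keys()].sort().map((key) => ({ key, value: map.get(key) }));
  return Readable.from(entries); // object mode by default
}

// Hypothetical stand-in for a WriteStream: stores each { key, value } it receives.
function mapWriteStream(map) {
  return new Writable({
    objectMode: true,
    write({ key, value }, _encoding, callback) {
      map.set(key, value);
      callback();
    },
  });
}

function copy(src, dst, callback) {
  // pipeline() forwards errors from either stream and calls back once.
  pipeline(mapReadStream(src), mapWriteStream(dst), callback);
}

const source = new Map([['name', 'LevelUP'], ['dob', '16 February 1941']]);
const target = new Map();
copy(source, target, (err) => {
  if (err) console.error('Copy failed', err);
  else console.log('Copied', target.size, 'entries');
});
```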

The ReadStream is also fstream-compatible which means you should be able to pipe to and from fstreams. So you can serialize and deserialize an entire database to a directory where keys are filenames and values are their contents, or even into a tar file using node-tar. See the fstream functional test for an example. (Note: I'm not really sure there's a great use-case for this but it's a fun example and it helps to harden the stream implementations.)

KeyStreams and ValueStreams can be treated like standard streams of raw data. If 'encoding' is set to 'binary' the 'data' events will simply be standard Node Buffer objects straight out of the data store.

If you specify 'json' encoding for keys and/or values, you can then supply JavaScript objects to LevelUP and receive them from all fetch operations, including ReadStreams. LevelUP will automatically stringify your objects and store them as utf8, then parse the strings back into objects before passing them back to you.
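In terms of plain Node.js calls, a 'json' encoding amounts to `JSON.stringify` plus a utf8 Buffer on the way in and the reverse on the way out. The `jsonEncoding` object is a hypothetical codec for illustration, not LevelUP's internal one.

```javascript
// Hypothetical codec sketching what a 'json' encoding does (utf8 storage).
const jsonEncoding = {
  encode: (obj) => Buffer.from(JSON.stringify(obj), 'utf8'),
  decode: (buf) => JSON.parse(buf.toString('utf8')),
};

const record = { name: 'Yuri Irsenovich Kim', dob: '16 February 1941' };
const bytes = jsonEncoding.encode(record);
const roundTripped = jsonEncoding.decode(bytes); // a new object equal to record

console.log(roundTripped);
```

Values survive only as far as JSON allows. For example, a `Date` is stored as its ISO string and comes back as a string, not a `Date`.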

LevelUP is Copyright (c) 2012 Rod Vagg @rvagg and licensed under the MIT licence. All rights not explicitly granted in the MIT license are reserved. See the included LICENSE file for more details.

LevelUP builds on the excellent work of the LevelDB and Snappy teams from Google and additional contributors. LevelDB and Snappy are both issued under the New BSD Licence.

