Research

Security News

Malicious npm Packages Inject SSH Backdoors via Typosquatted Libraries

Socket’s threat research team has detected six malicious npm packages typosquatting popular libraries to insert SSH backdoors.

x-crawl is a flexible Node.js AI-assisted crawler library. Its flexible usage and powerful AI assistance make crawling more efficient, intelligent, and convenient.

It consists of two parts: the crawler and the AI.

If you find x-crawl helpful or like it, consider giving the x-crawl repository a star on GitHub. Your support drives our continuous improvement. Thank you!

As web technology develops rapidly, websites are updated more often, and changes to class names or page structure pose considerable challenges for crawlers that depend on those elements. AI-assisted crawlers have become a powerful way to meet this challenge.

First, changes to class names or structure after a website update can cause traditional crawling strategies to fail, because crawlers typically rely on fixed class names or structures to locate and extract the required information. Once these elements change, the crawler may no longer find the required data, which undermines the effectiveness and accuracy of data crawling.
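To make this failure mode concrete, here is a minimal, dependency-free sketch (not part of x-crawl) of an extractor tied to a fixed class name. The function name `extractImageSrcsByClass` and the sample markup are hypothetical, and a regular expression stands in for a real HTML parser only to keep the example self-contained.

```javascript
// A deliberately brittle extractor (hypothetical): it only finds <img> tags
// whose class attribute is exactly the hard-coded name, much like a fixed
// CSS selector such as 'img.listing-photo'. Regex matching is used only to
// keep the sketch dependency-free; it is not a robust HTML parser.
function extractImageSrcsByClass(html, className) {
  // Escape regex metacharacters so the class name is matched literally.
  const escaped = className.replace(/[.*+?^${}()|[\]\\]/g, '\\$&')
  const pattern = new RegExp(
    `<img[^>]*class="${escaped}"[^>]*src="([^"]+)"`,
    'g'
  )
  return [...html.matchAll(pattern)].map((match) => match[1])
}

// Hypothetical markup before and after a redesign renames the class.
const before = '<img class="listing-photo" src="https://example.com/a.jpg">'
const after = '<img class="photo-card__img" src="https://example.com/a.jpg">'

console.log(extractImageSrcsByClass(before, 'listing-photo')) // [ 'https://example.com/a.jpg' ]
console.log(extractImageSrcsByClass(after, 'listing-photo')) // []
```

The image is still on the page after the rename; only the class changed, yet the selector-style extractor now returns nothing.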

However, AI-assisted crawlers cope better with such changes. Using natural language processing and related techniques, the AI can understand and parse the semantic content of a web page and extract the required data more accurately.
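As a rough illustration of the idea, the sketch below shows how an AI extraction request might be assembled: the instruction describes what to extract rather than where it sits in the markup. It assumes the OpenAI Chat Completions message shape (`{ role, content }`) and a model reply of the form `{"elements": [{"src": "..."}]}`; the helper names `buildParseMessages` and `parseModelReply` and the system prompt are hypothetical and are not x-crawl's internal prompt.

```javascript
// Hypothetical helper: builds messages in the OpenAI Chat Completions format.
// The system prompt wording is an assumption, not x-crawl's actual prompt.
function buildParseMessages(html, instruction) {
  return [
    {
      role: 'system',
      content:
        'Extract the requested data from the HTML. Reply only with JSON ' +
        'of the form {"elements": [{"src": "..."}]}.'
    },
    // The instruction names the data semantically ("image links"),
    // not by class name, so a renamed class does not invalidate it.
    { role: 'user', content: `${instruction}\n\nHTML:\n${html}` }
  ]
}

// Hypothetical helper: parses the model's JSON reply and keeps only
// elements that carry a string `src`.
function parseModelReply(replyText) {
  const parsed = JSON.parse(replyText)
  const elements =
    parsed && Array.isArray(parsed.elements) ? parsed.elements : []
  return elements.filter(
    (el) => el !== null && typeof el === 'object' && typeof el.src === 'string'
  )
}

const messages = buildParseMessages(
  '<div class="photo-card__img"><img src="https://example.com/a.jpg"></div>',
  'Get the image links and de-duplicate them'
)
console.log(messages.length) // 2

// A made-up reply standing in for what a model might return.
const reply =
  '{"elements": [{"src": "https://example.com/a.jpg"}, {"alt": "no src"}]}'
console.log(parseModelReply(reply)) // [ { src: 'https://example.com/a.jpg' } ]
```

Validating the reply matters because model output is not guaranteed to follow the requested format.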

In short, AI-assisted crawlers are better able to handle class name or structure changes after website updates.

For example, combining the crawler with AI lets us obtain pictures of highly rated vacation rentals according to our instructions:

```javascript
import { createCrawl, createCrawlOpenAI } from 'x-crawl'

// Create a crawler application
const crawlApp = createCrawl({
  maxRetry: 3,
  intervalTime: { max: 2000, min: 1000 }
})

// Create an AI application
const crawlOpenAIApp = createCrawlOpenAI({
  clientOptions: { apiKey: process.env['OPENAI_API_KEY'] },
  defaultModel: { chatModel: 'gpt-4-turbo-preview' }
})

// crawlPage is used to crawl pages
crawlApp.crawlPage('https://www.airbnb.cn/s/select_homes').then(async (res) => {
  const { page, browser } = res.data

  // Wait for the element to appear on the page and get its HTML
  const targetSelector = '[data-tracking-id="TOP_REVIEWED_LISTINGS"]'
  await page.waitForSelector(targetSelector)
  const highlyHTML = await page.$eval(targetSelector, (el) => el.innerHTML)

  // Let the AI get the image links and de-duplicate them
  // (the more detailed the description, the better)
  const srcResult = await crawlOpenAIApp.parseElements(
    highlyHTML,
    `Get the image links, excluding those inside <source> elements, and de-duplicate them`
  )

  // The browser is no longer needed once the HTML has been extracted
  await browser.close()

  // crawlFile is used to crawl file resources
  crawlApp.crawlFile({
    targets: srcResult.elements.map((item) => item.src),
    storeDirs: './upload'
  })
})
```
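Because the AI's output is model-generated, it can be worth checking it before downloading. The following is a minimal sketch of defensive post-processing, assuming each element has the `{ src }` shape read by `srcResult.elements.map((item) => item.src)` in the example; `normalizeImageTargets` is a hypothetical helper, not an x-crawl API. It resolves relative links against the page URL, drops non-HTTP(S) entries such as `data:` URIs, and removes duplicates.

```javascript
// Hypothetical helper: cleans AI-extracted image links before download.
// Assumes each element looks like { src: string }.
function normalizeImageTargets(elements, baseUrl) {
  const seen = new Set()
  const targets = []
  for (const el of elements) {
    const src = el && typeof el.src === 'string' ? el.src.trim() : ''
    if (src === '') continue
    let url
    try {
      // Resolves relative and protocol-relative links against the page URL.
      url = new URL(src, baseUrl)
    } catch {
      continue // skip strings that are not valid URLs
    }
    // Keep only downloadable http(s) resources.
    if (url.protocol !== 'http:' && url.protocol !== 'https:') continue
    if (!seen.has(url.href)) {
      seen.add(url.href)
      targets.push(url.href)
    }
  }
  return targets
}

const elements = [
  { src: 'https://example.com/img/1.jpg' },
  { src: 'https://example.com/img/1.jpg' }, // duplicate
  { src: '//example.com/img/2.jpg' }, // protocol-relative
  { src: '/img/3.jpg' }, // relative to the page
  { src: 'data:image/gif;base64,R0lGOD' } // inline placeholder
]
const targets = normalizeImageTargets(elements, 'https://example.com/s/select_homes')
// targets is ['https://example.com/img/1.jpg', 'https://example.com/img/2.jpg', 'https://example.com/img/3.jpg']
console.log(targets)
```

In the example, the result of such a helper could be passed as `targets` to `crawlFile` in place of the raw `map` call.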

You can even send the whole page's HTML to the AI. However, because the full page is more complex, you also need to describe the location of the target data more precisely, and the request will consume many more tokens.
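A quick way to see the cost difference is to estimate tokens before sending. This sketch uses the common rule of thumb of roughly four characters per token for English text; actual counts depend on the model's tokenizer, so treat the numbers as estimates only. The helpers `estimateTokens` and `stripNoise` are hypothetical, and stripping `<script>`/`<style>` blocks is an optional pre-filtering step assumed here, not something x-crawl is documented to do.

```javascript
// Rough rule of thumb: about 4 characters per token for typical English text.
// Real counts depend on the model's tokenizer; markup-heavy HTML often
// tokenizes less efficiently, so this is only an estimate.
function estimateTokens(text) {
  return Math.ceil(text.length / 4)
}

// Removes <script> and <style> blocks and HTML comments, which rarely carry
// the data you want but can make up a large share of a page's HTML.
function stripNoise(html) {
  return html
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, '')
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, '')
    .replace(/<!--[\s\S]*?-->/g, '')
}

// Hypothetical markup: a full page versus just the target subtree.
const fullPage =
  '<html><head><style>.x{color:red}</style><script>track()</script></head>' +
  '<body><!-- nav --><div id="listings"><img src="/1.jpg"></div></body></html>'
const subtree = '<img src="/1.jpg">'

console.log('full page:', estimateTokens(fullPage))
console.log('full page, stripped:', estimateTokens(stripNoise(fullPage)))
console.log('target subtree only:', estimateTokens(subtree))
```

Sending only the target element's HTML, as the example does with `page.$eval`, keeps the request small without any extra processing.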

Procedure:

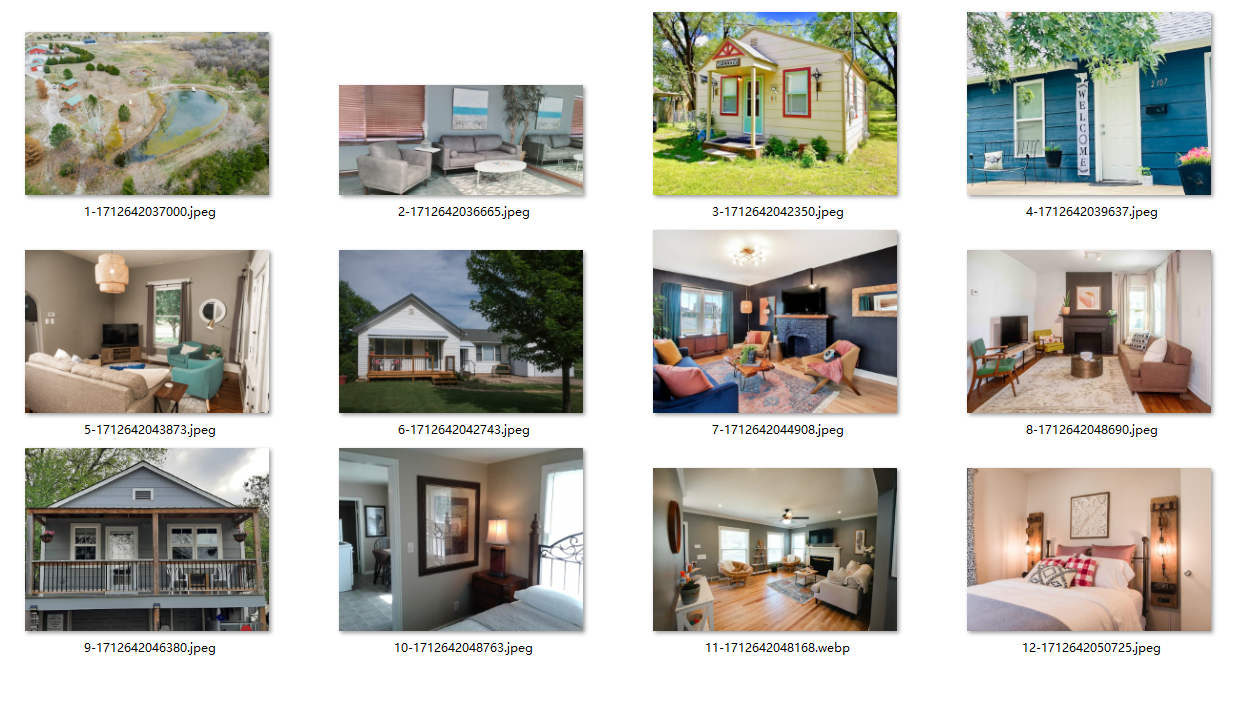

Crawled pictures of highly rated vacation rentals:

Want to know more?

For example, you can view the HTML that the AI needs to process, or the srcResult (image URLs) that the AI returns after parsing that HTML according to our instructions.

Both are at the bottom of this example: https://coder-hxl.github.io/x-crawl/guide/#example

Warning: x-crawl is for legal use only. Any illegal activity using this tool is prohibited. Be sure to comply with the target website's robots.txt rules. This example only demonstrates how to use x-crawl and does not target any specific website.
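For the robots.txt requirement, here is a simplified sketch of checking a path against a site's rules before crawling it. It assumes you have already fetched the robots.txt text, reads only the `User-agent: *` group, and ignores the `*` and `$` wildcards; for matching it follows the longest-match rule of RFC 9309, where `Allow` wins a tie. The function names are hypothetical, and a maintained robots.txt parser is a better choice for production use.

```javascript
// Hypothetical helper: collects Allow/Disallow rules from the groups that
// apply to every crawler (User-agent: *). Wildcards are not supported.
function parseRobotsForAllAgents(text) {
  const rules = []
  let inStarGroup = false
  let lastWasAgent = false
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, '').trim() // drop comments
    if (!line) continue
    const idx = line.indexOf(':')
    if (idx === -1) continue
    const key = line.slice(0, idx).trim().toLowerCase()
    const value = line.slice(idx + 1).trim()
    if (key === 'user-agent') {
      // Consecutive User-agent lines share one group; otherwise a new group starts.
      if (!lastWasAgent) inStarGroup = false
      if (value === '*') inStarGroup = true
      lastWasAgent = true
    } else {
      lastWasAgent = false
      // An empty Disallow value allows everything, so it adds no rule.
      if (inStarGroup && (key === 'allow' || key === 'disallow') && value) {
        rules.push({ allow: key === 'allow', path: value })
      }
    }
  }
  return rules
}

// Longest matching rule wins; on equal length, Allow wins. No match means allowed.
function isAllowed(rules, path) {
  let best = null
  for (const rule of rules) {
    if (!path.startsWith(rule.path)) continue
    if (
      !best ||
      rule.path.length > best.path.length ||
      (rule.path.length === best.path.length && rule.allow)
    ) {
      best = rule
    }
  }
  return best ? best.allow : true
}

const robots = [
  'User-agent: Googlebot',
  'Disallow: /',
  '',
  'User-agent: *',
  'Disallow: /private',
  'Allow: /private/public'
].join('\n')
const rules = parseRobotsForAllAgents(robots)

console.log(isAllowed(rules, '/private/data')) // false
console.log(isAllowed(rules, '/private/public/page')) // true
console.log(isAllowed(rules, '/home')) // true
```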

x-crawl latest version documentation:

x-crawl v9 documentation:

FAQs

x-crawl is a flexible Node.js AI-assisted crawler library.

The npm package x-crawl receives a total of 106 weekly downloads, so its popularity is classified as not popular.

We found that x-crawl has a healthy version release cadence and project activity, since the last version was released less than a year ago. The project has one open source maintainer.

