
Apache Airflow plugin that exposes Xtended secure API endpoints similar to the official Airflow API (Stable) (1.0.0), providing richer capabilities for more powerful DAG and job management. Apache Airflow 2.8.0 or higher is required.

python3 -m pip install airflow-xtended-api
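Because the plugin requires Apache Airflow 2.8.0 or higher, it can help to check the installed version before installing. The following is a minimal sketch, not part of the plugin: `parse_version` and `meets_minimum` are hypothetical helper names, and the comparison only looks at the leading numeric components, so a pre-release such as `2.8.0rc1` is treated like `2.8.0`.

```python
import re


def parse_version(version: str) -> tuple:
    """Return the leading numeric components of a version string as ints."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if not match:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version: str, minimum: tuple = (2, 8, 0)) -> bool:
    """Check a version string against the minimum stated in this README."""
    parsed = parse_version(version)
    # Pad short versions such as "2.8" with zeros so tuples compare fairly.
    padded = parsed + (0,) * (len(minimum) - len(parsed))
    return padded >= minimum
```

On a machine with Airflow installed, the version string can be obtained with `importlib.metadata.version("apache-airflow")` and passed to `meets_minimum`.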

Build a custom version of this plugin by following the instructions in this doc

The Airflow Xtended API plugin uses the same auth mechanism as the Airflow API (Stable) (1.0.0). By default, the APIs exposed by this plugin therefore respect the auth mechanism used by your Airflow webserver and comply with the existing RBAC policies. Note that you need to pass credentials as part of each request. Here is a snippet from the official docs for basic authorization:

curl -X PATCH 'http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/dags/{dag_id}?update_mask=is_paused' \
  -H 'Content-Type: application/json' \
  --user "username:password" \
  -d '{
    "is_paused": true
  }'
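The same basic-auth request can be sketched in Python with only the standard library. This is an illustration rather than plugin code: `basic_auth_header` and `build_pause_request` are hypothetical names, and the sketch uses `PATCH` because the Airflow stable REST API documents DAG updates with that method. The request is built but not sent.

```python
import base64
import json
import urllib.request


def basic_auth_header(username: str, password: str) -> dict:
    """Build the HTTP Basic Authorization header that `curl --user` sends."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return {"Authorization": "Basic " + token.decode("ascii")}


def build_pause_request(base_url, dag_id, username, password, is_paused=True):
    """Build (without sending) a request that pauses or unpauses a DAG."""
    url = f"{base_url}/api/v1/dags/{dag_id}?update_mask=is_paused"
    headers = {"Content-Type": "application/json"}
    headers.update(basic_auth_header(username, password))
    body = json.dumps({"is_paused": is_paused}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="PATCH")
```

Passing the returned object to `urllib.request.urlopen` would send it to a running webserver; the base URL (for example `http://localhost:8080`) is an assumption about your deployment.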

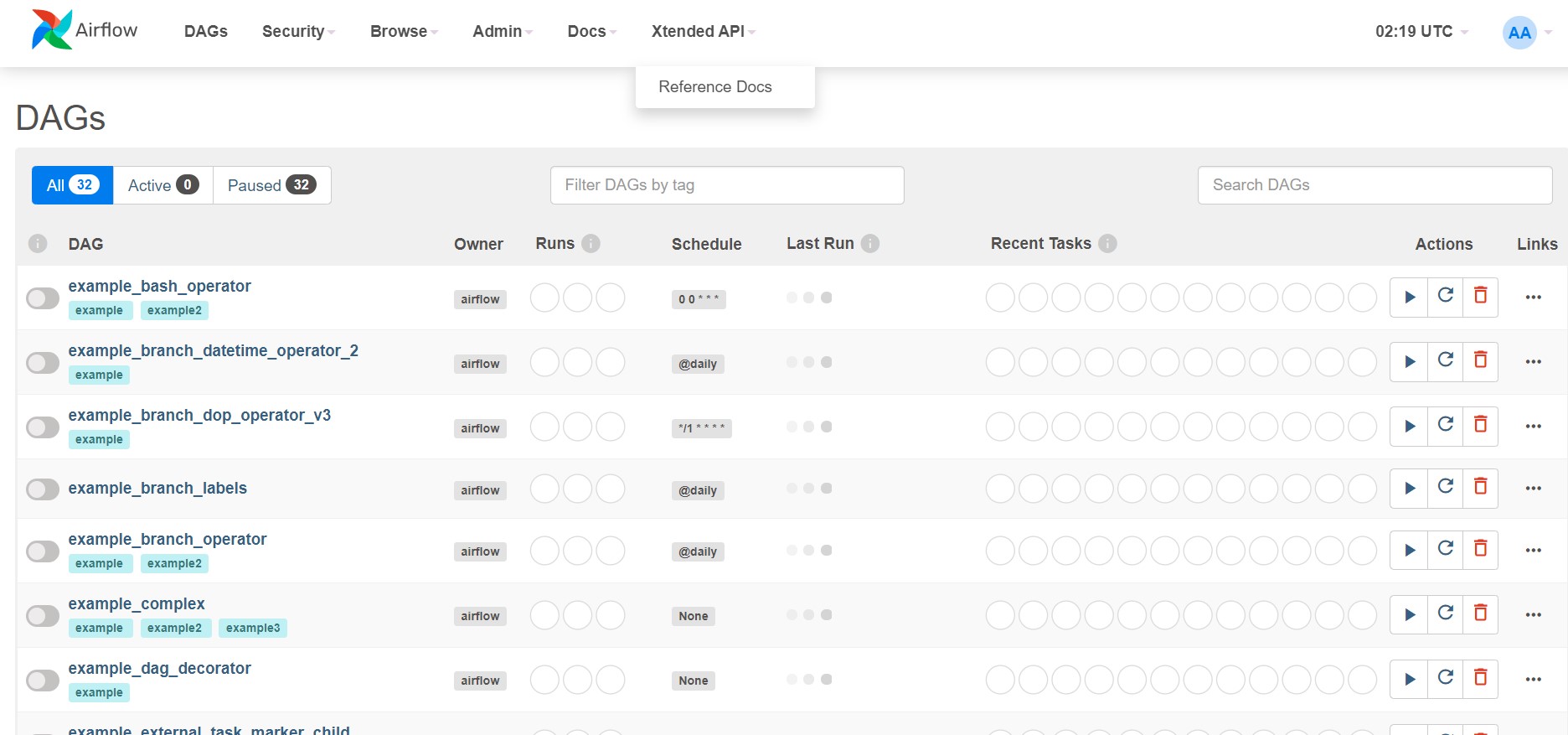

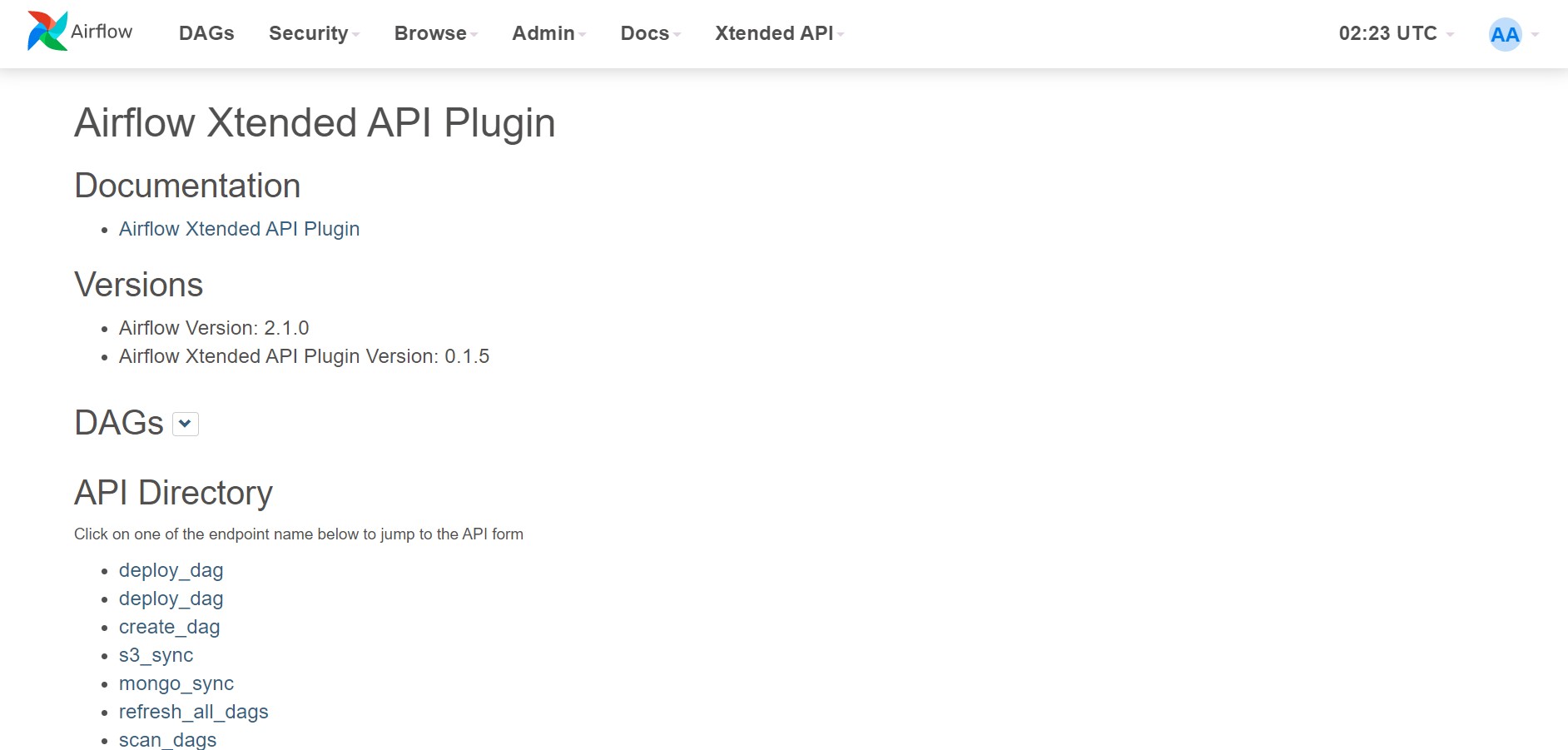

After installing the plugin's Python package and restarting your Airflow webserver, you will see a 'Reference Docs' link under the 'Xtended API' tab on the Airflow webserver homepage. That page documents all supported API endpoints. You can also navigate to it directly using the link below.

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/xtended_api/

All supported endpoints are exposed in the following format:

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/{ENDPOINT_NAME}
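The URL format above can be captured in a small helper. This is a minimal sketch with a hypothetical function name, `xtended_url`; it uses `urllib.parse.urlencode` so that query values containing characters such as `&` or `/` are escaped correctly.

```python
from urllib.parse import urlencode


def xtended_url(host, port, endpoint, params=None):
    """Return the URL of an Xtended API endpoint, with optional query params."""
    url = f"http://{host}:{port}/api/v1/xtended/{endpoint}"
    if params:
        # urlencode percent-escapes values so they are safe inside a query string.
        url += "?" + urlencode(params)
    return url
```

For example, `xtended_url("localhost", 8080, "delete_dag", {"dag_id": "test_dag"})` yields a URL in the same shape as the `curl` examples in this document.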

The following endpoints are currently supported.

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/deploy_dag

curl -X POST -H 'Content-Type: multipart/form-data' \
  --user "username:password" \
  -F 'dag_file=@test_dag.py' \
  -F 'force=y' \
  -F 'unpause=y' \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/deploy_dag

{
  "message": "DAG File [<module '{MODULE_NAME}' from '/{DAG_FOLDER}/exam.py'>] has been uploaded",
  "status": "success"
}
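For readers who want to see what `curl -F` does in the request above, here is a sketch that builds an equivalent multipart body using only the Python standard library. The helper names `encode_multipart` and `build_deploy_request` are hypothetical; the form field names (`dag_file`, `force`, `unpause`) come from the `curl` example. The request is constructed but not sent, and the authorization header is left out for brevity.

```python
import urllib.request
import uuid


def encode_multipart(fields, files, boundary=None):
    """Encode text fields and files as a multipart/form-data body.

    `fields` maps names to text values; `files` maps names to
    (filename, bytes) tuples. Returns (body, content_type).
    """
    boundary = boundary or uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"'
            f"\r\n\r\n{value}\r\n".encode("utf-8")
        )
    for name, (filename, content) in files.items():
        header = (
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"; '
            f'filename="{filename}"\r\n'
            "Content-Type: application/octet-stream\r\n\r\n"
        ).encode("utf-8")
        parts.append(header + content + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


def build_deploy_request(base_url, dag_filename, dag_source, options=None):
    """Build (without sending) a deploy_dag upload request."""
    # Default options mirror the curl example: force=y and unpause=y.
    fields = {"force": "y", "unpause": "y"} if options is None else options
    body, content_type = encode_multipart(
        fields, {"dag_file": (dag_filename, dag_source)}
    )
    return urllib.request.Request(
        f"{base_url}/api/v1/xtended/deploy_dag",
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
```

In practice a higher-level HTTP client would usually handle the encoding; the manual version is shown only to make the wire format visible.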

curl -X GET --user "username:password" \
  'http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/deploy_dag?dag_file_url={DAG_FILE_URL}&filename=test_dag.py&force=on&unpause=on'

{
  "message": "DAG File [<module '{MODULE_NAME}' from '/{DAG_FOLDER}/exam.py'>] has been uploaded",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/create_dag

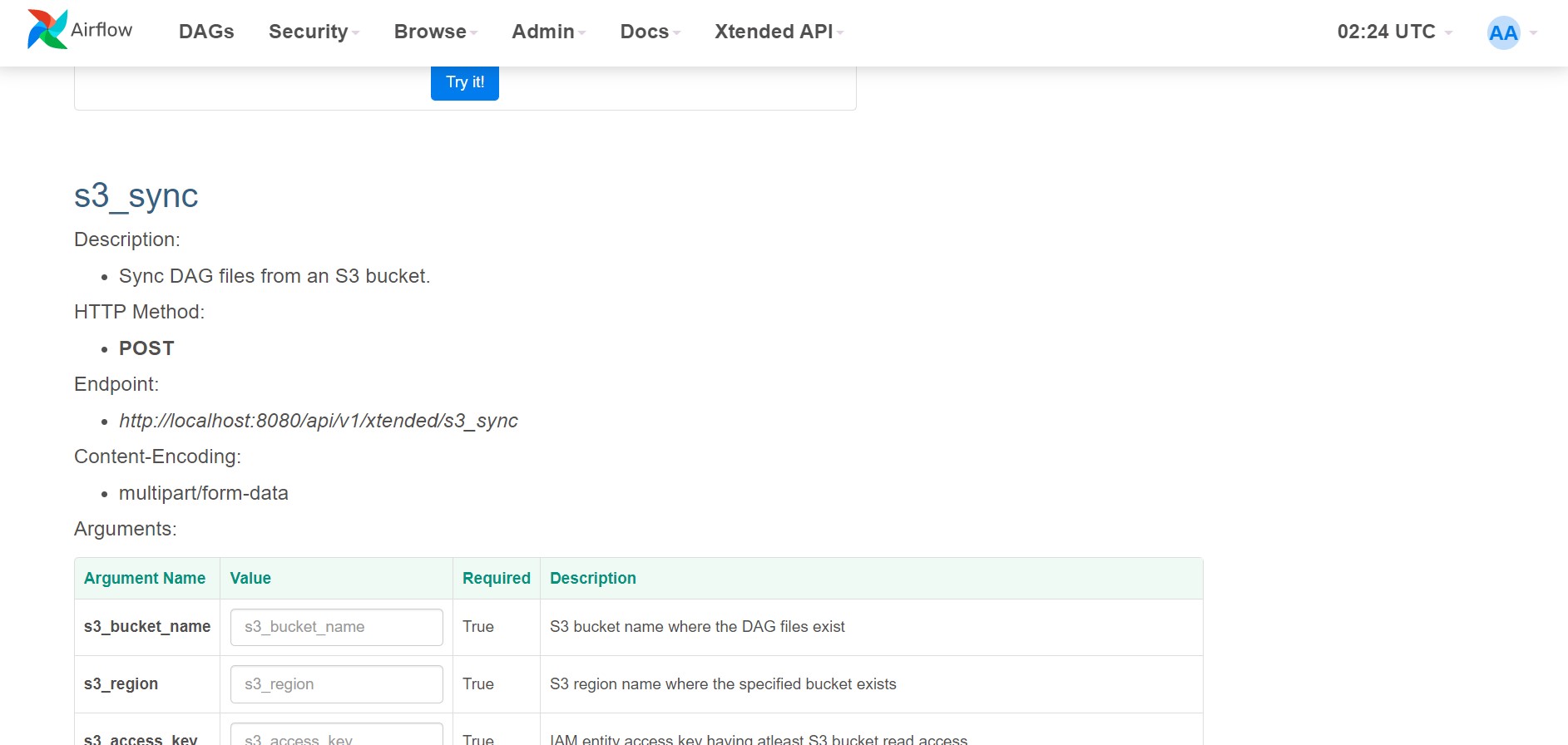

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/s3_sync

curl -X POST -H 'Content-Type: multipart/form-data' \
  --user "username:password" \
  -F 's3_bucket_name=test-bucket' \
  -F 's3_region=us-east-1' \
  -F 's3_access_key={IAM_ACCESS_KEY}' \
  -F 's3_secret_key={IAM_SECRET_KEY}' \
  -F 'skip_purge=y' \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/s3_sync

{
  "message": "dag files synced from s3",
  "sync_status": {
    "synced": ["test_dag0.py", "test_dag1.py", "test_dag2.py"],
    "failed": []
  },
  "status": "success"
}
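A client calling the sync endpoints will typically want to check both the top-level `status` and the `failed` list. The sketch below shows one way to do that, assuming the response has the shape shown in the example above; `summarize_sync` is a hypothetical helper, not part of the plugin.

```python
def summarize_sync(response: dict) -> dict:
    """Summarize a sync response shaped like the example in this README."""
    if response.get("status") != "success":
        raise RuntimeError(f"sync failed: {response.get('message')}")
    sync_status = response.get("sync_status", {})
    synced = list(sync_status.get("synced", []))
    failed = list(sync_status.get("failed", []))
    # A call can report "success" overall and still list individual failures.
    return {"synced": synced, "failed": failed, "all_ok": not failed}
```

The input would be the decoded JSON body of the response, for example the result of `json.loads` on the text returned by the endpoint.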

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/mongo_sync

curl -X POST -H 'Content-Type: multipart/form-data' \
  --user "username:password" \
  -F 'connection_string={MONGO_SERVER_CONNECTION_STRING}' \
  -F 'db_name=test_db' \
  -F 'collection_name=test_collection' \
  -F 'field_dag_source=dag_source' \
  -F 'field_filename=dag_filename' \
  -F 'skip_purge=y' \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/mongo_sync

{
  "message": "dag files synced from mongo",
  "sync_status": {
    "synced": ["test_dag0.py", "test_dag1.py", "test_dag2.py"],
    "failed": []
  },
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/refresh_all_dags

curl -X GET --user "username:password" \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/refresh_all_dags

{
  "message": "All DAGs are now up-to-date!!",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/scan_dags

curl -X GET --user "username:password" \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/scan_dags

{
  "message": "Ondemand DAG scan complete!!",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/purge_dags

curl -X GET --user "username:password" \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/purge_dags

{
  "message": "DAG directory purged!!",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/delete_dag

curl -X GET --user "username:password" \
  'http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/delete_dag?dag_id=test_dag&filename=test_dag.py'

{
  "message": "DAG [dag_test] deleted",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/upload_file

curl -X POST -H 'Content-Type: multipart/form-data' \
  --user "username:password" \
  -F 'file=@test_file.py' \
  -F 'force=y' \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/upload_file

{
  "message": "File [/{DAG_FOLDER}/dag_test.txt] has been uploaded",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/restart_failed_task

curl -X GET --user "username:password" \
  'http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/restart_failed_task?dag_id=test_dag&run_id=test_run'

{
  "message": {
    "failed_task_count": 1,
    "clear_task_count": 7
  },
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/kill_running_tasks

curl -X GET --user "username:password" \
  'http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/kill_running_tasks?dag_id=test_dag&run_id=test_run&task_id=test_task'

{
  "message": "tasks in test_run killed!!",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/run_task_instance

curl -X POST -H 'Content-Type: multipart/form-data' \
  --user "username:password" \
  -F 'dag_id=test_dag' \
  -F 'run_id=test_run' \
  -F 'tasks=test_task' \
  http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/run_task_instance

{
  "execution_date": "2021-06-21T05:50:19.740803+0000",
  "status": "success"
}

http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/skip_task_instance

curl -X GET --user "username:password" \
  'http://{AIRFLOW_HOST}:{AIRFLOW_PORT}/api/v1/xtended/skip_task_instance?dag_id=test_dag&run_id=test_run&task_id=test_task'

{
  "message": "<TaskInstance: test_dag.test_task 2021-06-21 19:59:34.638794+00:00 [skipped]> skipped!!",
  "status": "success"
}

Huge shout out to these awesome plugins, which contributed to the growth of the Airflow ecosystem and also inspired this plugin.

