A growing collection of useful helpers and fully functional, ready-made abstractions for @react-three/fiber. If you make a component that is generic enough to be useful to others, think about CONTRIBUTING!

```bash
npm install @react-three/drei
```

> [!IMPORTANT]
> This package uses the stand-alone three-stdlib instead of three/examples/jsm.

Basic usage:

```jsx
import { PerspectiveCamera, PositionalAudio, ... } from '@react-three/drei'
```

React Native:

```jsx
import { PerspectiveCamera, PositionalAudio, ... } from '@react-three/drei/native'
```

The native entry point of the library does not export Html or Loader. The default entry point targets the web and does export Html and Loader.


Cameras

PerspectiveCamera

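```tsx
type Props = Omit<JSX.IntrinsicElements['perspectiveCamera'], 'children'> & {
  // Registers the camera as the default, so fiber renders with it
  makeDefault?: boolean
  // Stops responsiveness; you have to calculate the aspect ratio yourself
  manual?: boolean
  // Children follow the camera, or are hidden while filming if you pass a function
  children?: React.ReactNode | ((texture: THREE.Texture) => React.ReactNode)
  // Number of frames to render into the FBO
  frames?: number
  // Resolution of the FBO
  resolution?: number
  // Optional environment map for functional use
  envMap?: THREE.Texture
}
```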

A responsive THREE.PerspectiveCamera that can set itself as the default.

```jsx
<PerspectiveCamera makeDefault {...props} />
<mesh />
```

You can also give it children, which will occupy the same position as the camera and follow along as it moves.

```jsx
<PerspectiveCamera makeDefault {...props}>
  <mesh />
</PerspectiveCamera>
```

You can also drive it manually. It then won't be responsive, and you have to calculate the aspect ratio yourself.

```jsx
<PerspectiveCamera manual aspect={...} onUpdate={(c) => c.updateProjectionMatrix()} />
```

You can use the PerspectiveCamera to film contents into a RenderTarget, similar to CubeCamera. As a child you must provide a render function, which receives the texture as its first argument. The result of that function will not follow the camera; instead, it is set invisible while the FBO renders, to avoid issues where the meshes that receive the texture interfere with the capture.

```jsx
<PerspectiveCamera position={[0, 0, 10]}>
  {(texture) => (
    <mesh geometry={plane}>
      <meshBasicMaterial map={texture} />
    </mesh>
  )}
</PerspectiveCamera>
```

OrthographicCamera

A responsive THREE.OrthographicCamera that can set itself as the default.

```jsx
<OrthographicCamera makeDefault {...props}>
  <mesh />
</OrthographicCamera>
```

You can use the OrthographicCamera to film contents into a RenderTarget; it has the same API as PerspectiveCamera.

```jsx
<OrthographicCamera position={[0, 0, 10]}>
  {(texture) => (
    <mesh geometry={plane}>
      <meshBasicMaterial map={texture} />
    </mesh>
  )}
</OrthographicCamera>
```

CubeCamera

A THREE.CubeCamera that returns its texture as a render-prop. It makes children invisible while rendering to the internal buffer so that they are not included in the reflection.

```tsx
type Props = JSX.IntrinsicElements['group'] & {
  frames?: number
  resolution?: number
  near?: number
  far?: number
  envMap?: THREE.Texture
  fog?: Fog | FogExp2
  children: (tex: Texture) => React.ReactNode
}
```

Using the frames prop, you can control whether this camera renders indefinitely or statically (a given number of times).

If you have two static objects in the scene, set frames={2}, for instance, so that both objects get to "see" one another in the reflections, which takes multiple renders.

If you have moving objects, unset the prop and use a smaller resolution instead.
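The frames budget behaves like a counter that is checked once per frame. The standalone sketch below illustrates that reading of the prop; `createFrameGate` is a hypothetical helper, not part of drei, and the assumption is that each `true` result corresponds to one render of the internal cube camera.

```typescript
// Hypothetical helper illustrating a "render N times" budget like the frames prop:
// Infinity (prop unset) renders on every frame, a finite number renders that many times, then stops.
function createFrameGate(frames: number = Infinity): () => boolean {
  let count = 0
  return () => {
    if (frames === Infinity || count < frames) {
      count++
      return true
    }
    return false
  }
}

// With frames = 2, only the first two ticks render
const gate = createFrameGate(2)
const rendered = [gate(), gate(), gate()]
```

With frames={2}, each of two static objects gets a second pass in which the other's reflection already exists; with the prop unset, the gate never closes.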

```jsx
<CubeCamera>
  {(texture) => (
    <mesh>
      <sphereGeometry />
      <meshStandardMaterial envMap={texture} />
    </mesh>
  )}
</CubeCamera>
```

Controls

If available, controls have damping enabled by default. They manage their own updates, remove themselves on unmount and are compatible with the frameloop="demand" canvas flag. They inherit all props from their underlying THREE controls. They are the first effects to run, before all other useFrame callbacks, so that other components can mutate the camera on top of them.

Some controls allow you to set makeDefault, similar to, for instance, PerspectiveCamera. This sets the controls field in @react-three/fiber's root store, which makes it easier for other parts of the app to know about the controls and respond to them. Some drei components already take it into account, like CameraShake, Gizmo and TransformControls.

```jsx
<CameraControls makeDefault />
// elsewhere, in a component under the same Canvas:
const controls = useThree((state) => state.controls)
```

Drei currently exports OrbitControls, MapControls, TrackballControls, ArcballControls, FlyControls, DeviceOrientationControls, PointerLockControls, FirstPersonControls, CameraControls and FaceControls.

All controls react to the default camera. If you have a <PerspectiveCamera makeDefault /> in your scene, they will control it. If you need to inject an imperative camera or one that isn't the default, use the camera prop: <OrbitControls camera={MyCamera} />.

PointerLockControls additionally supports a selector prop, which binds the click handlers that activate the controls to elements other than document (e.g. a 'Click here to play' button). All elements matching the selector will activate the controls. It also centers raycast events by default, so regular onPointerOver/etc. events on meshes continue to work.

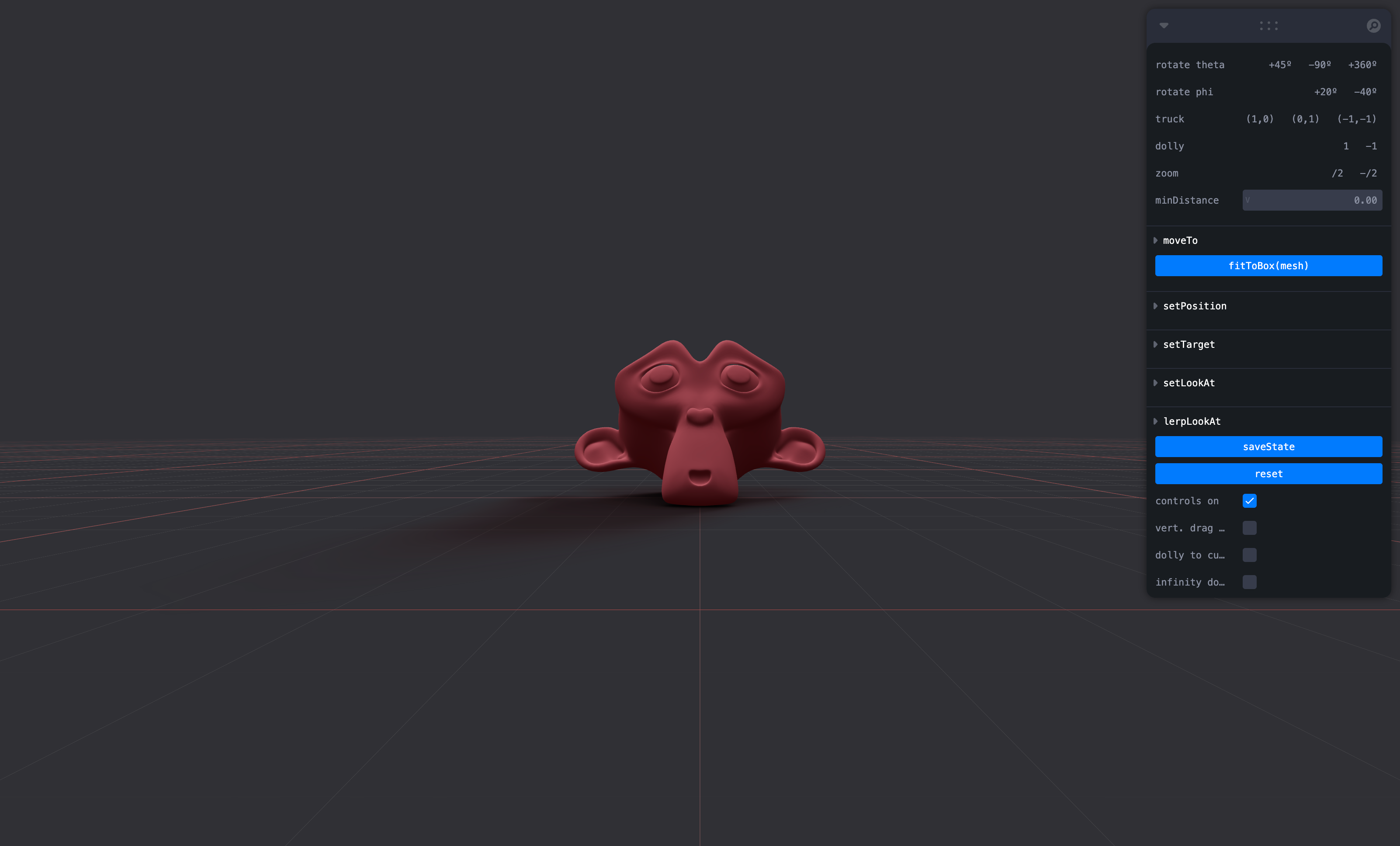

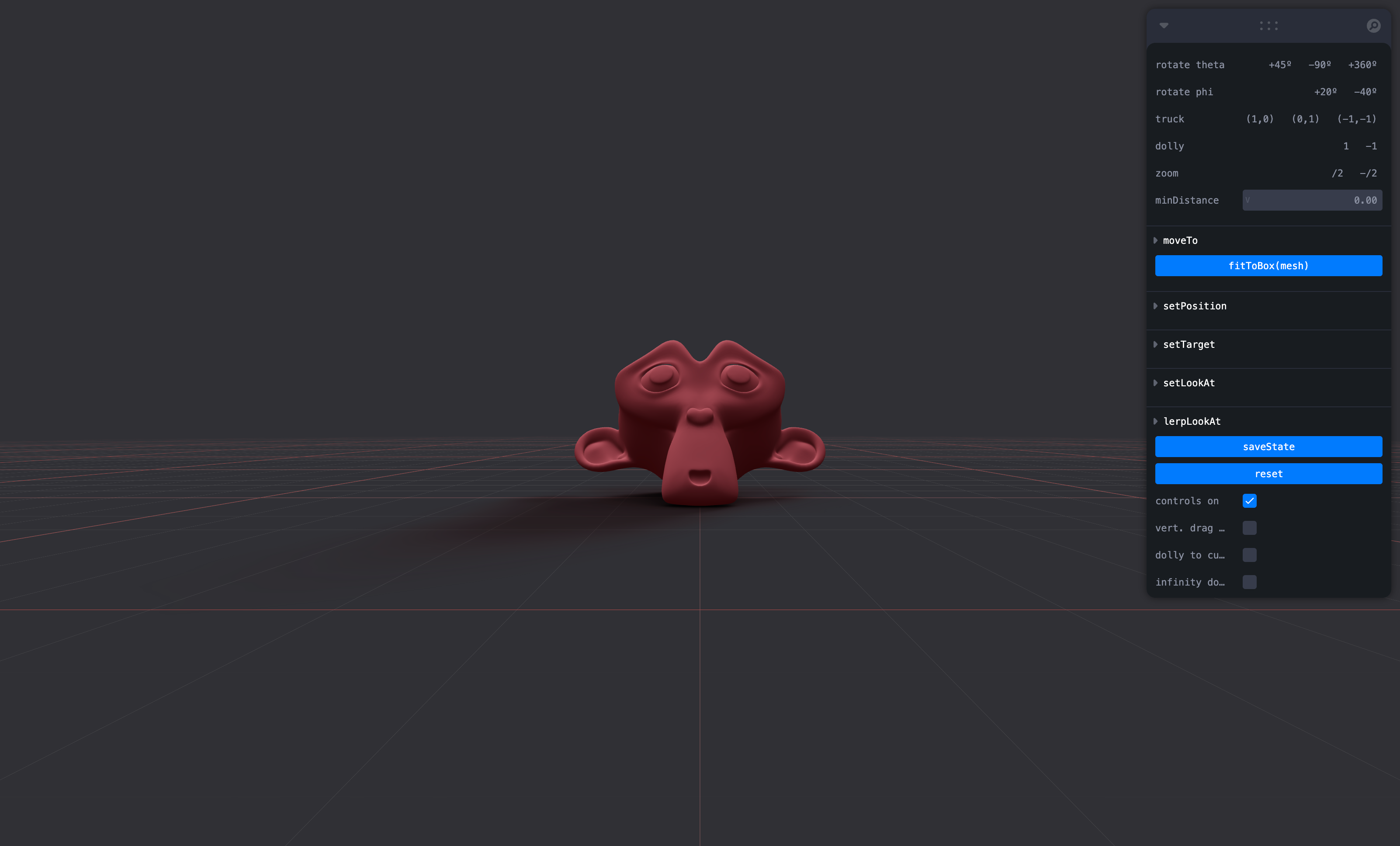

CameraControls

This is an implementation of the camera-controls library.

```jsx
<CameraControls />
```

```tsx
type CameraControlsProps = {
  camera?: PerspectiveCamera | OrthographicCamera
  domElement?: HTMLElement
  makeDefault?: boolean
  onStart?: (e?: { type: 'controlstart' }) => void
  onEnd?: (e?: { type: 'controlend' }) => void
  onChange?: (e?: { type: 'update' }) => void
}
```

ScrollControls

```tsx
type ScrollControlsProps = {
  eps?: number
  horizontal?: boolean
  infinite?: boolean
  pages?: number
  distance?: number
  damping?: number
  maxSpeed?: number
  prepend?: boolean
  enabled?: boolean
  style?: React.CSSProperties
  children: React.ReactNode
}
```

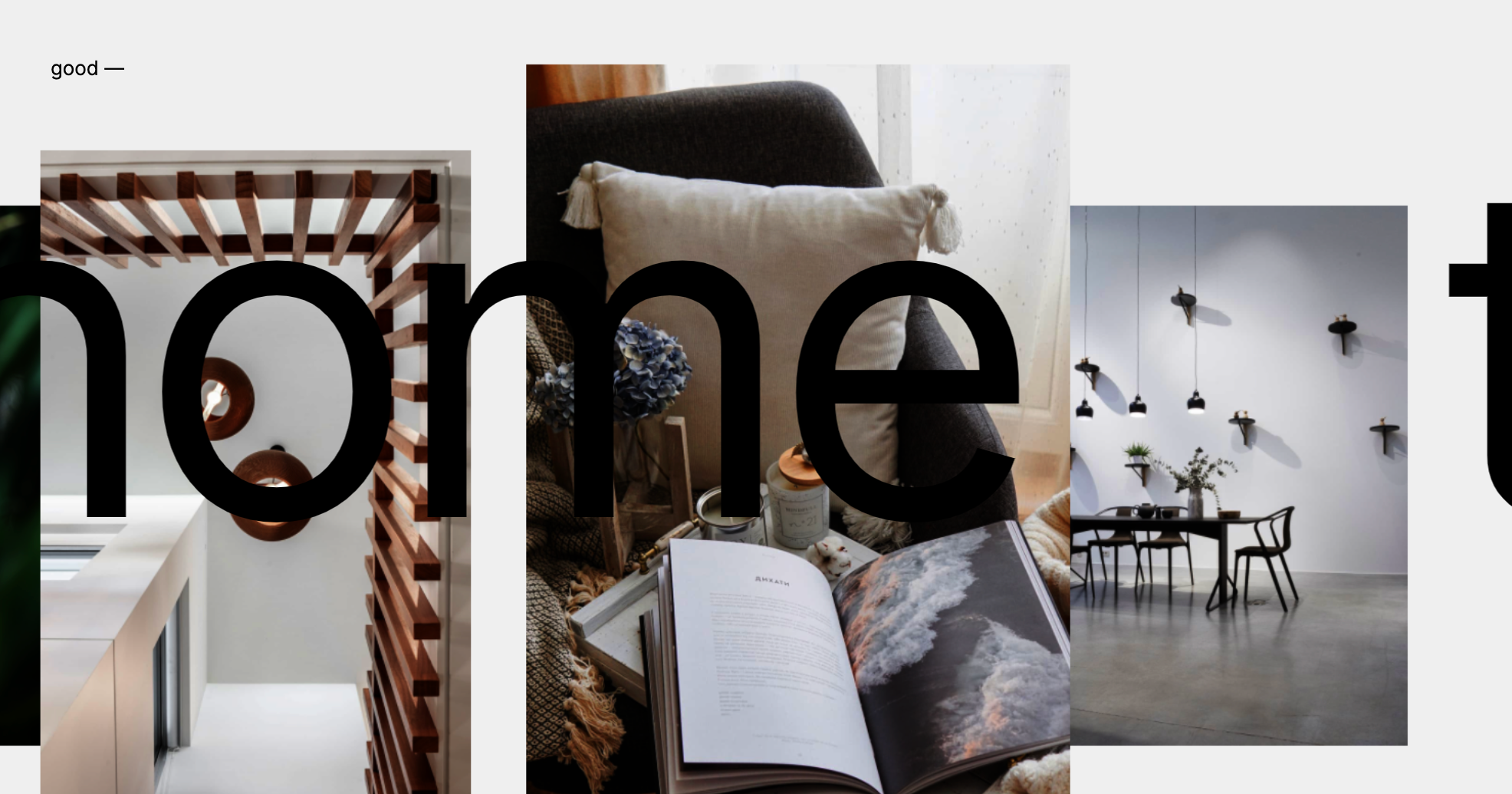

Scroll controls create an HTML scroll container in front of the canvas. Everything you drop into the <Scroll> component will be affected.

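You can listen and react to scroll with the useScroll hook, which gives you useful data like the current scroll offset, delta and functions for range finding: range, curve and visible. The latter functions are especially useful if you want to react to the scroll offset, for instance to fade things in and out as they move in or out of view.

```jsx
import { useRef } from 'react'
import { useFrame, useThree } from '@react-three/fiber'
import { ScrollControls, Scroll, useScroll } from '@react-three/drei'

function Scene() {
  const viewport = useThree((state) => state.viewport)
  return (
    <ScrollControls pages={3} damping={0.1}>
      {/* Canvas contents in here will *not* scroll, but receive useScroll! */}
      <SomeModel />
      <Scroll>
        {/* Canvas contents in here will scroll along */}
        <Foo position={[0, 0, 0]} />
        <Foo position={[0, viewport.height, 0]} />
        <Foo position={[0, viewport.height * 2, 0]} />
      </Scroll>
      <Scroll html>
        {/* DOM contents in here will scroll along */}
        <h1>html in here (optional)</h1>
        <h1 style={{ top: '100vh' }}>second page</h1>
        <h1 style={{ top: '200vh' }}>third page</h1>
      </Scroll>
    </ScrollControls>
  )
}

function Foo(props) {
  const ref = useRef()
  const data = useScroll()
  useFrame(() => {
    // data.offset: current scroll position between 0 and 1 (damped)
    // data.delta: current delta between 0 and 1 (damped)
    // 0 at the start, rising to 1 once 1/3 of the scroll distance is reached
    const a = data.range(0, 1 / 3)
    // starts rising at 1/3 of the scroll distance and reaches 1 at 2/3
    const b = data.range(1 / 3, 1 / 3)
    // same as above, with a margin of 0.1 on both ends
    const c = data.range(1 / 3, 1 / 3, 0.1)
    // moves 0 -> 1 -> 0 across the selected range
    const d = data.curve(1 / 3, 1 / 3)
    // same as above, with a margin of 0.1 on both ends
    const e = data.curve(1 / 3, 1 / 3, 0.1)
    // true while the offset is within the range, false otherwise
    const f = data.visible(2 / 3, 1 / 3)
    // visible also accepts a margin
    const g = data.visible(2 / 3, 1 / 3, 0.1)
  })
  return <mesh ref={ref} {...props} />
}
```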
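To make the numbers concrete, here is a standalone sketch of what range, curve and visible compute, written as plain functions that take the scroll offset explicitly. It is not drei's source; the formulas are assumptions consistent with the behavior described above: a linear 0 to 1 ramp over the range, a 0 to 1 to 0 sine bump for curve, and a margin that widens the range on both ends.

```typescript
// Standalone sketch of the scroll helpers; offset is the normalized scroll position (0..1).
// range: 0 before the (margin-widened) range, 1 after it, a linear ramp in between
function range(offset: number, from: number, distance: number, margin = 0): number {
  const start = from - margin
  const end = start + distance + margin * 2
  if (offset <= start) return 0
  if (offset >= end) return 1
  return (offset - start) / (end - start)
}

// curve: rises 0 -> 1 -> 0 across the range (a sine bump over range())
function curve(offset: number, from: number, distance: number, margin = 0): number {
  return Math.sin(range(offset, from, distance, margin) * Math.PI)
}

// visible: true while the offset lies inside the (margin-widened) range
function visible(offset: number, from: number, distance: number, margin = 0): boolean {
  const start = from - margin
  const end = start + distance + margin * 2
  return offset >= start && offset <= end
}

// Halfway through the second third of the scroll distance:
const b = range(0.5, 1 / 3, 1 / 3) // about 0.5
const d = curve(0.5, 1 / 3, 1 / 3) // about 1, the top of the bump
```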

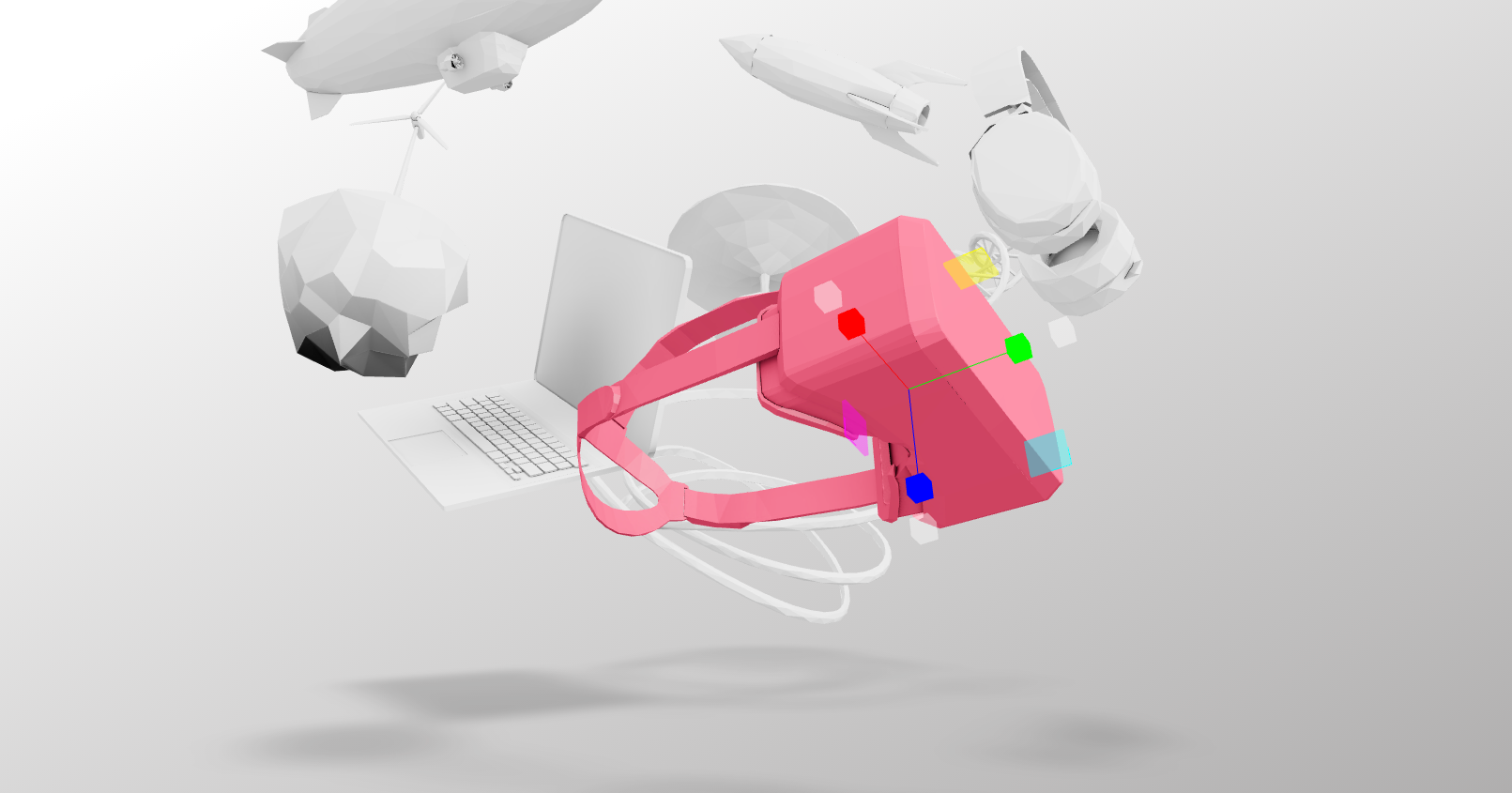

PresentationControls

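Semi-OrbitControls with spring physics, polar zoom and snap-back, for presentational purposes. These controls do not turn the camera but spin their contents. They will not suddenly come to rest when they reach limits like OrbitControls do, but rather smoothly anticipate the stopping position.

```jsx
<PresentationControls
  enabled={true} // the controls can be disabled by setting this to false
  global={false} // spin globally or by dragging the model
  cursor={true} // whether to toggle the cursor style on drag
  snap={false} // snap back to center (can also be a spring config)
  speed={1} // speed factor
  zoom={1} // zoom factor when half the polar max is reached
  rotation={[0, 0, 0]} // default rotation
  polar={[0, Math.PI / 2]} // vertical limits
  azimuth={[-Infinity, Infinity]} // horizontal limits
  config={{ mass: 1, tension: 170, friction: 26 }} // spring config
  domElement={events.connected} // the DOM element the events attach to
>
  <mesh />
</PresentationControls>
```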

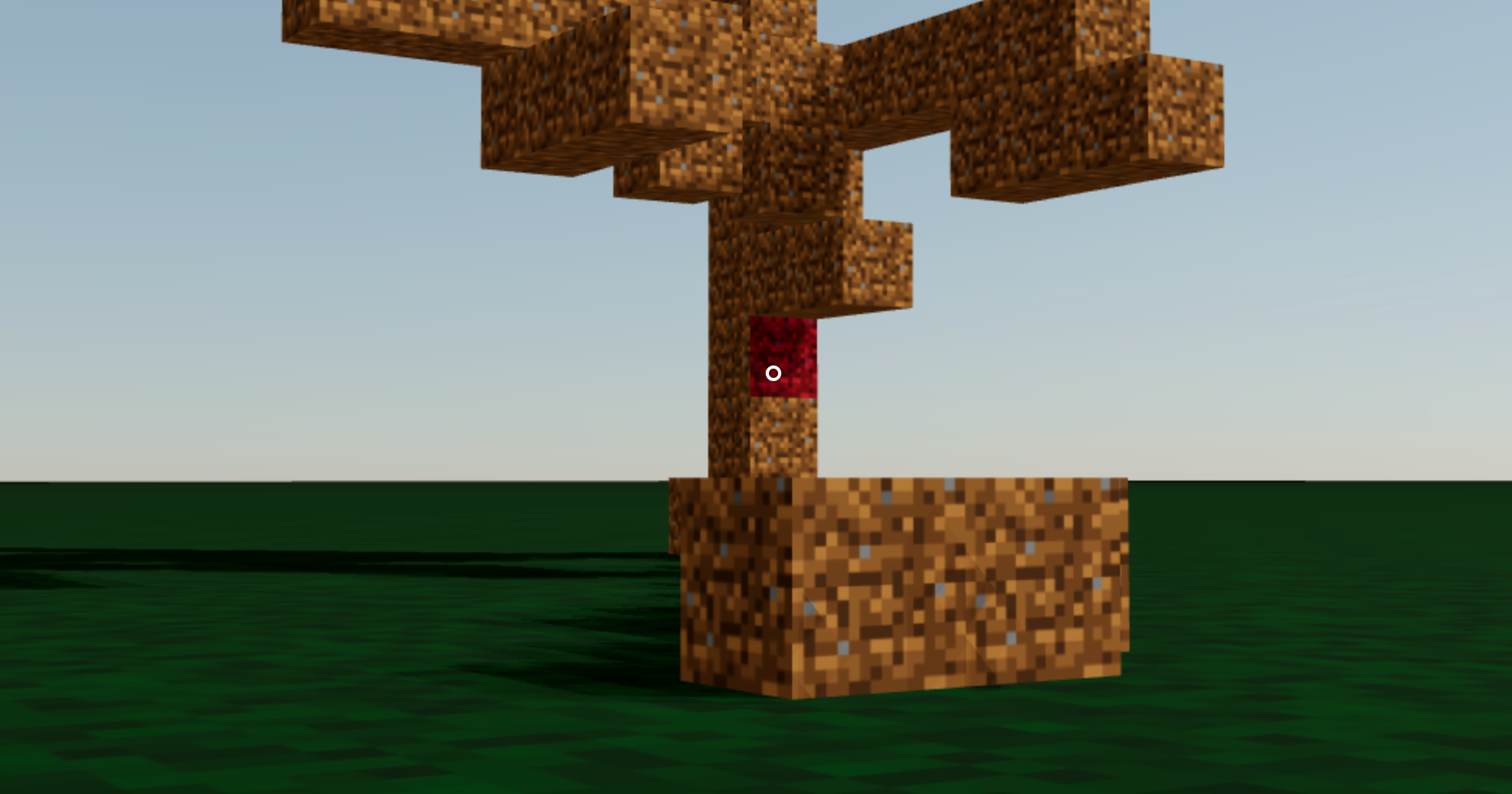

KeyboardControls

A rudimentary keyboard controller which distributes your defined data model to the useKeyboardControls hook. It's a rather simple way to get started with keyboard input.

```tsx
type KeyboardControlsState<T extends string = string> = { [K in T]: boolean }

type KeyboardControlsEntry<T extends string = string> = {
  name: T
  keys: string[]
  up?: boolean
}

type KeyboardControlsProps = {
  map: KeyboardControlsEntry[]
  children: React.ReactNode
  onChange: (name: string, pressed: boolean, state: KeyboardControlsState) => void
  domElement?: HTMLElement
}
```

You start by wrapping your app, or scene, into <KeyboardControls>.

```tsx
import { useMemo } from 'react'
import { KeyboardControls, KeyboardControlsEntry, useKeyboardControls } from '@react-three/drei'

enum Controls {
  forward = 'forward',
  back = 'back',
  left = 'left',
  right = 'right',
  jump = 'jump',
}

function App() {
  const map = useMemo<KeyboardControlsEntry<Controls>[]>(
    () => [
      { name: Controls.forward, keys: ['ArrowUp', 'KeyW'] },
      { name: Controls.back, keys: ['ArrowDown', 'KeyS'] },
      { name: Controls.left, keys: ['ArrowLeft', 'KeyA'] },
      { name: Controls.right, keys: ['ArrowRight', 'KeyD'] },
      { name: Controls.jump, keys: ['Space'] },
    ],
    [],
  )
  return (
    <KeyboardControls map={map}>
      {/* Scene stands in for your app or scene */}
      <Scene />
    </KeyboardControls>
  )
}
```

You can respond to input reactively. The hook uses zustand (with the subscribeWithSelector middleware), so all of zustand's rules apply:

```tsx
function Foo() {
  // Re-renders whenever the forward key is pressed or released
  const forwardPressed = useKeyboardControls<Controls>((state) => state.forward)
  // ...
}
```

Or respond transiently, either with subscribe, which returns a function to unsubscribe so you can pair it with useEffect for cleanup, or with get, which fetches fresh state non-reactively.

```tsx
function Foo() {
  const [sub, get] = useKeyboardControls<Controls>()

  useEffect(() => {
    // Logs every change of the forward key; the returned unsubscribe runs on unmount
    return sub(
      (state) => state.forward,
      (pressed) => {
        console.log('forward', pressed)
      },
    )
  }, [])

  useFrame(() => {
    // Reads the latest state without causing a re-render
    const pressed = get().back
  })
}
```
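The sub/get pair follows a common selector-subscription pattern. The sketch below is a hypothetical, dependency-free store written only to show that pattern: a listener fires only when its selected slice changes, `subscribe` returns the unsubscribe function that useEffect can use as cleanup, and `get` reads the latest state without subscribing. It is not zustand's implementation.

```typescript
// Hypothetical, dependency-free store illustrating the sub/get pattern (not zustand's code)
type Listener<S> = (state: S, prev: S) => void

function createStore<S extends object>(initial: S) {
  let state = initial
  const listeners = new Set<Listener<S>>()

  // Non-reactive read of the latest state
  function get(): S {
    return state
  }

  // Merge a partial update and notify every listener with the new and previous state
  function set(partial: Partial<S>): void {
    const prev = state
    state = { ...state, ...partial }
    listeners.forEach((listener) => listener(state, prev))
  }

  // Calls onChange only when the selected slice changes; returns the unsubscribe function
  function subscribe<T>(selector: (s: S) => T, onChange: (value: T) => void): () => void {
    const listener: Listener<S> = (next, prev) => {
      const value = selector(next)
      if (!Object.is(value, selector(prev))) onChange(value)
    }
    listeners.add(listener)
    return () => {
      listeners.delete(listener)
    }
  }

  return { get, set, subscribe }
}

// Usage mirroring the component above
const store = createStore({ forward: false, back: false })
const log: boolean[] = []
const unsubscribe = store.subscribe((s) => s.forward, (pressed) => log.push(pressed))
store.set({ forward: true }) // forward changed: logged
store.set({ back: true }) // forward unchanged: not logged
unsubscribe()
store.set({ forward: false }) // unsubscribed: not logged
```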

FaceControls

The camera follows your face.

Prerequisite: wrap it in a FaceLandmarker provider.

```jsx
<FaceLandmarker>
  {/* ... */}
  <FaceControls />
</FaceLandmarker>
```

```tsx
type FaceControlsProps = {
  camera?: THREE.Camera
  autostart?: boolean
  webcam?: boolean
  webcamVideoTextureSrc?: VideoTextureSrc
  manualUpdate?: boolean
  manualDetect?: boolean
  onVideoFrame?: (e: THREE.Event) => void
  makeDefault?: boolean
  smoothTime?: number
  offset?: boolean
  offsetScalar?: number
  eyes?: boolean
  eyesAsOrigin?: boolean
  depth?: number
  debug?: boolean
  facemesh?: FacemeshProps
}

type FaceControlsApi = THREE.EventDispatcher & {
  detect: (video: HTMLVideoElement, time: number) => void
  computeTarget: () => THREE.Object3D
  update: (delta: number, target?: THREE.Object3D) => void
  facemeshApiRef: RefObject<FacemeshApi>
  webcamApiRef: RefObject<WebcamApi>
  play: () => void
  pause: () => void
}
```

FaceControls events

Two THREE.Events are dispatched on the FaceControls ref object:

- { type: "stream", stream: MediaStream }: when the webcam's .getUserMedia() promise is resolved
- { type: "videoFrame", texture: THREE.VideoTexture, time: number }: each time a new video frame is sent to the compositor (thanks to rVFC)

> [!NOTE]
> Internally, FaceControls uses requestVideoFrameCallback (rVFC); you may need a polyfill (for Firefox).

FaceControls[manualDetect]

By default, detect is called on each "videoFrame" event. You can disable this with manualDetect and call detect yourself.

For example:

```jsx
const controls = useThree((state) => state.controls)

// Runs detection on the frame that was just sent to the compositor
const onVideoFrame = useCallback(
  (event) => {
    controls.detect(event.texture.source.data, event.time)
  },
  [controls],
)

<FaceControls makeDefault manualDetect onVideoFrame={onVideoFrame} />
```

FaceControls[manualUpdate]

By default, the update method is called on every animation frame, from useFrame. You can disable this with manualUpdate and call it yourself:

```jsx
const controls = useThree((state) => state.controls)

useFrame((_, delta) => {
  controls.update(delta)
})

<FaceControls makeDefault manualUpdate />
```

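Or, if you want your own custom damping, use the computeTarget method and update the camera position and rotation yourself:

```jsx
import { useState } from 'react'
import * as THREE from 'three'
import { useFrame, useThree } from '@react-three/fiber'
import { easing } from 'maath'

function CustomDamping() {
  const camera = useThree((state) => state.camera)
  const controls = useThree((state) => state.controls) // FaceControls with makeDefault
  const [current] = useState(() => new THREE.Object3D())

  useFrame((_, delta) => {
    const target = controls?.computeTarget()
    if (target) {
      // Damp towards the target, then copy the result to the camera
      const eps = 1e-9
      easing.damp3(current.position, target.position, 0.25, delta, undefined, undefined, eps)
      easing.dampE(current.rotation, target.rotation, 0.25, delta, undefined, undefined, eps)
      camera.position.copy(current.position)
      camera.rotation.copy(current.rotation)
    }
  })
  return null
}
```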
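If you would rather not depend on maath, a simpler alternative is frame-rate independent exponential smoothing. The sketch below swaps in that technique (it is not what easing.damp3 does, which is spring-based); `smooth` and `smooth3` are hypothetical helpers, and `halfLife` is the time in seconds it takes to close half of the remaining distance.

```typescript
// Frame-rate independent exponential smoothing (a simpler stand-in for maath's easing).
// halfLife: seconds needed to close half of the remaining gap.
function smooth(current: number, target: number, halfLife: number, delta: number, eps = 1e-9): number {
  if (halfLife <= 0) return target
  const t = 1 - Math.pow(2, -delta / halfLife)
  const next = current + (target - current) * t
  // Snap once within eps, so the value actually settles
  return Math.abs(target - next) < eps ? target : next
}

type Vec3 = [number, number, number]

// Applies the same smoothing to each component of a position
function smooth3(current: Vec3, target: Vec3, halfLife: number, delta: number): Vec3 {
  return current.map((c, i) => smooth(c, target[i], halfLife, delta)) as Vec3
}
```

Because the step depends only on delta / halfLife, two frames of 0.25 s land where one frame of 0.5 s would. Rotations need more care: smoothing Euler angles component-wise ignores wrap-around at ±π.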

MotionPathControls

MotionPathControls takes a path of Bézier or Catmull-Rom curves as input and animates the passed object along that path. For staging or presentation purposes, it can be configured to look at an external object by passing a focus property (a ref or a position).

```tsx
type MotionPathProps = JSX.IntrinsicElements['group'] & {
  curves?: THREE.Curve<THREE.Vector3>[] // array of 3D curves
  debug?: boolean // show debug helpers
  object?: React.MutableRefObject<THREE.Object3D> // object to animate
  focus?: [x: number, y: number, z: number] | React.MutableRefObject<THREE.Object3D> // position or object to look at
  offset?: number // position along the path, from 0 (start) to 1 (end)
  smooth?: boolean | number
  eps?: number // damping tolerance
  damping?: number // damping factor for movement along the curve
  focusDamping?: number // damping factor for lookAt
  maxSpeed?: number // maximum damping speed
}
```

You can use MotionPathControls with declarative curves.

```jsx
function App() {
  const poi = useRef()
  return (
    <group>
      <MotionPathControls offset={0} focus={poi} damping={0.2}>
        <cubicBezierCurve3 v0={[-5, -5, 0]} v1={[-10, 0, 0]} v2={[0, 3, 0]} v3={[6, 3, 0]} />
        <cubicBezierCurve3 v0={[6, 3, 0]} v1={[10, 5, 5]} v2={[5, 5, 5]} v3={[5, 5, 5]} />
      </MotionPathControls>
      <Box args={[1, 1, 1]} ref={poi} />
    </group>
  )
}
```

Or with imperative curves.

```jsx
<MotionPathControls
  offset={0}
  focus={poi}
  damping={0.2}
  curves={[
    new THREE.CubicBezierCurve3(
      new THREE.Vector3(-5, -5, 0),
      new THREE.Vector3(-10, 0, 0),
      new THREE.Vector3(0, 3, 0),
      new THREE.Vector3(6, 3, 0)
    ),
    new THREE.CubicBezierCurve3(
      new THREE.Vector3(6, 3, 0),
      new THREE.Vector3(10, 5, 5),
      new THREE.Vector3(5, 3, 5),
      new THREE.Vector3(5, 5, 5)
    ),
  ]}
/>
```
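Each cubicBezierCurve3 segment is defined by a start point v0, two control points v1 and v2, and an end point v3. The sketch below evaluates such a segment with the cubic Bernstein form and walks the two-segment path from the imperative example; `cubicBezier` and `pathPoint` are hypothetical helpers. As a simplification, `pathPoint` gives each segment an equal share of the offset, whereas a THREE.CurvePath distributes the offset by curve length.

```typescript
type Vec3 = [number, number, number]
type Segment = [Vec3, Vec3, Vec3, Vec3] // v0, v1, v2, v3 as in cubicBezierCurve3

// Bernstein form: B(t) = (1-t)^3 v0 + 3(1-t)^2 t v1 + 3(1-t) t^2 v2 + t^3 v3, t in [0, 1]
function cubicBezier([v0, v1, v2, v3]: Segment, t: number): Vec3 {
  const u = 1 - t
  const w0 = u * u * u
  const w1 = 3 * u * u * t
  const w2 = 3 * u * t * t
  const w3 = t * t * t
  return v0.map((_, i) => w0 * v0[i] + w1 * v1[i] + w2 * v2[i] + w3 * v3[i]) as Vec3
}

// Hypothetical helper: maps a path-wide offset in [0, 1] onto the segments,
// giving each segment an equal share (THREE.CurvePath weights segments by length instead)
function pathPoint(segments: Segment[], offset: number): Vec3 {
  const clamped = Math.min(Math.max(offset, 0), 1)
  const scaled = clamped * segments.length
  const index = Math.min(Math.floor(scaled), segments.length - 1)
  return cubicBezier(segments[index], scaled - index)
}

// The two segments from the imperative example
const path: Segment[] = [
  [[-5, -5, 0], [-10, 0, 0], [0, 3, 0], [6, 3, 0]],
  [[6, 3, 0], [10, 5, 5], [5, 3, 5], [5, 5, 5]],
]
```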

You can exert full control with the useMotion hook. It lets you, for instance, define the current position along the path or your own lookAt. Keep in mind that MotionPathControls will still damp these values unless you set damping and focusDamping to 0; then you can also employ your own easing.

```tsx
type MotionState = {
  current: number // current position along the path
  path: THREE.CurvePath<THREE.Vector3> // the combined curve path
  focus: React.MutableRefObject<THREE.Object3D<THREE.Event>> | [x: number, y: number, z: number] | undefined
  object: React.MutableRefObject<THREE.Object3D<THREE.Event>> // the object being animated
  offset: number
  point: THREE.Vector3 // current point on the path
  tangent: THREE.Vector3 // current tangent of the path
  next: THREE.Vector3 // next point on the path
}

const state: MotionState = useMotion()
```

```jsx
function Loop() {
  const motion = useMotion()
  useFrame((state, delta) => {
    // Advance along the path and look towards the next point
    motion.current += delta
    motion.object.current.lookAt(motion.next)
  })
  return null
}

<MotionPathControls>
  <cubicBezierCurve3 v0={[-5, -5, 0]} v1={[-10, 0, 0]} v2={[0, 3, 0]} v3={[6, 3, 0]} />
  <Loop />
</MotionPathControls>
```

Gizmos

GizmoHelper

Used by widgets that visualize and control camera position.

Two example gizmos are included: GizmoViewport and GizmoViewcube, and useGizmoContext makes it easy to create your own.

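Make sure to set the makeDefault prop on your controls; in that case, you do not have to define the onTarget and onUpdate props.

```jsx
<GizmoHelper
  alignment="bottom-right" // widget alignment within the scene
  margin={[80, 80]} // widget margins (X, Y)
  // onUpdate: called during camera animation
  // onTarget: returns the current camera target (e.g. from OrbitControls) to center the animation
  // renderPriority: keeps the helper from disappearing if another useFrame(..., 1) exists
>
  <GizmoViewport axisColors={['red', 'green', 'blue']} labelColor="black" />
  {/* alternative: <GizmoViewcube /> */}
</GizmoHelper>
```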

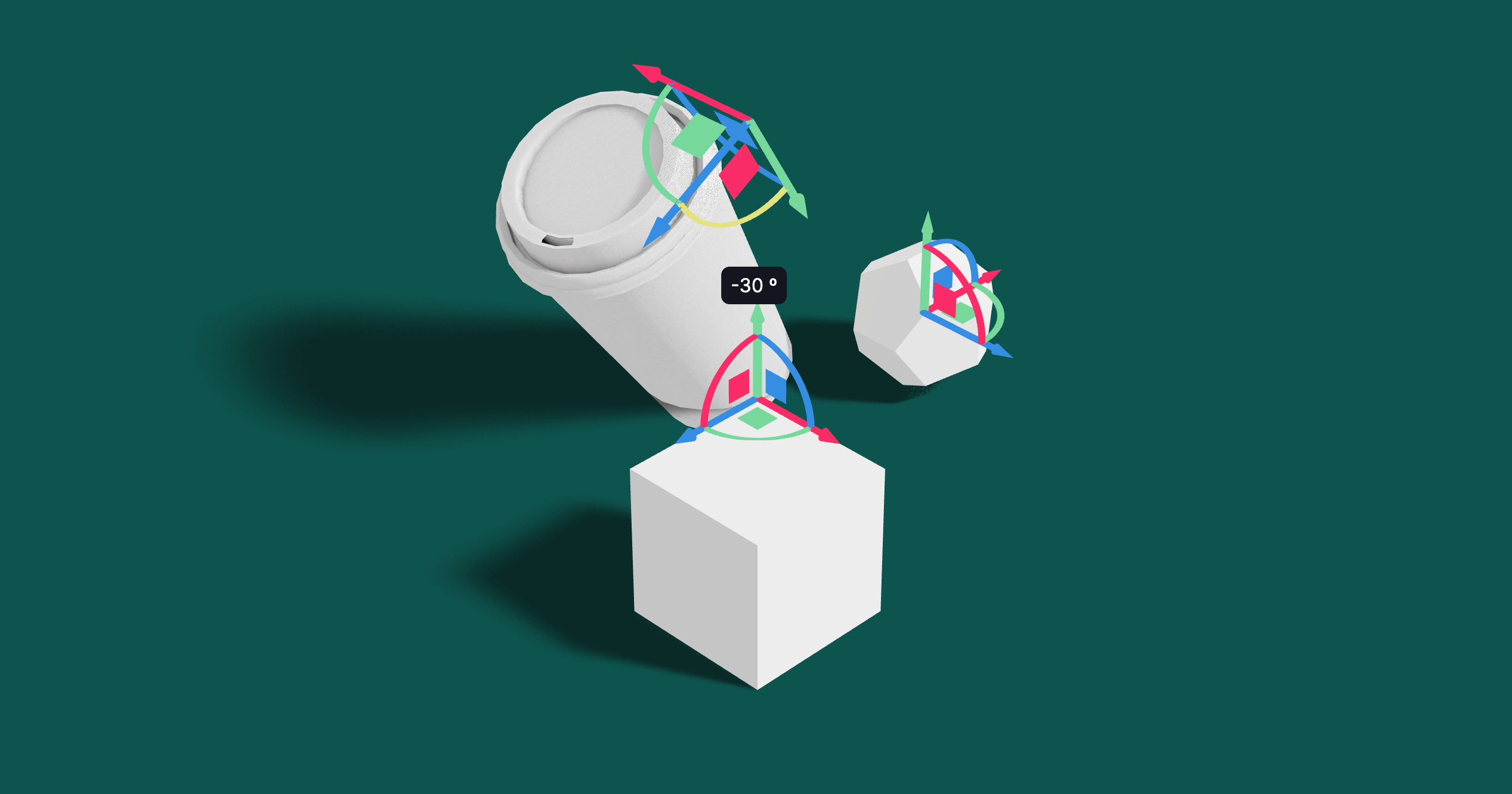

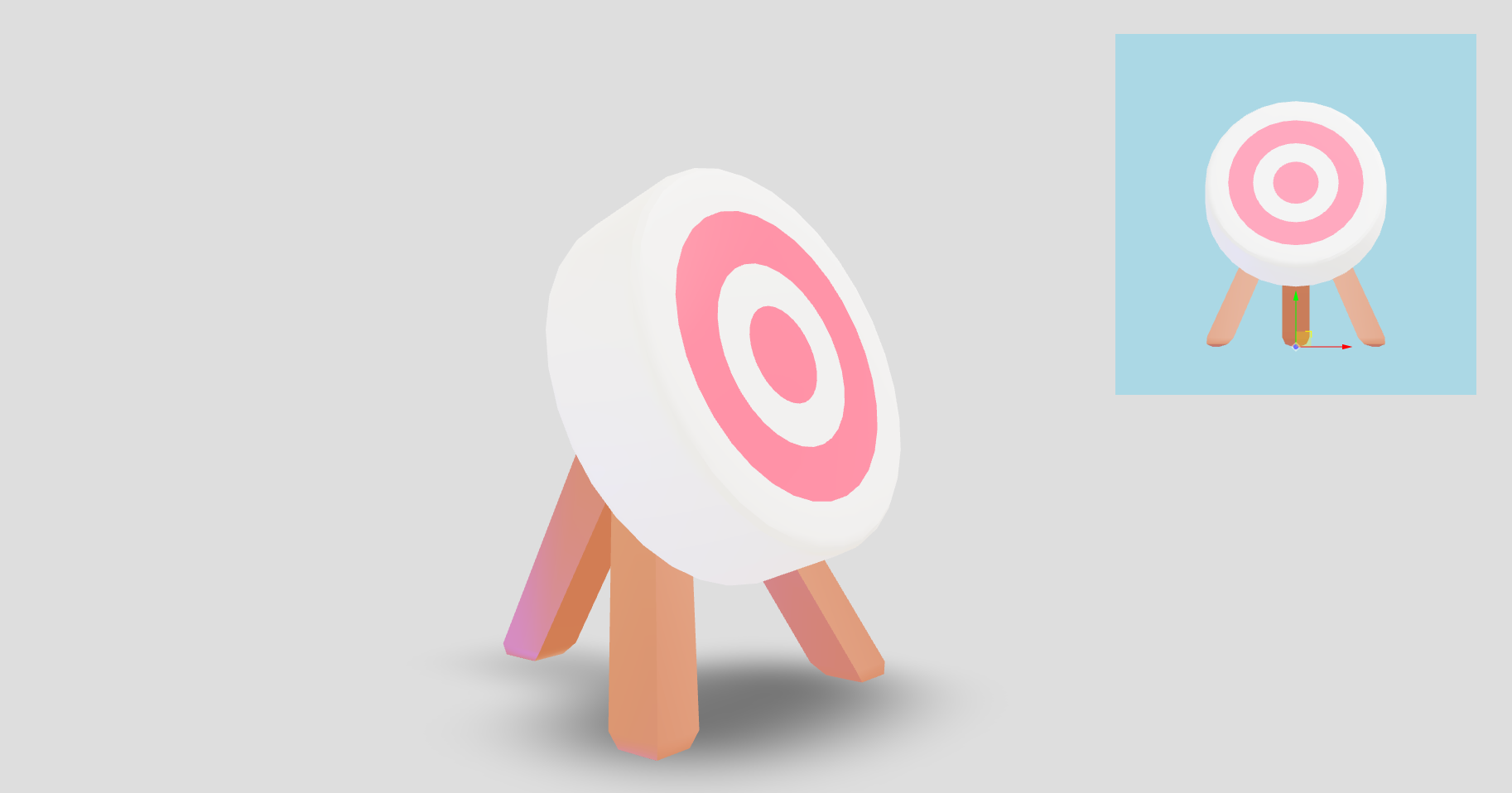

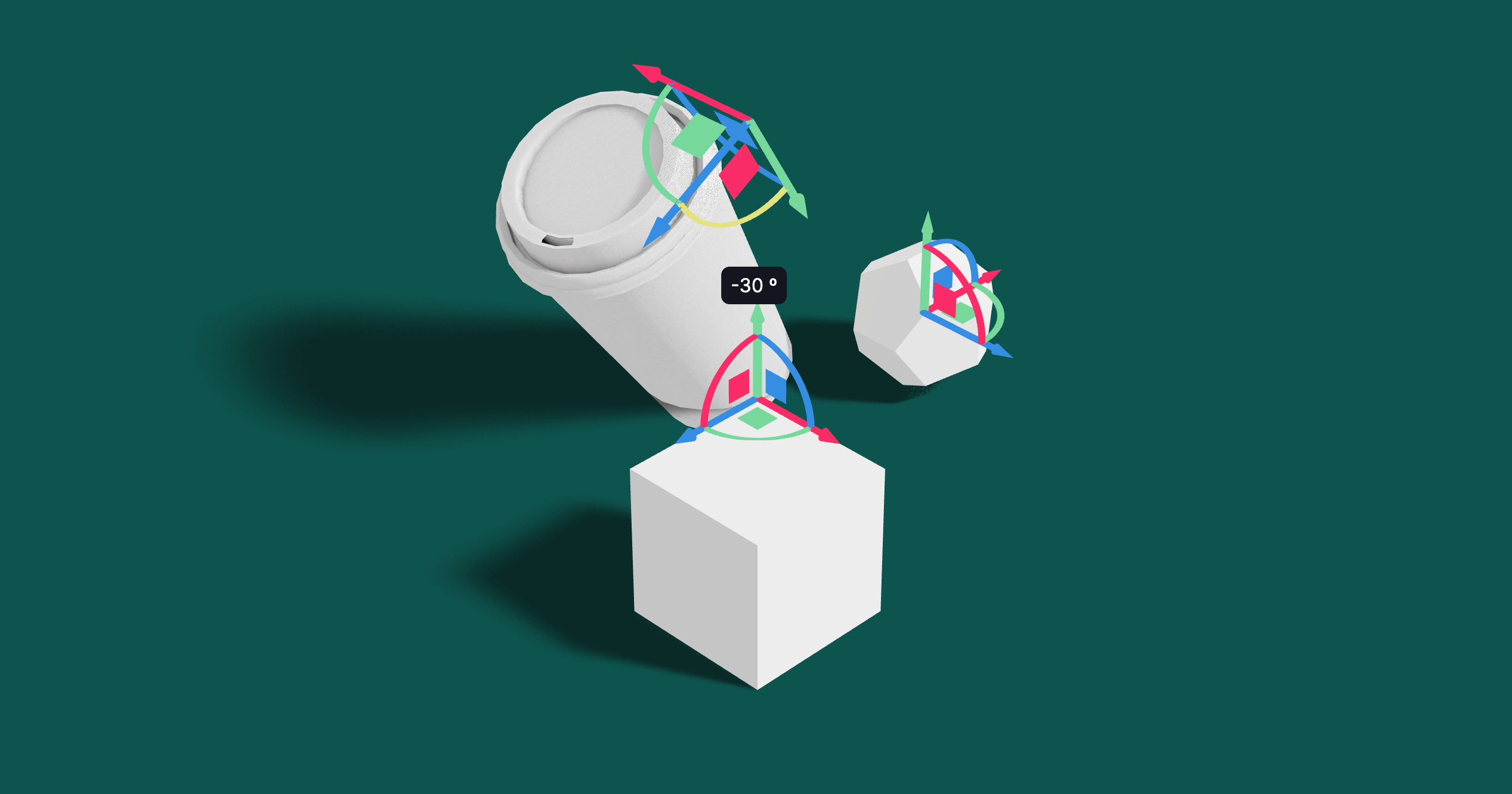

PivotControls

Controls for rotating and translating objects. These controls stick to the object they transform, and by offsetting or anchoring them you can form a pivot. This control has HTML annotations for some transforms and supports [tab] for rounded values while dragging.

```tsx
type PivotControlsProps = {
  enabled?: boolean
  scale?: number
  lineWidth?: number
  fixed?: boolean
  offset?: [number, number, number]
  rotation?: [number, number, number]
  matrix?: THREE.Matrix4
  anchor?: [number, number, number]
  autoTransform?: boolean
  activeAxes?: [boolean, boolean, boolean]
  disableAxes?: boolean
  disableSliders?: boolean
  disableRotations?: boolean
  disableScaling?: boolean
  axisColors?: [string | number, string | number, string | number]
  hoveredColor?: string | number
  annotations?: boolean
  annotationsClass?: string
  onDragStart?: () => void
  // local matrix, local delta, world matrix, world delta
  onDrag?: (l: THREE.Matrix4, deltaL: THREE.Matrix4, w: THREE.Matrix4, deltaW: THREE.Matrix4) => void
  onDragEnd?: () => void
  depthTest?: boolean
  opacity?: number
  visible?: boolean
  userData?: { [key: string]: any }
  children?: React.ReactNode
}
```

```jsx
<PivotControls>
  <mesh />
</PivotControls>
```

You can use Pivot as a controlled component: switch autoTransform off, and you are then responsible for applying the matrix transform yourself. You can also leave autoTransform on and apply the matrix to foreign objects; in that case, Pivot can control objects that are not parented within it.

```jsx
function ControlledPivot() {
  const ref = useRef()
  // One matrix instance, kept across renders
  const [matrix] = useState(() => new THREE.Matrix4())
  return (
    <PivotControls
      ref={ref}
      matrix={matrix}
      autoTransform={false}
      // onDrag receives the local matrix first; copy it into your own matrix
      onDrag={(local) => matrix.copy(local)}
    />
  )
}
```

DragControls

You can use DragControls to make objects draggable in your scene. It supports locking the drag to specific axes, setting drag limits, and custom drag start, drag, and drag end events.

```tsx
type DragControlsProps = {
  autoTransform?: boolean
  matrix?: THREE.Matrix4
  axisLock?: 'x' | 'y' | 'z'
  dragLimits?: [[number, number] | undefined, [number, number] | undefined, [number, number] | undefined]
  onHover?: (hovering: boolean) => void
  onDragStart?: (origin: THREE.Vector3) => void
  onDrag?: (
    localMatrix: THREE.Matrix4,
    deltaLocalMatrix: THREE.Matrix4,
    worldMatrix: THREE.Matrix4,
    deltaWorldMatrix: THREE.Matrix4
  ) => void
  onDragEnd?: () => void
  children: React.ReactNode
}
```

```jsx
<DragControls>
  <mesh />
</DragControls>
```
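The dragLimits prop takes one optional [min, max] pair per axis. The sketch below shows that per-axis clamping on a plain position tuple; `clampToLimits` is a hypothetical helper, and whether DragControls applies the limits in local or world space is not covered here.

```typescript
type Vec3 = [number, number, number]
type Limit = [min: number, max: number] | undefined

// Hypothetical helper mirroring the shape of dragLimits:
// each axis gets an optional [min, max] pair, undefined leaves that axis free.
function clampToLimits(position: Vec3, limits: [Limit, Limit, Limit]): Vec3 {
  return position.map((value, axis) => {
    const limit = limits[axis]
    return limit ? Math.min(Math.max(value, limit[0]), limit[1]) : value
  }) as Vec3
}

// x limited to [-1, 1], y free, z limited to [0, 10]
const clamped = clampToLimits([5, -3, 100], [[-1, 1], undefined, [0, 10]])
```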

You can utilize DragControls as a controlled component by toggling autoTransform off, which then requires you to manage the matrix transformation manually. Alternatively, keeping autoTransform enabled allows you to apply the matrix to external objects, enabling DragControls to manage objects that are not directly parented within it.

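```jsx
function ControlledDrag() {
  const ref = useRef()
  // One matrix instance, kept across renders
  const [matrix] = useState(() => new THREE.Matrix4())
  return (
    <DragControls
      ref={ref}
      matrix={matrix}
      autoTransform={false}
      onDrag={(localMatrix) => matrix.copy(localMatrix)}
    >
      <mesh />
    </DragControls>
  )
}
```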

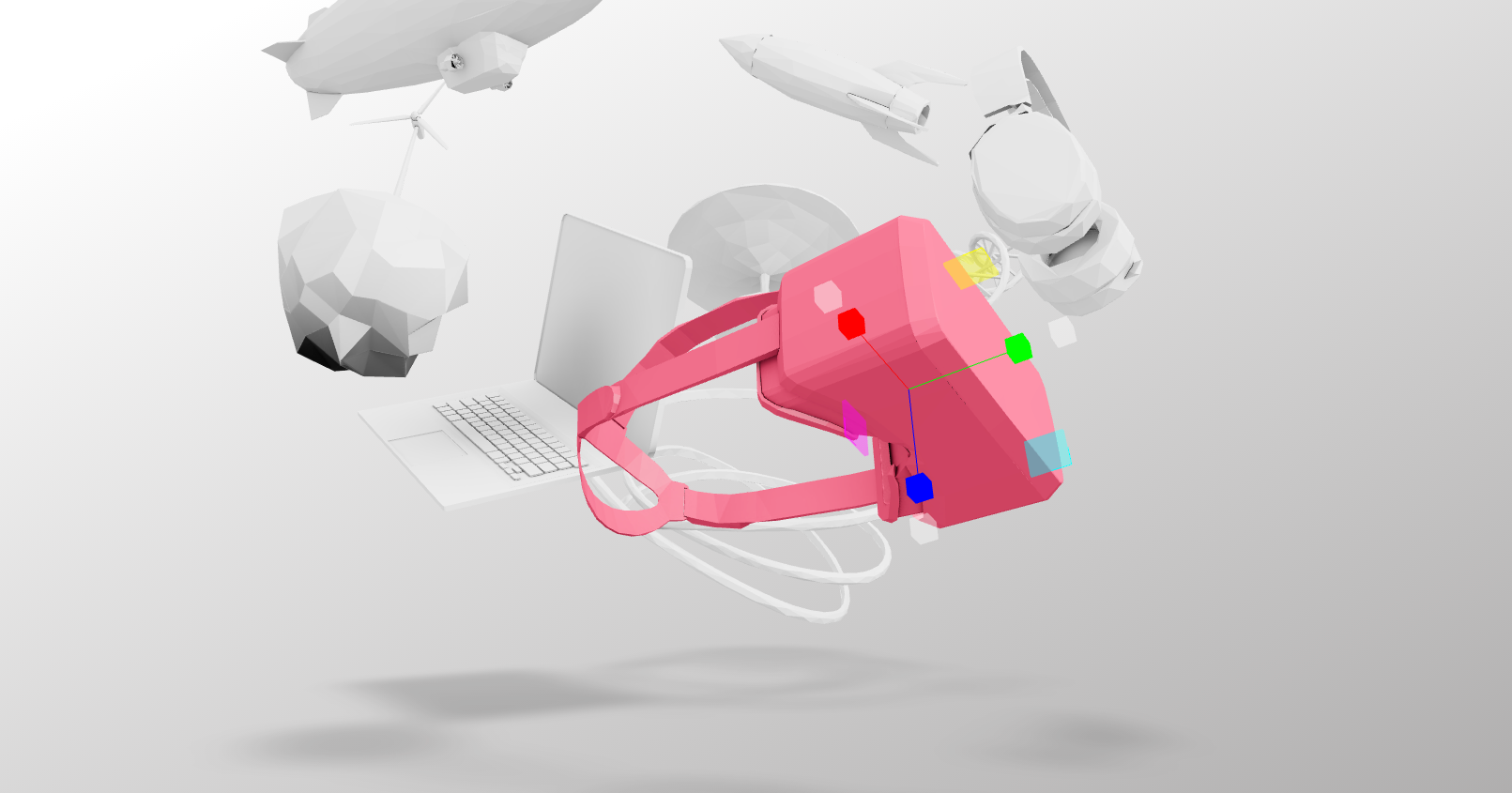

TransformControls

An abstraction around THREE.TransformControls.

You can wrap objects which then receive a transform gizmo.

```jsx
<TransformControls mode="translate">
  <mesh />
</TransformControls>
```

You could also reference the object, which might make it easier to exchange the target. The object then does not have to be part of the same sub-graph. References can be plain objects or React.MutableRefObjects.

```jsx
<TransformControls object={mesh} mode="translate" />
<mesh ref={mesh} />
```

If you are using other controls (Orbit, Trackball, etc.), you will notice how they interfere: dragging one affects the other. Default controls are temporarily disabled automatically while the user is pulling on the transform gizmo.

```jsx
<TransformControls mode="translate" />
<OrbitControls makeDefault />
```

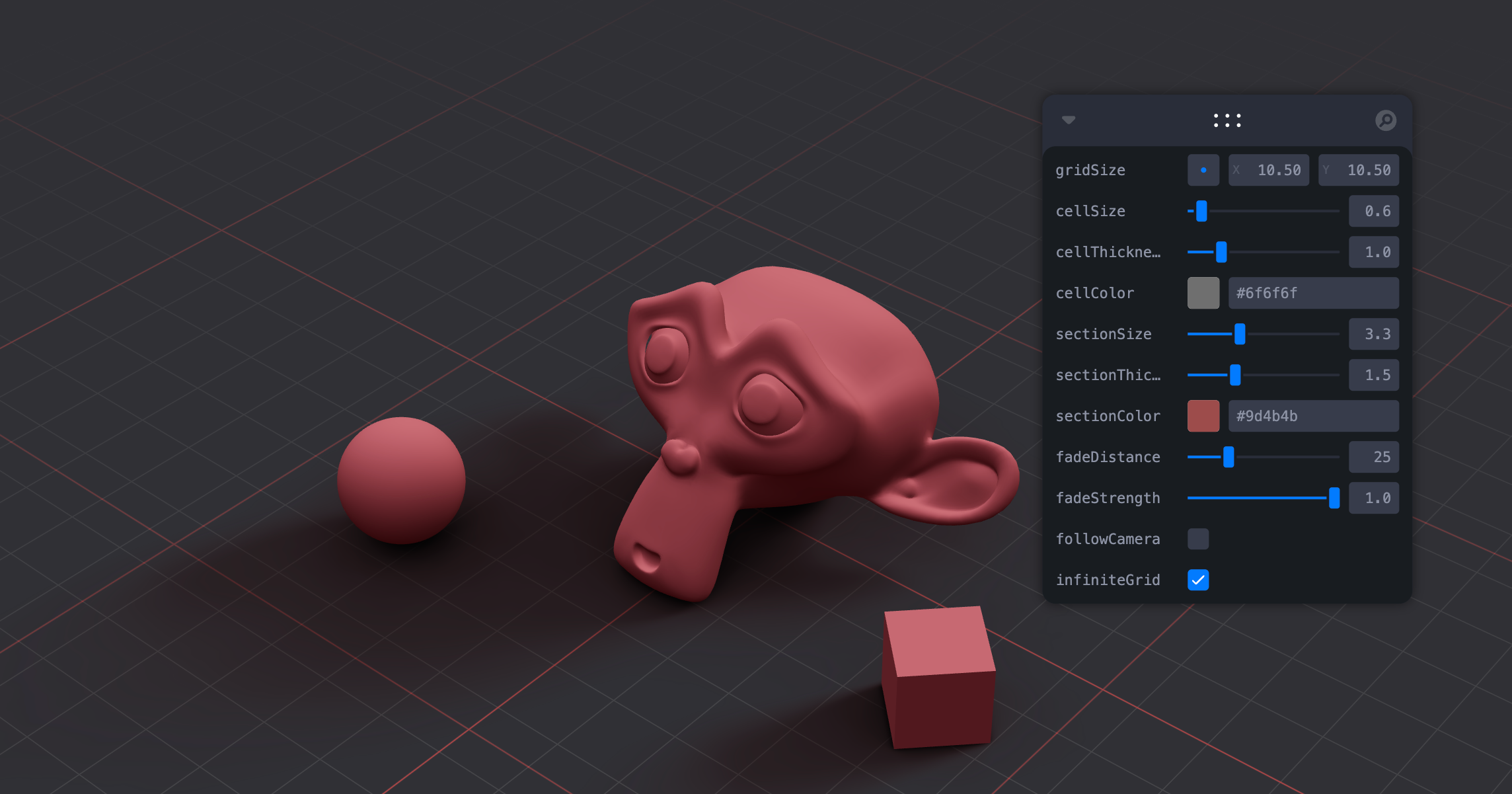

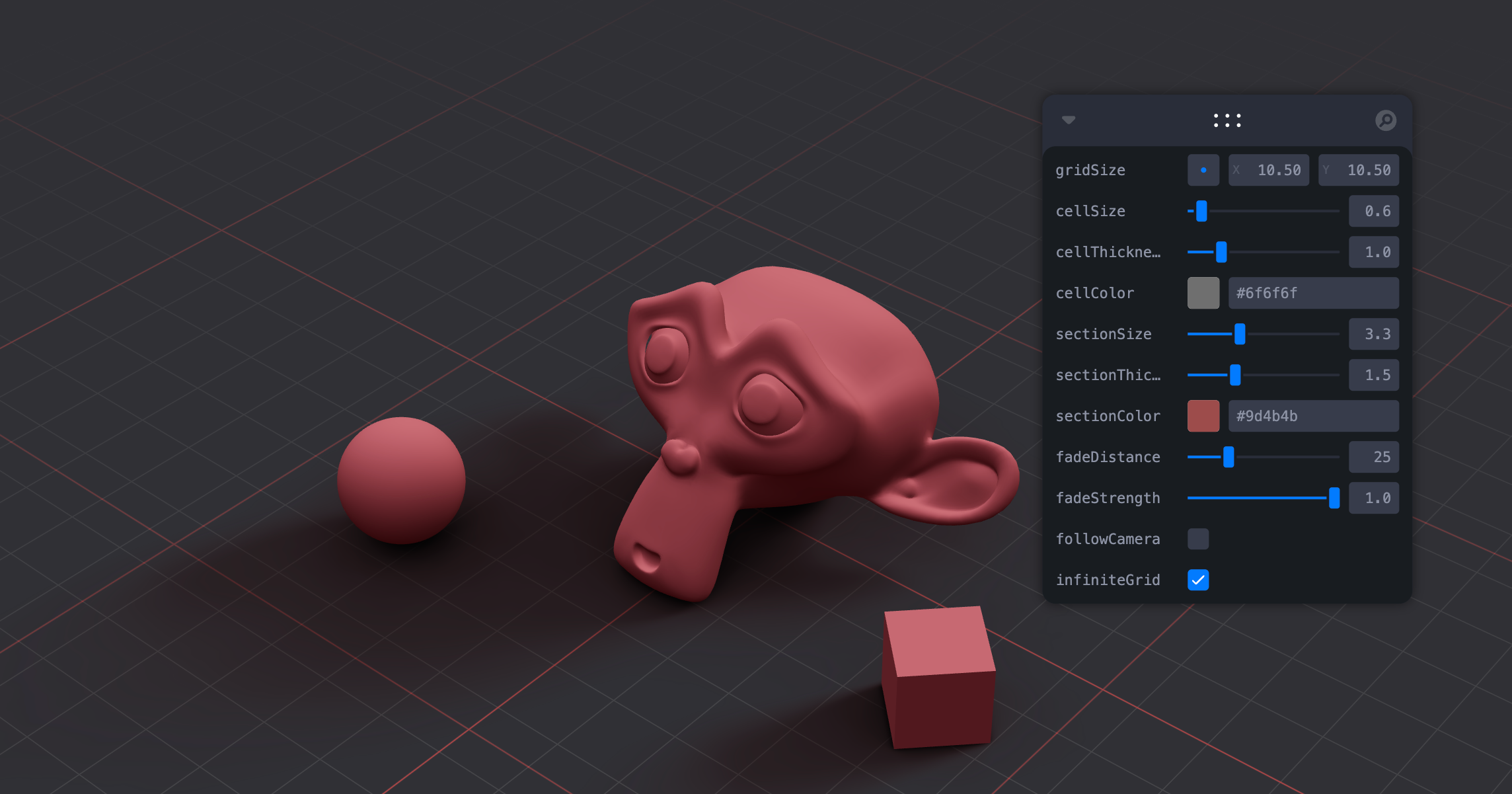

Grid

A y-up oriented, shader-based grid implementation.

```tsx
export type GridMaterialType = {
  cellSize?: number
  cellThickness?: number
  cellColor?: THREE.ColorRepresentation
  sectionSize?: number
  sectionThickness?: number
  sectionColor?: THREE.ColorRepresentation
  followCamera?: boolean
  infiniteGrid?: boolean
  fadeDistance?: number
  fadeStrength?: number
  fadeFrom?: number
}

export type GridProps = GridMaterialType & {
  args?: ConstructorParameters<typeof THREE.PlaneGeometry>
}
```

```jsx
<Grid />
```

Helper / useHelper

A hook for a quick way to add helpers to existing nodes in the scene. It handles removal of the helper on unmount and auto-updates it by default.

```jsx
const mesh = useRef()
useHelper(mesh, BoxHelper, 'cyan')
// pass a falsy value instead of the ref to hide the helper
useHelper(condition && mesh, BoxHelper, 'red')

<mesh ref={mesh} ... />
```

or with Helper:

```jsx
<mesh>
  <boxGeometry />
  <meshBasicMaterial />
  <Helper type={BoxHelper} args={['royalblue']} />
  <Helper type={VertexNormalsHelper} args={[1, 0xff0000]} />
</mesh>
```

Shapes

Plane, Box, Sphere, Circle, Cone, Cylinder, Tube, Torus, TorusKnot, Ring, Tetrahedron, Polyhedron, Icosahedron, Octahedron, Dodecahedron, Extrude, Lathe, Shape

Shortcuts for a mesh with a buffer geometry.

```jsx
<Box
  args={[1, 1, 1]} // args for the buffer geometry
  {...meshProps} // all THREE.Mesh props are valid
/>

<Plane args={[2, 2]} />

<Box material-color="hotpink" />

<Sphere>
  <meshStandardMaterial color="hotpink" />
</Sphere>
```

RoundedBox

A box buffer geometry with rounded corners, done with extrusion.

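```jsx
<RoundedBox
  args={[1, 1, 1]} // width, height, depth
  radius={0.05} // radius of the rounded corners
  smoothness={4} // number of curve segments
  bevelSegments={4} // number of bevel segments
  creaseAngle={0.4} // smooth normals everywhere except faces meeting at a larger angle
  {...meshProps} // all THREE.Mesh props are valid
>
  <meshPhongMaterial color="#f3f3f3" wireframe />
</RoundedBox>
```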

ScreenQuad

```jsx
<ScreenQuad>
  <myMaterial />
</ScreenQuad>
```

A triangle that fills the screen, ideal for full-screen fragment shader work (raymarching, postprocessing).

👉 Why a triangle?

👉 Use as a post processing mesh

Line

Renders a THREE.Line2 or THREE.LineSegments2 (depending on the value of segments).

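```jsx
<Line
  points={[[0, 0, 0], ...]} // array of points
  color="black"
  lineWidth={1} // in pixels
  segments // renders a THREE.LineSegments2 if true, otherwise a THREE.Line2
  dashed={false}
  vertexColors={[[0, 0, 0], ...]} // optional array of RGB values for each point
  {...lineProps} // all THREE.Line2 props are valid
  {...materialProps} // all THREE.LineMaterial props are valid
/>
```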

QuadraticBezierLine

Renders a THREE.Line2 using THREE.QuadraticBezierCurve3 for interpolation.

```jsx
<QuadraticBezierLine
  start={[0, 0, 0]} // starting point
  end={[10, 0, 10]} // ending point
  mid={[5, 0, 5]} // control point
  color="black"
  lineWidth={1}
  dashed={false}
  vertexColors={[[0, 0, 0], ...]}
  {...lineProps}
  {...materialProps}
/>
```

You can also update the line at runtime.

```jsx
function Foo() {
  const ref = useRef()
  useFrame((state) => {
    // Set the line's start and end points every frame
    ref.current.setPoints([0, 0, 0], [10, 0, 0])
  })
  return <QuadraticBezierLine ref={ref} start={[0, 0, 0]} end={[10, 0, 0]} />
}
```

CubicBezierLine

Renders a THREE.Line2 using THREE.CubicBezierCurve3 for interpolation.

```jsx
<CubicBezierLine
  start={[0, 0, 0]} // starting point
  end={[10, 0, 10]} // ending point
  midA={[5, 0, 0]} // first control point
  midB={[0, 0, 5]} // second control point
  color="black"
  lineWidth={1}
  dashed={false}
  vertexColors={[[0, 0, 0], ...]}
  {...lineProps}
  {...materialProps}
/>
```

CatmullRomLine

Renders a THREE.Line2 using THREE.CatmullRomCurve3 for interpolation.

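```jsx
<CatmullRomLine
  points={[[0, 0, 0], ...]} // array of points
  closed={false}
  curveType="centripetal" // one of "centripetal", "chordal" or "catmullrom"
  tension={0.5} // Catmull-Rom tension (0-1), used with curveType="catmullrom"
  color="black"
  lineWidth={1}
  dashed={false}
  vertexColors={[[0, 0, 0], ...]}
  {...lineProps}
  {...materialProps}
/>
```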

Facemesh

Renders an oriented MediaPipe face mesh:

```jsx
const faceLandmarkerResult = {
  "faceLandmarks": [
    [
      { "x": 0.5760777592658997, "y": 0.8639070391654968, "z": -0.030997956171631813 },
      { "x": 0.572094738483429, "y": 0.7886289358139038, "z": -0.07189624011516571 },
      // ...
    ],
  ],
  "faceBlendshapes": [
    // ...
  ],
  "facialTransformationMatrixes": [
    // ...
  ],
}

const points = faceLandmarkerResult.faceLandmarks[0]

<Facemesh points={points} />
```

```tsx
export type FacemeshProps = {
  points?: MediaPipePoints
  face?: MediaPipeFaceMesh
  width?: number
  height?: number
  depth?: number
  verticalTri?: [number, number, number]
  origin?: number | THREE.Vector3
  facialTransformationMatrix?: (typeof FacemeshDatas.SAMPLE_FACELANDMARKER_RESULT.facialTransformationMatrixes)[0]
  offset?: boolean
  offsetScalar?: number
  faceBlendshapes?: (typeof FacemeshDatas.SAMPLE_FACELANDMARKER_RESULT.faceBlendshapes)[0]
  eyes?: boolean
  eyesAsOrigin?: boolean
  debug?: boolean
}
```

Ref API:

```tsx
const api = useRef<FacemeshApi>()

<Facemesh ref={api} points={points} />
```

```tsx
type FacemeshApi = {
  meshRef: React.RefObject<THREE.Mesh>
  outerRef: React.RefObject<THREE.Group>
  eyeRightRef: React.RefObject<FacemeshEyeApi>
  eyeLeftRef: React.RefObject<FacemeshEyeApi>
}
```

For example, you can get the face mesh's world direction:

```tsx
// Rotates the local -Z axis into world space (normalized)
new THREE.Vector3(0, 0, -1).transformDirection(api.current.meshRef.current.matrixWorld)
```

or the L/R iris direction:

```tsx
new THREE.Vector3(0, 0, -1).transformDirection(api.current.eyeRightRef.current.irisDirRef.current.matrixWorld)
```

Abstractions

Image

A shader-based image component with auto-cover (similar to css/background: cover).

```tsx
export type ImageProps = Omit<JSX.IntrinsicElements['mesh'], 'scale'> & {
  segments?: number
  scale?: number | [number, number]
  color?: Color
  zoom?: number
  radius?: number
  grayscale?: number
  toneMapped?: boolean
  transparent?: boolean
  opacity?: number
  side?: THREE.Side
}
```

```jsx
function Foo() {
  const ref = useRef()
  useFrame(() => {
    // The material's properties can be animated every frame
    ref.current.material.radius = ...
    ref.current.material.zoom = ...
    ref.current.material.grayscale = ...
    ref.current.material.color.set(...)
  })
  return <Image ref={ref} url="/file.jpg" />
}
```

To make the material transparent:

```jsx
<Image url="/file.jpg" transparent opacity={0.5} />
```

You can have custom planes, for instance a rounded-corner plane.

```jsx
import { extend } from '@react-three/fiber'
import { Image } from '@react-three/drei'
import { geometry } from 'maath'

extend({ RoundedPlaneGeometry: geometry.RoundedPlaneGeometry })

<Image url="/file.jpg">
  <roundedPlaneGeometry args={[1, 2, 0.15]} />
</Image>
```

Text

High-quality text rendering with signed distance fields (SDF) and antialiasing, using troika-3d-text. All of troika's props are valid. Text is suspense-based!

```jsx
<Text color="black" anchorX="center" anchorY="middle">
  hello world!
</Text>
```

Text will suspend while loading the font data, but in order to completely avoid FOUC you can pass the characters it needs to render.

```jsx
<Text font={fontUrl} characters="abcdefghijklmnopqrstuvwxyz0123456789!">
  hello world!
</Text>
```

Text3D

Render 3D text using ThreeJS's TextGeometry.

Text3D will suspend while loading the font data. Text3D requires fonts in JSON format generated through typeface.json, either as a path to a JSON file or a JSON object. If you face display issues try checking "Reverse font direction" in the typeface tool.

```jsx
<Text3D font={fontUrl} {...textOptions}>
  Hello world!
  <meshNormalMaterial />
</Text3D>
```

You can use any material. textOptions are the options you'd pass to the TextGeometry constructor; see the three.js TextGeometry documentation for the available options.

You can align the text using the <Center> component.

```jsx
<Center top left>
  <Text3D font={fontUrl}>hello</Text3D>
</Center>
```

It adds three properties that do not exist in the original TextGeometry: lineHeight, letterSpacing and smooth. letterSpacing is a factor that is 1 by default. lineHeight is in three.js units and 0 by default. smooth merges vertices with a tolerance and calls computeVertexNormals.

```jsx
<Text3D font={fontUrl} smooth={1} lineHeight={0.5} letterSpacing={-0.025}>{`hello\nworld`}</Text3D>
```

Effects

Abstraction around three's own EffectComposer. By default, it prepends a render pass and a gamma-correction pass. Children are cloned, and attach is given to them automatically. You can only use passes or effects in there.

By default, it creates a render target with HalfFloatType and RGBAFormat. You can change all of this to your liking; inspect the types.

```jsx
import { extend, useThree } from '@react-three/fiber'
import { Effects } from '@react-three/drei'
import { SSAOPass } from 'three-stdlib'

extend({ SSAOPass })

function Post() {
  const { scene, camera } = useThree()
  return (
    <Effects multisampling={8} renderIndex={1} disableGamma={false} disableRenderPass={false} disableRender={false}>
      <sSAOPass args={[scene, camera, 100, 100]} kernelRadius={1.2} kernelSize={0} />
    </Effects>
  )
}
```

PositionalAudio

A wrapper around THREE.PositionalAudio. Add this to groups or meshes to tie them to a sound that plays when the camera comes near.

```jsx
<PositionalAudio
  url="/sound.mp3"
  distance={1}
  loop
  {...props} // all THREE.PositionalAudio props are valid
/>
```
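How loud the sound is at a given distance depends on the Web Audio distance model. Assuming the distance prop ends up as the sound's reference distance (THREE.PositionalAudio's setRefDistance) and the panner keeps the Web Audio default "inverse" model, the gain follows the formula below; `inverseDistanceGain` and `gainAt` are hypothetical helpers.

```typescript
// Gain of the Web Audio "inverse" distance model (the PannerNode default):
// unity gain up to refDistance, then refDistance / (refDistance + rolloff * (d - refDistance))
function inverseDistanceGain(distance: number, refDistance = 1, rolloffFactor = 1): number {
  const d = Math.max(distance, refDistance)
  return refDistance / (refDistance + rolloffFactor * (d - refDistance))
}

type Vec3 = [number, number, number]

// Hypothetical helper: gain heard by a listener (usually the camera) from a source position
function gainAt(listener: Vec3, source: Vec3, refDistance = 1): number {
  const d = Math.hypot(listener[0] - source[0], listener[1] - source[1], listener[2] - source[2])
  return inverseDistanceGain(d, refDistance)
}
```

With distance={1}, the sound is at full volume within one unit and at half volume two units away; raising the value pushes the whole falloff outward.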

Billboard

Adds a <group /> that always faces the camera.

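```jsx
<Billboard
  follow={true} // follow the camera
  lockX={false} // lock the rotation on the x axis
  lockY={false} // lock the rotation on the y axis
  lockZ={false} // lock the rotation on the z axis
>
  <Text fontSize={1}>I'm a billboard</Text>
</Billboard>
```

ScreenSpace

Adds a <group /> that aligns objects to screen space.

```jsx
<ScreenSpace
  depth={1} // distance from the camera
>
  {/* this box is in screen space */}
  <Box />
</ScreenSpace>
```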

ScreenSizer

Adds an <object3D /> that scales objects to screen space.

```jsx
<ScreenSizer
  scale={1} // scale factor
>
  <Box
    args={[100, 100, 100]} // will render roughly as a 100px box
  />
</ScreenSizer>
```
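The "roughly 100px" comment rests on a standard perspective-projection relation: at distance d from a camera with vertical field of view fov, the visible height is 2 * d * tan(fov / 2), spread across the viewport's pixel height. The sketch below computes that factor; `worldUnitsPerPixel` is a hypothetical helper, and this is an assumption about the idea behind ScreenSizer, not its source.

```typescript
// World-space height covered by one screen pixel at a given distance from a perspective camera.
// fovDegrees is the vertical field of view, as in THREE.PerspectiveCamera.fov.
function worldUnitsPerPixel(fovDegrees: number, distance: number, viewportHeightPx: number): number {
  const fov = (fovDegrees * Math.PI) / 180
  const visibleHeight = 2 * distance * Math.tan(fov / 2)
  return visibleHeight / viewportHeightPx
}

// A 90° camera, object 5 units away, 1000px tall viewport
const k = worldUnitsPerPixel(90, 5, 1000) // about 0.01 world units per pixel
```

Scaling an object by this factor makes one of its local units cover about one pixel, which is why args of 100 end up as a box about 100px tall.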

GradientTexture

A declarative THREE.Texture which attaches to "map" by default. You can use this to create gradient backgrounds.

```jsx
<mesh>
  <planeGeometry />
  <meshBasicMaterial>
    <GradientTexture
      stops={[0, 1]} // As many stops as you want
      colors={['aquamarine', 'hotpink']} // Colors need to match the number of stops
      size={1024} // Size is optional, default = 1024
    />
  </meshBasicMaterial>
</mesh>
```
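The stops and colors arrays pair up index by index, and the canvas gradient that produces the texture interpolates between neighboring stops. The sketch below reproduces that pairing with plain RGB triples instead of CSS color strings; `sampleGradient` is hypothetical, stops are assumed sorted ascending, and it interpolates linearly in RGB, which approximates rather than replicates the browser's canvas gradient.

```typescript
type RGB = [number, number, number]

// Color at position t (0..1) for matching stops/colors arrays, linear between neighboring stops
function sampleGradient(stops: number[], colors: RGB[], t: number): RGB {
  if (stops.length === 0 || stops.length !== colors.length) {
    throw new Error('stops and colors must have the same, non-zero length')
  }
  if (t <= stops[0]) return colors[0]
  for (let i = 1; i < stops.length; i++) {
    if (t <= stops[i]) {
      const span = stops[i] - stops[i - 1]
      const k = span === 0 ? 1 : (t - stops[i - 1]) / span
      return colors[i - 1].map((c, j) => c + (colors[i][j] - c) * k) as RGB
    }
  }
  return colors[colors.length - 1]
}

const middle = sampleGradient([0, 1], [[0, 0, 0], [255, 255, 255]], 0.5) // mid gray
```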

Radial gradient.

```jsx
import { GradientTexture, GradientType } from '@react-three/drei'

<mesh>
  <planeGeometry />
  <meshBasicMaterial>
    <GradientTexture
      stops={[0, 0.5, 1]} // As many stops as you want
      colors={['aquamarine', 'hotpink', 'yellow']} // Colors need to match the number of stops
      size={1024} // Size (height) is optional, default = 1024
      width={1024} // Width of the canvas producing the texture, default = 16
      type={GradientType.Radial} // The type of the gradient, default = GradientType.Linear
      innerCircleRadius={0} // Optional, the radius of the inner circle of the gradient, default = 0
      outerCircleRadius={'auto'} // Optional, the radius of the outer circle of the gradient, default = auto
    />
  </meshBasicMaterial>
</mesh>
```

Edges

Abstracts THREE.EdgesGeometry. It pulls the geometry automatically from its parent; optionally, you can ungroup it and give it a geometry prop. You can give it children, for instance a custom material. Edges is based on <Line> and supports all of its props.

```jsx
<mesh>
  <boxGeometry />
  <meshBasicMaterial />
  <Edges
    linewidth={4}
    scale={1.1}
    threshold={15} // Display edges only when the angle between two faces exceeds this value (default=15 degrees)
    color="white"
  />
</mesh>
```
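The threshold compares the angle between the normals of two faces that share an edge. The sketch below computes that angle and applies the rule THREE.EdgesGeometry uses for its thresholdAngle (draw the edge once the angle reaches the threshold); `angleBetweenNormals` and `isEdgeVisible` are hypothetical helpers.

```typescript
type Vec3 = [number, number, number]

// Angle in degrees between two face normals (they need not be normalized)
function angleBetweenNormals(a: Vec3, b: Vec3): number {
  const dot = a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
  const lengths = Math.hypot(...a) * Math.hypot(...b)
  // Clamp against rounding so acos never sees values outside [-1, 1]
  const cos = Math.min(1, Math.max(-1, dot / lengths))
  return (Math.acos(cos) * 180) / Math.PI
}

// An edge shared by two faces is drawn when their normals differ by at least the threshold
function isEdgeVisible(a: Vec3, b: Vec3, thresholdDegrees = 15): boolean {
  return angleBetweenNormals(a, b) >= thresholdDegrees
}
```

On a box, adjacent faces meet at 90°, so every edge is drawn; the diagonals splitting each face into two coplanar triangles (0°) are not.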

Outlines

An ornamental component that extracts the geometry from its parent and displays an inverted-hull outline. Supported parents are <mesh>, <skinnedMesh> and <instancedMesh>.

```tsx
type OutlinesProps = JSX.IntrinsicElements['group'] & {
  color: ReactThreeFiber.Color
  screenspace: boolean
  opacity: number
  transparent: boolean
  thickness: number
  angle: number
}
```

```jsx
<mesh>
  <boxGeometry />
  <meshBasicMaterial />
  <Outlines thickness={0.05} color="hotpink" />
</mesh>
```

Trail

A declarative, three.MeshLine based Trails implementation. You can attach it to any mesh and it will give it a beautiful trail.

Props defined below with their default values.

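```jsx
<Trail
  width={0.2} // width of the line
  color={'hotpink'} // color of the line
  length={1} // length of the line
  decay={1} // how fast the line fades away
  local={false} // whether to use the target's world or local positions
  stride={0} // minimum distance between the previous and the current point
  interval={1} // number of frames to wait before the next calculation
  target={undefined} // optional target; this object will produce the trail
  attenuation={(width) => width} // a function defining the width at each point along the line
>
  {/* If target is not defined, Trail uses the first Object3D child as the target */}
  <mesh>
    <sphereGeometry />
    <meshBasicMaterial />
  </mesh>
</Trail>
```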

👉 Inspired by TheSpite's Codevember 2021 #9

Sampler

– Complex Demo by @CantBeFaraz

– Simple Demo by @ggsimm

Declarative abstraction around MeshSurfaceSampler & InstancedMesh.

It samples points from the passed mesh and transforms an InstancedMesh's matrix to distribute instances on the points.

Check the demos & code for more.

You can either pass a Mesh and InstancedMesh as children:

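```jsx
<Sampler
  weight={'normal'} // name of the attribute used as sampling weight
  transform={transformPoint} // function that transforms each instance given a sample
  count={16} // number of samples
>
  <mesh>
    <sphereGeometry args={[2]} />
  </mesh>
  <instancedMesh args={[null, null, 1_000]}>
    <sphereGeometry args={[0.1]} />
  </instancedMesh>
</Sampler>
```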

or use refs when you can't compose declaratively:

```jsx
const { nodes } = useGLTF('my/mesh/url')
// nodes.MyMesh is a placeholder for the GLTF mesh you want to sample
const mesh = useRef(nodes.MyMesh)
const instances = useRef()

return (
  <>
    <instancedMesh ref={instances} args={[null, null, 1_000]}>
      <sphereGeometry args={[0.1]} />
    </instancedMesh>
    <Sampler mesh={mesh} instances={instances} />
  </>
)
```
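Under the hood, three's MeshSurfaceSampler picks faces with probability proportional to their area (optionally scaled by a weight attribute). The sketch below shows that core step, a cumulative area distribution plus a binary search, using area alone; `triangleArea`, `buildDistribution` and `pickTriangle` are hypothetical helpers, and choosing a point inside the picked triangle is left out.

```typescript
type Vec3 = [number, number, number]
type Triangle = [Vec3, Vec3, Vec3]

// Area of a triangle: half the length of the cross product of two edges
function triangleArea([a, b, c]: Triangle): number {
  const u = [b[0] - a[0], b[1] - a[1], b[2] - a[2]]
  const v = [c[0] - a[0], c[1] - a[1], c[2] - a[2]]
  const cx = u[1] * v[2] - u[2] * v[1]
  const cy = u[2] * v[0] - u[0] * v[2]
  const cz = u[0] * v[1] - u[1] * v[0]
  return 0.5 * Math.hypot(cx, cy, cz)
}

// Running totals of the areas: larger faces own a larger slice of [0, total)
function buildDistribution(triangles: Triangle[]): number[] {
  const cumulative: number[] = []
  let total = 0
  for (const tri of triangles) {
    total += triangleArea(tri)
    cumulative.push(total)
  }
  return cumulative
}

// Maps a uniform random r in [0, 1) to a triangle index with a binary search
function pickTriangle(cumulative: number[], r: number): number {
  const target = r * cumulative[cumulative.length - 1]
  let lo = 0
  let hi = cumulative.length - 1
  while (lo < hi) {
    const mid = (lo + hi) >> 1
    if (target < cumulative[mid]) hi = mid
    else lo = mid + 1
  }
  return lo
}

const small: Triangle = [[0, 0, 0], [1, 0, 0], [0, 1, 0]] // area 0.5
const large: Triangle = [[0, 0, 0], [3, 0, 0], [0, 1, 0]] // area 1.5
const dist = buildDistribution([small, large])
const index = pickTriangle(dist, Math.random()) // 0 about 25% of the time, 1 otherwise
```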

ComputedAttribute

Create and attach an attribute declaratively.

```jsx
<sphereGeometry>
  <ComputedAttribute
    // attribute will be added to the geometry with this name
    name="my-attribute-name"
    compute={(geometry) => {
      // ...someLogic;
      // BufferAttribute expects a typed array, not a plain array
      return new THREE.BufferAttribute(new Float32Array([1, 2, 3]), 1)
    }}
    // you can pass any BufferAttribute prop to this component, eg.
    usage={THREE.StaticReadUsage}
  />
</sphereGeometry>
```
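A compute function typically derives its values from attributes the geometry already has. As a hypothetical example, the sketch below produces one float per vertex holding its distance from the origin, reading the flat xyz layout that geometry.attributes.position.array uses; `distanceFromOrigin` is not part of drei.

```typescript
// One float per vertex: its distance from the origin, read from a flat [x, y, z, x, y, z, ...] array
function distanceFromOrigin(positions: Float32Array): Float32Array {
  const out = new Float32Array(positions.length / 3)
  for (let i = 0; i < out.length; i++) {
    const x = positions[i * 3]
    const y = positions[i * 3 + 1]
    const z = positions[i * 3 + 2]
    out[i] = Math.hypot(x, y, z)
  }
  return out
}

const distances = distanceFromOrigin(new Float32Array([3, 4, 0, 0, 0, 0, 1, 0, 0]))
```

Inside ComputedAttribute, this could be wired up as compute={(geometry) => new THREE.BufferAttribute(distanceFromOrigin(geometry.attributes.position.array), 1)}, with an item size of 1 because each vertex gets a single value.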

Clone

Declarative abstraction around THREE.Object3D.clone. This is useful when you want to create a shallow copy of an existing fragment (and Object3D, Groups, etc) into your scene, for instance a group from a loaded GLTF. This clone is now re-usable, but it will still refer to the original geometries and materials.

type CloneProps = {
  object: THREE.Object3D | THREE.Object3D[]
  children?: React.ReactNode
  deep?: boolean | 'materialsOnly' | 'geometriesOnly'
  keys?: string[]
  inject?: MeshProps | React.ReactNode | ((object: THREE.Object3D) => React.ReactNode)
  castShadow?: boolean
  receiveShadow?: boolean
}

You create a shallow clone by passing a pre-existing object to the object prop.

const { nodes } = useGLTF(url)

return <Clone object={nodes.table} />

Or, multiple objects:

<Clone object={[nodes.foo, nodes.bar]} />

You can dynamically insert objects; these apply to anything that isn't a group or a plain Object3D (meshes, lines, etc.):

<Clone object={nodes.table} inject={<meshStandardMaterial color="green" />} />

Or make inserts conditional:

<Clone object={nodes.table} inject={(object) => (object.name === 'table' ? <meshStandardMaterial color="green" /> : null)} />

useAnimations

A hook that abstracts AnimationMixer.

const { nodes, materials, animations } = useGLTF(url)
const { ref, mixer, names, actions, clips } = useAnimations(animations)

useEffect(() => {
  actions.jump?.play()
}, [actions])

return <mesh ref={ref} />

The hook can also take a pre-existing root (which can be a plain object3d or a reference to one):

const { scene, animations } = useGLTF(url)

const { actions } = useAnimations(animations, scene)

return <primitive object={scene} />

MarchingCubes

An abstraction for three's MarchingCubes.

<MarchingCubes resolution={50} maxPolyCount={20000} enableUvs={false} enableColors={true}>

<MarchingCube strength={0.5} subtract={12} color={new Color('#f0f')} position={[0.5, 0.5, 0.5]} />

<MarchingPlane planeType="y" strength={0.5} subtract={12} />

</MarchingCubes>

Decal

Abstraction around three's DecalGeometry. By default it uses its parent mesh as the decal surface.

The decal box has to intersect the surface, otherwise it will not be visible. If you do not specify a rotation, it will look at the parent's center point. You can also pass a single number as the rotation, which allows you to spin it.

<mesh>

<sphereGeometry />

<meshBasicMaterial />

<Decal

debug // Makes "bounding box" of the decal visible

position={[0, 0, 0]} // Position of the decal

rotation={[0, 0, 0]} // Rotation of the decal (a vector, or a single angle in radians)

scale={1} // Scale of the decal

>

<meshBasicMaterial

map={texture}

polygonOffset

polygonOffsetFactor={-1} // The material should take precedence over the original

/>

</Decal>

</mesh>

If you do not specify a material it will create a transparent meshBasicMaterial with a polygonOffsetFactor of -10.

<mesh>

<sphereGeometry />

<meshBasicMaterial />

<Decal map={texture} />

</mesh>

If declarative composition is not possible, use the mesh prop to define the surface the decal must attach to.

<Decal mesh={ref}>

<meshBasicMaterial map={texture} polygonOffset polygonOffsetFactor={-1} />

</Decal>

Svg

Wrapper around the three svg loader demo.

Accepts an SVG url or svg raw data.

<Svg src={urlOrRawSvgString} />

AsciiRenderer

Abstraction of three's AsciiEffect. It creates a DOM layer on top of the canvas and renders the scene as ascii characters.

type AsciiRendererProps = {

renderIndex?: number

bgColor?: string

fgColor?: string

characters?: string

invert?: boolean

color?: boolean

resolution?: number

}

<Canvas>
  <AsciiRenderer />
</Canvas>

Splat

A declarative abstraction around antimatter15/splat. It supports re-use, multiple splats with correct depth sorting, splats that can move and behave like regular Object3Ds, alphaHash & alphaTest, and stream-loading.

type SplatProps = {

src: string

toneMapped?: boolean

alphaTest?: number

alphaHash?: boolean

chunkSize?: number

} & JSX.IntrinsicElements['mesh']

<Splat src="https://huggingface.co/cakewalk/splat-data/resolve/main/nike.splat" />

To depth-sort multiple splats correctly, you can use alphaTest, for instance with a low value. Keep in mind that this can show a slight outline under some viewing conditions.

<Splat alphaTest={0.1} src="foo.splat" />

<Splat alphaTest={0.1} src="bar.splat" />

You can also use alphaHash, but it can be slower and create some noise, which you would typically remove in postprocessing with a TAA pass. You don't have to use alphaHash on all splats.

<Splat alphaHash src="foo.splat" />

Shaders

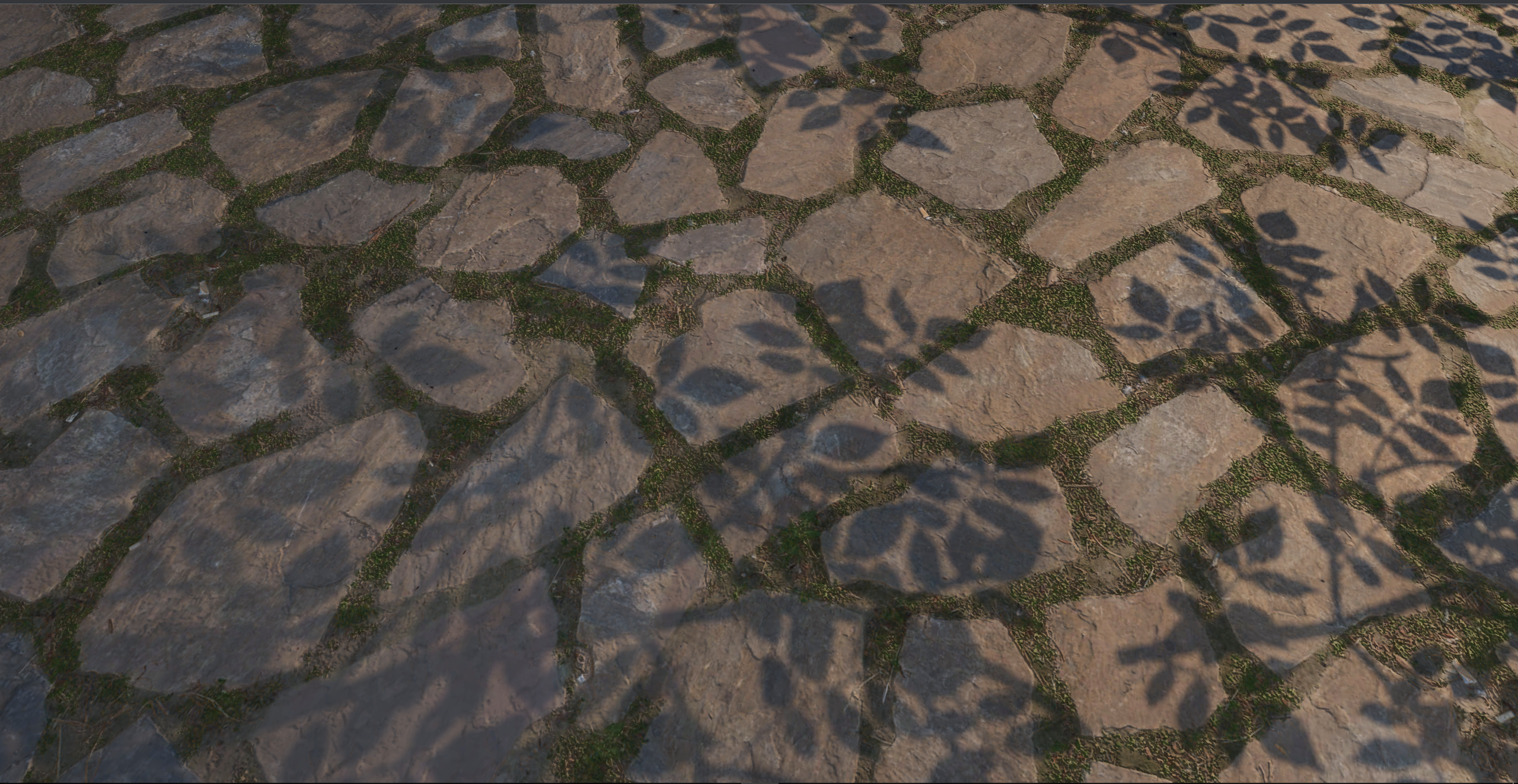

MeshReflectorMaterial

Easily add reflections and/or blur to any mesh. It takes surface roughness into account for a more realistic effect. This material extends from THREE.MeshStandardMaterial and accepts all its props.

<mesh>

<planeGeometry />

<MeshReflectorMaterial

blur={[0, 0]} // Blur ground reflections (width, height), 0 skips blur

mixBlur={0} // How much blur mixes with surface roughness (default = 1)

mixStrength={1} // Strength of the reflections

mixContrast={1} // Contrast of the reflections

resolution={256} // Off-buffer resolution, lower=faster, higher=better quality, slower

mirror={0} // Mirror environment, 0 = texture colors, 1 = pick up env colors

depthScale={0} // Scale the depth factor (0 = no depth, default = 0)

minDepthThreshold={0.9} // Lower edge for the depthTexture interpolation (default = 0)

maxDepthThreshold={1} // Upper edge for the depthTexture interpolation (default = 0)

depthToBlurRatioBias={0.25} // Adds a bias factor to the depthTexture before calculating the blur amount [blurFactor = blurTexture * (depthTexture + bias)]. It accepts values between 0 and 1, default is 0.25. An amount > 0 of bias makes sure that the blurTexture is not too sharp because of the multiplication with the depthTexture

distortion={1} // Amount of distortion based on the distortionMap texture

distortionMap={distortionTexture} // The red channel of this texture is used as the distortion map. Default is null

debug={0} /* Depending on the assigned value, one of the following channels is shown:

0 = no debug

1 = depth channel

2 = base channel

3 = distortion channel

4 = lod channel (based on the roughness)

*/

reflectorOffset={0.2} // Offsets the virtual camera that projects the reflection. Useful when the reflective surface is some distance from the object's origin (default = 0)

/>

</mesh>

MeshWobbleMaterial

This material makes your geometry wobble and wave around. It was taken from the threejs-examples and adapted into a self-contained material.

<mesh>

<boxGeometry />

<MeshWobbleMaterial factor={1} speed={10} />

</mesh>

MeshDistortMaterial

This material makes your geometry distort following simplex noise.

<mesh>

<boxGeometry />

<MeshDistortMaterial distort={1} speed={10} />

</mesh>

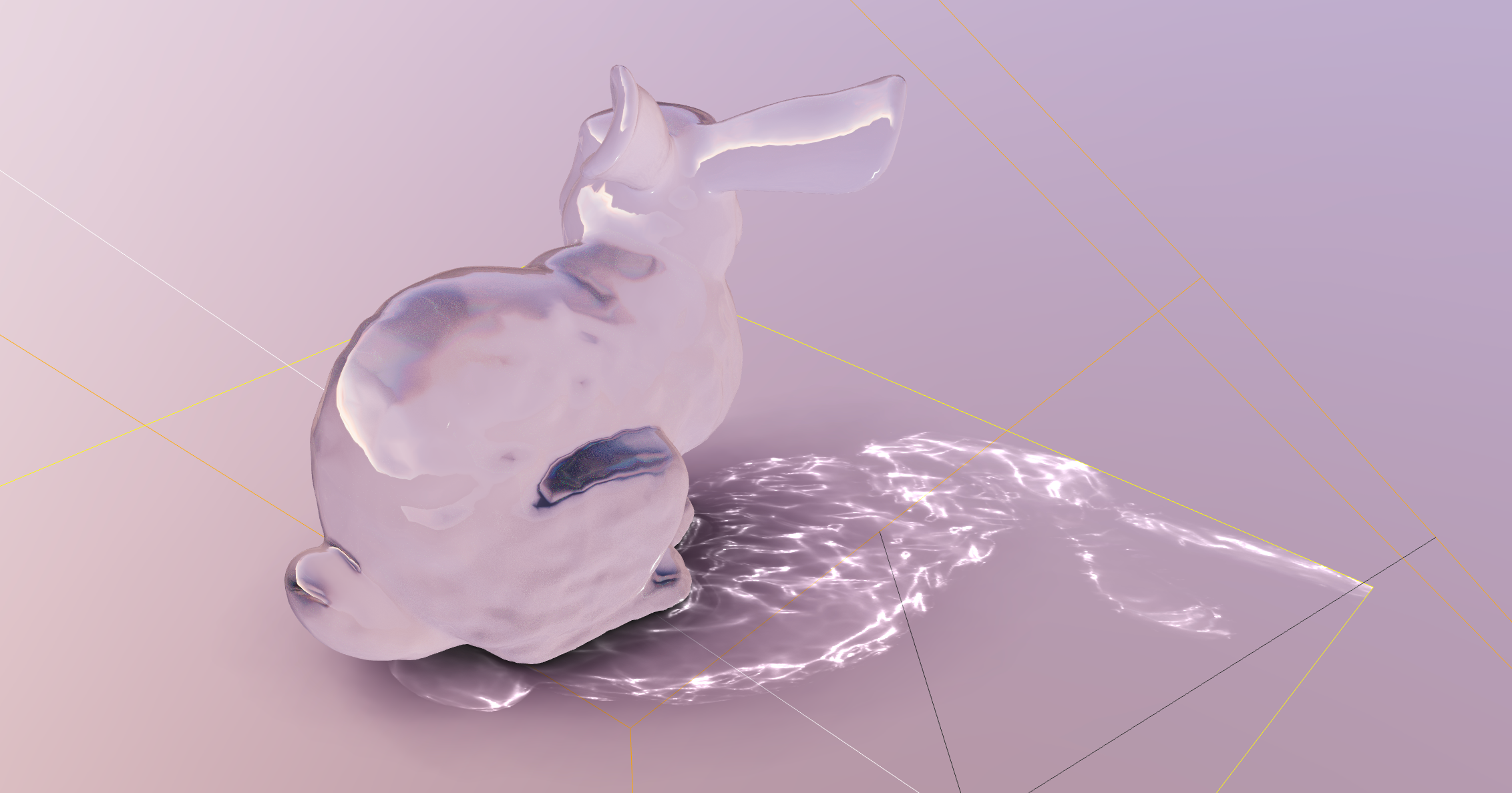

MeshRefractionMaterial

A convincing Glass/Diamond refraction material.

type MeshRefractionMaterialProps = JSX.IntrinsicElements['shaderMaterial'] & {

envMap: THREE.CubeTexture | THREE.Texture

bounces?: number

ior?: number

fresnel?: number

aberrationStrength?: number

color?: ReactThreeFiber.Color

fastChroma?: boolean

}

If you want it to reflect other objects in the scene, it is best to pair it with a CubeCamera.

<CubeCamera>

{(texture) => (

<mesh geometry={diamondGeometry} {...props}>

<MeshRefractionMaterial envMap={texture} />

</mesh>

)}

</CubeCamera>

Otherwise just pass it an environment map.

const texture = useLoader(RGBELoader, '/textures/royal_esplanade_1k.hdr')

return (
  <mesh geometry={diamondGeometry} {...props}>
    <MeshRefractionMaterial envMap={texture} />
  </mesh>
)

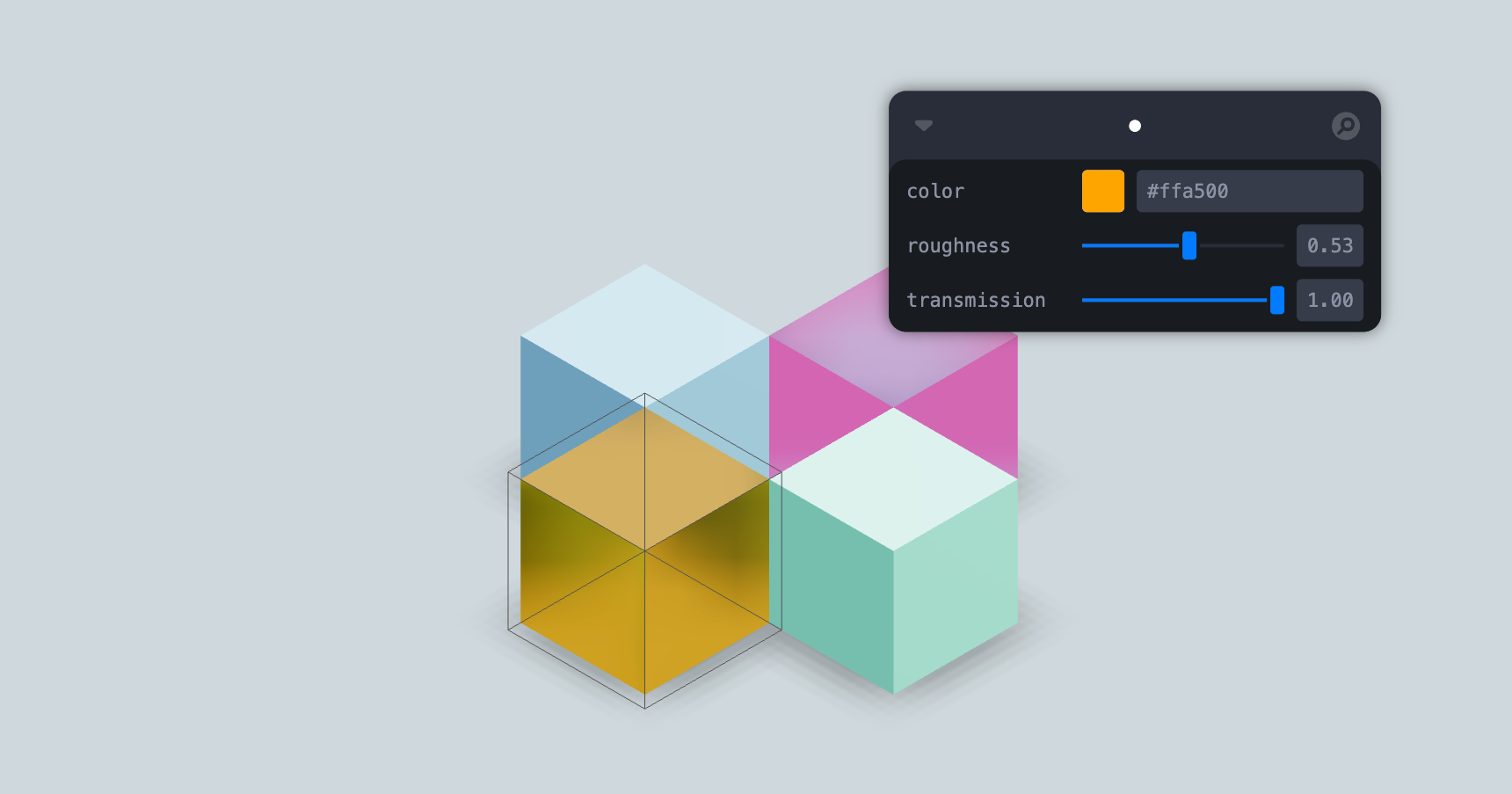

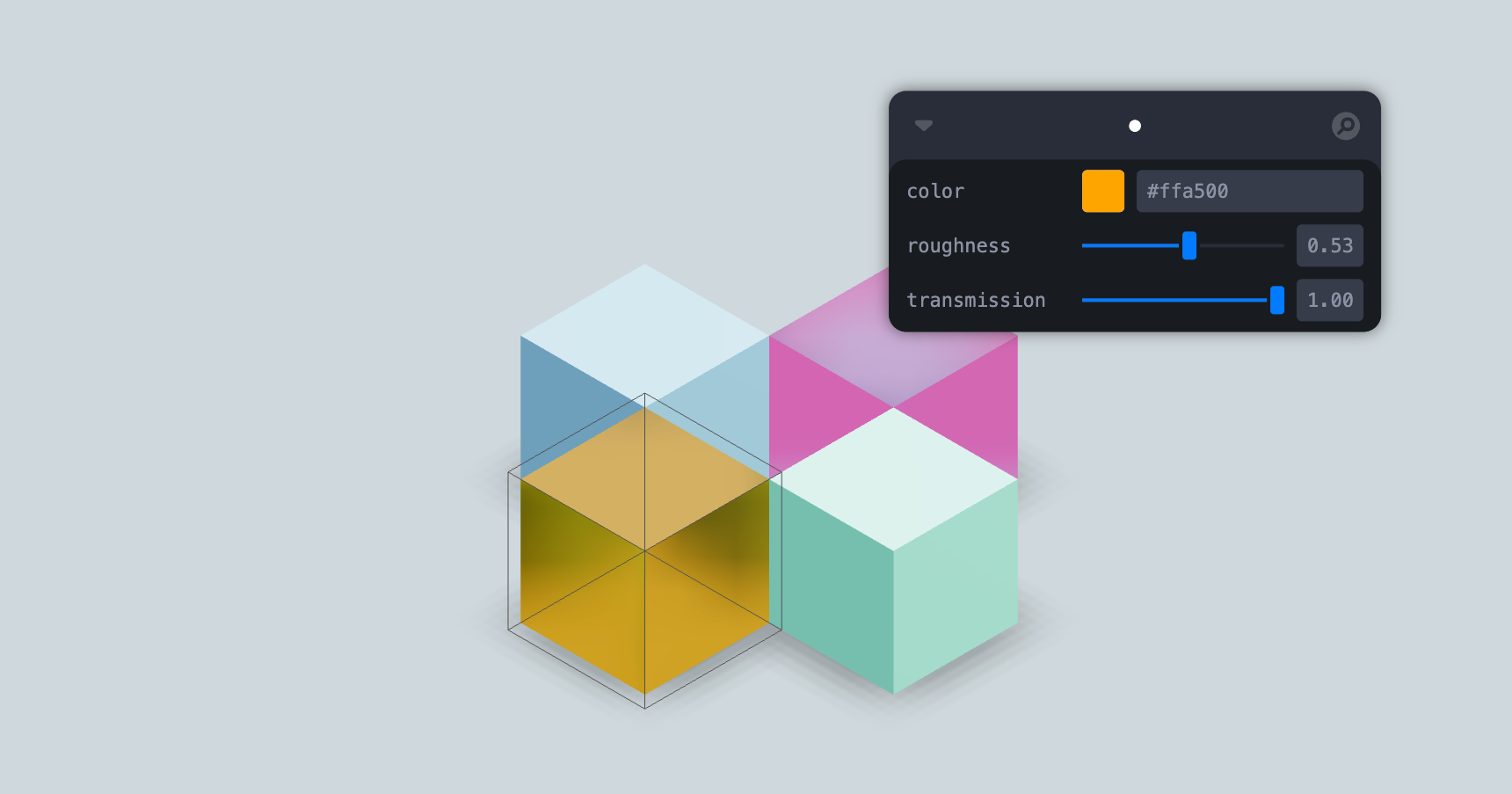

MeshTransmissionMaterial

An improved THREE.MeshPhysicalMaterial. It acts like a normal PhysicalMaterial in terms of transmission support, thickness, ior, roughness, etc., but has chromatic aberration, noise-based roughness blur, (primitive) anisotropic blur support, and unlike the original it can "see" other transmissive or transparent objects which leads to improved visuals.

Although it should be faster than MeshPhysicalMaterial, keep in mind that it can still be expensive because it causes an additional render pass of the scene. Low samples and low resolution make it faster. If you use roughness, consider a tiny resolution, for instance 32x32 pixels; it will still look good but perform much faster.

For performance and visual reasons the host mesh gets removed from the render-stack temporarily. If you have other objects that you don't want to see reflected in the material just add them to the parent mesh as children.

type MeshTransmissionMaterialProps = JSX.IntrinsicElements['meshPhysicalMaterial'] & {

transmission?: number

thickness?: number

backsideThickness?: number

roughness?: number

chromaticAberration?: number

anisotropicBlur?: number

distortion?: number

distortionScale?: number

temporalDistortion?: number

buffer?: THREE.Texture

transmissionSampler?: boolean

backside?: boolean

resolution?: number

backsideResolution?: number

samples?: number

background?: THREE.Texture

}

return (
  <mesh geometry={geometry} {...props}>
    <MeshTransmissionMaterial />
  </mesh>
)

If having each material render the scene on its own is too expensive, you can share a buffer texture. One option is to enable transmissionSampler, which uses the three.js-internal buffer that MeshPhysicalMaterials use. This might be faster. The downside is that no transmissive material can "see" other transparent or transmissive objects.

<mesh geometry={torus}>

<MeshTransmissionMaterial transmissionSampler />

</mesh>

<mesh geometry={sphere}>

<MeshTransmissionMaterial transmissionSampler />

</mesh>

Another option is to pass a texture to buffer manually, for instance one created with useFBO.

const buffer = useFBO()

useFrame((state) => {
  state.gl.setRenderTarget(buffer)
  state.gl.render(state.scene, state.camera)
  state.gl.setRenderTarget(null)
})

return (
  <>
    <mesh geometry={torus}>
      <MeshTransmissionMaterial buffer={buffer.texture} />
    </mesh>
    <mesh geometry={sphere}>
      <MeshTransmissionMaterial buffer={buffer.texture} />
    </mesh>
  </>
)

Or use a PerspectiveCamera:

<PerspectiveCamera makeDefault fov={75} position={[10, 0, 15]} resolution={1024}>
  {(texture) => (
    <>
      <mesh geometry={torus}>
        <MeshTransmissionMaterial buffer={texture} />
      </mesh>
      <mesh geometry={sphere}>
        <MeshTransmissionMaterial buffer={texture} />
      </mesh>
    </>
  )}
</PerspectiveCamera>

This mimics the default MeshPhysicalMaterial behaviour. These materials won't "see" one another, but they will pick up everything else, including transmissive or transparent objects.

MeshDiscardMaterial

A material that renders nothing. Unlike <mesh visible={false} />, it hides an object while still displaying its shadows and children.

<mesh castShadow>
  <torusKnotGeometry />
  <MeshDiscardMaterial />
  <Edges />
</mesh>

PointMaterial

Antialiased round dots. It takes the same props as regular THREE.PointsMaterial on which it is based.

<points>

<PointMaterial transparent vertexColors size={15} sizeAttenuation={false} depthWrite={false} />

</points>

SoftShadows

type SoftShadowsProps = {

size?: number

samples?: number

focus?: number

}

Injects percentage-closer soft shadows (PCSS) into three's shader chunks. Mounting and unmounting this component causes all shaders to be recompiled. This only costs extra if SoftShadows is mounted after the scene has already rendered; if it mounts together with everything else in your scene, shaders compile naturally.

<SoftShadows />

shaderMaterial

Creates a THREE.ShaderMaterial for you with easier handling of uniforms. Uniforms are automatically declared as setters/getters on the object and are accepted as constructor arguments.

import * as THREE from 'three'
import { extend } from '@react-three/fiber'
import { shaderMaterial } from '@react-three/drei'

const ColorShiftMaterial = shaderMaterial(

{ time: 0, color: new THREE.Color(0.2, 0.0, 0.1) },

`

varying vec2 vUv;

void main() {

vUv = uv;

gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);

}

`,

`

uniform float time;

uniform vec3 color;

varying vec2 vUv;

void main() {

gl_FragColor.rgba = vec4(0.5 + 0.3 * sin(vUv.yxx + time) + color, 1.0);

}

`

)

extend({ ColorShiftMaterial })

...

<mesh>

<colorShiftMaterial color="hotpink" time={1} />

</mesh>

const material = new ColorShiftMaterial({ color: new THREE.Color("hotpink") })

material.time = 1
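The getter/setter behaviour described above can be pictured with the three.js-free sketch below. Each uniform is stored as `uniforms[name].value` and mirrored as an instance property via Object.defineProperty, with constructor arguments overriding the declared defaults. `FakeShaderMaterial` and `makeUniformMaterial` are hypothetical stand-ins. drei's shaderMaterial builds on THREE.ShaderMaterial and may differ in detail.

```typescript
type Uniforms = Record<string, { value: unknown }>

// Hypothetical stand-in for THREE.ShaderMaterial: only the `uniforms` field matters here
class FakeShaderMaterial {
  uniforms: Uniforms = {}
}

// Hypothetical helper: builds a material class whose uniforms double as properties
function makeUniformMaterial(defaults: Record<string, unknown>) {
  return class extends FakeShaderMaterial {
    constructor(overrides: Record<string, unknown> = {}) {
      super()
      for (const name of Object.keys(defaults)) {
        // Constructor arguments win over the declared defaults
        const initial = name in overrides ? overrides[name] : defaults[name]
        this.uniforms[name] = { value: initial }
        // Mirror the uniform as an instance property backed by uniforms[name].value
        Object.defineProperty(this, name, {
          get: () => this.uniforms[name].value,
          set: (value: unknown) => {
            this.uniforms[name].value = value
          },
          enumerable: true,
          configurable: true,
        })
      }
    }
  }
}

const ColorShift = makeUniformMaterial({ time: 0, color: 'black' })
type ColorShiftProps = { time: number; color: string }
const material = new ColorShift({ color: 'hotpink' }) as InstanceType<typeof ColorShift> & ColorShiftProps
material.time = 1 // writes through to material.uniforms.time.value
```

Because the property and the uniform share one storage slot, React props, imperative assignments and the shader all see the same value.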

shaderMaterial attaches a unique key property to the prototype class. If you wire it to React's own key property, you can enable hot-reload.

import { ColorShiftMaterial } from './ColorShiftMaterial'

extend({ ColorShiftMaterial })

<colorShiftMaterial key={ColorShiftMaterial.key} color="hotpink" time={1} />

Modifiers

CurveModifier

Given a curve, this component replaces its children with a mesh that moves along said curve when you call moveAlongCurve on the passed ref. Uses three's CurveModifier.

const curveRef = useRef()

const curve = React.useMemo(() => new THREE.CatmullRomCurve3([...handlePos], true, 'centripetal'), [handlePos])

return (

<CurveModifier ref={curveRef} curve={curve}>

<mesh>

<boxGeometry args={[10, 10]} />

</mesh>

</CurveModifier>

)

Misc

useContextBridge

Allows you to forward contexts provided above the <Canvas /> so they can be consumed from within the <Canvas /> as usual.

function SceneWrapper() {

const ContextBridge = useContextBridge(ThemeContext, GreetingContext)

return (

<Canvas>

<ContextBridge>

<Scene />

</ContextBridge>

</Canvas>

)

}

function Scene() {

const theme = React.useContext(ThemeContext)

const greeting = React.useContext(GreetingContext)

// ...use theme and greeting to render your scene content here

return null

}

Example

Warning: this component exists solely for CONTRIBUTING purposes.

A "counter" example.

<Example font="/Inter_Bold.json" />

type ExampleProps = {

font: string

color?: Color

debug?: boolean

bevelSize?: number

}

Ref-api:

const api = useRef<ExampleApi>()

<Example ref={api} font="/Inter_Bold.json" />

type ExampleApi = {

incr: (x?: number) => void

decr: (x?: number) => void

}

Html

Allows you to tie HTML content to any object in your scene. It is projected to the object's whereabouts automatically.

<Html

as='div'

wrapperClass

prepend

center

fullscreen

distanceFactor={10}

zIndexRange={[100, 0]}

portal={domnodeRef}

transform

sprite

calculatePosition={(el: Object3D, camera: Camera, size: { width: number; height: number }) => number[]}

occlude={[ref]}

onOcclude={(hidden) => null}

{...groupProps}

{...divProps}

>

<h1>hello</h1>

<p>world</p>

</Html>

Html can hide behind geometry using the occlude prop.

<Html occlude />

When the Html object hides it sets the opacity prop on the innermost div. If you want to animate or control the transition yourself then you can use onOcclude.

const [hidden, set] = useState()

<Html

occlude

onOcclude={set}

style={{

transition: 'all 0.5s',

opacity: hidden ? 0 : 1,

transform: `scale(${hidden ? 0.5 : 1})`

}}

/>

Blending occlusion

Html can hide behind geometry as if it were part of the 3D scene using this mode. Enable it by passing "blending" as the occlude prop.

<Html occlude="blending" />

You can also give HTML material properties using the material prop.

<Html

occlude

material={

<meshPhysicalMaterial

side={DoubleSide} // Required

opacity={0.1} // Degree of influence of lighting on the HTML

// ...any other material properties

/>

}

/>

Enable shadows using the castShadow and receiveShadow props.

Note: Shadows only work with a custom material. Shadows will not work with meshBasicMaterial and shaderMaterial by default.

<Html

occlude

castShadow

receiveShadow

material={<meshPhysicalMaterial side={DoubleSide} opacity={0.1} />}

/>

Note: Html 'blending' mode only correctly occludes rectangular HTML elements by default. Use the geometry prop to swap the backing geometry to a custom one if your Html has a different shape.

If transform mode is enabled, the dimensions of the rendered html will depend on the position relative to the camera, the camera fov and the distanceFactor. For example, an Html component placed at (0,0,0) and with a distanceFactor of 10, rendered inside a scene with a perspective camera positioned at (0,0,2.45) and a FOV of 75, will have the same dimensions as a "plain" html element like in this example.

A caveat of transform mode is that on some devices and browsers, the rendered html may appear blurry, as discussed in #859. The issue can at least be mitigated by scaling down the Html parent and scaling up the html children:

<Html transform scale={0.5}>

<div style={{ transform: 'scale(2)' }}>Some text</div>

</Html>

CycleRaycast

This component allows you to cycle through all objects underneath the cursor with optional visual feedback. This can be useful for non-trivial selection, CAD data, housing, and anything else that has layers. It does this by changing the raycaster's filter function and then refreshing the raycaster.

For this to work properly, your event handlers have to call event.stopPropagation(), for instance in onPointerOver or onClick. Only one element can be selected for cycling to make sense.

<CycleRaycast

preventDefault={true}

scroll={true}

keyCode={9}

onChanged={(objects, cycle) => console.log(objects, cycle)}

/>

Select

type Props = {

multiple?: boolean

box?: boolean

border?: string

backgroundColor?: string

onChange?: (selected: THREE.Object3D[]) => void

onChangePointerUp?: (selected: THREE.Object3D[]) => void

filter?: (selected: THREE.Object3D[]) => THREE.Object3D[]

}

This component allows you to select and unselect objects by clicking on them. It keeps track of the currently selected objects and can select multiple objects (with the shift key). Nested components can request the current selection, which is always an array, with the useSelect hook. With the box prop, you can shift-box-select objects by holding and dragging the cursor over multiple objects. Optionally, you can use the filter prop to filter the selected items and define the shape in which they are stored.

<Select box multiple onChange={console.log} filter={items => items}>

<Foo />

<Bar />

</Select>

function Foo() {
  const selected = useSelect() // always an array of the currently selected objects
  // ...
}

Sprite Animator

type Props = {

startFrame?: number

endFrame?: number

fps?: number

frameName?: string

textureDataURL?: string

textureImageURL?: string

loop?: boolean

numberOfFrames?: number

autoPlay?: boolean

animationNames?: Array<string>

onStart?: Function

onEnd?: Function

onLoopEnd?: Function

onFrame?: Function

play?: boolean

pause?: boolean

flipX?: boolean

alphaTest?: number

asSprite?: boolean

offset?: number

playBackwards: boolean

resetOnEnd?: boolean

instanceItems?: any[]

maxItems?: number

spriteDataset?: any

}

The SpriteAnimator component provided by drei is a powerful tool for animating sprites in a simple and efficient manner. It allows you to create sprite animations by cycling through a sequence of frames from a sprite sheet image or JSON data.

Notes:

- The SpriteAnimator component internally uses the useFrame hook from react-three-fiber (r3f) for efficient frame updates and rendering.

- The sprites (without a JSON file) should contain equal size frames

- Trimming of spritesheet frames is not yet supported

- Internally uses useSpriteLoader, or can use data from it directly

<SpriteAnimator

position={[-3.5, -2.0, 2.5]}

startFrame={0}

scaleFactor={0.125}

autoPlay={true}

loop={true}

numberOfFrames={16}

textureImageURL={'./alien.png'}

/>

ScrollControls example

<ScrollControls damping={0.2} maxSpeed={0.5} pages={2}>

<SpriteAnimator

position={[0.0, -1.5, -1.5]}

startFrame={0}

onEnd={doSomethingOnEnd}

onStart={doSomethingOnStart}

autoPlay={true}

textureImageURL={'sprite.png'}

textureDataURL={'sprite.json'}

>

<FireScroll />

</SpriteAnimator>

</ScrollControls>

function FireScroll() {

const sprite = useSpriteAnimator()

const scroll = useScroll()

const ref = React.useRef()

useFrame(() => {

if (sprite && scroll) {

sprite.current = scroll.offset

}

})

return null

}
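For sprite sheets without a JSON file, where all frames share one size, the frame arithmetic can be sketched as follows. First, derive the current frame from elapsed time and fps. Then wrap or clamp it. Finally, turn the frame index into a UV offset within the grid. `frameAt` and `frameUvOffset` are hypothetical helpers, not drei exports. The left-to-right, top-to-bottom frame order is an assumption.

```typescript
// Hypothetical helper: which frame to show after `elapsedMs` at a given fps
function frameAt(elapsedMs: number, fps: number, startFrame: number, endFrame: number, loop: boolean): number {
  const span = endFrame - startFrame + 1
  const stepped = Math.floor((elapsedMs / 1000) * fps)
  // Wrap around when looping, otherwise hold on the last frame
  const offset = loop ? stepped % span : Math.min(stepped, span - 1)
  return startFrame + offset
}

// Hypothetical helper: UV offset of a frame in a cols x rows sheet of equal-size frames.
// three.js UVs start at the bottom-left, so the row index is flipped.
function frameUvOffset(frame: number, cols: number, rows: number): [number, number] {
  const col = frame % cols
  const row = Math.floor(frame / cols)
  return [col / cols, 1 - (row + 1) / rows]
}
```

With a texture repeat of (1 / cols, 1 / rows), applying this offset each frame shows exactly one cell of the sheet.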

Stats

Adds stats to document.body. It takes over the render-loop!

<Stats showPanel={0} className="stats" {...props} />

You can choose to mount Stats to a different DOM Element - for example, for custom styling:

const node = useRef(document.createElement('div'))

useEffect(() => {

node.current.id = 'test'

document.body.appendChild(node.current)

return () => document.body.removeChild(node.current)

}, [])

return <Stats parent={node} />

StatsGl

Adds stats-gl to document.body. It takes over the render-loop!

<StatsGl className="stats" {...props} />

Wireframe

Renders an antialiased, shader-based wireframe on or around a geometry.

<mesh>

<geometry />

<material />

<Wireframe />

</mesh>

<Wireframe

geometry={geometry | geometryRef} // Will create the wireframe based on input geometry.

// Other props

simplify={false} // Remove some edges from wireframes

fill={"#00ff00"} // Color of the inside of the wireframe

fillMix={0} // Mix between the base color and the Wireframe 'fill'. 0 = base; 1 = wireframe

fillOpacity={0.25} // Opacity of the inner fill

stroke={"#ff0000"} // Color of the stroke

strokeOpacity={1} // Opacity of the stroke

thickness={0.05} // Thickness of the lines

colorBackfaces={false} // Whether to draw lines that are facing away from the camera

backfaceStroke={"#0000ff"} // Color of the lines that are facing away from the camera

dashInvert={true} // Invert the dashes

dash={false} // Whether to draw lines as dashes

dashRepeats={4} // Number of dashes in one segment

dashLength={0.5} // Length of each dash

squeeze={false} // Narrow the centers of each line segment

squeezeMin={0.2} // Smallest width to squeeze to

squeezeMax={1} // Largest width to squeeze from

/>

useDepthBuffer

Renders the scene into a depth-buffer. Often effects depend on it and this allows you to render a single buffer and share it, which minimizes the performance impact. It returns the buffer's depthTexture.

Since this is a rather expensive effect you can limit the amount of frames it renders when your objects are static. For instance making it render only once by setting frames: 1.

const depthBuffer = useDepthBuffer({

size: 256,

frames: Infinity,

})

return <SomethingThatNeedsADepthBuffer depthBuffer={depthBuffer} />
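The frames budget works roughly like the sketch below. A counter lets the expensive render run while it is below `frames`, or on every frame when `frames` is Infinity. `createFrameBudget` is a hypothetical helper. In drei, a comparable check appears to sit inside a useFrame callback, and `render` stands in for rendering the scene into the depth buffer.

```typescript
// Hypothetical helper: returns a per-frame tick that renders only while the budget lasts
function createFrameBudget(frames: number, render: () => void) {
  let count = 0
  return function tick(): boolean {
    if (frames === Infinity || count < frames) {
      render()
      count++
      return true
    }
    return false // budget spent: skip the expensive pass this frame
  }
}

let renders = 0
const tick = createFrameBudget(1, () => renders++)
for (let frame = 0; frame < 5; frame++) tick()
// A static scene is captured once; the remaining frames cost nothing
```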

Fbo / useFBO

Creates a THREE.WebGLRenderTarget.

type FBOSettings = {

samples?: number

depth?: boolean

} & THREE.RenderTargetOptions

export function useFBO(
  width?: number | FBOSettings,
  height?: number,
  settings?: FBOSettings
): THREE.WebGLRenderTarget

const target = useFBO({ stencilBuffer: false })

The render target is automatically disposed when unmounted.

useCamera

A hook for the rare case when you are using non-default cameras for heads-up displays or portals, and you need events/raycasting to function properly (raycasting uses the default camera otherwise).

<mesh raycast={useCamera(customCamera)} />

CubeCamera / useCubeCamera

Creates a THREE.CubeCamera that renders into an FBO render target and that you can update().

export function useCubeCamera({

/** Resolution of the FBO, 256 */

resolution?: number

/** Camera near, 0.1 */

near?: number

/** Camera far, 1000 */

far?: number

/** Custom environment map that is temporarily set as the scenes background */

envMap?: THREE.Texture

/** Custom fog that is temporarily set as the scenes fog */

fog?: Fog | FogExp2

})

const { fbo, camera, update } = useCubeCamera()

DetectGPU / useDetectGPU

This hook uses DetectGPU by @TimvanScherpenzeel, wrapped into suspense, to determine what tier should be assigned to the user's GPU.

👉 This hook CAN be used outside the @react-three/fiber Canvas.

function App() {
  const GPUTier = useDetectGPU()

  return GPUTier.tier === 0 || GPUTier.isMobile ? <Fallback /> : <Canvas>...</Canvas>
}

<Suspense fallback={null}>
  <App />
</Suspense>

useAspect

This hook calculates aspect ratios (for now, only the equivalent of CSS background-size: cover is supported). You can use it to make an image fill the screen. It is responsive and adapts to viewport resizes. Just give the hook the image bounds in pixels. It returns an array: [width, height, 1].

const scale = useAspect(
  1024, // Pixel width
  512, // Pixel height
  1 // Optional scaling factor
)

return (
  <mesh scale={scale}>
    <planeGeometry />
    <meshBasicMaterial map={imageTexture} />
  </mesh>
)
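The cover computation can be sketched as follows. Choose the single scale factor that makes the image fill the viewport on both axes, and let the other axis overflow, much as CSS background-size: cover crops. `coverScale` is a hypothetical helper. `viewportWidth`/`viewportHeight` are assumed to be the viewport size in world units, which r3f exposes through its viewport state.

```typescript
// Hypothetical helper: [width, height, 1] scale that covers the viewport without distortion
function coverScale(
  imageWidth: number,
  imageHeight: number,
  viewportWidth: number,
  viewportHeight: number,
  factor = 1,
): [number, number, number] {
  const viewportAspect = viewportWidth / viewportHeight
  const imageAspect = imageWidth / imageHeight
  // Viewport wider than the image: match widths (height overflows); otherwise match heights
  const scale = viewportAspect > imageAspect ? viewportWidth / imageWidth : viewportHeight / imageHeight
  return [imageWidth * scale * factor, imageHeight * scale * factor, 1]
}

const square = coverScale(1024, 512, 10, 10) // heights match, width overflows
const wide = coverScale(1024, 512, 40, 10) // widths match, height overflows
```

Using one shared scale factor preserves the image's aspect ratio, so nothing gets stretched; the overflow is simply cut off by the viewport.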

useCursor

A small hook that sets the css body cursor according to the hover state of a mesh, so that you can give the user visual feedback when the mouse enters a shape. Arguments 1 and 2 determine the style, the defaults are: onPointerOver = 'pointer', onPointerOut = 'auto'.

const [hovered, set] = useState()
useCursor(hovered /*, 'pointer', 'auto' */)

return <mesh onPointerOver={() => set(true)} onPointerOut={() => set(false)} />

useIntersect

A very cheap frustum check that gives you a reference you can observe in order to know if the object has entered the view or is outside of it. This relies on THREE.Object3D.onBeforeRender so it only works on objects that are effectively rendered, like meshes, lines, sprites. It won't work on groups, object3d's, bones, etc.

const ref = useIntersect((visible) => console.log('object is visible', visible))

return <mesh ref={ref} />
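The mechanism can be pictured with the three.js-free sketch below. Clear a flag before each frame, and set it from onBeforeRender, which three.js only calls for objects that pass frustum culling and are actually drawn. After the frame, report a change only when the flag differs from the last reported state. `VisibilityTracker` is hypothetical, not drei's implementation, and objects are assumed to start out not visible.

```typescript
// Hypothetical tracker; method names mirror the three per-frame phases
class VisibilityTracker {
  private renderedThisFrame = false
  private lastReported = false // objects are assumed to start out "not visible"
  private readonly onChange: (visible: boolean) => void

  constructor(onChange: (visible: boolean) => void) {
    this.onChange = onChange
  }

  // 1. Before the frame renders: clear the flag
  beginFrame(): void {
    this.renderedThisFrame = false
  }

  // 2. Hooked into Object3D.onBeforeRender: only called if the object is drawn
  markRendered(): void {
    this.renderedThisFrame = true
  }

  // 3. After the frame renders: report only when visibility actually changed
  endFrame(): void {
    if (this.renderedThisFrame !== this.lastReported) {
      this.lastReported = this.renderedThisFrame
      this.onChange(this.lastReported)
    }
  }
}
```

Because no bounding volumes are tested by hand, the check costs one boolean per frame. This is also why it cannot work for groups or bones, which are never drawn themselves.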

useBoxProjectedEnv

The cheapest possible way of getting reflections in threejs. This will box-project the current environment map onto a plane. It returns an object that you need to spread over its material. The spread object contains a ref, onBeforeCompile and customProgramCacheKey. If you combine it with drei/CubeCamera you can "film" a single frame of the environment and feed it to the material, thereby getting realistic reflections at no cost. Align it with the position and scale properties.

const projection = useBoxProjectedEnv(
  [0, 0, 0], // Position
  [1, 1, 1] // Scale
)

<CubeCamera frames={1}>

{(texture) => (

<mesh>

<planeGeometry />

<meshStandardMaterial envMap={texture} {...projection} />

</mesh>

)}

</CubeCamera>
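Box projection rests on a small piece of ray-box geometry, sketched here on the CPU for clarity. Starting at the surface point, march the reflection ray to where it exits the axis-aligned box. Then sample the environment map in the direction of that exit point as seen from the box center. This is the standard parallax-corrected cube map technique. `boxProjectDirection` is a hypothetical helper, and the shader patch that useBoxProjectedEnv injects may differ in detail.

```typescript
type Vec3 = [number, number, number]

// Hypothetical helper: corrected lookup direction for a box-projected env map
function boxProjectDirection(position: Vec3, direction: Vec3, boxCenter: Vec3, boxSize: Vec3): Vec3 {
  let tExit = Infinity
  for (let i = 0; i < 3; i++) {
    const half = boxSize[i] / 2
    // Distance along the ray to the face the ray is heading towards on this axis
    if (direction[i] > 0) tExit = Math.min(tExit, (boxCenter[i] + half - position[i]) / direction[i])
    else if (direction[i] < 0) tExit = Math.min(tExit, (boxCenter[i] - half - position[i]) / direction[i])
    // direction[i] === 0: the ray never reaches a face on this axis
  }
  // Exit point on the box, expressed relative to the box center
  return [0, 1, 2].map((i) => position[i] + direction[i] * tExit - boxCenter[i]) as Vec3
}
```

Without this correction, every point on the plane would look up the same direction for a given reflection vector, as if the environment were infinitely far away. Routing the lookup through the box makes nearby walls line up with the geometry.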

Trail / useTrail

A hook to obtain an array of points that make up a Trail. You can use this array to drive your own MeshLine or make a trail out of anything you please.

Note: The hook returns a ref (MutableRefObject<Vector3[]>) this means updates to it will not trigger a re-draw, thus keeping this cheap.

const points = useTrail(

target,

{

length,

decay,

local,

stride,

interval,

}

)

useFrame(() => {
  // meshLineRef is assumed to point at a meshline geometry that exposes setPoints
  meshLineRef.current.setPoints(points.current)
})
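As a rough picture of what a trail hook maintains, here is a minimal sketch: a capped list of recent positions, newest first, sampled every `interval` frames and skipping moves shorter than `stride`. `TrailBuffer` is hypothetical. Its options are simplified stand-ins for useTrail's `length`, `interval` and `stride`, and `decay` and `local` are not modelled.

```typescript
type Vec3 = [number, number, number]

// Hypothetical, simplified trail buffer: newest point first, capped length
class TrailBuffer {
  readonly points: Vec3[] = []
  private frame = 0
  private readonly maxPoints: number
  private readonly interval: number
  private readonly stride: number

  constructor(maxPoints: number, interval = 1, stride = 0) {
    this.maxPoints = maxPoints
    this.interval = interval
    this.stride = stride
  }

  // Call once per frame with the target's current world position
  update(position: Vec3): void {
    this.frame++
    // Sample only every `interval` frames
    if (this.frame % this.interval !== 0) return
    const last = this.points[0]
    // Skip samples that moved less than `stride` since the previous one
    if (last && Math.hypot(position[0] - last[0], position[1] - last[1], position[2] - last[2]) < this.stride) return
    this.points.unshift([position[0], position[1], position[2]])
    // Drop the oldest point once the trail is full
    if (this.points.length > this.maxPoints) this.points.pop()
  }
}
```

Mutating one array in place mirrors the README's note that the hook returns a ref: reading `points.current` inside useFrame sees fresh data without triggering React re-renders.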

useSurfaceSampler

A hook to obtain the result of the <Sampler /> as a buffer. Useful for driving anything other than InstancedMesh via the Sampler.

const buffer = useSurfaceSampler(

mesh,

count,

transform,

weight,

instancedMesh

)

FaceLandmarker

A @mediapipe/tasks-vision FaceLandmarker provider, as well as a useFaceLandmarker hook.

<FaceLandmarker>{/* ... */}</FaceLandmarker>

It will instantiate a FaceLandmarker object with the following defaults:

{

basePath: "https://cdn.jsdelivr.net/npm/@mediapipe/tasks-vision@x.y.z/wasm",

options: {

baseOptions: {

modelAssetPath: "https://storage.googleapis.com/mediapipe-models/face_landmarker/face_landmarker/float16/1/face_landmarker.task",

delegate: "GPU",

},

runningMode: "VIDEO",

outputFaceBlendshapes: true,

outputFacialTransformationMatrixes: true,

}

}

You can override the defaults, for example to self-host tasks-vision's wasm/ and the face_landmarker.task model in your public/ directory:

$ ln -s ../node_modules/@mediapipe/tasks-vision/wasm/ public/tasks-vision-wasm

$ curl https://storage.googleapis.com/mediapipe-models/face_landmarker/face_landmarker/float16/1/face_landmarker.task -o public/face_landmarker.task

import { FaceLandmarkerDefaults } from '@react-three/drei'

const visionBasePath = new URL("/tasks-vision-wasm", import.meta.url).toString()

const modelAssetPath = new URL("/face_landmarker.task", import.meta.url).toString()

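const faceLandmarkerOptions = {
  ...FaceLandmarkerDefaults.options,
  // Copy baseOptions too, so the shared defaults object is not mutated
  baseOptions: { ...FaceLandmarkerDefaults.options.baseOptions, modelAssetPath },
}

<FaceLandmarker basePath={visionBasePath} options={faceLandmarkerOptions}>
  {/* ... */}
</FaceLandmarker>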
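When overriding nested options such as baseOptions, keep in mind that a single object spread is a shallow copy. The nested object is still shared with the defaults, so assigning into it would change the defaults for every consumer. The sketch below uses a hypothetical `defaults` object with a placeholder URL in place of FaceLandmarkerDefaults.options.

```typescript
// Hypothetical stand-in for FaceLandmarkerDefaults.options (placeholder URL)
const defaults = {
  runningMode: 'VIDEO',
  baseOptions: { modelAssetPath: 'https://example.com/default.task', delegate: 'GPU' },
}

// One spread copies only the top level: shallow.baseOptions is the same object as
// defaults.baseOptions, so assigning shallow.baseOptions.modelAssetPath would change defaults too
const shallow = { ...defaults }

// Spreading baseOptions as well gives the override its own nested object
const nested = {
  ...defaults,
  baseOptions: { ...defaults.baseOptions, modelAssetPath: '/face_landmarker.task' },
}
```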

Loading

Loader

A quick and easy loading overlay component that you can drop on top of your canvas. It's intended to "hide" the whole app, so if you have multiple suspense wrappers in your application, you should use multiple loaders. It shows an animated loading bar and a percentage.

<Canvas>

<Suspense fallback={null}>

<AsyncModels />

</Suspense>

</Canvas>

<Loader />

You can override styles, too.

<Loader
  containerStyles={container}
  innerStyles={inner}
  barStyles={bar}
  dataStyles={data}
  dataInterpolation={(p) => `Loading ${p.toFixed(2)}%`}
  initialState={(active) => active}
/>

Progress / useProgress

A convenience hook that wraps THREE.DefaultLoadingManager's progress status.

function Loader() {

const { active, progress, errors, item, loaded, total } = useProgress()

return <Html center>{progress} % loaded</Html>

}

return (

<Suspense fallback={<Loader />}>

<AsyncModels />

</Suspense>

)

If you don't want your progress component to re-render on all changes you can be specific as to what you need, for instance if the component is supposed to collect errors only. Look into zustand for more info about selectors.

const errors = useProgress((state) => state.errors)

👉 Note that your loading component does not have to be a suspense fallback. You can use it anywhere, even in your dom tree, for instance for overlays.
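The sketch below shows why a selector avoids needless re-renders. It is a dependency-free version of selector-based subscriptions in the spirit of zustand: a listener fires only when the slice it selected changes, compared with Object.is. `createStore` is a hypothetical helper, not zustand's or drei's code. The `ProgressState` fields mirror what useProgress returns above.

```typescript
type ProgressState = {
  active: boolean
  progress: number
  errors: string[]
  item: string
  loaded: number
  total: number
}

// Hypothetical minimal store with selector subscriptions
function createStore<S extends object>(initial: S) {
  let state = initial
  const listeners = new Set<() => void>()
  return {
    getState: (): S => state,
    setState(partial: Partial<S>): void {
      state = { ...state, ...partial }
      listeners.forEach((listener) => listener())
    },
    // Notify `onChange` only when the selected slice changes (compared with Object.is)
    subscribe<T>(selector: (s: S) => T, onChange: (slice: T) => void): () => void {
      let previous = selector(state)
      const listener = () => {
        const next = selector(state)
        if (!Object.is(previous, next)) {
          previous = next
          onChange(next)
        }
      }
      listeners.add(listener)
      return () => {
        listeners.delete(listener)
      }
    },
  }
}
```

Because the errors array is replaced rather than mutated when an error arrives, the identity check is enough to detect that change. Progress ticks leave the errors array untouched, so the listener stays quiet.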

Gltf / useGLTF

A convenience hook that uses useLoader and GLTFLoader, it defaults to CDN loaded draco binaries (https://www.gstatic.com/draco/v1/decoders/) which are only loaded for compressed models.

useGLTF(url)

useGLTF(url, '/draco-gltf')

useGLTF.preload(url)

If you want to use your own draco decoder globally, you can pass it through useGLTF.setDecoderPath(path).

Note

If you are using the CDN loaded draco binaries, you can get a small speedup in loading time by prefetching them.

You can accomplish this by adding two <link> tags to your <head> tag, as below. The version in those URLs must exactly match what useGLTF uses for this to work. If you're using create-react-app, the public/index.html file contains the <head> tag.

<link

rel="prefetch"

crossorigin="anonymous"

href="https://www.gstatic.com/draco/versioned/decoders/1.5.5/draco_wasm_wrapper.js"

/>

<link

rel="prefetch"

crossorigin="anonymous"

href="https://www.gstatic.com/draco/versioned/decoders/1.5.5/draco_decoder.wasm"

/>

It is recommended that you check your browser's network tab to confirm that the correct URLs are being used, and that the files do get loaded from the prefetch cache on subsequent requests.

Fbx / useFBX

A convenience hook that uses useLoader and FBXLoader:

useFBX(url)

function SuzanneFBX() {

let fbx = useFBX('suzanne/suzanne.fbx')

return <primitive object={fbx} />

}

Texture / useTexture

A convenience hook that uses useLoader and TextureLoader

const texture = useTexture(url)

const [texture1, texture2] = useTexture([url1, url2])

You can also use key: url objects:

const props = useTexture({

metalnessMap: url1,

map: url2,

})

return <meshStandardMaterial {...props} />

Ktx2 / useKTX2

A convenience hook that uses useLoader and KTX2Loader

const texture = useKTX2(url)

const [texture1, texture2] = useKTX2([url1, url2])

return <meshStandardMaterial map={texture} />

CubeTexture / useCubeTexture

A convenience hook that uses useLoader and CubeTextureLoader

const envMap = useCubeTexture(['px.png', 'nx.png', 'py.png', 'ny.png', 'pz.png', 'nz.png'], { path: 'cube/' })

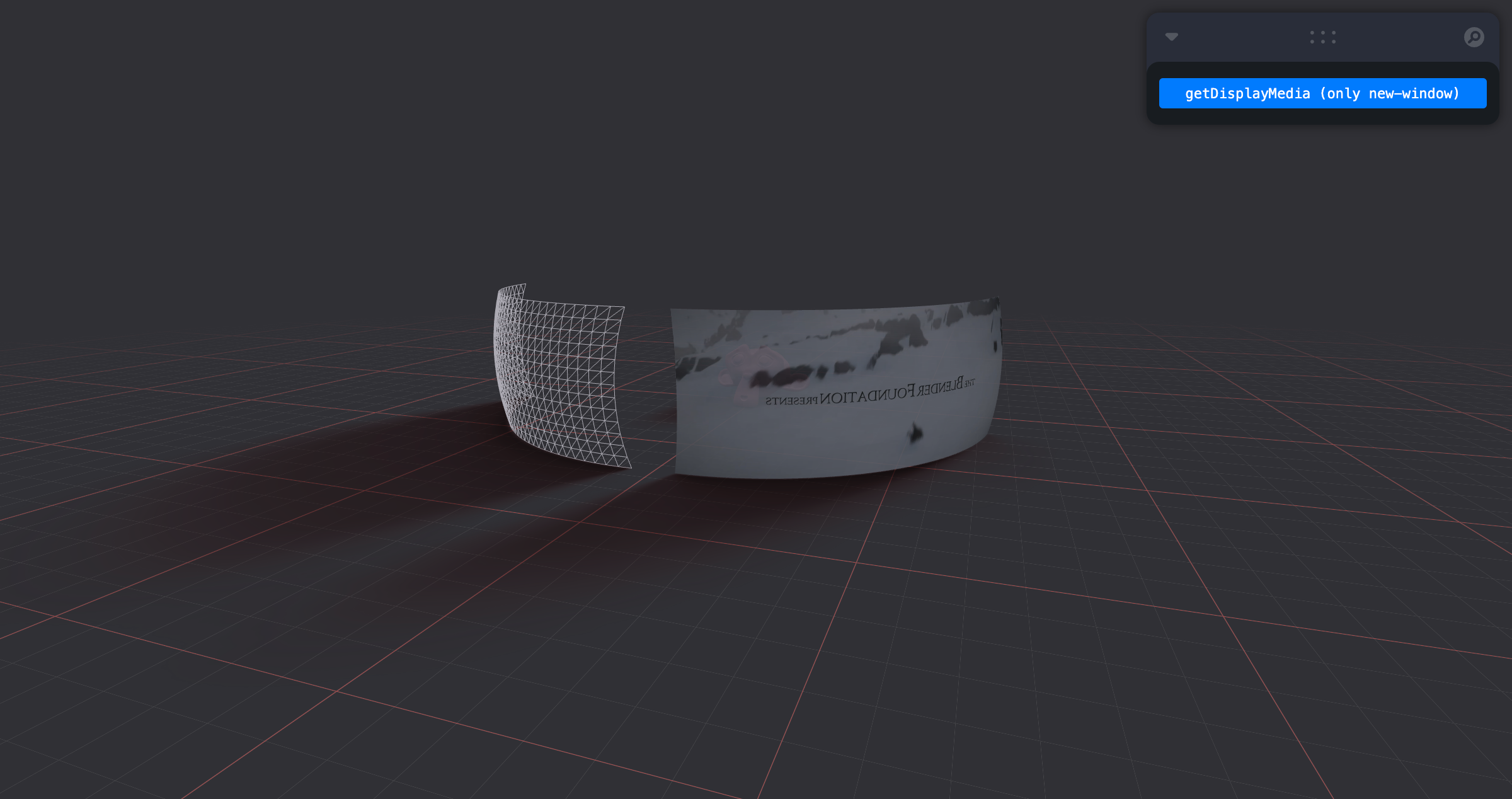

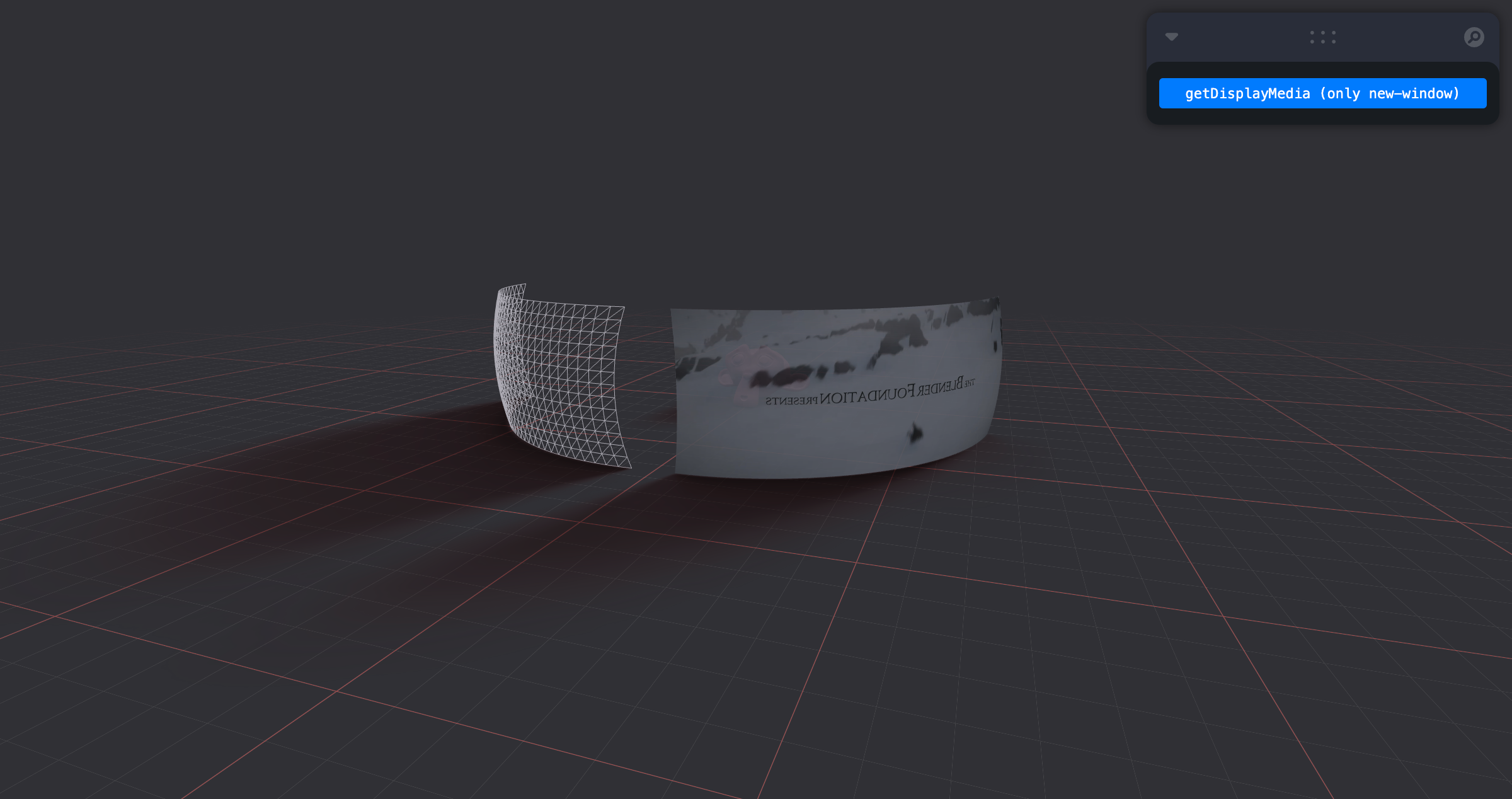

VideoTexture / useVideoTexture

A convenience hook that returns a THREE.VideoTexture and integrates loading into suspense. By default it falls back until the loadedmetadata event. Then it starts playing the video, which, if the video is muted, is allowed in the browser without user interaction.

type VideoTextureProps = {

unsuspend?: 'canplay' | 'canplaythrough' | 'loadstart' | 'loadedmetadata'

muted?: boolean

loop?: boolean

start?: boolean

crossOrigin?: string

}

export function useVideoTexture(src: string, props: VideoTextureProps) {
  const { unsuspend, start, crossOrigin, muted, loop } = {
    unsuspend: 'loadedmetadata',
    crossOrigin: 'Anonymous',
    muted: true,
    loop: true,
    start: true,
    ...props,
  }
  // ...
}

const texture = useVideoTexture("/video.mp4")

return (

<mesh>

<meshBasicMaterial map={texture} toneMapped={false} />

</mesh>

)

It also accepts a MediaStream, e.g. one obtained from navigator.mediaDevices.getDisplayMedia() or getUserMedia():

const [stream, setStream] = useState()

return (

<mesh onClick={async () => setStream(await navigator.mediaDevices.getDisplayMedia({ video: true }))}>

<React.Suspense fallback={<meshBasicMaterial wireframe />}>

<VideoMaterial src={stream} />

</React.Suspense>

</mesh>

)

function VideoMaterial({ src }) {

const texture = useVideoTexture(src)

return <meshBasicMaterial map={texture} toneMapped={false} />

}

NB: It's important to wrap VideoMaterial in React.Suspense, since useVideoTexture(src) will suspend until the user shares their screen.

HLS: useVideoTexture supports .m3u8 HLS manifests via hls.js (https://github.com/video-dev/hls.js).

You can fine-tune hls.js via the hls option:

const texture = useVideoTexture('https://test-streams.mux.dev/x36xhzz/x36xhzz.m3u8', {

hls: { abrEwmaFastLive: 1.0, abrEwmaSlowLive: 3.0, enableWorker: true }

})

Available options: https://github.com/video-dev/hls.js/blob/master/docs/API.md#fine-tuning

TrailTexture / useTrailTexture

This hook returns two things: a THREE.Texture containing a pointer trail, which can be used in shaders to drive displacement among other things, and a movement callback (event => void) that reads from event.uv.

type TrailConfig = {

size?: number

maxAge?: number

radius?: number

intensity?: number

interpolate?: number

smoothing?: number

minForce?: number

blend?: CanvasRenderingContext2D['globalCompositeOperation']

ease?: (t: number) => number

}

const [texture, onMove] = useTrailTexture(config)

return (

<mesh onPointerMove={onMove}>

<meshStandardMaterial displacementMap={texture} />

</mesh>

)
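To build intuition for maxAge and ease, here is a minimal sketch of a fading trail. It is not drei's implementation, which paints the trail onto a canvas and also uses radius, intensity, smoothing and the other options. It assumes ages are measured in milliseconds and uses a linear default ease. TrailPoint and ageTrail are hypothetical names.

```typescript
// Hypothetical model of one recorded pointer sample in UV space (from event.uv).
type TrailPoint = { x: number; y: number; age: number }

// Age every point by dt (assumed ms), drop those older than maxAge, and give each
// survivor a strength that fades from 1 (new) to 0 (at maxAge) through `ease`.
function ageTrail(
  points: TrailPoint[],
  dt: number,
  maxAge: number,
  ease: (t: number) => number = (t) => t,
): { points: TrailPoint[]; strengths: number[] } {
  const alive = points
    .map((p) => ({ ...p, age: p.age + dt }))
    .filter((p) => p.age <= maxAge)
  const strengths = alive.map((p) => ease(1 - p.age / maxAge))
  return { points: alive, strengths }
}

// Two samples; after another 400 ms the older one has outlived maxAge = 1000.
const trail = ageTrail(
  [
    { x: 0.5, y: 0.5, age: 0 },
    { x: 0.4, y: 0.5, age: 700 },
  ],
  400,
  1000,
)
console.log(trail.points.length, trail.strengths[0]) // 1 0.6
```

A non-linear ease, such as (t) => t * t, makes the tail fade faster than the head.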

useFont

Uses THREE.FontLoader to load a font and returns a THREE.Font object. It also accepts a JSON object as a parameter. You can use this to preload or share a font across multiple components.

const font = useFont('/fonts/helvetiker_regular.typeface.json')

return <Text3D font={font} />

To preload a font:

useFont.preload('/fonts/helvetiker_regular.typeface.json')

useSpriteLoader

Loads texture and JSON files with one or more animations and parses them into the appropriate format. These assets can be shared by multiple SpriteAnimator components to save memory and loading time.

Returns: {spriteTexture:Texture, spriteData:{any[], object}, aspect:Vector3}

- spriteTexture: The three.js Texture

- spriteData: A collection of the sprite frames, and some meta information (width, height)

- aspect: Information about the aspect ratio of the sprite sheet

Parameters:

input?: Url | null,

json?: string | null,

animationNames?: string[] | null,

numberOfFrames?: number | null,

onLoad?: (texture: Texture, textureData?: any) => void

const { spriteObj } = useSpriteLoader(

'multiasset.png',

'multiasset.json',

['orange', 'Idle Blinking', '_Bat'],

null

)

<SpriteAnimator

position={[4.5, 0.5, 0.1]}

autoPlay={true}

loop={true}

scale={5}

frameName={'_Bat'}

animationNames={['_Bat']}

spriteDataset={spriteObj}

alphaTest={0.01}

asSprite={false}

/>

<SpriteAnimator

position={[5.5, 0.5, 5.8]}

autoPlay={true}

loop={true}

scale={5}

frameName={'Idle Blinking'}

animationNames={['Idle Blinking']}

spriteDataset={spriteObj}

alphaTest={0.01}

asSprite={false}

/>
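When a sheet has no JSON and only the number of frames is known, frames are commonly laid out in a uniform grid. A single frame can then be shown by setting the texture's offset and repeat. The sketch below is a hypothetical gridFrameUV helper, not how useSpriteLoader parses JSON atlases. It assumes a grid with a given number of columns and rows, read left to right and top to bottom.

```typescript
// Texture offset/repeat that frames one cell of a sprite sheet.
type FrameUV = { offsetX: number; offsetY: number; repeatX: number; repeatY: number }

// Hypothetical helper for a uniform grid read left-to-right, top-to-bottom.
function gridFrameUV(index: number, columns: number, rows: number): FrameUV {
  const col = index % columns
  const row = Math.floor(index / columns)
  return {
    repeatX: 1 / columns,
    repeatY: 1 / rows,
    offsetX: col / columns,
    // three.js UVs start at the bottom-left, so count rows up from the bottom.
    offsetY: 1 - (row + 1) / rows,
  }
}

// Frame 5 of a 4-column, 2-row sheet: second row, second column.
const frame = gridFrameUV(5, 4, 2)
console.log(frame.offsetX, frame.offsetY) // 0.25 0
```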

Performance

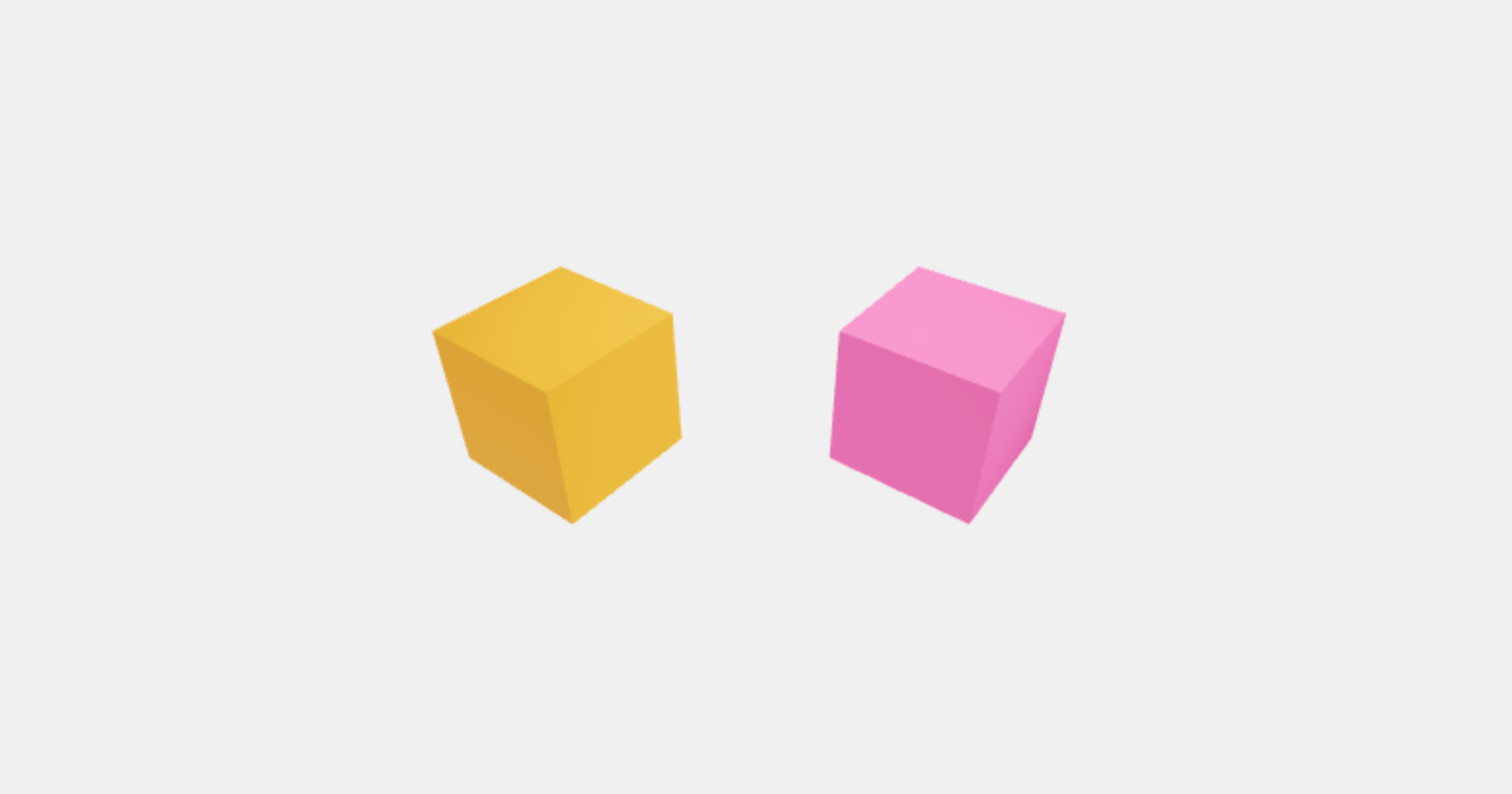

Instances

A wrapper around THREE.InstancedMesh. This allows you to define hundreds of thousands of objects in a single draw call, but declaratively!

<Instances

limit={1000}

range={1000}

>

<boxGeometry />

<meshStandardMaterial />

<Instance

color="red"

scale={2}

position={[1, 2, 3]}

rotation={[Math.PI / 3, 0, 0]}

onClick={onClick} ... />

</Instances>
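The example passes both limit and range. The sketch below assumes that limit sizes the per-instance buffers and that range caps how many instances are drawn. Under that assumption, it shows the buffer arithmetic and a hypothetical drawCount rule that never draws more than the buffers hold or the mounted Instance children provide. Both helper names are illustrative, not drei exports.

```typescript
// Hypothetical sketch: per-instance buffers sized by `limit`.
function allocateInstanceBuffers(limit: number) {
  return {
    matrices: new Float32Array(limit * 16), // one 4x4 transform matrix per instance
    colors: new Float32Array(limit * 3), // one RGB triple per instance
  }
}

// Draw no more than the buffers hold, the range allows, or the children mounted.
function drawCount(limit: number, mounted: number, range?: number): number {
  return Math.min(limit, range ?? limit, mounted)
}

const buffers = allocateInstanceBuffers(1000)
console.log(buffers.matrices.length, drawCount(1000, 250)) // 16000 250
```

Every instance, visible or not, costs a fixed slice of the same buffers. That is why all of them can be rendered in one draw call.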

You can nest an Instance inside groups and other objects, and its transform will be relative to its parent!

<group position={[1, 2, 3]} rotation={[Math.PI / 2, 0, 0]}>

<Instance />

</group>

An Instance can also receive non-instanced children, for example annotations!

<Instance>

<Html>hello from the dom</Html>

</Instance>

You can define events on them!

<Instance onClick={...} onPointerOver={...} />

👉 Note: Creating instances declaratively keeps all the power of components while reducing draw calls, but it comes at the cost of CPU overhead. For cases like foliage, where you want no CPU overhead with thousands of instances, you should use THREE.InstancedMesh directly, as in this example.

Merged

This creates instances for existing meshes and allows you to use them cheaply in the same scene graph. Each type costs exactly one draw call, no matter how many you use. The meshes prop must be a collection of pre-existing THREE.Mesh objects.

<Merged meshes={[box, sphere]}>

{(Box, Sphere) => (

<>

<Box position={[-2, -2, 0]} color="red" />

<Box position={[-3, -3, 0]} color="tomato" />

<Sphere scale={0.7} position={[2, 1, 0]} color="green" />

<Sphere scale={0.7} position={[3, 2, 0]} color="teal" />

</>

)}

</Merged>

You may also use object notation, which is good for loaded models.

function Model({ url }) {

const { nodes } = useGLTF(url)

return (

<Merged meshes={nodes}>

{({ Screw, Filter, Pipe }) => (

<>

<Screw />

<Filter position={[1, 2, 3]} />

<Pipe position={[4, 5, 6]} />

</>

)}

</Merged>

)

}

Points

A wrapper around THREE.Points. It has the same API and properties as Instances.

<Points

limit={1000}

range={1000}

>

<pointsMaterial vertexColors />

<Point position={[1, 2, 3]} color="red" onClick={onClick} onPointerOver={onPointerOver} ... />

</Points>

If you just want to use buffers for position, color and size, you can use the alternative API:

<Points positions={positionsBuffer} colors={colorsBuffer} sizes={sizesBuffer}>

<pointsMaterial />

</Points>
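The buffer-based API takes flat typed arrays. The sketch below is a hypothetical makePointBuffers helper. It assumes positions hold one xyz triple per point, colors hold one rgb triple per point in the 0 to 1 range, and sizes hold one float per point. The random source is injectable so that the output can be made deterministic.

```typescript
// Hypothetical helper: flat buffers for the buffer-based Points API.
// Assumed layout: xyz per position, rgb (0..1) per color, one float per size.
function makePointBuffers(count: number, spread = 10, random: () => number = Math.random) {
  const positions = new Float32Array(count * 3)
  const colors = new Float32Array(count * 3)
  const sizes = new Float32Array(count)
  for (let i = 0; i < count; i++) {
    for (let k = 0; k < 3; k++) {
      positions[i * 3 + k] = (random() - 0.5) * spread // centered cube of side `spread`
      colors[i * 3 + k] = random()
    }
    sizes[i] = 0.5 + random() // sizes between 0.5 and 1.5
  }
  return { positions, colors, sizes }
}

const { positions, colors, sizes } = makePointBuffers(100)
console.log(positions.length, colors.length, sizes.length) // 300 300 100
```

The resulting arrays would then be passed as the positions, colors and sizes props.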

Segments

A wrapper around THREE.LineSegments. This allows you to use thousands of segments under the same geometry.

Prop based:

<Segments

limit={1000}

lineWidth={1.0}

{...materialProps}

>

<Segment start={[0, 0, 0]} end={[0, 10, 0]} color="red" />

<Segment start={[0, 0, 0]} end={[0, 10, 10]} color={[1, 0, 1]} />

</Segments>

Ref based (for fast updates):

const ref = useRef()

useFrame(() => {

ref.current.start.set(0,0,0)

ref.current.end.set(10,10,0)

ref.current.color.setRGB(0,0,0)

})

<Segments

limit={1000}

lineWidth={1.0}

>

<Segment ref={ref} />

</Segments>
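Because all segments share one geometry, each segment can be thought of as a fixed slot in flat arrays. In the layout line-segment geometries use, each slot holds six position floats (start xyz, then end xyz) and six color floats (one rgb per endpoint). The sketch below assumes that layout and uses a hypothetical writeSegment helper. It shows why updating one segment through a ref is cheap: only its own slot is rewritten.

```typescript
type Vec3 = [number, number, number]

// Hypothetical helper: write segment i into its six-float slot of each array.
function writeSegment(
  positions: Float32Array,
  colors: Float32Array,
  i: number,
  start: Vec3,
  end: Vec3,
  color: Vec3,
): void {
  const o = i * 6
  positions.set(start, o)
  positions.set(end, o + 3)
  // Same color on both endpoints, so the segment is drawn in one solid color.
  colors.set(color, o)
  colors.set(color, o + 3)
}

const limit = 1000
const positions = new Float32Array(limit * 6)
const colors = new Float32Array(limit * 6)
writeSegment(positions, colors, 0, [0, 0, 0], [0, 10, 0], [1, 0, 0])
console.log(positions[4]) // 10
```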

Detailed

A wrapper around THREE.LOD (Level of detail).

<Detailed distances={[0, 10, 20]} {...props}>

<mesh geometry={highDetail} />

<mesh geometry={mediumDetail} />

<mesh geometry={lowDetail} />

</Detailed>
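THREE.LOD shows the last level whose distance threshold the camera has reached. The levels are ordered like the children above, so with distances [0, 10, 20] the high-detail mesh shows below 10 units, the medium one from 10, and the low one from 20. The sketch below reproduces that selection rule in isolation. It ignores THREE.LOD's hysteresis and camera zoom, and selectLevel is a hypothetical name.

```typescript
// Sketch of THREE.LOD's level choice (hysteresis and camera zoom ignored):
// walk up the thresholds and keep the last one the camera distance has reached.
function selectLevel(distances: number[], cameraDistance: number): number {
  let level = 0
  for (let i = 1; i < distances.length; i++) {
    if (cameraDistance >= distances[i]) level = i
    else break
  }
  return level
}

const distances = [0, 10, 20] // thresholds from <Detailed distances={[0, 10, 20]}>
console.log(selectLevel(distances, 5), selectLevel(distances, 15), selectLevel(distances, 50)) // 0 1 2
```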

Preload

The WebGLRenderer compiles materials only when they enter the frustum, which can cause jank. This component precompiles the scene using gl.compile, which keeps your app responsive from the start.

By default gl.compile only precompiles visible objects; supplying the all prop makes it include invisible ones as well. With the scene and camera props you can also use it in portals.

<Canvas>