com.hecomi.ulipsync

uLipSync is a Unity asset for real-time lip-sync.

To install, add https://github.com/hecomi/uLipSync.git#upm to Package Manager. Alternatively, add a scoped registry with the URL https://registry.npmjs.com and the scope com.hecomi.

Attach the uLipSync component to the GameObject that has AudioSource and plays voice sounds.

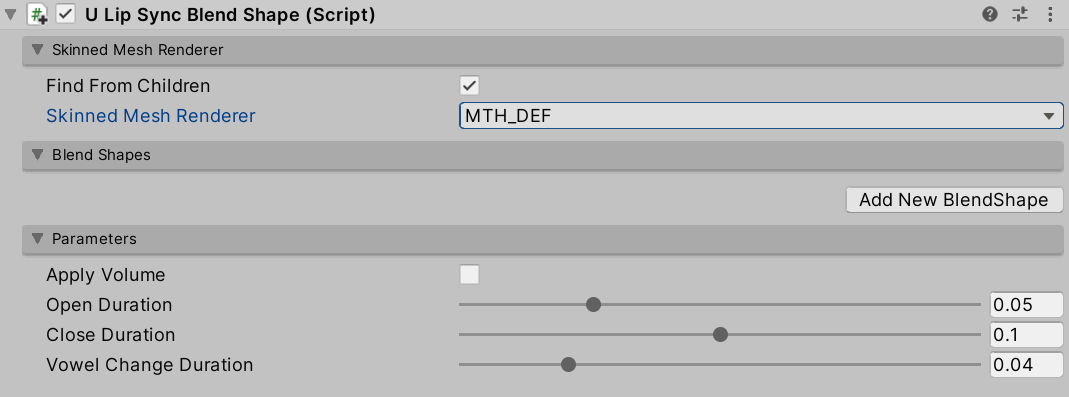

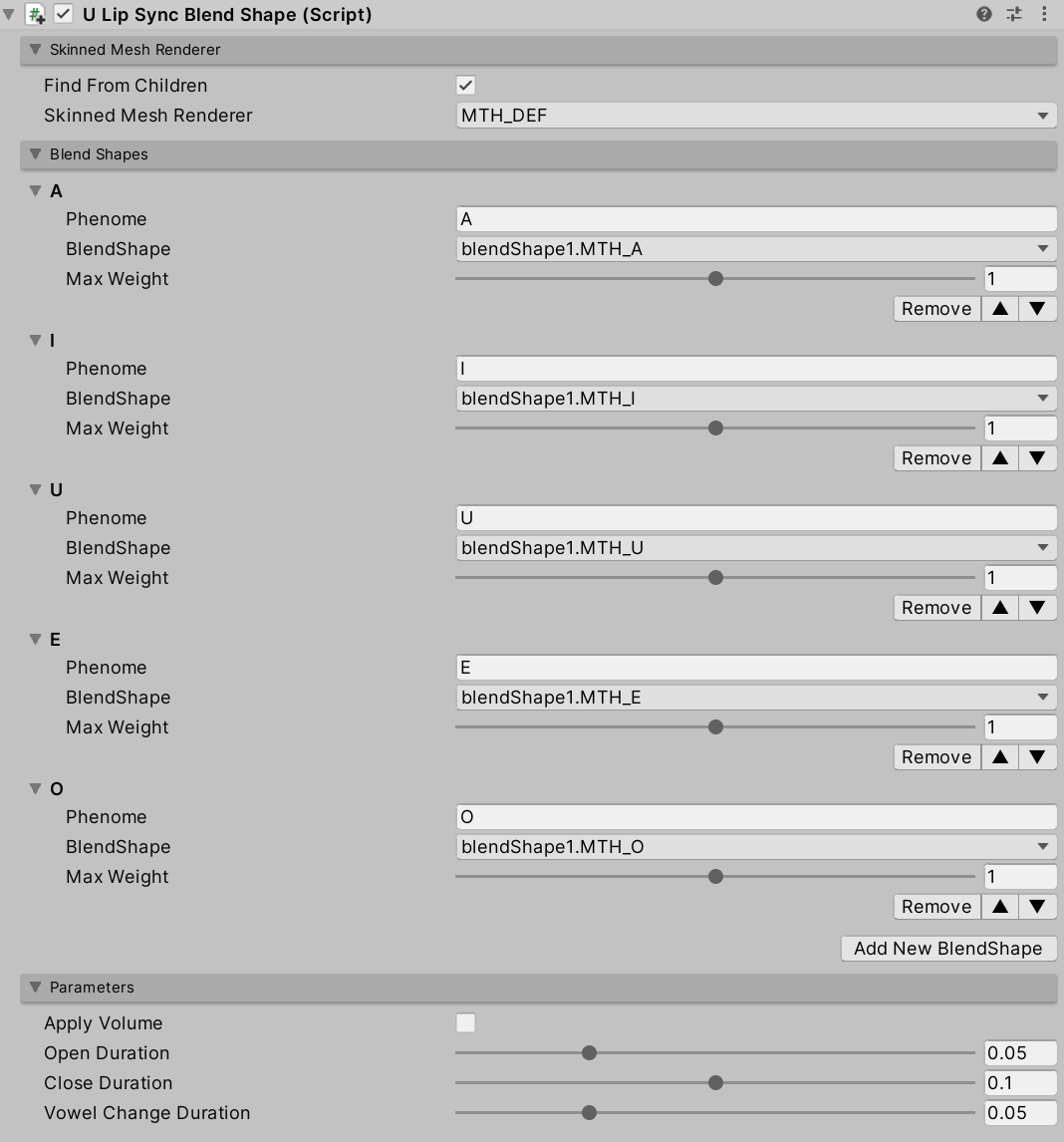

Attach the uLipSyncBlendShape component to the GameObject of your character and select the target SkinnedMeshRenderer.

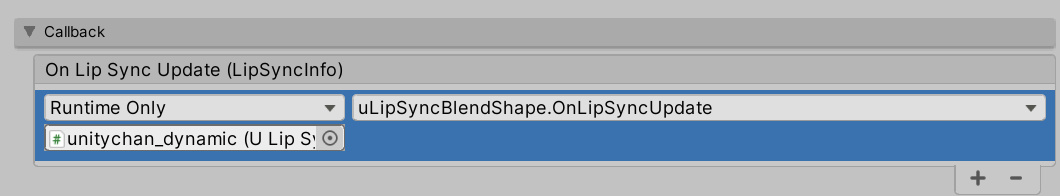

Register uLipSyncBlendShape.OnLipSyncUpdate to uLipSync in the Callback section.

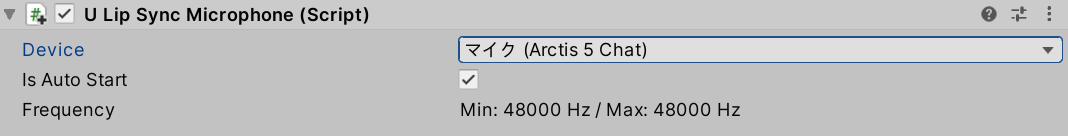

To use microphone input, also attach uLipSyncMicrophone.

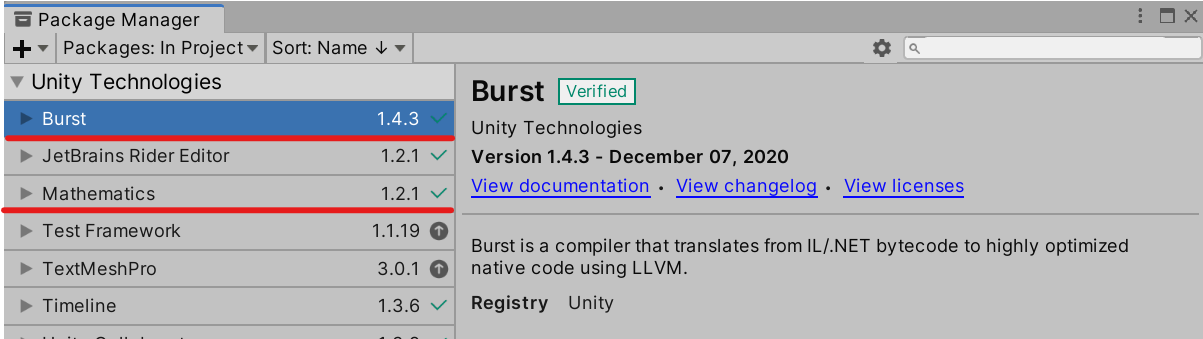

uLipSync

This is the core component for calculating lip-sync. uLipSync gets the audio buffer from MonoBehaviour.OnAudioFilterRead(), so it needs to be attached to the same GameObject that plays the audio in AudioSource. The computation runs in a background thread and is optimized with the JobSystem and Burst Compiler.

uLipSyncBlendShape

This component controls the blendshapes of the SkinnedMeshRenderer. By registering a blendshape for each Phoneme registered in the uLipSync profile, the results of speech analysis are reflected in the shape of the mouth.

uLipSyncMicrophone

This component creates an AudioClip that plays the microphone input and sets it on the AudioSource. Attach it to a GameObject that has uLipSync. You can start and stop recording by calling StartRecord() / StopRecord() from a script. You can also change the input source by changing the index. To find the input you want to use, call uLipSync.MicUtil.GetDeviceList().

uLipSync extracts voice features called MFCCs (mel-frequency cepstral coefficients) from the currently playing sound in real time. MFCCs are one of the features that were used for speech recognition before the advent of deep learning. uLipSync estimates which registered phoneme's MFCCs the current sound is closest to and issues a callback with the relevant information. uLipSyncBlendShape uses the callback to smoothly move the blendshapes of the SkinnedMeshRenderer.
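The "closest phoneme" step can be pictured as a nearest-neighbor search over the registered MFCC vectors. The following is only a minimal sketch of that idea: the class `NearestPhoneme`, its methods, and the use of Euclidean distance are assumptions made for illustration and are not taken from the uLipSync source, which may use a different metric.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not part of uLipSync): picks the registered phoneme
// whose reference MFCC vector is closest to the current frame.
public static class NearestPhoneme
{
    // Euclidean distance between two equal-length feature vectors.
    // The metric is an assumption; the library may use another one.
    public static float Distance(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vector lengths differ.");

        float sum = 0f;
        for (int i = 0; i < a.Length; ++i)
        {
            float d = a[i] - b[i];
            sum += d * d;
        }
        return (float)Math.Sqrt(sum);
    }

    // Returns the index of the closest reference vector and its distance.
    // Returns index -1 when no reference vectors are registered.
    public static (int index, float distance) Find(
        float[] current, IReadOnlyList<float[]> references)
    {
        int best = -1;
        float bestDistance = float.MaxValue;
        for (int i = 0; i < references.Count; ++i)
        {
            float d = Distance(current, references[i]);
            if (d < bestDistance)
            {
                best = i;
                bestDistance = d;
            }
        }
        return (best, bestDistance);
    }
}
```

In this sketch the returned index and distance play the same role as the index and distance values that the callback reports.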

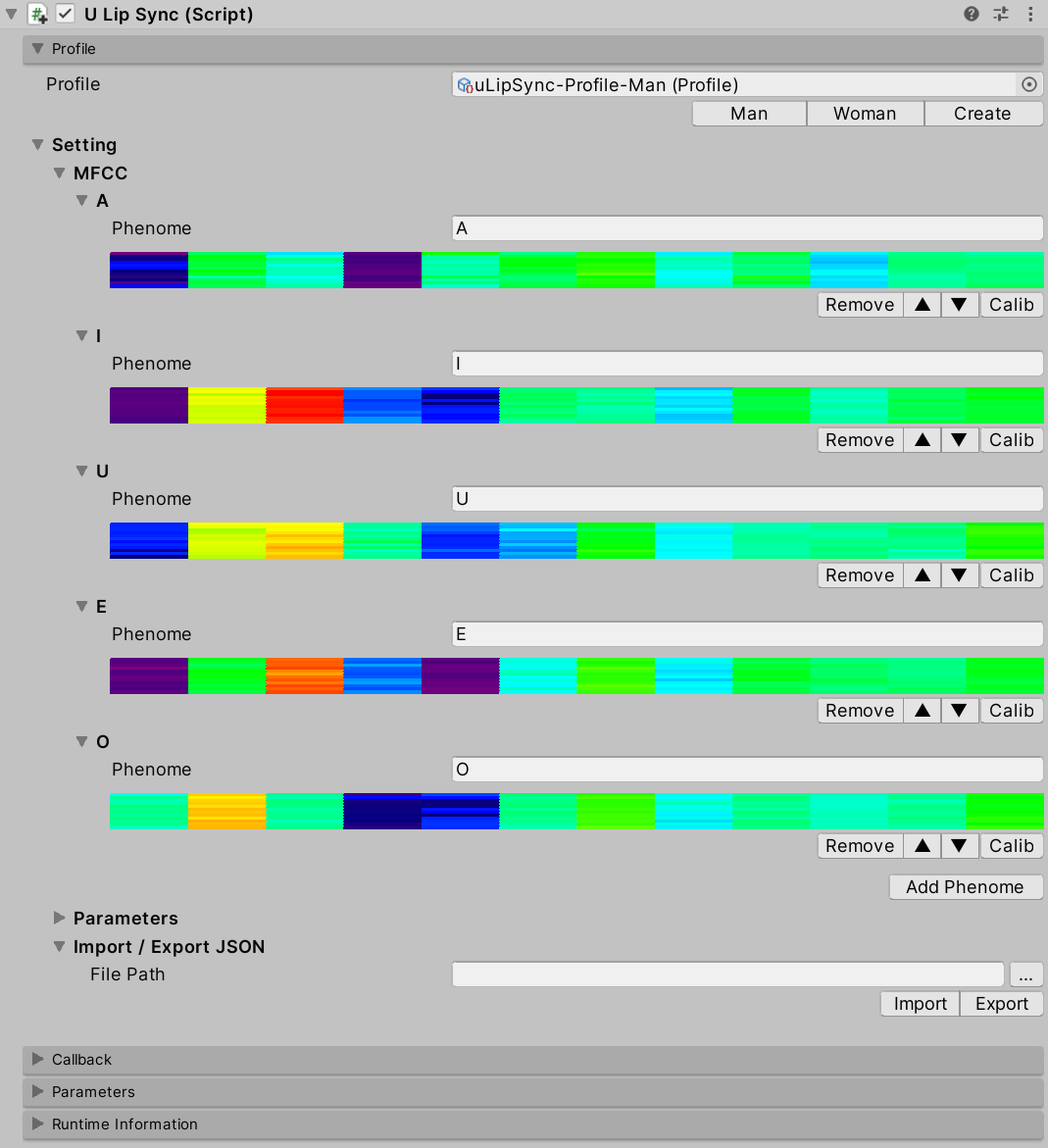

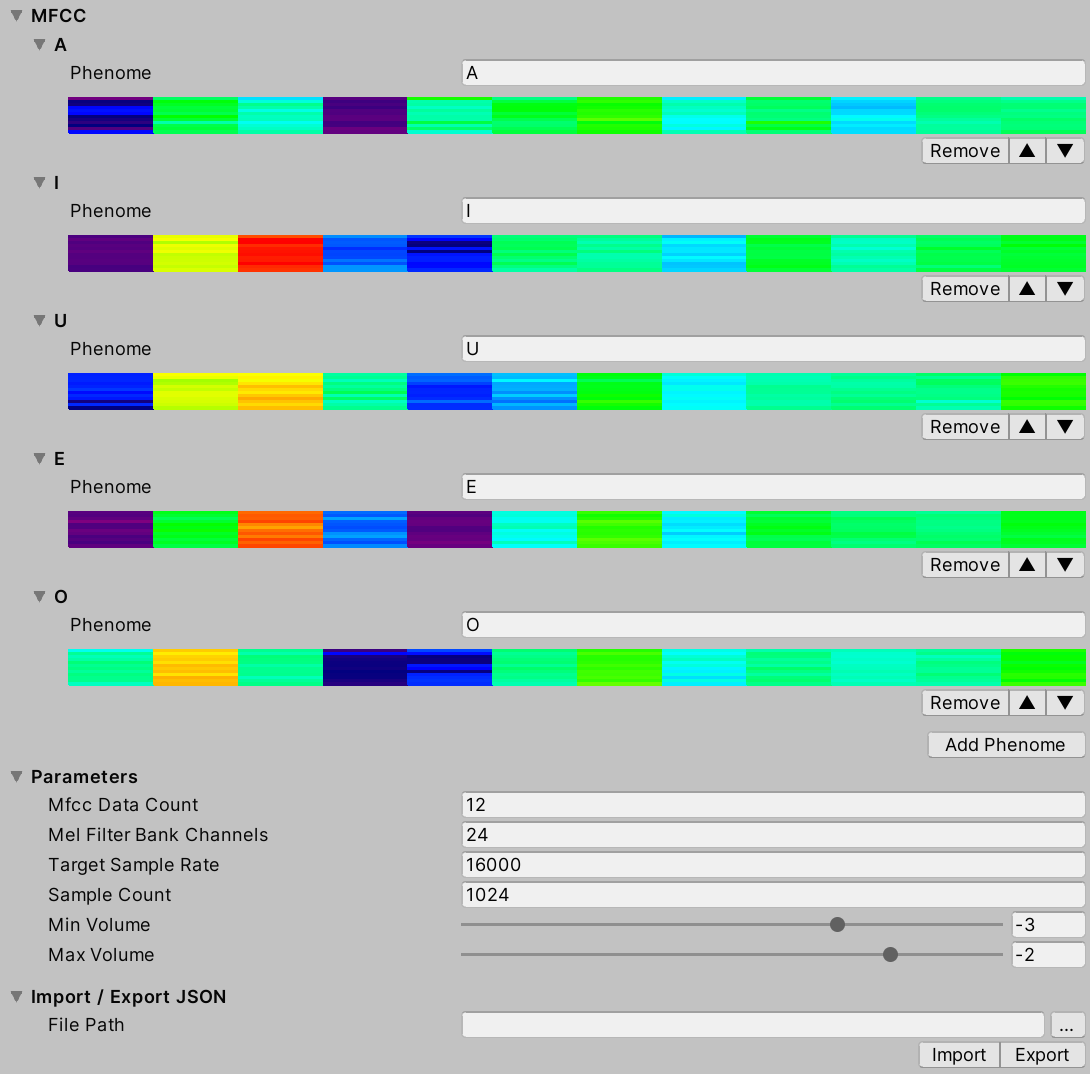

An asset called Profile is used to register these MFCCs and the related calculation parameters.

Click Add Phoneme to register a new phoneme. By registering the name of the phoneme (e.g. A, I, E, O, U) and the corresponding MFCC according to the calibration method described below, you can estimate the phoneme.

Audio data arrives through OnAudioFilterRead(), but by default it is downsampled by 1/3 to 16000 Hz.

The volume normalized using Min Volume and Max Volume is output as the volume for the callback (OnLipSyncUpdate()).

You can export the Profile to JSON or import it from JSON. See below for details.

The registered callback is issued at the time of Update() after the lip-sync calculation is finished. The LipSyncInfo structure passed as an argument looks like the following.

    public struct LipSyncInfo
    {
        public int index;
        public string phenome;
        public float volume;
        public float rawVolume;
        public float distance;
    }

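index: index of the estimated phoneme among those registered in the Profile

phenome: name of the estimated phoneme

volume: volume normalized by Min Volume and Max Volume

rawVolume: volume before normalization

distance: distance between the current MFCC and the MFCC of the estimated phoneme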
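The relation between rawVolume and volume can be illustrated with a short sketch. It assumes a linear mapping from the range between Min Volume and Max Volume onto 0 to 1, clamped at both ends; the class `VolumeNormalizer` is hypothetical, and the library may apply a different curve (for example a logarithmic one).

```csharp
using System;

// Hypothetical helper (not part of uLipSync): maps a raw volume onto 0..1
// using the Min Volume / Max Volume pair stored in the profile.
public static class VolumeNormalizer
{
    // Linear normalization with clamping; the linear curve is an assumption.
    public static float Normalize(float rawVolume, float minVolume, float maxVolume)
    {
        // Guard against an empty or inverted range.
        if (maxVolume <= minVolume) return 0f;

        float t = (rawVolume - minVolume) / (maxVolume - minVolume);
        return Math.Max(0f, Math.Min(1f, t));
    }
}
```

Under this assumption, any input at or below Min Volume maps to 0 and any input at or above Max Volume maps to 1.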

An example callback that logs these values:

    using UnityEngine;
    using uLipSync;

    public class DebugPrintLipSyncInfo : MonoBehaviour
    {
        // Register this method in the Callback section of uLipSync.
        public void OnLipSyncUpdate(LipSyncInfo info)
        {
            // The message is already interpolated, so Debug.Log is enough.
            Debug.Log(
                $"PHENOME: {info.phenome}, " +
                $"VOL: {info.volume}, " +
                $"DIST: {info.distance}");
        }
    }

If you set the volume of the AudioSource to 0, the recognition itself will not be done.

Min Volume and Max Volume registered in Profile.

Opening this FoldOut affects the performance of the game because it causes the editor to redraw every frame.

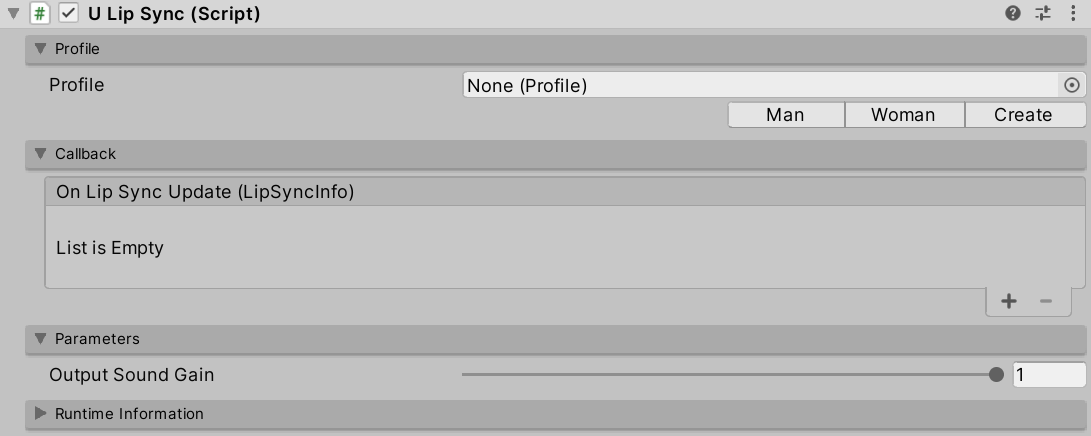

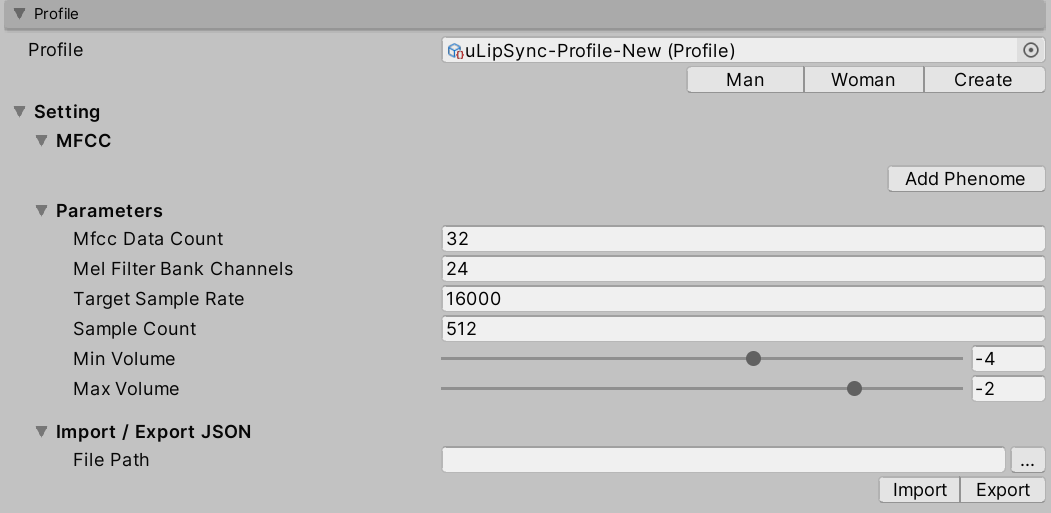

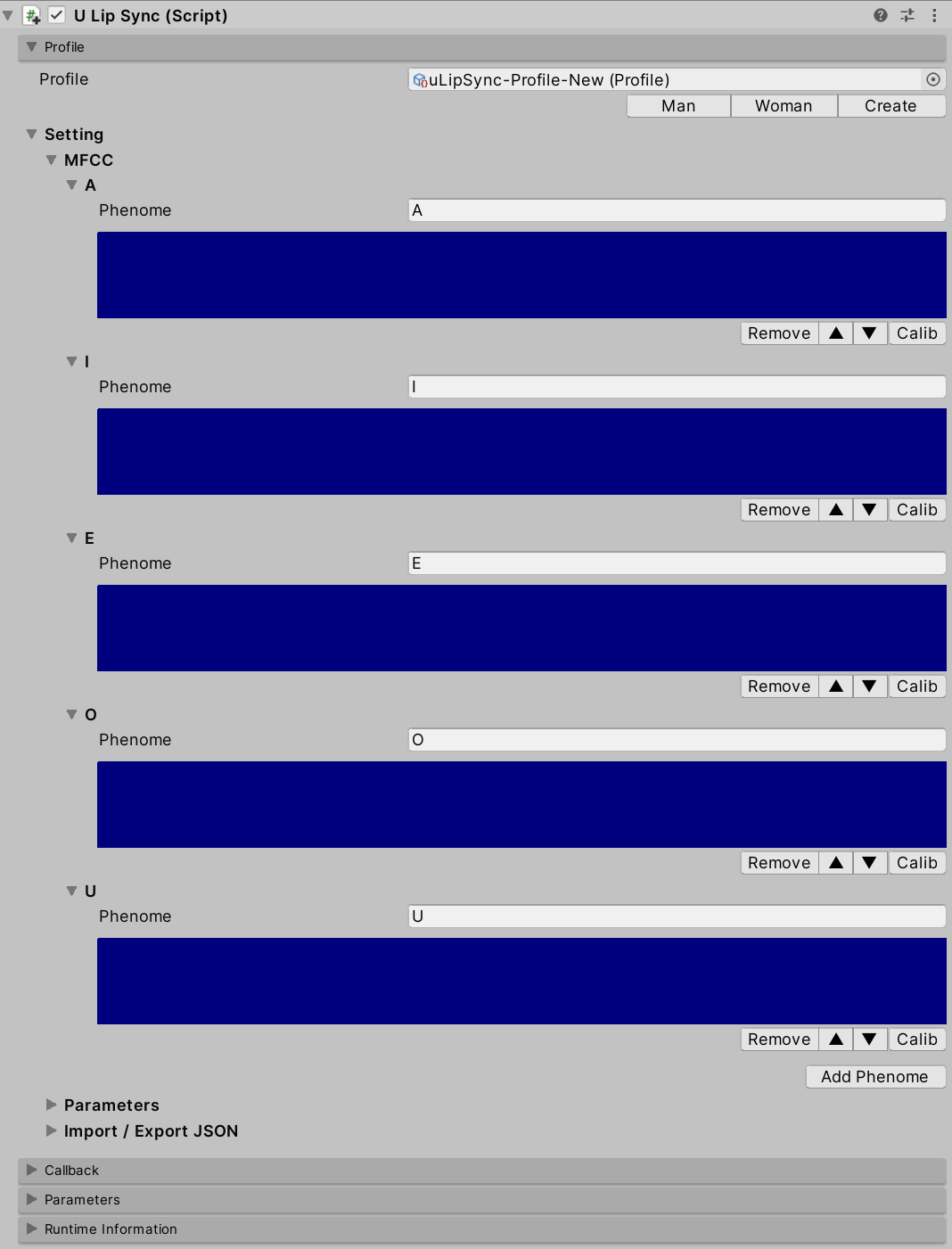

First, click the Create button under Profile to create a new profile. The created profile asset will be automatically registered to the profile of uLipSync.

Next, click the Add Phoneme button to add a phoneme, such as A, I, E, O, U.

Then start the game and hold down the Calib button for A while the voice is saying "aaaaaaa". Likewise, say "iiiiiii" while holding down the Calib button for I. Calibrate all the phonemes this way to register their MFCCs.

When calibrating with recorded audio, prepare an AudioClip for each phoneme. While one of them is playing, hold down the corresponding Calib button as described above to store the result of the sound analysis in the profile.

You can also send calibration requests from scripts by calling uLipSync.RequestCalibration(int index). The sample CalibrationByKeyboardInput.cs shows how to calibrate with the numeric keys:

    lipSync = GetComponent<uLipSync>();

    // Alpha1, Alpha2, ... map to phoneme index 0, 1, ...
    for (int i = 0; i < lipSync.profile.mfccs.Count; ++i)
    {
        var key = (KeyCode)((int)(KeyCode.Alpha1) + i);
        if (Input.GetKey(key)) lipSync.RequestCalibration(i);
    }

Since Profile is a ScriptableObject, changes made in a build are not saved. Instead, you can export and import the profile in JSON format. From a script, do the following:

    var lipSync = GetComponent<uLipSync>();
    var profile = lipSync.profile;

    // Export
    profile.Export(path);

    // Import
    profile.Import(path);

Examples include Unity-chan assets.

© Unity Technologies Japan/UCL

FAQs

An MFCC-based LipSync plugin for Unity using Job and Burst Compiler

The npm package com.hecomi.ulipsync receives a total of 224 weekly downloads. As such, the popularity of com.hecomi.ulipsync was classified as not popular.

We found that com.hecomi.ulipsync demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 0 open source maintainers collaborating on the project.
