Security News

Research

Data Theft Repackaged: A Case Study in Malicious Wrapper Packages on npm

The Socket Research Team breaks down a malicious wrapper package that uses obfuscation to harvest credentials and exfiltrate sensitive data.

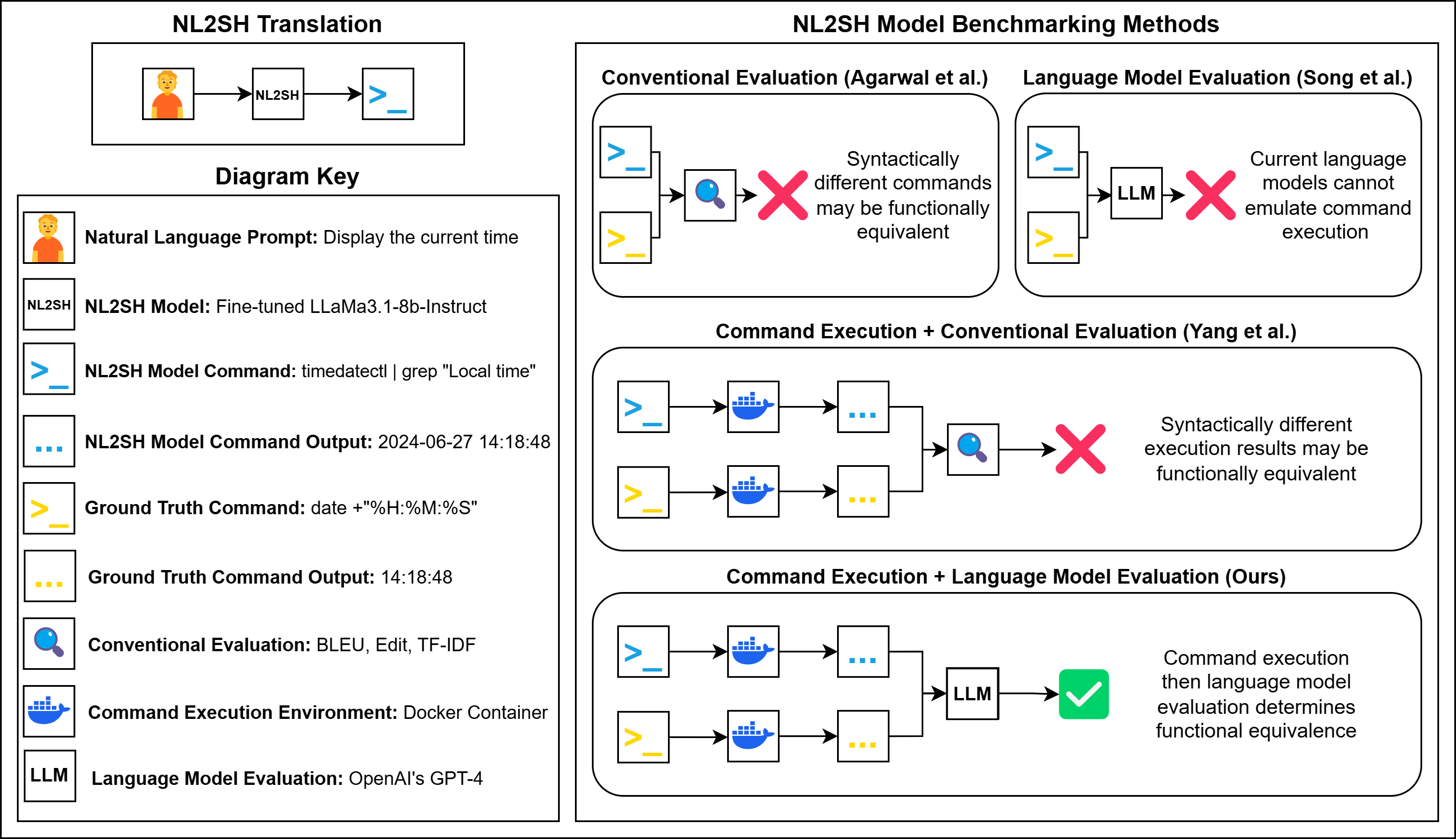

A fork of the InterCode benchmark used to evaluate natural language to Bash command translation.


Dataset

PyPI Package

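```bash
# Create and activate a Python 3.12 virtual environment
# (apt install usually requires root privileges, e.g. via sudo)
apt install python3.12-venv
python3 -m venv icalfa-venv
source icalfa-venv/bin/activate

# Install the benchmark package
pip install icalfa

# Install Ollama and pull the models used by the "ollama" and "embed" evaluation modes
curl -fsSL https://ollama.com/install.sh | sh
ollama pull llama3.1:70b
ollama pull mxbai-embed-large
```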

```python
import os

from icalfa import submit_command
from datasets import load_dataset

# Store OpenAI key as environment variable
os.environ['ICALFA_OPENAI_API_KEY'] = '...'

# Load dataset
dataset = load_dataset("westenfelder/InterCode-ALFA-Data")['train']

# Iterate through the dataset
score = 0
for index, row in enumerate(dataset):
    # Retrieve natural language prompt
    prompt = row['query']

    # Convert natural language prompt to Bash command here

    # Submit Bash command for benchmark scoring. 0 = incorrect, 1 = correct
    score += submit_command(index=index, command="...")

    # Retrieve ground truth commands
    ground_truth_command = row['gold']
    ground_truth_command2 = row['gold2']

# Print the benchmark result
print(score / len(dataset))
```
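The loop above can also be wrapped in a small harness that records which examples failed, which makes it easier to compare wrong answers against the `gold` and `gold2` columns. This is a minimal sketch under stated assumptions: `run_benchmark` and `translate` are hypothetical names, and `submit` stands in for `icalfa.submit_command`, assumed (per the comment above) to return 1 for a correct command and 0 otherwise. Injecting it lets the logic run without the benchmark containers.

```python
from typing import Callable, Iterable, Mapping


def run_benchmark(
    rows: Iterable[Mapping[str, str]],
    translate: Callable[[str], str],
    submit: Callable[..., int],
) -> tuple[float, list[dict]]:
    """Score every row and collect the failed examples for later inspection.

    `translate` is a hypothetical natural-language-to-Bash function.
    `submit` is assumed to behave like icalfa's submit_command (returns 0 or 1).
    """
    score = 0
    total = 0
    failures = []
    for index, row in enumerate(rows):
        command = translate(row["query"])
        result = submit(index=index, command=command)
        score += result
        total += 1
        if result == 0:
            # Keep both reference commands next to the attempted command.
            failures.append({
                "index": index,
                "query": row["query"],
                "command": command,
                "gold": row["gold"],
                "gold2": row["gold2"],
            })
    accuracy = score / total if total else 0.0
    return accuracy, failures


# Hypothetical wiring with the real package (requires the full benchmark setup):
# from icalfa import submit_command
# accuracy, failures = run_benchmark(dataset, my_translate, submit_command)
```

Returning the failures alongside the accuracy keeps the scoring identical to the loop above while leaving the error analysis to the caller.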

```python
# Each call below assumes `index` and `command` as in the loop above.

# By default, icalfa uses OpenAI's GPT-4 model and expects an API key
submit_command(index, command, eval_mode="openai", eval_param="gpt-4-0613")

# A local model can be used via Ollama
submit_command(index, command, eval_mode="ollama", eval_param="llama3.1:70b")

# You can also test the original method used in Princeton's InterCode benchmark
submit_command(index, command, eval_mode="tfidf")

# An embedding-based comparison method is also available.
# This uses the mxbai-embed-large model via Ollama, with eval_param specifying the similarity threshold
submit_command(index, command, eval_mode="embed", eval_param=0.75)
```
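The `embed` mode is described only as an embedding comparison with a similarity threshold. The sketch below illustrates that general idea and is an assumption, not icalfa's implementation: it uses cosine similarity (the metric icalfa actually applies is not stated here), toy vectors stand in for `mxbai-embed-large` embeddings, and `is_match` is a hypothetical helper that mirrors the 0/1 score.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_match(candidate_vec: Sequence[float], reference_vec: Sequence[float],
             threshold: float = 0.75) -> int:
    """Return 1 if the similarity reaches the threshold, else 0."""
    return int(cosine_similarity(candidate_vec, reference_vec) >= threshold)


# Toy vectors standing in for real embeddings of a candidate and a reference.
print(is_match([1.0, 0.0, 1.0], [1.0, 0.1, 0.9]))  # → 1 (nearly the same direction)
print(is_match([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]))  # → 0 (orthogonal)
```

Raising the threshold makes the check stricter: two vectors 45 degrees apart (similarity about 0.707) fail at 0.75 but pass at 0.7.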

```bash
# Stop containers
docker stop $(docker ps -a --filter "name=intercode*" -q)

# Delete containers
docker rm $(docker ps -a --filter "name=intercode*" -q)
```
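If no InterCode containers exist, `docker ps ... -q` prints nothing, so `docker stop` and `docker rm` are called without any container argument and exit with an error. A minimal Python sketch that skips those calls in that case is shown below; `remove_intercode_containers` and `run_docker` are hypothetical helpers, and the `runner` parameter exists only so the logic can be tested without Docker.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], str]


def run_docker(args: Sequence[str]) -> str:
    """Run a docker CLI command and return its stdout (raises CalledProcessError on failure)."""
    completed = subprocess.run(
        ["docker", *args], check=True, capture_output=True, text=True
    )
    return completed.stdout


def remove_intercode_containers(runner: Runner = run_docker) -> list[str]:
    """Stop and delete containers matched by the same name filter as above.

    Returns the container IDs that were removed; does nothing when there are none.
    """
    output = runner(["ps", "-a", "--filter", "name=intercode*", "-q"])
    ids = output.split()
    if ids:
        runner(["stop", *ids])
        runner(["rm", *ids])
    return ids


# Usage (requires Docker): remove_intercode_containers()
```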

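```bash
# Update the version in pyproject.toml and __init__.py first

# Rebuild the distribution (requires the build and twine packages)
rm -rf dist
python3 -m build

# Upload to PyPI
python3 -m twine upload --repository pypi dist/*

# Install the new release
pip install --upgrade icalfa
```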
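The first release step, updating the version in both `pyproject.toml` and `__init__.py`, is easy to get out of sync. The sketch below bumps the patch number in both files and checks that they agree. It rests on assumptions not stated in the source: that `pyproject.toml` has a line of the form `version = "X.Y.Z"` (the first such line is used) and `__init__.py` has `__version__ = "X.Y.Z"`. `bump_patch` and `bump_files` are hypothetical helpers.

```python
import re
from pathlib import Path

# Assumed formats: `version = "1.2.3"` in pyproject.toml and `__version__ = "1.2.3"` in __init__.py.
PYPROJECT_RE = re.compile(r'^(version\s*=\s*")(\d+)\.(\d+)\.(\d+)(")', re.MULTILINE)
INIT_RE = re.compile(r'^(__version__\s*=\s*")(\d+)\.(\d+)\.(\d+)(")', re.MULTILINE)


def bump_patch(text: str, pattern: re.Pattern) -> tuple[str, str]:
    """Increment the patch number of the first matching version string."""
    match = pattern.search(text)
    if match is None:
        raise ValueError("no version string found")
    major, minor, patch = match.group(2), match.group(3), int(match.group(4))
    new_version = f"{major}.{minor}.{patch + 1}"
    # Rebuild the matched span: prefix, new version, closing quote.
    updated = (text[: match.start()] + match.group(1) + new_version
               + match.group(5) + text[match.end():])
    return updated, new_version


def bump_files(pyproject: Path, init_file: Path) -> str:
    """Bump both files, refusing to write if they would disagree."""
    new_pyproject, v1 = bump_patch(pyproject.read_text(), PYPROJECT_RE)
    new_init, v2 = bump_patch(init_file.read_text(), INIT_RE)
    if v1 != v2:
        raise ValueError(f"version mismatch: {v1} vs {v2}")
    pyproject.write_text(new_pyproject)
    init_file.write_text(new_init)
    return v1


# Hypothetical usage (the package path is an assumption):
# bump_files(Path("pyproject.toml"), Path("icalfa/__init__.py"))
```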

InterCode-ALFA is a fork of the InterCode benchmark developed by the Princeton NLP group.

InterCode Website

InterCode PyPI Package

FAQs


We found that icalfa shows a healthy release cadence and project activity: its last version was released less than a year ago. The project has one open-source maintainer.

