Security News

Research

Data Theft Repackaged: A Case Study in Malicious Wrapper Packages on npm

The Socket Research Team breaks down a malicious wrapper package that uses obfuscation to harvest credentials and exfiltrate sensitive data.

Install (or upgrade) memory_graph using pip:

```shell
pip install --upgrade memory_graph
```

Additionally, Graphviz needs to be installed.

In Python, assigning the list from variable a to variable b causes both variables to reference the same list object, so they share the data. Consequently, any change applied through one variable also affects the other. This behavior can lead to elusive bugs if a programmer incorrectly assumes that lists a and b are independent.


The fact that a and b share data cannot be verified by printing the lists. It can be verified by comparing the identities of both variables using the id() function, or by using the is comparison operator as shown in the program output below, but this quickly becomes impractical for larger programs.

```
a: 4, 3, 2, 1
b: 4, 3, 2, 1
ids: 126432214913216 126432214913216
identical?: True
```
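The program that produced this output is not shown here. The following is a minimal plain-Python sketch of the same aliasing check; the exact print formatting is an assumption, and the id() values will differ on every run.

```python
# Sketch: assignment makes b an alias of a (no copy is made)
a = [4, 3, 2]
b = a          # b now references the same list object as a
b.append(1)    # mutating through b is visible through a

print("a:", ", ".join(str(x) for x in a))
print("b:", ", ".join(str(x) for x in b))
print("ids:", id(a), id(b))      # the same number twice, since there is only one object
print("identical?:", a is b)     # True

# A real copy is equal in value but is a different object
independent = a.copy()
print("equal?:", independent == a, "identical?:", independent is a)  # equal?: True identical?: False
```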

A better way to understand what data is shared is to draw a graph of the data using the memory_graph package.

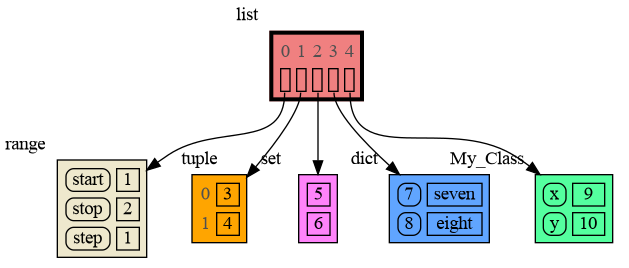

The memory_graph package can graph many different data types.

```python
import memory_graph

class MyClass:

    def __init__(self, x, y):
        self.x = x
        self.y = y

data = [ range(1, 2), (3, 4), {5, 6}, {7:'seven', 8:'eight'}, MyClass(9, 10) ]
memory_graph.show(data, block=True)
```

With block=True the program blocks until the ENTER key is pressed, so you can view the graph before program execution continues (and possibly shows later graphs). Instead of showing the graph, you can also render it to an output file of your choosing (see Graphviz Output Formats), for example:

```python
memory_graph.render(data, "my_graph.pdf")
memory_graph.render(data, "my_graph.png")
memory_graph.render(data, "my_graph.gv") # Graphviz DOT file
```

Bas Terwijn

Inspired by Python Tutor.

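The Python Data Model makes a distinction between immutable and mutable types:

immutable: bool, int, float, complex, str, tuple, bytes, frozenset

mutable: list, set, dict, bytearray, and (by default) instances of user-defined classes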

In the code below, variables a and b both reference the same int value 10. An int is an immutable type, so when we change variable a its value cannot be mutated in place. Instead, a new int object with value 11 is created and assigned to a, and afterwards a and b reference different values.

```python
import memory_graph
memory_graph.config.no_reference_types.pop(int, None) # show references to ints

a = 10
b = a
memory_graph.render(locals(), 'immutable1.png')

a += 1
memory_graph.render(locals(), 'immutable2.png')
```

| immutable1.png | immutable2.png |
|---|---|

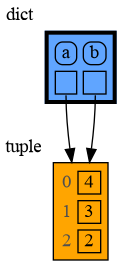

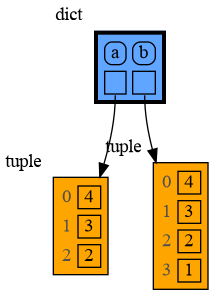

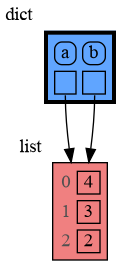

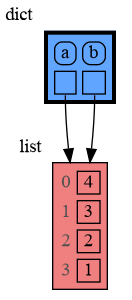

With mutable types the result is different. In the code below, variables a and b both reference the same list value [4, 3, 2]. A list is a mutable type, so when we change variable a its value can be mutated in place, and afterwards a and b still reference the same, now changed, value. Thus changing a also changes b and vice versa. Sometimes we want this, but other times we don't, and then we have to make a copy so that b is independent from a.

```python
import memory_graph

a = [4, 3, 2]
b = a
memory_graph.render(locals(), 'mutable1.png')

a.append(1)
memory_graph.render(locals(), 'mutable2.png')
```

| mutable1.png | mutable2.png |
|---|---|

Python makes this distinction between mutable and immutable types because a value of a mutable type can be large, so making a copy each time we change it would be slow. A value of an immutable type, on the other hand, is generally small and therefore fast to copy.
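To make the difference concrete without memory_graph, this small sketch compares id() values before and after each change: the int variable ends up referencing a different object, while the list variable keeps referencing the same one.

```python
# Sketch: rebinding an immutable int versus mutating a list in place
a = 10
b = a
id_before = id(a)
a += 1                                  # ints cannot change in place: a is rebound to a new int object
int_same_object = id(a) == id_before    # False: a now references a different object

x = [4, 3, 2]
y = x
id_before_list = id(x)
x.append(1)                             # lists change in place: x still references the same object
list_same_object = id(x) == id_before_list  # True

print(a, b, int_same_object)    # 11 10 False
print(x, y, list_same_object)   # [4, 3, 2, 1] [4, 3, 2, 1] True
```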

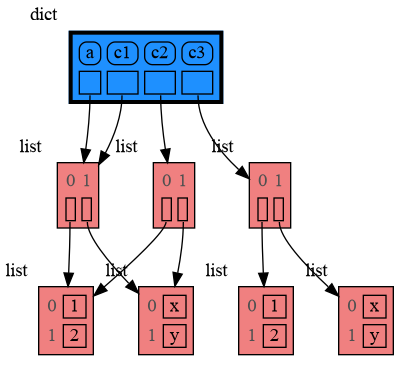

Python offers three different "copy" options that we will demonstrate using a nested list:

```python
import memory_graph
import copy

a = [ [1, 2], ['x', 'y'] ] # a nested list (a list containing lists)

# three different ways to make a "copy" of 'a':
c1 = a
c2 = copy.copy(a) # equivalent to: a.copy(), a[:], or list(a)
c3 = copy.deepcopy(a)

memory_graph.show(locals())
```

c1 is an assignment: all the data is shared, nothing is copied.

c2 is a shallow copy: only the data referenced by the first reference (here the outer list) is copied, and the underlying data (the inner lists) is shared.

c3 is a deep copy: all the data is copied, nothing is shared.
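The three cases can also be checked with the is operator, without drawing a graph. This sketch uses only the standard copy module and then mutates an inner list to show which "copies" observe the change.

```python
import copy

a = [[1, 2], ['x', 'y']]
c1 = a                  # assignment: the very same outer list
c2 = copy.copy(a)       # shallow copy: new outer list, shared inner lists
c3 = copy.deepcopy(a)   # deep copy: new outer list and new inner lists

print(c1 is a)                  # True
print(c2 is a, c2[0] is a[0])   # False True
print(c3 is a, c3[0] is a[0])   # False False

a[0].append(3)                  # mutate an inner list through a
print(c1[0], c2[0], c3[0])      # [1, 2, 3] [1, 2, 3] [1, 2]
```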

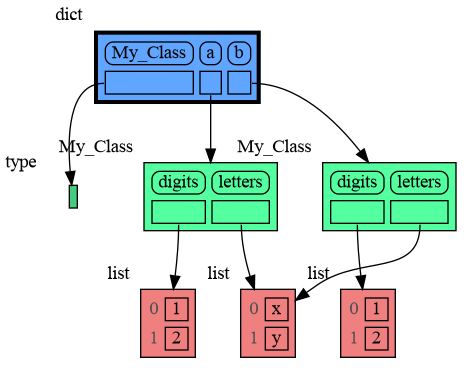

We can write our own custom copy function or method in case the three "copy" options don't do what we want. For example the copy() method of My_Class in the code below copies the digits but shares the letters between the two objects.

```python
import memory_graph
import copy

class My_Class:

    def __init__(self):
        self.digits = [1, 2]
        self.letters = ['x', 'y']

    def copy(self): # custom copy method copies the digits but shares the letters
        c = copy.copy(self)
        c.digits = copy.copy(self.digits)
        return c

a = My_Class()
b = a.copy()

memory_graph.show(locals())
```
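As an alternative to a method named copy(), Python's copy module also honors a `__copy__()` method defined on a class, so that `copy.copy(obj)` itself follows the custom policy. The sketch below is one possible way to write that hook for the same digits/letters policy; the class name CustomCopyClass is hypothetical. It builds the new instance with `__new__` because calling `copy.copy(self)` inside `__copy__` would recurse.

```python
import copy

class CustomCopyClass:  # hypothetical name, same data as My_Class above
    def __init__(self):
        self.digits = [1, 2]
        self.letters = ['x', 'y']

    def __copy__(self):
        # Create an instance without calling __init__, then copy the attribute references
        cls = type(self)
        c = cls.__new__(cls)
        c.__dict__.update(self.__dict__)  # shares every attribute...
        c.digits = list(self.digits)      # ...except digits, which gets its own list
        return c

a = CustomCopyClass()
b = copy.copy(a)  # dispatches to CustomCopyClass.__copy__
print(b.digits is a.digits, b.letters is a.letters)  # False True
```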

Often it is useful to graph all the local variables using:

```python
memory_graph.show(locals(), block=True)
```

This is so common that function d() is available as an alias for it, for easier debugging. Additionally, it can optionally log the data by printing it. For example:

```python
import memory_graph

squares = []
squares_collector = []
for i in range(1,6):
    squares.append(i**2)
    squares_collector.append(squares.copy())
    memory_graph.d(log=True)
```

which after pressing ENTER a number of times results in:

```
squares: [1, 4, 9, 16, 25]
squares_collector: [[1], [1, 4], [1, 4, 9], [1, 4, 9, 16], [1, 4, 9, 16, 25]]
i: 5
```
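The squares.copy() call in the loop above matters. This plain-Python sketch contrasts appending the list itself with appending a copy: without the copy, every element of the collector is the same list object, so they all show the final value.

```python
squares = []
aliases = []    # appends squares itself (no copy)
snapshots = []  # appends a copy, like squares_collector above

for i in range(1, 6):
    squares.append(i**2)
    aliases.append(squares)            # every element references the same list
    snapshots.append(squares.copy())   # every element is an independent list

print(all(x is squares for x in aliases))  # True
print(snapshots)  # [[1], [1, 4], [1, 4, 9], [1, 4, 9, 16], [1, 4, 9, 16, 25]]
```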

Function d() has these default arguments:

```python
def d(data=None, graph=True, log=False, block=True):
```

When data is not specified, it defaults to locals().

To print to a log file instead of standard output use:

```python
memory_graph.log_file = open("my_log_file.txt", "w")
```
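The data=None default of d() means it falls back to the caller's local variables. One way a helper can do that in CPython is to read the caller's frame through `inspect.currentframe().f_back`. The sketch below illustrates the idea with a hypothetical debug_locals() function that only logs; it is not memory_graph's actual implementation, and `inspect.currentframe()` may return None on Python implementations without stack frame support.

```python
import inspect

def debug_locals(data=None, log=True):
    """Hypothetical stand-in for d(): no graph, it only logs and returns the data."""
    if data is None:
        frame = inspect.currentframe()
        # f_back is the frame of the function that called debug_locals()
        data = dict(frame.f_back.f_locals)
        del frame  # avoid keeping a reference cycle to the frame
    if log:
        for name, value in data.items():
            print(f"{name}: {value}")
    return data

def demo():
    squares = [1, 4, 9]
    i = 3
    return debug_locals()

captured = demo()  # prints "squares: [1, 4, 9]" and "i: 3"
```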

Alternatively, you get an even better debugging experience when you set the expression:

```python
memory_graph.render(locals(), "my_debug_graph.pdf")
```

as a watchpoint in a debugger tool and open the "my_debug_graph.pdf" output file. This continuously shows the graph of all the local variables while debugging and avoids having to add any memory_graph show(), render(), or d() calls to your code.

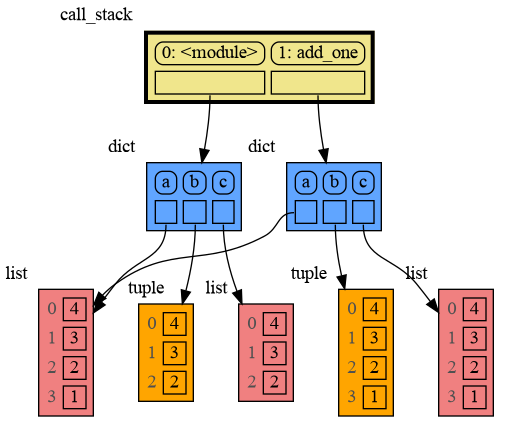

Function memory_graph.get_call_stack() returns the full call stack, which holds the local variables of each called function. This lets us visualize the local variables of all called functions simultaneously, which shows whether variables of different called functions share any data. Here, for example, we call function add_one() with arguments a, b, and c; it tries to add one to each of its arguments (for the lists, by appending 1).

```python
import memory_graph

def add_one(a, b, c):
    a += 1
    b.append(1)
    c.append(1)
    memory_graph.show(memory_graph.get_call_stack())

a = 10
b = [4, 3, 2]
c = [4, 3, 2]

add_one(a, b, c.copy())
print(f"a:{a} b:{b} c:{c}")
```

As a is of immutable type 'int', and we call the function with a copy of c, only b is shared with the caller, so only b is changed in the calling stack frame, as reflected in the printed output:

```
a:10 b:[4, 3, 2, 1] c:[4, 3, 2]
```
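Because the caller's int cannot be changed through the parameter, a function that should change it has to return the new value. This is a plain-Python variation of the example above (without memory_graph) in which add_one() returns its updated a, and the caller keeps a reference to the copy it passes so the change to that copy stays visible.

```python
def add_one(a, b, c):
    a += 1       # rebinds the local name only
    b.append(1)  # mutates the list shared with the caller
    c.append(1)  # mutates whatever list was passed in
    return a     # the new int must be returned to reach the caller

a = 10
b = [4, 3, 2]
c = [4, 3, 2]
c_copy = c.copy()
a = add_one(a, b, c_copy)
print(f"a:{a} b:{b} c:{c} c_copy:{c_copy}")  # a:11 b:[4, 3, 2, 1] c:[4, 3, 2] c_copy:[4, 3, 2, 1]
```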

The call stack also helps to visualize how recursion works. Here we show each step of the recursive computation of factorial(3):

```python
import memory_graph

def factorial(n):
    if n==0:
        return 1
    memory_graph.show( memory_graph.get_call_stack(), block=True )
    result = n * factorial(n-1)
    memory_graph.show( memory_graph.get_call_stack(), block=True )
    return result

print(factorial(3))
```

and the final result is: 1 x 2 x 3 = 6
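To see what a call stack holds at the deepest recursive call, here is a minimal sketch that collects each frame's local variables with the standard inspect module. The helper call_stack_sketch() is hypothetical and only illustrates the idea; memory_graph.get_call_stack() may be implemented differently.

```python
import inspect

def call_stack_sketch():
    """Hypothetical helper: list of (function name, copy of its locals), outermost frame first."""
    frames = inspect.stack(context=0)[1:]  # entry 0 is this helper itself, so skip it
    try:
        return [(info.function, dict(info.frame.f_locals)) for info in reversed(frames)]
    finally:
        del frames  # drop frame references promptly to avoid reference cycles

snapshots = []

def factorial(n):
    if n == 0:
        snapshots.append(call_stack_sketch())  # capture the stack at the deepest call
        return 1
    return n * factorial(n - 1)

result = factorial(3)
factorial_locals = [local_vars for name, local_vars in snapshots[0] if name == "factorial"]
print(result, [local_vars["n"] for local_vars in factorial_locals])  # 6 [3, 2, 1, 0]
```

Each recursive call has its own frame with its own n, which is exactly what the graphs above show side by side.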

The memory_graph.get_call_stack() doesn't work well in a watchpoint context in most debuggers because debuggers introduce additional stack frames that cause problems. Use these alternative functions for various debuggers to filter out these problematic stack frames:

| debugger | function to get the call stack |
|---|---|
| pdb, pudb | memory_graph.get_call_stack_pdb() |
| Visual Studio Code | memory_graph.get_call_stack_vscode() |
| PyCharm | memory_graph.get_call_stack_pycharm() |

For other debuggers, invoke this function within the watchpoint context:

```python
memory_graph.save_call_stack("call_stack.txt")
```

Then, in the "call_stack.txt" file, identify the slice of functions you wish to include in the call stack; more specifically, choose the function after which and the function up to which the call stack should be sliced. Then call this function to get the desired call stack to show in the graph:

```python
memory_graph.get_call_stack_after_up_to(after_function, up_to_function="<module>")
```

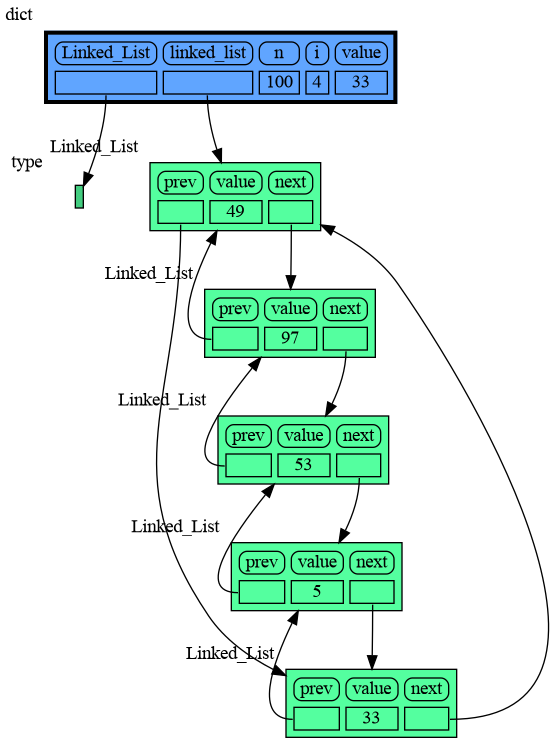

The memory_graph package can be very useful in a course about data structures. Some examples:

```python
import memory_graph
import random
random.seed(0) # use same random numbers each run

class Node:

    def __init__(self, value):
        self.prev = None
        self.value = value
        self.next = None

class LinkedList:

    def __init__(self):
        self.head = None
        self.tail = None

    def add_front(self, value):
        new_node = Node(value)
        if self.head is None:
            self.head = new_node
            self.tail = new_node
        else:
            new_node.next = self.head
            self.head.prev = new_node
            self.head = new_node
        memory_graph.d() # <--- draw graph

linked_list = LinkedList()
n = 100
for i in range(n):
    new_value = random.randrange(n)
    linked_list.add_front(new_value)
```
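A graph makes it easy to spot a broken prev or next reference; the same invariant can also be checked in code. This sketch repeats the list classes without memory_graph and adds two hypothetical traversal methods, forward() and backward(), to confirm that the prev links mirror the next links.

```python
import random

class Node:
    def __init__(self, value):
        self.prev = None
        self.value = value
        self.next = None

class LinkedList:
    def __init__(self):
        self.head = None
        self.tail = None

    def add_front(self, value):
        new_node = Node(value)
        if self.head is None:
            self.head = new_node
            self.tail = new_node
        else:
            new_node.next = self.head
            self.head.prev = new_node
            self.head = new_node

    def forward(self):
        """Values from head to tail, following next references."""
        values, node = [], self.head
        while node is not None:
            values.append(node.value)
            node = node.next
        return values

    def backward(self):
        """Values from tail to head, following prev references."""
        values, node = [], self.tail
        while node is not None:
            values.append(node.value)
            node = node.prev
        return values

random.seed(0)
inserted = [random.randrange(10) for _ in range(10)]
linked_list = LinkedList()
for value in inserted:
    linked_list.add_front(value)

print(linked_list.forward() == inserted[::-1])  # True: add_front reverses insertion order
print(linked_list.backward() == inserted)       # True: prev links are consistent with next links
```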

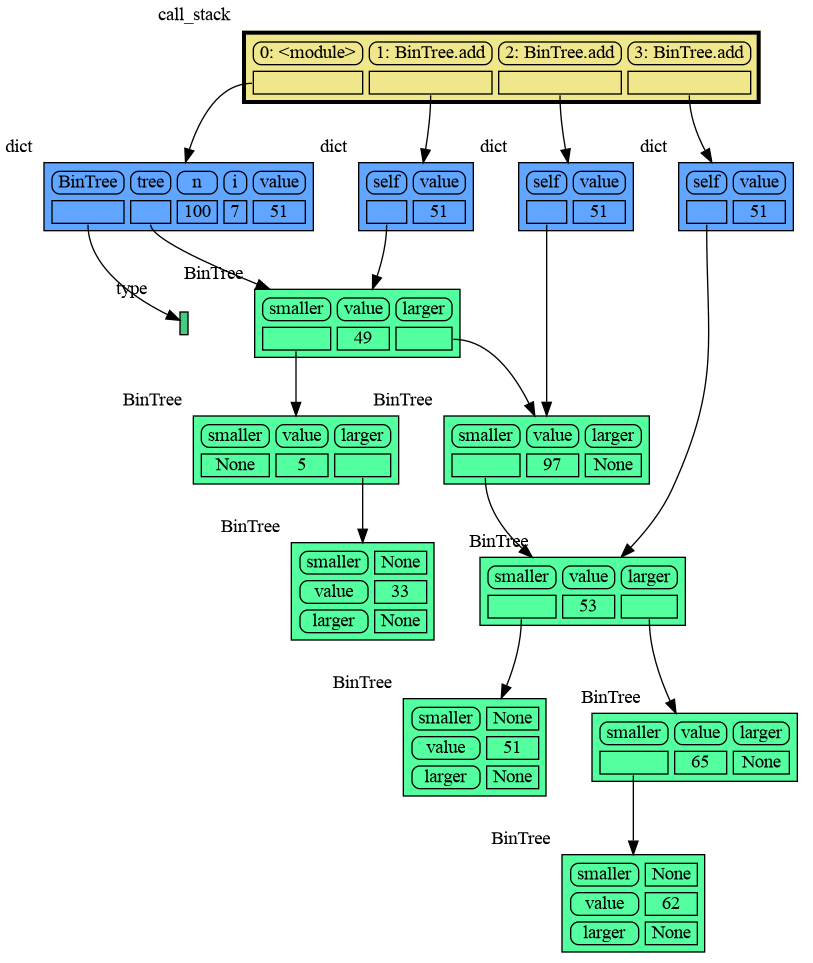

```python
import memory_graph
import random
random.seed(0) # use same random numbers each run

class Node:

    def __init__(self, value):
        self.smaller = None
        self.value = value
        self.larger = None

class BinTree:

    def __init__(self):
        self.root = None

    def add_recursive(self, new_value, node):
        if new_value < node.value:
            if node.smaller is None:
                node.smaller = Node(new_value)
            else:
                self.add_recursive(new_value, node.smaller)
        else:
            if node.larger is None:
                node.larger = Node(new_value)
            else:
                self.add_recursive(new_value, node.larger)
        memory_graph.d() # <--- draw graph

    def add(self, value):
        if self.root is None:
            self.root = Node(value)
        else:
            self.add_recursive(value, self.root)

tree = BinTree()
n = 100
for i in range(n):
    new_value = random.randrange(100)
    tree.add(new_value)
```
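A property worth checking alongside the graph: an in-order traversal of a binary search tree visits the values in sorted order. This sketch keeps the insertion rule of add_recursive() above (smaller values go to smaller, equal or larger values go to larger) but, as a design choice, inserts iteratively; add() and in_order() here are hypothetical helpers, not part of memory_graph.

```python
import random

class Node:
    def __init__(self, value):
        self.smaller = None
        self.value = value
        self.larger = None

def add(root, new_value):
    """Iterative insert; returns the (possibly new) root."""
    if root is None:
        return Node(new_value)
    node = root
    while True:
        if new_value < node.value:
            if node.smaller is None:
                node.smaller = Node(new_value)
                return root
            node = node.smaller
        else:
            if node.larger is None:
                node.larger = Node(new_value)
                return root
            node = node.larger

def in_order(node, out):
    """Append values in order: smaller subtree, node, larger subtree."""
    if node is not None:
        in_order(node.smaller, out)
        out.append(node.value)
        in_order(node.larger, out)
    return out

random.seed(0)
values = [random.randrange(100) for _ in range(100)]
root = None
for v in values:
    root = add(root, v)

print(in_order(root, []) == sorted(values))  # True
```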

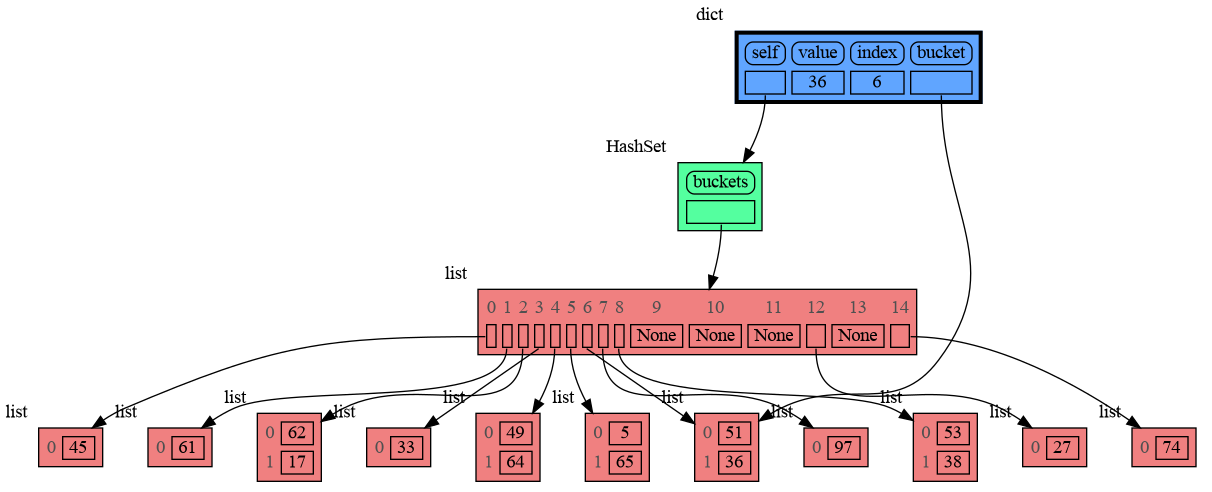

```python
import memory_graph
import random
random.seed(0) # use same random numbers each run

class HashSet:

    def __init__(self, capacity=15):
        self.buckets = [None] * capacity

    def add(self, value):
        index = hash(value) % len(self.buckets)
        if self.buckets[index] is None:
            self.buckets[index] = []
        bucket = self.buckets[index]
        bucket.append(value)
        memory_graph.d() # <--- draw graph

    def contains(self, value):
        index = hash(value) % len(self.buckets)
        if self.buckets[index] is None:
            return False
        return value in self.buckets[index]

    def remove(self, value):
        index = hash(value) % len(self.buckets)
        if self.buckets[index] is not None:
            self.buckets[index].remove(value)

hash_set = HashSet()
n = 100
for i in range(n):
    new_value = random.randrange(n)
    hash_set.add(new_value)
```
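With small non-negative integers, CPython's hash(n) equals n, which makes the bucket index easy to predict: values that are equal modulo the capacity collide into the same bucket list. This reduced sketch (no memory_graph, no remove()) shows such a collision with the default capacity of 15.

```python
class HashSet:
    def __init__(self, capacity=15):
        self.buckets = [None] * capacity

    def add(self, value):
        index = hash(value) % len(self.buckets)
        if self.buckets[index] is None:
            self.buckets[index] = []
        self.buckets[index].append(value)

    def contains(self, value):
        index = hash(value) % len(self.buckets)
        bucket = self.buckets[index]
        return bucket is not None and value in bucket

hash_set = HashSet()
for value in [3, 18, 33, 7]:
    hash_set.add(value)

# In CPython hash(n) == n for small non-negative ints, so 3, 18 and 33 share bucket 3 (n % 15 == 3)
print(hash_set.buckets[3])                          # [3, 18, 33]
print(hash_set.contains(18), hash_set.contains(4))  # True False
```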

Different aspects of memory_graph can be configured. The default configuration is reset by importing 'memory_graph.config_default'.

memory_graph.config.max_number_nodes : int

A ★ symbol indicates where the graph is cut short.

memory_graph.config.max_string_length : int

memory_graph.config.no_reference_types : dict

memory_graph.config.no_child_references_types : set

memory_graph.config.type_to_node : dict

memory_graph.config.type_to_color : dict

memory_graph.config.type_to_vertical_orientation : dict

memory_graph.config.type_to_slicer : dict

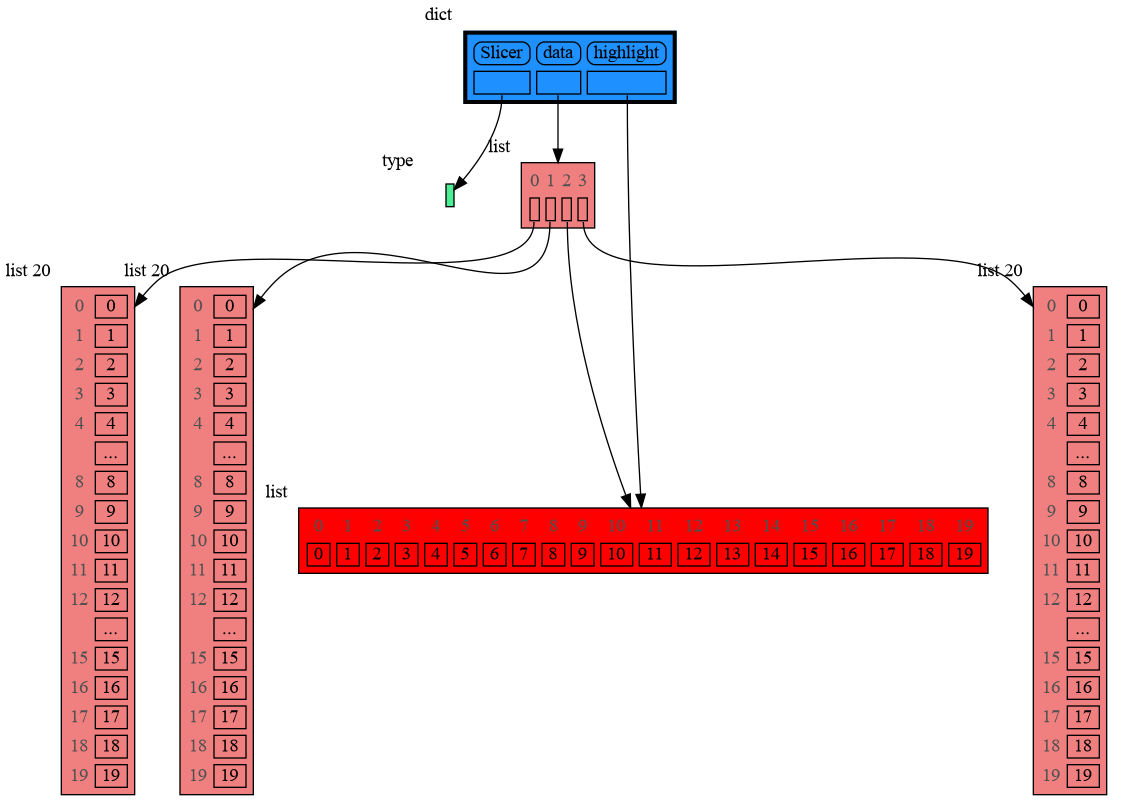

In addition to the global configuration, a temporary configuration can be set for a single show(), render(), or d() call to change the colors, orientation, and slicer. This example highlights a particular list element in red, gives it a horizontal orientation, and overrides the default slicer for that list:

```python
import memory_graph
from memory_graph.Slicer import Slicer

data = [ list(range(20)) for i in range(1,5)]
highlight = data[2]

memory_graph.show( locals(),
                   colors = {id(highlight): "red" },                # set color to "red"
                   vertical_orientations = {id(highlight): False }, # set horizontal orientation
                   slicers = {id(highlight): Slicer()}              # set no slicing
                  )
```

Different extensions are available for types from other Python packages.

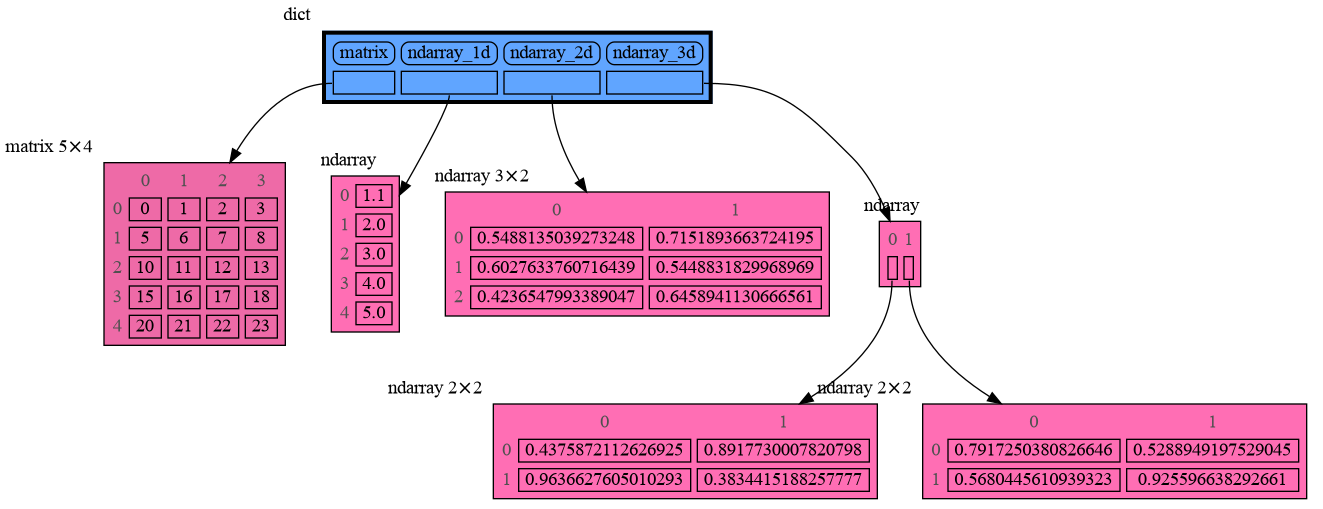

NumPy types array, matrix, and ndarray can be graphed with the "memory_graph.extension_numpy" extension:

```python
import memory_graph
import numpy as np
import memory_graph.extension_numpy
np.random.seed(0) # use same random numbers each run

array = np.array([1.1, 2, 3, 4, 5])
matrix = np.matrix([[i*20+j for j in range(20)] for i in range(20)])
ndarray = np.random.rand(20,20)
memory_graph.d()
```

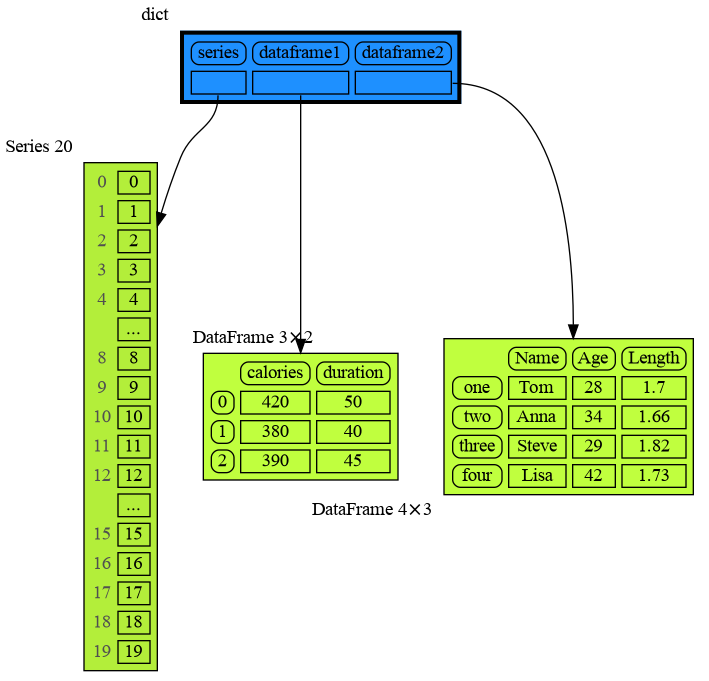

Pandas types Series and DataFrame can be graphed with the "memory_graph.extension_pandas" extension:

```python
import memory_graph
import pandas as pd
import memory_graph.extension_pandas

series = pd.Series( [i for i in range(20)] )
dataframe1 = pd.DataFrame({ "calories": [420, 380, 390],
                            "duration": [50, 40, 45] })
dataframe2 = pd.DataFrame({ 'Name'   : [ 'Tom', 'Anna', 'Steve', 'Lisa'],
                            'Age'    : [ 28, 34, 29, 42],
                            'Length' : [ 1.70, 1.66, 1.82, 1.73] },
                          index=['one', 'two', 'three', 'four']) # with row names
memory_graph.d()
```

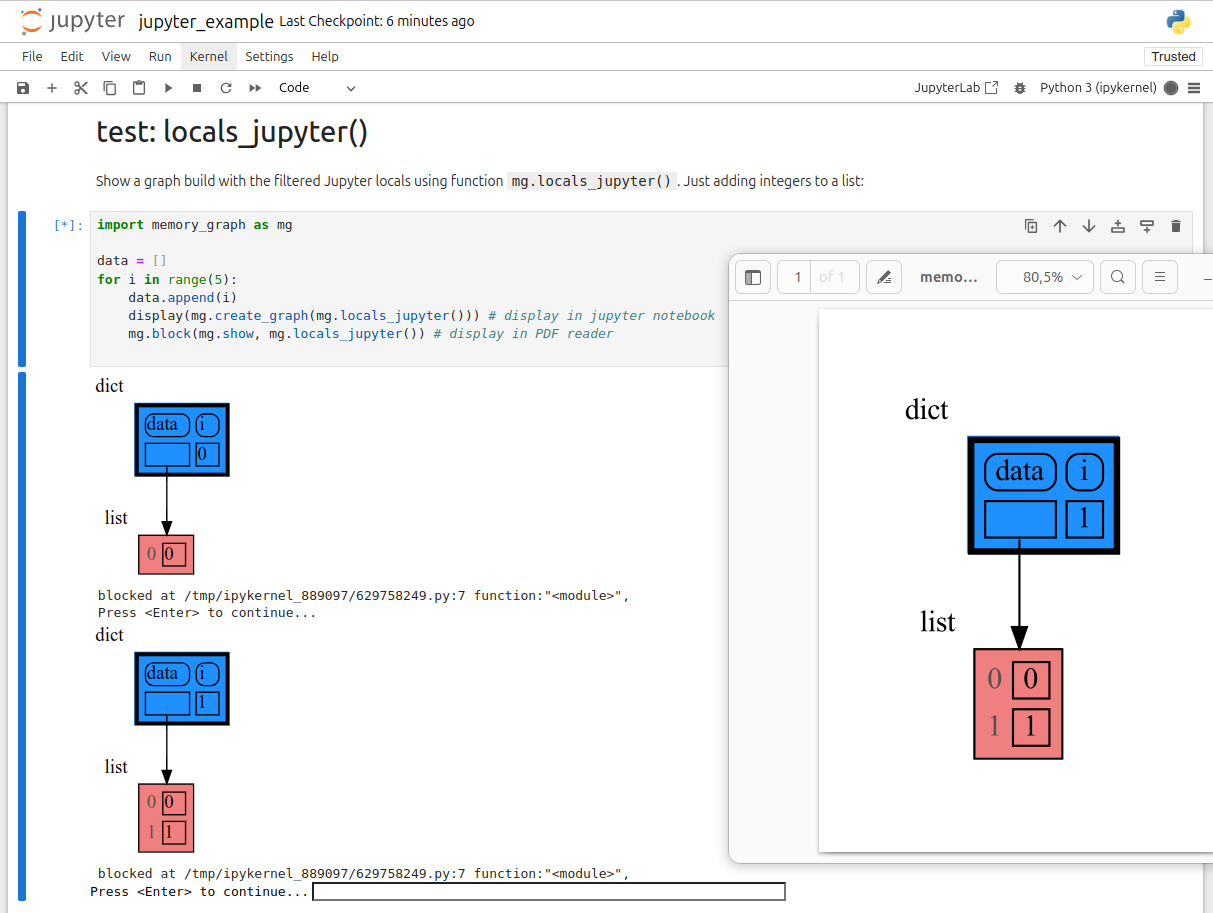

In Jupyter Notebook, locals() has additional variables that cause problems in the graph. Use memory_graph.locals_jupyter() to get the local variables with these problematic variables filtered out, and use memory_graph.get_call_stack_jupyter() to get the whole call stack with these variables filtered out.

See for example jupyter_example.ipynb.

Adobe Acrobat Reader doesn't refresh a PDF file when it changes on disk, and it blocks updates, which results in a "Could not open 'somefile.pdf' for writing : Permission denied" error. One solution is to install a PDF reader that does refresh (Evince, for example) and set it as the default PDF reader. Another solution is to render() the graph to a different output format and open it manually.

When graph edges overlap it can be hard to distinguish them. Using an interactive Graphviz viewer, such as xdot, on a '*.gv' DOT output file will help.

FAQs

Draws a graph of your data to analyze its structure.

We found that memory-graph demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.
