Security News

vlt Launches "reproduce": A New Tool Challenging the Limits of Package Provenance

vlt's new "reproduce" tool verifies npm packages against their source code, outperforming traditional provenance adoption in the JavaScript ecosystem.

Patching fork of botasaurus, The All in One Framework to build Awesome Scrapers.

The web has evolved. Finally, web scraping has too.

Botasaurus is an all-in-one web scraping framework that enables you to build awesome scrapers in less time. It solves the key pain points that we, as developers, face when scraping the modern web.

Our mission is to make creating awesome scrapers easy for everyone.

Botasaurus is built for creating awesome scrapers. It comes fully baked, with batteries included. Here is a list of things it can do that no other web scraping framework can:

undetected-chromedriver and the well-known JavaScript library puppeteer-stealth. Botasaurus can easily visit websites like https://nowsecure.nl/. With Botasaurus, you don't need to waste time finding ways to unblock a website. For usage, see this FAQ.Welcome to Botasaurus! Let’s dive right in with a straightforward example to understand how it works.

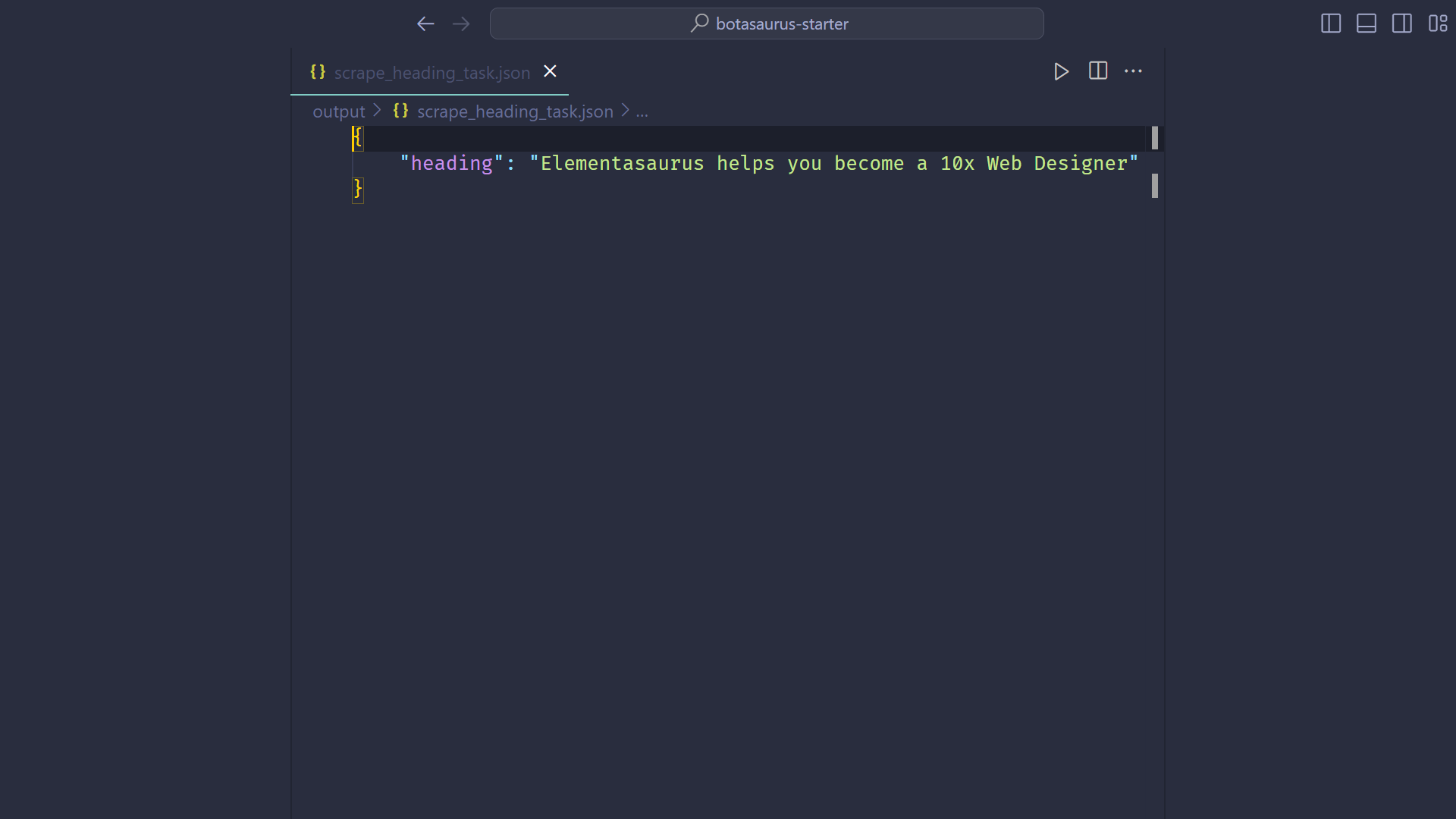

In this tutorial, we will go through the steps to scrape the heading text from https://www.omkar.cloud/.

First things first, you need to install Botasaurus. Run the following command in your terminal:

python -m pip install botasaurus

Next, let’s set up the project:

mkdir my-botasaurus-project

cd my-botasaurus-project

code . # This will open the project in VSCode if you have it installed

Now, create a Python script named main.py in your project directory and insert the following code:

from botasaurus import *

@browser

def scrape_heading_task(driver: AntiDetectDriver, data):

# Navigate to the Omkar Cloud website

driver.get("https://www.omkar.cloud/")

# Retrieve the heading element's text

heading = driver.text("h1")

# Save the data as a JSON file in output/scrape_heading_task.json

return {

"heading": heading

}

if __name__ == "__main__":

# Initiate the web scraping task

scrape_heading_task()

Let’s understand this code:

scrape_heading_task, decorated with @browser:@browser

def scrape_heading_task(driver: AntiDetectDriver, data):

def scrape_heading_task(driver: AntiDetectDriver, data):

scrape_heading_task.json by Botasaurus: driver.get("https://www.omkar.cloud/")

heading = driver.text("h1")

return {"heading": heading}

if __name__ == "__main__":

scrape_heading_task()

Time to run your bot:

python main.py

After executing the script, it will:

output/scrape_heading_task.json.

Now, let’s explore another way to scrape the heading using the request module. Replace the previous code in main.py with the following:

from botasaurus import *

@request

def scrape_heading_task(request: AntiDetectRequests, data):

# Navigate to the Omkar Cloud website

soup = request.bs4("https://www.omkar.cloud/")

# Retrieve the heading element's text

heading = soup.find('h1').get_text()

# Save the data as a JSON file in output/scrape_heading_task.json

return {

"heading": heading

}

if __name__ == "__main__":

# Initiate the web scraping task

scrape_heading_task()

In this code:

request object provided is not a standard Python request object but an Anti Detect request object, which also preserves cookies.Finally, run the bot again:

python main.py

This time, you will observe the same result as before, but instead of using Anti Detect Selenium, we are utilizing the Anti Detect request module.

Note: If you don't have Python installed, then you can run Botasaurus in Gitpod, a browser-based development environment, by following this section.

Let's learn about the features of Botasaurus that assist you in web scraping and automation.

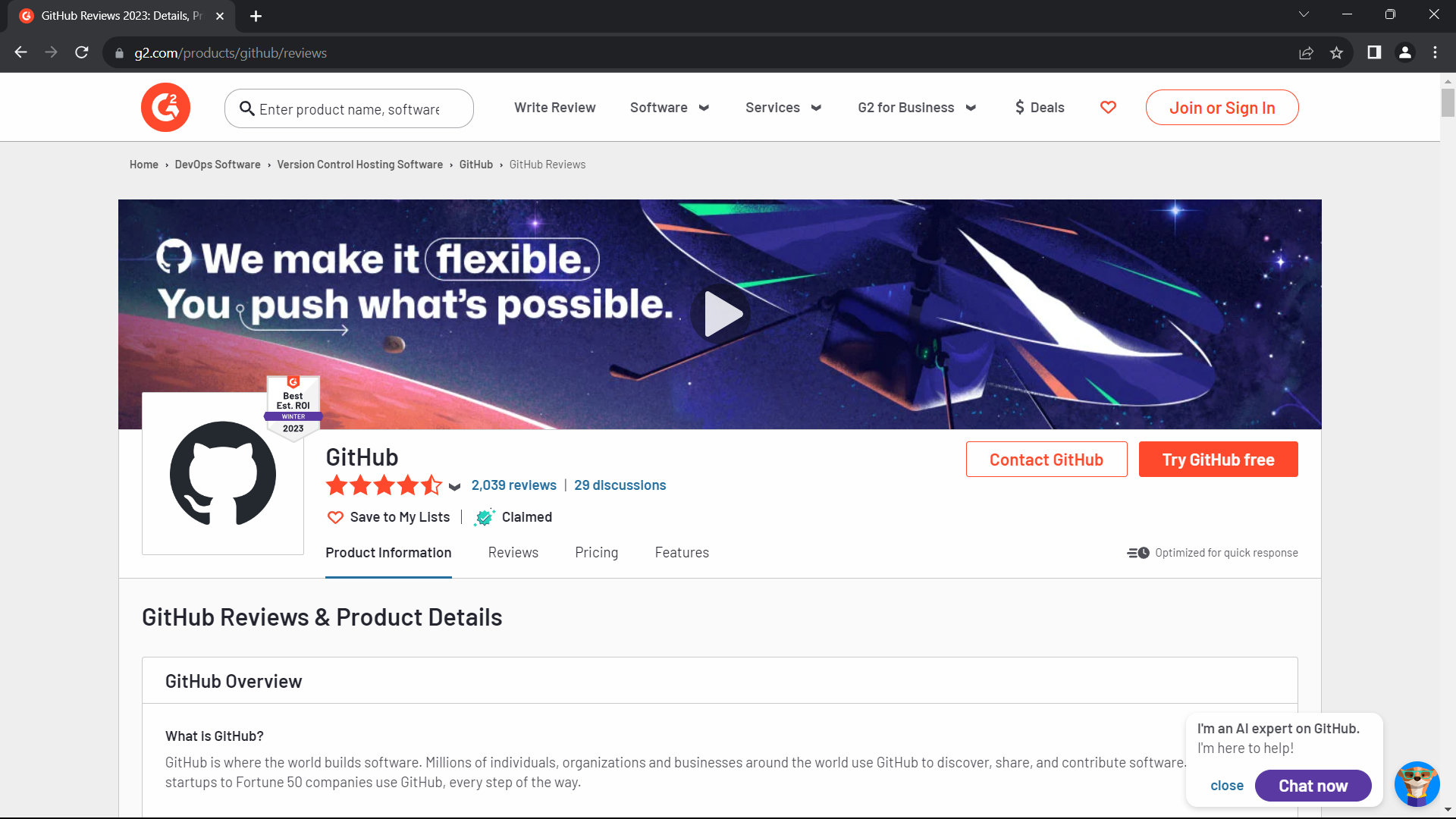

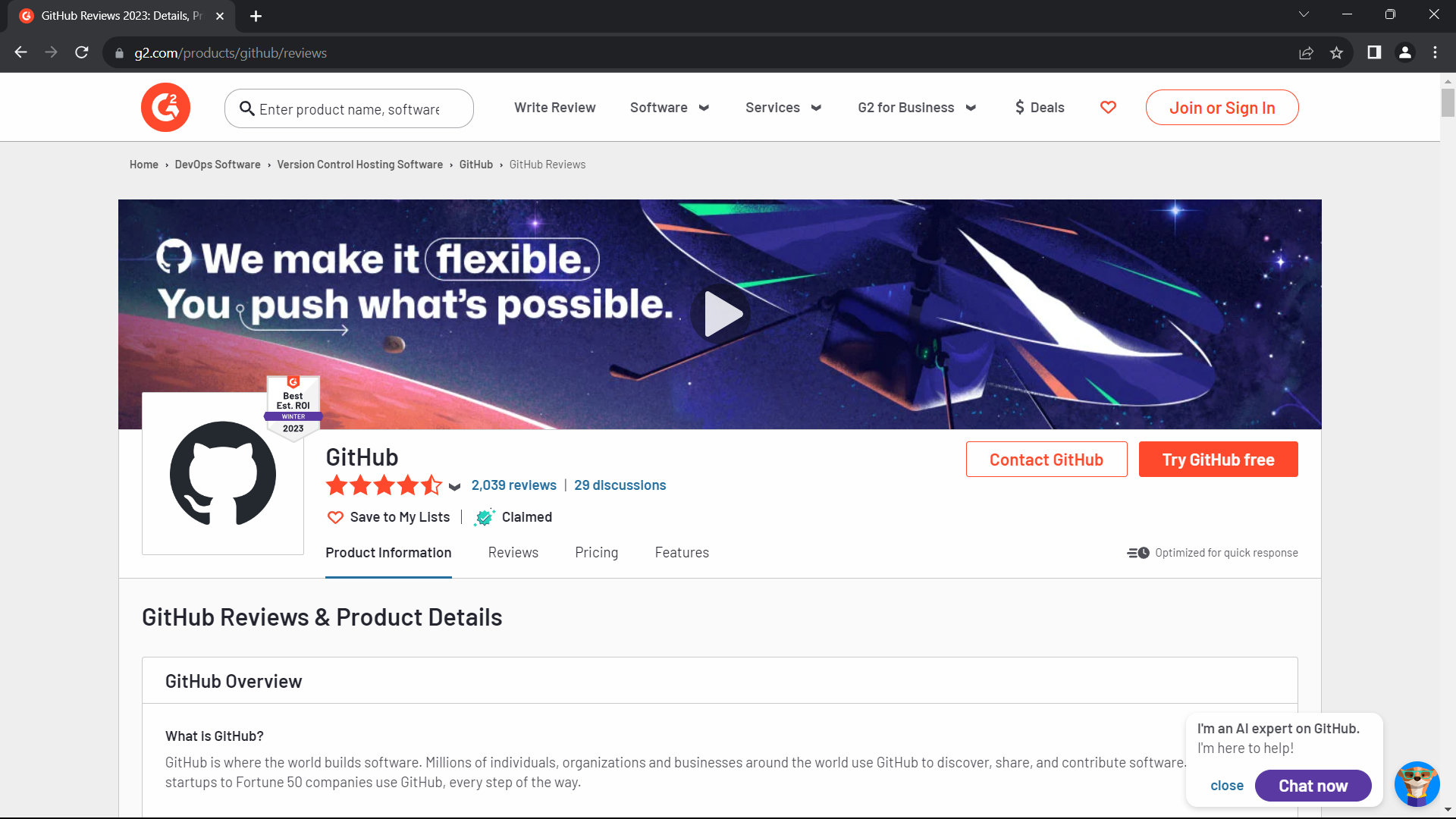

Sure, Run the following Python code to access G2.com, a website protected by Cloudflare:

from botasaurus import *

@browser()

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.google_get("https://www.g2.com/products/github/reviews")

heading = driver.text('h1')

print(heading)

return heading

scrape_heading_task()

After running this script, you'll notice that the G2 page opens successfully, and the code prints the page's heading.

To scrape multiple data points or links, define the data variable and provide a list of items to be scraped:

@browser(data=["https://www.omkar.cloud/", "https://www.omkar.cloud/blog/", "https://stackoverflow.com/"])

def scrape_heading_task(driver: AntiDetectDriver, data):

# ...

Botasaurus will launch a new browser instance for each item in the list and merge and store the results in scrape_heading_task.json at the end of the scraping.

Please note that the data parameter can also handle items such as dictionaries.

@browser(data=[{"name": "Mahendra Singh Dhoni", ...}, {"name": "Virender Sehwag", ...}])

def scrape_heading_task(driver: AntiDetectDriver, data: dict):

# ...

To scrape data in parallel, set the parallel option in the browser decorator:

@browser(parallel=3, data=["https://www.omkar.cloud/", ...])

def scrape_heading_task(driver: AntiDetectDriver, data):

# ...

To determine the optimal number of parallel scrapers, pass the bt.calc_max_parallel_browsers function, which calculates the maximum number of browsers that can be run in parallel based on the available RAM:

@browser(parallel=bt.calc_max_parallel_browsers, data=["https://www.omkar.cloud/", ...])

def scrape_heading_task(driver: AntiDetectDriver, data):

# ...

Example: If you have 5.8 GB of free RAM, bt.calc_max_parallel_browsers would return 10, indicating you can run up to 10 browsers in parallel.

To cache web scraping results and avoid re-scraping the same data, set cache=True in the decorator:

@browser(cache=True, data=["https://www.omkar.cloud/", ...])

def scrape_heading_task(driver: AntiDetectDriver, data):

# ...

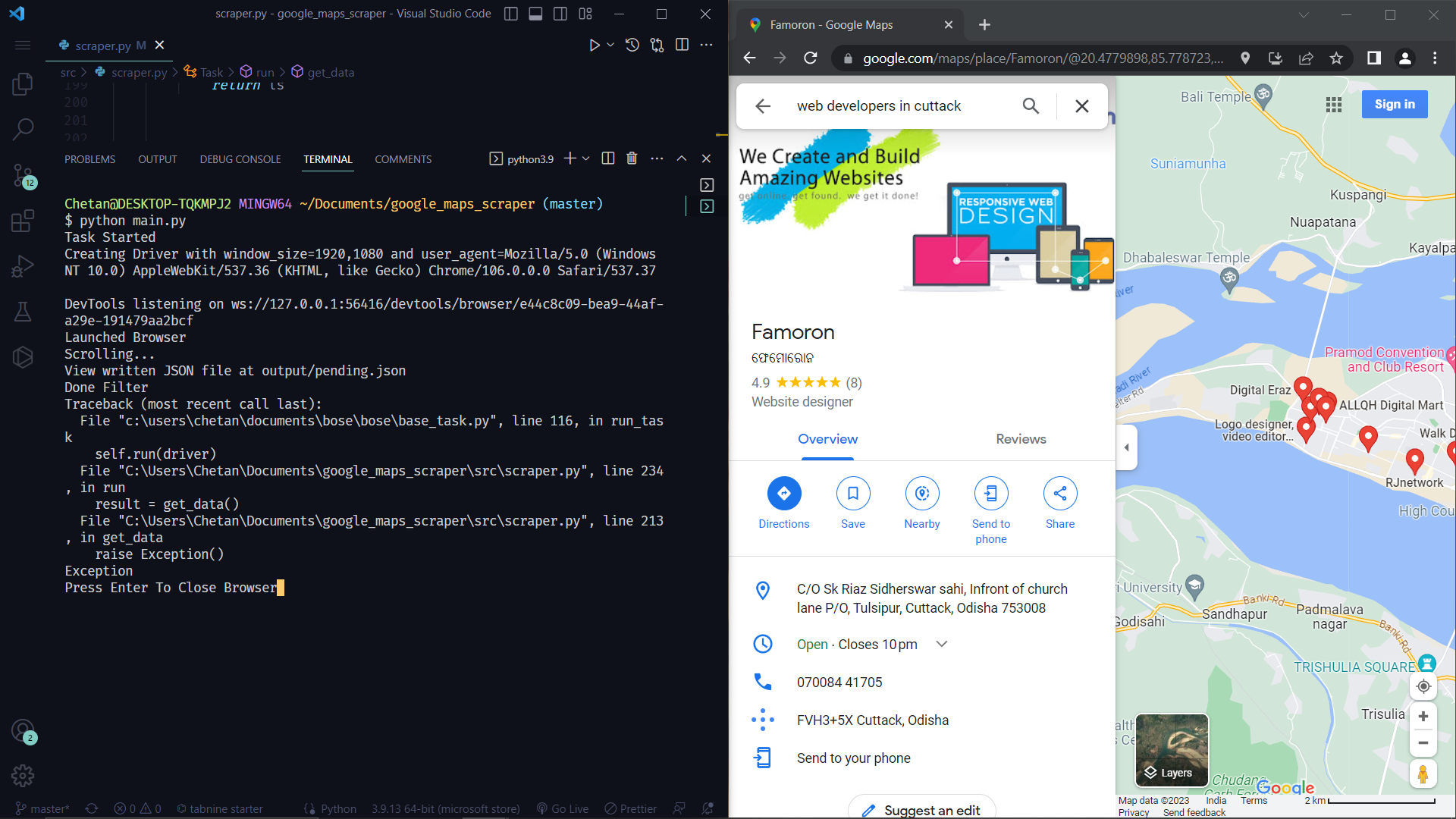

Botasaurus enhances the debugging experience by pausing the browser instead of closing it when an error occurs. This allows you to inspect the page and understand what went wrong, which can be especially helpful in debugging and removing the hassle of reproducing edge cases.

Botasaurus also plays a beep sound to alert you when an error occurs.

Blocking resources such as CSS, images, and fonts can significantly speed up your web scraping tasks, reduce bandwidth usage, and save money spent on proxies.

For example, a page that originally takes 4 seconds and 12 MB's to load might only take one second and 100 KB to load after css, images, etc have been blocked.

To block images, simply use the block_resources parameter. For example:

@browser(block_resources=True) # Blocks ['.css', '.jpg', '.jpeg', '.png', '.svg', '.gif', '.woff', '.pdf', '.zip']

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.get("https://www.omkar.cloud/")

driver.prompt()

scrape_heading_task()

If you wish to block only images and fonts, while allowing CSS files, you can set block_images like this:

@browser(block_images=True) # Blocks ['.jpg', '.jpeg', '.png', '.svg', '.gif', '.woff', '.pdf', '.zip']

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.get("https://www.omkar.cloud/")

driver.prompt()

scrape_heading_task()

To block a specific set of resources, such as only JavaScript, CSS, fonts, etc., specify them in the following manner:

@browser(block_resources=['.js', '.css', '.jpg', '.jpeg', '.png', '.svg', '.gif', '.woff', '.pdf', '.zip'])

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.get("https://www.omkar.cloud/")

driver.prompt()

scrape_heading_task()

To configure various settings such as UserAgent, Proxy, Chrome Profile, and headless mode, you can specify them in the decorator as shown below:

from botasaurus import *

@browser(

headless=True,

profile='my-profile',

# proxy="http://your_proxy_address:your_proxy_port", TODO: Replace with your own proxy

user_agent=bt.UserAgent.user_agent_106

)

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.get("https://www.omkar.cloud/")

driver.prompt()

scrape_heading_task()

You can also pass additional parameters when calling the scraping function, as demonstrated below:

@browser()

def scrape_heading_task(driver: AntiDetectDriver, data):

# ...

data = "https://www.omkar.cloud/"

scrape_heading_task(

data,

headless=True,

profile='my-profile',

proxy="http://your_proxy_address:your_proxy_port",

user_agent=bt.UserAgent.user_agent_106

)

Furthermore, it's possible to define functions that dynamically set these parameters based on the data item. For instance, to set the profile dynamically according to the data item, you can use the following approach:

@browser(profile=lambda data: data["profile"], headless=True, proxy="http://your_proxy_address:your_proxy_port", user_agent=bt.UserAgent.user_agent_106)

def scrape_heading_task(driver: AntiDetectDriver, data):

# ...

data = {"link": "https://www.omkar.cloud/", "profile": "my-profile"}

scrape_heading_task(data)

Additionally, if you need to pass metadata that is common across all data items, such as an API Key, you can do so by adding it as a metadata parameter. For example:

@browser()

def scrape_heading_task(driver: AntiDetectDriver, data, metadata):

print("metadata:", metadata)

print("data:", data)

data = {"link": "https://www.omkar.cloud/", "profile": "my-profile"}

scrape_heading_task(

data,

metadata={"api_key": "BDEC26..."}

)

Yes, we are the first Python Library to support SSL for authenticated proxies. Proxy providers like BrightData, IPRoyal, and others typically provide authenticated proxies in the format "http://username:password@proxy-provider-domain:port". For example, "http://greyninja:awesomepassword@geo.iproyal.com:12321".

However, if you use an authenticated proxy with a library like seleniumwire to scrape a Cloudflare protected website like G2.com, you will surely be blocked because you are using a non-SSL connection.

To verify this, run the following code:

First, install the necessary packages:

python -m pip install selenium_wire chromedriver_autoinstaller_fix

Then, execute this Python script:

from seleniumwire import webdriver

from chromedriver_autoinstaller_fix import install

# Define the proxy

proxy_options = {

'proxy': {

'http': 'http://username:password@proxy-provider-domain:port', # TODO: Replace with your own proxy

'https': 'http://username:password@proxy-provider-domain:port', # TODO: Replace with your own proxy

}

}

# Install and set up the driver

driver_path = install()

driver = webdriver.Chrome(driver_path, seleniumwire_options=proxy_options)

# Navigate to the desired URL

link = 'https://www.g2.com/products/github/reviews'

driver.get("https://www.google.com/")

driver.execute_script(f'window.location.href = "{link}"')

# Prompt for user input

input("Press Enter to exit...")

# Clean up

driver.quit()

You will definitely encounter a block by Cloudflare:

However, using proxies with Botasaurus prevents this issue. See the difference by running the following code:

from botasaurus import *

@browser(proxy="http://username:password@proxy-provider-domain:port") # TODO: Replace with your own proxy

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.google_get("https://www.g2.com/products/github/reviews")

driver.prompt()

scrape_heading_task()

Result:

NOTE: To run the code above, you will need Node.js installed.

We can access Cloudflare-protected pages using simple HTTP requests.

All you need to do is specify the use_stealth option.

Sounds too good to be true? Run the following code to see it in action:

from botasaurus import *

@request(use_stealth=True)

def scrape_heading_task(request: AntiDetectRequests, data):

response = request.get('https://www.g2.com/products/github/reviews')

print(response.status_code)

return response.text

scrape_heading_task()

Yes, we can. Let's learn about various challenges and the best ways to solve them.

Connection Challenge

This is the most popular challenge and requires making a browser-like connection with appropriate headers to the target website. It's commonly used to protect:

Example Page: https://www.g2.com/products/github/reviews

Using cloudscraper library fails because, while it sends browser-like user agents and headers, it does not establish a browser-like connection, leading to detection.

from botasaurus import *

@request(use_stealth=True)

def scrape_heading_task(request: AntiDetectRequests, data):

response = request.get('https://www.g2.com/products/github/reviews')

print(response.status_code)

return response.text

scrape_heading_task()

from botasaurus import *

@browser()

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.google_get("https://www.g2.com/products/github/reviews")

driver.prompt()

heading = driver.text('h1')

return heading

scrape_heading_task()

JS Challenge

This challenge requires performing JS computations that differentiates a Chrome controlled by Selenium/Puppeteer/Playwright from a real Chrome.

It's commonly used to protect Auth pages:

Example Pages: https://nowsecure.nl/

Bare selenium and puppeteer definitely do not work due to a lot of automation noise. undetected-chromedriver and puppeteer-stealth also fail to access sites protected by JS Challenge like https://nowsecure.nl/.

The Stealth Browser can easily solve JS Challenges. See the truth for yourself by running this code:

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

create_driver=create_stealth_driver(

start_url="https://nowsecure.nl/",

),

)

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.prompt()

heading = driver.text('h1')

return heading

scrape_heading_task()

JS with Captcha Challenge

This challenge involves JS computations plus solving a Captcha. It's used to protect pages which are rarely but sometimes visited by humans, like:

Example Page: https://www.g2.com/products/github/reviews.html?page=5&product_id=github

Again, tools like selenium, puppeteer, undetected-chromedriver, and puppeteer-stealth fail.

The Stealth Browser can easily solve these challenges. See it in action by running this code:

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

create_driver=create_stealth_driver(

start_url="https://www.g2.com/products/github/reviews.html?page=5&product_id=github",

),

)

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.prompt()

heading = driver.text('h1')

return heading

scrape_heading_task()

Notes:

wait argument.from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

create_driver=create_stealth_driver(

start_url="https://nowsecure.nl/",

wait=4, # Waits for 4 seconds before connecting to browser

),

)

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.prompt()

heading = driver.text('h1')

return heading

scrape_heading_task()

Here are some recommendations for wait times:

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

create_driver=create_stealth_driver(

start_url="https://nowsecure.nl/", # Must for Websites Protected by Cloudflare JS Challenge

),

)

However, if you are automating websites not protected by Cloudflare or protected by Cloudflare Connection Challenge, then you may pass None to start_url. With this, we will also not perform a wait before connecting to Chrome, saving you time.

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

create_driver=create_stealth_driver(

start_url=None, # Recommended for Websites not protected by Cloudflare or protected by Cloudflare Connection Challenge

),

)

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

user_agent=bt.UserAgent.REAL,

window_size=bt.WindowSize.REAL,

create_driver=create_stealth_driver(

start_url="https://nowsecure.nl/",

),

)

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.prompt()

heading = driver.text('h1')

return heading

scrape_heading_task()

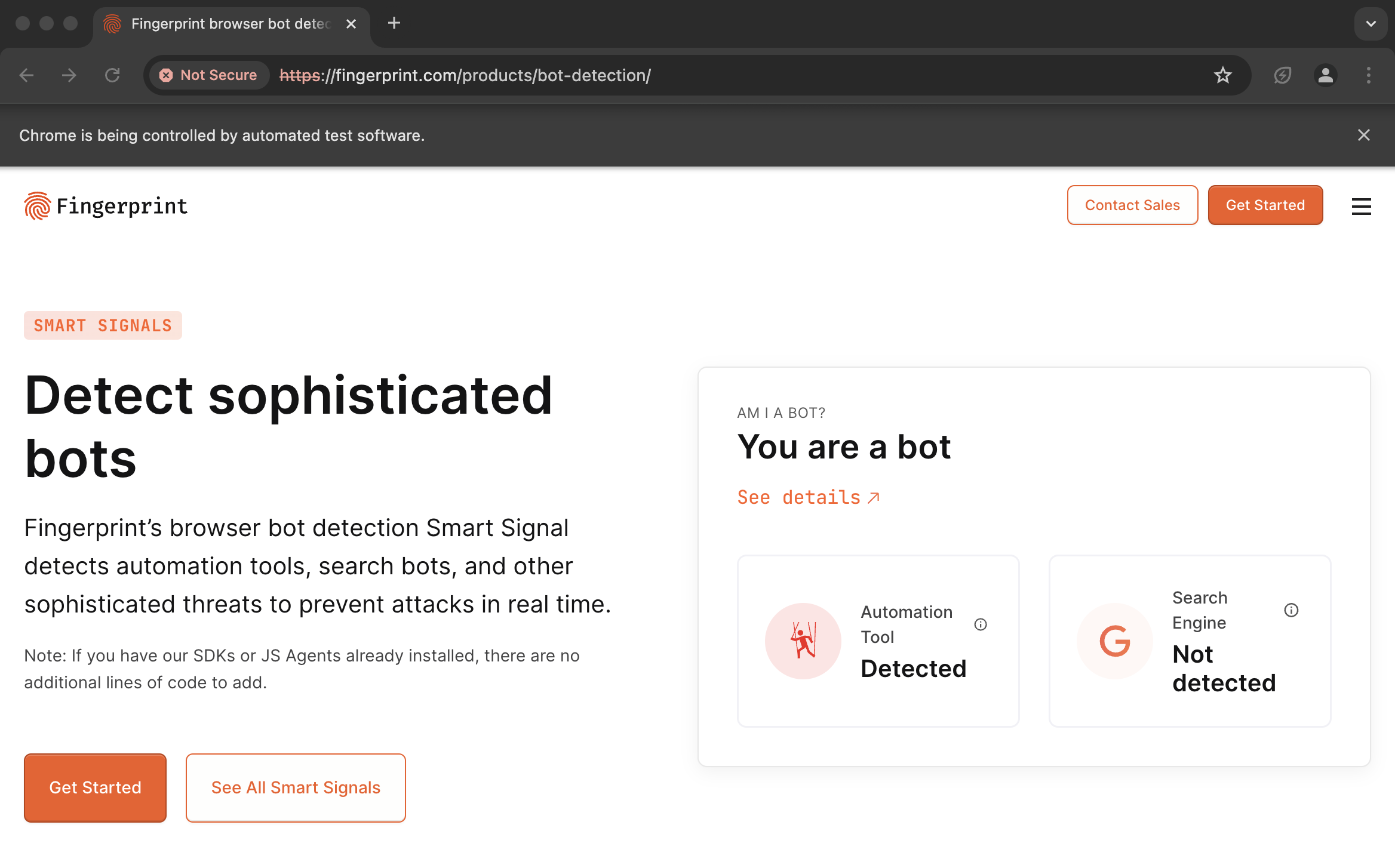

If you are doing web scraping of publicly available data, then the above code is good and recommended to be used. but stealth driver is fingerprintable using tools like fingerprint.

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

def get_start_url(data):

return data

@browser(

# proxy="http://username:password@proxy-domain:12321" # optionally use proxy

# user_agent=bt.UserAgent.REAL, # Optionally use REAL User Agent

# window_size=bt.WindowSize.REAL, # Optionally use REAL Window Size

max_retry=5,

create_driver=create_stealth_driver(

start_url=get_start_url,

raise_exception=True,

),

)

def scrape_heading_task(driver: AntiDetectDriver, data):

driver.prompt()

heading = driver.text('h1')

return heading

scrape_heading_task(["https://nowsecure.nl/", "https://steamdb.info/sub/363669/apps"])

The above code makes the scraper more robust by raising an exception when detected and retrying up to 5 times to visit the website.

Facing captchas is really common and annoying hurdle in web scraping. Fortunately, CapSolver can be used to automatically solve captchas, saving both time and effort. Here's how you can use it:

from botasaurus import *

from capsolver_extension_python import Capsolver

@browser(

extensions=[Capsolver(api_key="CAP-MY_KEY")], # Replace "CAP-MY_KEY" with your actual CapSolver Key

)

def solve_captcha(driver: AntiDetectDriver, data):

driver.get("https://recaptcha-demo.appspot.com/recaptcha-v2-checkbox.php")

driver.prompt()

solve_captcha()

To solve captcha's with CapSolver, you will need Capsolver API Key. If you do not have a CapSolver API Key, follow the instructions here to create a CapSolver account and obtain one.

Botasaurus allows the use of ANY Chrome Extension with just 1 Line of Code. Below is an example that uses the AdBlocker Chrome Extension:

from botasaurus import *

from chrome_extension_python import Extension

@browser(

extensions=[Extension("https://chromewebstore.google.com/detail/adblock-%E2%80%94-best-ad-blocker/gighmmpiobklfepjocnamgkkbiglidom")], # Simply pass the Extension Link

)

def open_chrome(driver: AntiDetectDriver, data):

driver.prompt()

open_chrome()

Also, In some cases an extension requires additional configuration like API keys or credentials, for that you can create a Custom Extension. Learn how to create and configure Custom Extension here.

Utilize the reuse_driver option to reuse drivers, reducing the time required for data scraping:

@browser(reuse_driver=True)

def scrape_heading_task(driver: AntiDetectDriver, data):

# ...

In web scraping, it is a common use case to scrape product pages, blogs, etc. But before scraping these pages, you need to get the links to these pages.

Many developers unnecessarily increase their work by writing code to visit each page one by one and scrape links, which they could have easily by just looking at the Sitemap.

The Botasaurus Sitemap Module makes this process easy as cake by allowing you to get all links or sitemaps using:

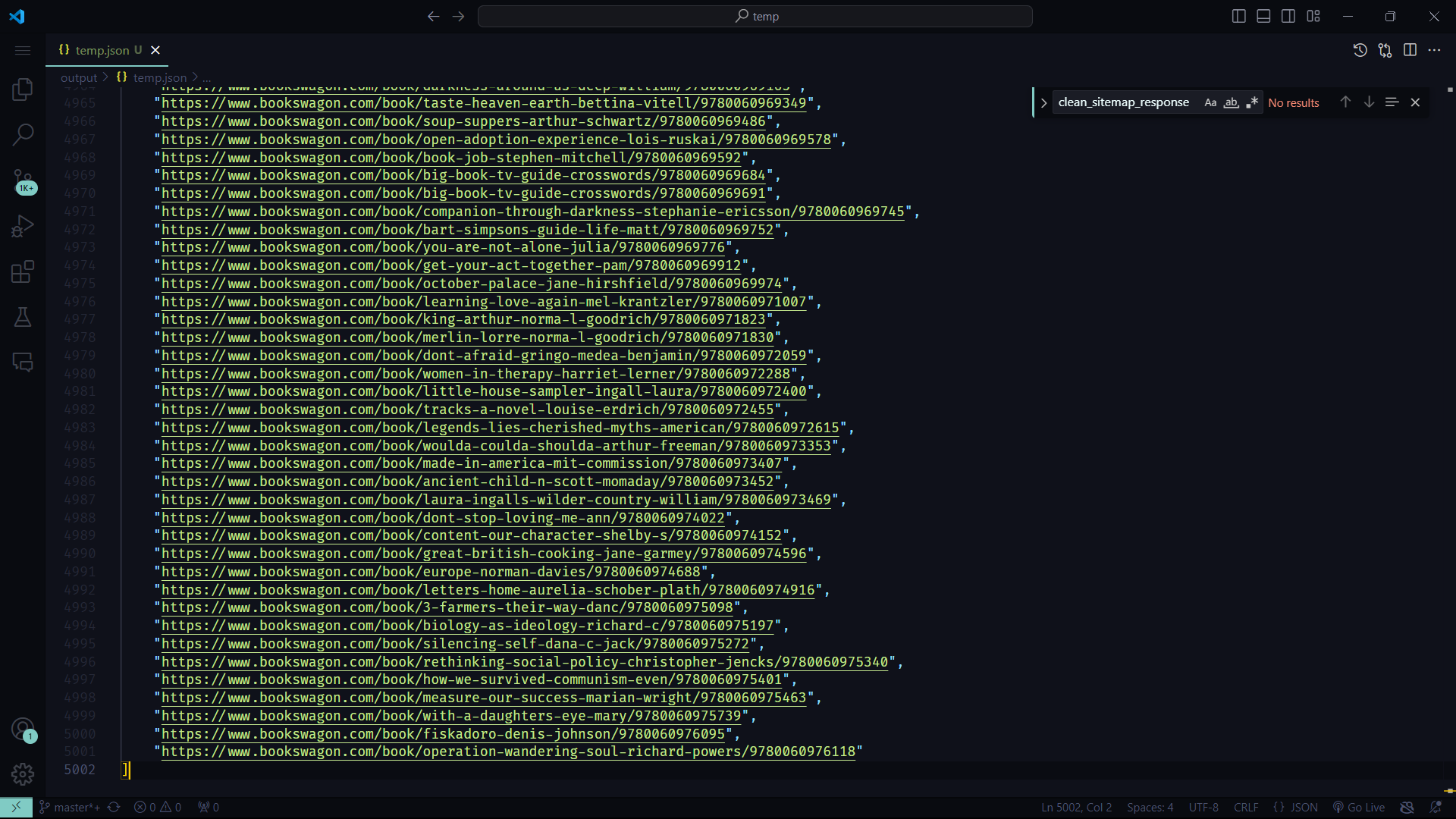

https://www.omkar.cloud/)https://www.omkar.cloud/sitemap.xml).gz compressed sitemapFor example, to get a list of books from bookswagon.com, you can use following code:

from botasaurus import *

from botasaurus.sitemap import Sitemap, Filters

links = (

Sitemap("https://www.bookswagon.com/googlesitemap/sitemapproducts-1.xml")

.filter(Filters.first_segment_equals("book"))

.links()

)

bt.write_temp_json(links)

Output:

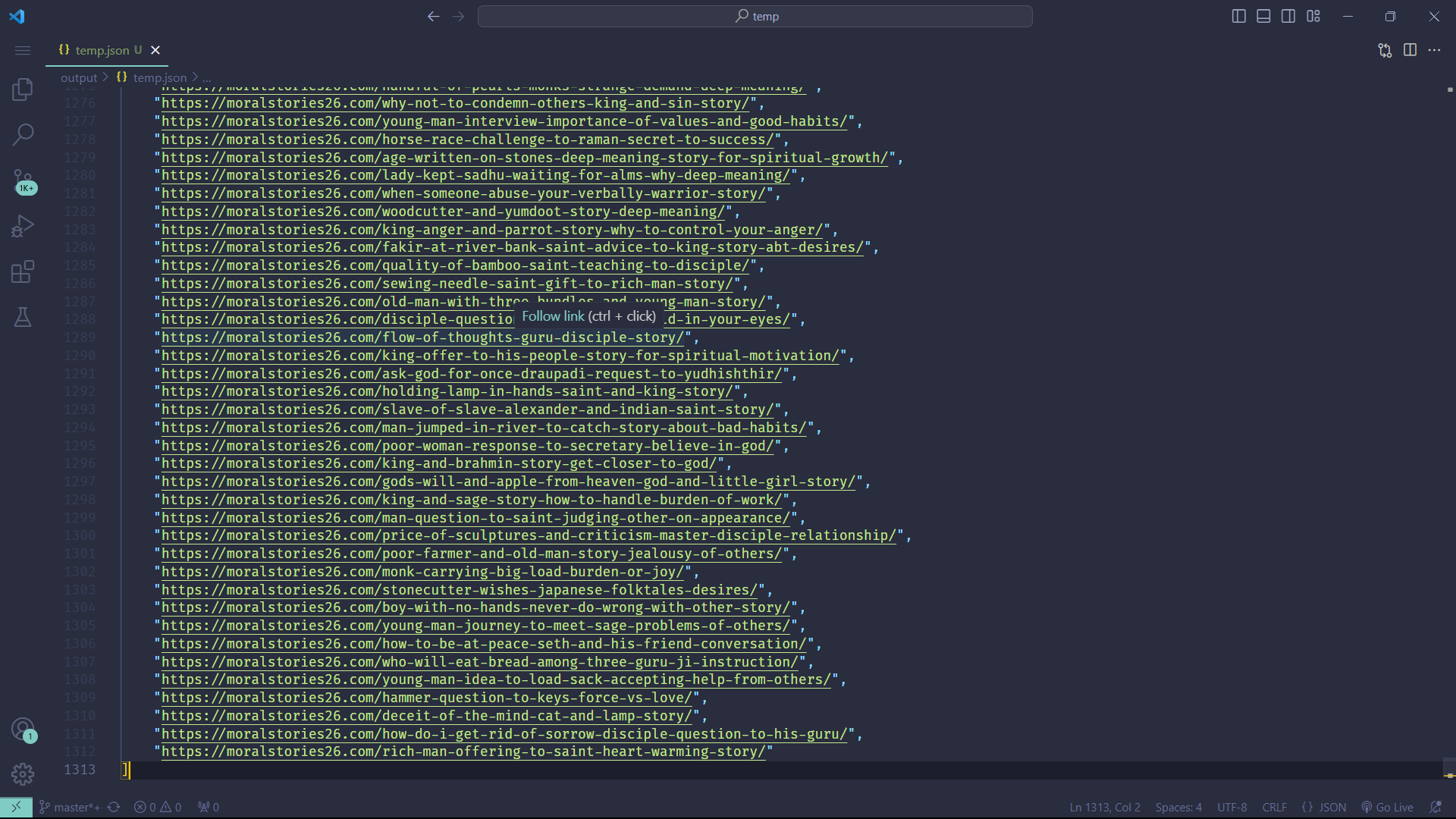

Or, Let's say you're in the mood for some reading and looking for good stories, the following code will get you over 1000+ stories from moralstories26.com:

from botasaurus import *

from botasaurus.sitemap import Sitemap, Filters

links = (

Sitemap("https://moralstories26.com/")

.filter(

Filters.has_exactly_1_segment(),

Filters.first_segment_not_equals(

["about", "privacy-policy", "akbar-birbal", "animal", "education", "fables", "facts", "family", "famous-personalities", "folktales", "friendship", "funny", "heartbreaking", "inspirational", "life", "love", "management", "motivational", "mythology", "nature", "quotes", "spiritual", "uncategorized", "zen"]

),

)

.links()

)

bt.write_temp_json(links)

Output:

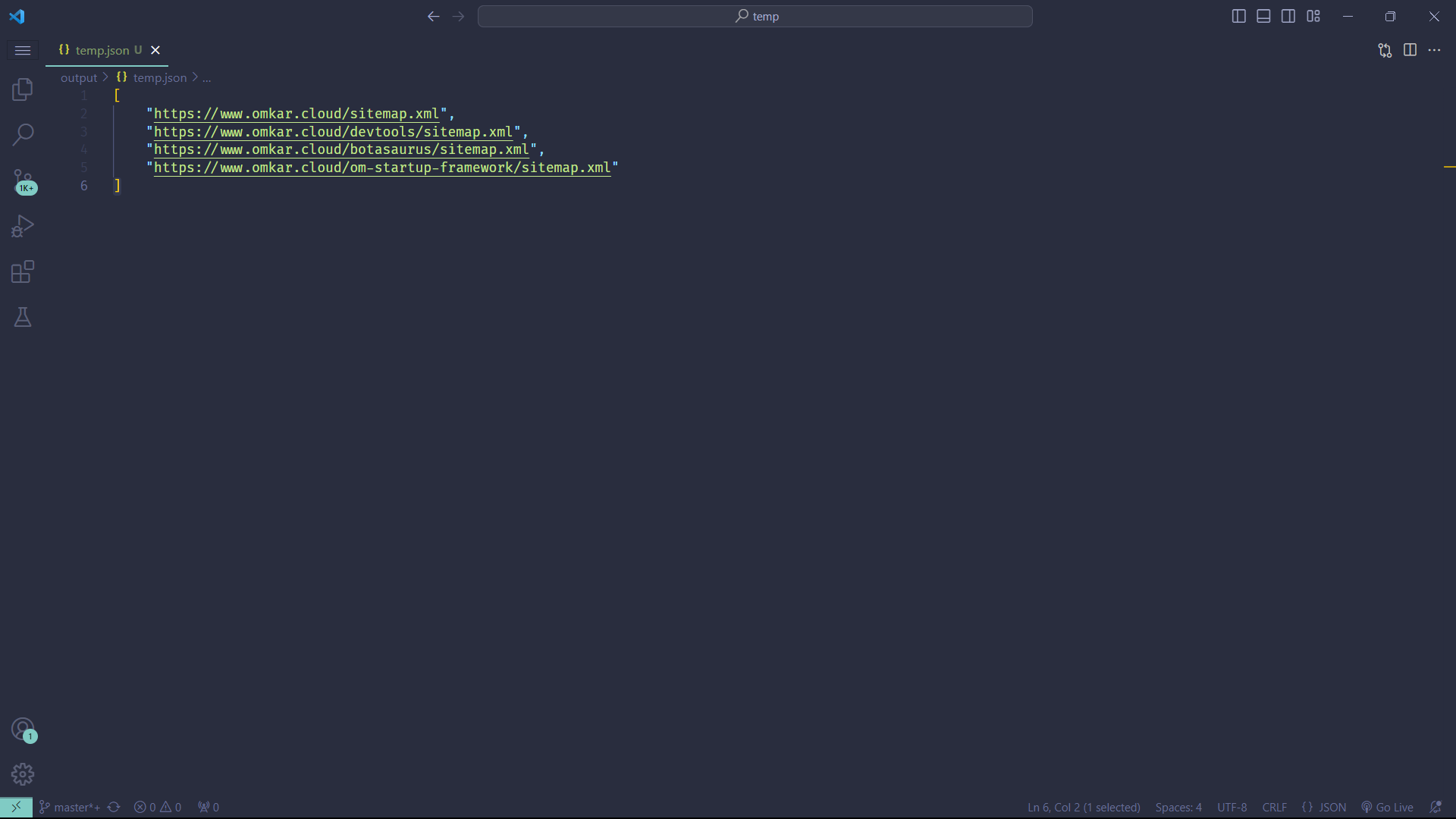

Also, Before scraping a site, it's useful to identify the available sitemaps. This can be easily done with the following code:

from botasaurus import *

from botasaurus.sitemap import Sitemap

sitemaps = Sitemap("https://www.omkar.cloud/").sitemaps()

bt.write_temp_json(sitemaps)

Output:

To ensure your scrapers run super fast, we cache the Sitemap, but you may want to periodically refresh the cache, which you can do as follows:

from botasaurus import *

from botasaurus.sitemap import Sitemap

from botasaurus.cache import Cache

sitemap = Sitemap(

[

"https://moralstories26.com/post-sitemap.xml",

"https://moralstories26.com/post-sitemap2.xml",

],

cache=Cache.REFRESH, # Refresh the cache with up-to-date stories.

)

links = sitemap.links()

bt.write_temp_json(links)

In summary, the sitemap is an awesome module for easily extracting links you want for web scraping.

Below is a practical example of how Botasaurus features come together in a typical web scraping project to scrape a list of links from a blog, and then visit each link to retrieve the article's heading and date:

from botasaurus import *

@browser(block_resources=True,

cache=True,

parallel=bt.calc_max_parallel_browsers,

reuse_driver=True)

def scrape_articles(driver: AntiDetectDriver, link):

driver.get(link)

heading = driver.text("h1")

date = driver.text("time")

return {

"heading": heading,

"date": date,

"link": link,

}

@browser(block_resources=True, cache=True)

def scrape_article_links(driver: AntiDetectDriver, data):

# Visit the Omkar Cloud website

driver.get("https://www.omkar.cloud/blog/")

links = driver.links("h3 a")

return links

if __name__ == "__main__":

# Launch the web scraping task

links = scrape_article_links()

scrape_articles(links)

Botasaurus provides a module named cl that includes commonly used cleaning functions to save development time. Here are some of the most important ones:

cl.select

What document.querySelector is to js, is what cl.select is to json. This is the most used function in Botasaurus and is incredibly useful.

This powerful function is popular for safely selecting data from nested JSON.

Instead of using flaky code like this:

from botasaurus import cl

data = {

"person": {

"data": {

"name": "Omkar",

"age": 21

}

},

"data": {

"friends": [

{"name": "John", "age": 21},

{"name": "Jane", "age": 21},

{"name": "Bob", "age": 21}

]

}

}

name = data.get('person', {}).get('data', {}).get('name', None)

if name:

name = name.upper()

else:

name = None

print(name)

You can write it as:

from botasaurus import cl

data = {

"person": {

"data": {

"name": "Omkar",

"age": 21

}

},

"data": {

"friends": [

{"name": "John", "age": 21},

{"name": "Jane", "age": 21},

{"name": "Bob", "age": 21}

]

}

}

print(cl.select(data, 'name', map_data=lambda x: x.upper()))

cl.select returns None if the key is not found, instead of throwing an error.

from botasaurus import cl

print(cl.select(data, 'name')) # Omkar

print(cl.select(data, 'friends', 0, 'name')) # John

print(cl.select(data, 'friends', 0, 'non_existing_key')) # None

You can also use map_data like this:

from botasaurus import cl

cl.select(data, 'name', map_data=lambda x: x.upper()) # OMKAR

And use default values like this:

from botasaurus import cl

cl.select(None, 'name', default="OMKAR") # OMKAR

cl.extract_numbers

from botasaurus import cl

print(cl.extract_numbers("I can extract numbers with decimal like 4.5, or with comma like 1,000.")) # [4.5, 1000]

More Functions

from botasaurus import cl

print(cl.extract_links("I can extract links like https://www.omkar.cloud/ or https://www.omkar.cloud/blog/")) # ['https://www.omkar.cloud/', 'https://www.omkar.cloud/blog/']

print(cl.rename_keys({"name": "Omkar", "age": 21}, {"name": "full_name"})) # {"full_name": "Omkar", "age": 21}

print(cl.sort_object_by_keys({"age": 21, "name": "Omkar"}, "name")) # {"name": "Omkar", "age": 21}

print(cl.extract_from_dict([{"name": "John", "age": 21}, {"name": "Jane", "age": 21}, {"name": "Bob", "age": 21}], "name")) # ["John", "Jane", "Bob"]

# ... And many more

Botasaurus provides convenient methods for reading and writing data:

# Data to write to the file

data = [

{"name": "John Doe", "age": 42},

{"name": "Jane Smith", "age": 27},

{"name": "Bob Johnson", "age": 35}

]

# Write the data to the file "data.json"

bt.write_json(data, "data.json")

# Read the contents of the file "data.json"

print(bt.read_json("data.json"))

# Write the data to the file "data.csv"

bt.write_csv(data, "data.csv")

# Read the contents of the file "data.csv"

print(bt.read_csv("data.csv"))

To pause the scraper and wait for user input before proceeding, use bt.prompt():

bt.prompt()

AntiDetectDriver is a patched Version of Selenium that has been modified to avoid detection by bot protection services such as Cloudflare.

It also includes a variety of helper functions that make web scraping tasks easier.

You can learn about these methods here.

Similar to @browser, @request supports features like

Below is an example that showcases these features:

@request(parallel=40, cache=True, proxy="http://your_proxy_address:your_proxy_port", data=["https://www.omkar.cloud/", ...])

def scrape_heading_task(request: AntiDetectDriver, link):

soup = request.bs4(link)

heading = soup.find('h1').get_text()

return {"heading": heading}

You can create an instance of AntiDetectDriver as follows:

driver = bt.create_driver()

# ... Code for scraping

driver.quit()

You can create an instance of AntiDetectRequests as follows:

anti_detect_request = bt.create_request()

soup = anti_detect_request.bs4("https://www.omkar.cloud/")

# ... Additional code

To run Botasaurus in Docker, use the Botasaurus Starter Template, which includes the necessary Dockerfile and Docker Compose configurations:

git clone https://github.com/omkarcloud/botasaurus-starter my-botasaurus-project

cd my-botasaurus-project

docker-compose build && docker-compose up

Botasaurus Starter Template comes with the necessary .gitpod.yml to easily run it in Gitpod, a browser-based development environment. Set it up in just 5 minutes by following these steps:

Open Botasaurus Starter Template, by visiting this link and sign up on gitpod.

python main.py

To configure the output of your scraping function in Botasaurus, you can customize the behavior in several ways:

Change Output Filename: Use the output parameter in the decorator to specify a custom filename for the output.

@browser(output="my-output")

def scrape_heading_task(driver: AntiDetectDriver, data):

# Your scraping logic here

Disable Output: If you don't want any output to be saved, set output to None.

@browser(output=None)

def scrape_heading_task(driver: AntiDetectDriver, data):

# Your scraping logic here

Dynamically Write Output: To dynamically write output based on data and result, pass a function to the output parameter:

def write_output(data, result):

bt.write_json(result, 'data')

bt.write_csv(result, 'data')

@browser(output=write_output)

def scrape_heading_task(driver: AntiDetectDriver, data):

# Your scraping logic here

Save Outputs in Multiple Formats: Use the output_formats parameter to save outputs in different formats like CSV and JSON.

@browser(output_formats=[bt.Formats.CSV, bt.Formats.JSON])

def scrape_heading_task(driver: AntiDetectDriver, data):

# Your scraping logic here

These options provide flexibility in how you handle the output of your scraping tasks with Botasaurus.

To execute drivers asynchronously, enable the async option and use .get() when you're ready to collect the results:

from botasaurus import *

from time import sleep

@browser(

run_async=True, # Specify the Async option here

)

def scrape_heading(driver: AntiDetectDriver, data):

print("Sleeping for 5 seconds.")

sleep(5)

print("Slept for 5 seconds.")

return {}

if __name__ == "__main__":

# Launch web scraping tasks asynchronously

result1 = scrape_heading() # Launches asynchronously

result2 = scrape_heading() # Launches asynchronously

result1.get() # Wait for the first result

result2.get() # Wait for the second result

With this method, function calls run concurrently. The output will indicate that both function calls are executing in parallel.

The async_queue feature allows you to perform web scraping tasks in asyncronously in a queue, without waiting for each task to complete before starting the next one. To gather your results, simply use the .get() method when all tasks are in the queue.

from botasaurus import *

from time import sleep

@browser(async_queue=True)

def scrape_data(driver: AntiDetectDriver, data):

print("Starting a task.")

sleep(1) # Simulate a delay, e.g., waiting for a page to load

print("Task completed.")

return data

if __name__ == "__main__":

# Start scraping tasks without waiting for each to finish

async_queue = scrape_data() # Initializes the queue

# Add tasks to the queue

async_queue.put([1])

async_queue.put(2)

async_queue.put([3, 4])

# Retrieve results when ready

results = async_queue.get() # Expects to receive: [1, 2, 3, 4]

Here's how you could use async_queue to scrape webpage titles while scrolling through a list of links:

from botasaurus import *

@browser(async_queue=True)

def scrape_title(driver: AntiDetectDriver, link):

driver.get(link) # Navigate to the link

return driver.title # Scrape the title of the webpage

@browser()

def scrape_all_titles(driver: AntiDetectDriver):

# ... Your code to visit the initial page ...

title_queue = scrape_title() # Initialize the asynchronous queue

while not end_of_page_detected(driver): # Replace with your end-of-list condition

title_queue.put(driver.links('a')) # Add each link to the queue

driver.scroll(".scrollable-element")

return title_queue.get() # Get all the scraped titles at once

if __name__ == "__main__":

all_titles = scrape_all_titles() # Call the function to start the scraping process

Note: The async_queue will only invoke the scraping function for unique links, avoiding redundant operations and keeping the main function (scrape_all_titles) cleaner.

Utilize the keep_drivers_alive option to maintain active driver sessions. Remember to call .close() when you're finished to release resources:

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

keep_drivers_alive=True,

reuse_driver=True, # Also commonly paired with `keep_drivers_alive`

)

def scrape_data(driver: AntiDetectDriver, link):

driver.get(link)

if __name__ == "__main__":

scrape_data(["https://moralstories26.com/", "https://moralstories26.com/page/2/", "https://moralstories26.com/page/3/"])

# After completing all scraping tasks, call .close() to close the drivers.

scrape_data.close()

You can use The Cache Module in Botasaurus to easily manage cached data. Here's a simple example explaining its usage:

from botasaurus import *

from botasaurus.cache import Cache

# Example scraping function

@request

def scrape_data(data):

# Your scraping logic here

return {"processed": data}

# Sample data for scraping

input_data = {"key": "value"}

# Adding data to the cache

Cache.put(scrape_data, input_data, scrape_data(input_data))

# Checking if data is in the cache

if Cache.has(scrape_data, input_data):

# Retrieving data from the cache

cached_data = Cache.get(scrape_data, input_data)

# Removing specific data from the cache

Cache.remove(scrape_data, input_data)

# Clearing the complete cache for the scrape_data function

Cache.clear(scrape_data)

from botasaurus import *

from botasaurus.create_stealth_driver import create_stealth_driver

@browser(

create_driver=create_stealth_driver(

start_url="https://nowsecure.nl/",

),

proxy="http://username:password@datacenter-proxy-domain:12321",

max_retry=5, # A reliable default for most situations, will retry creating driver if detected.

block_resources=True, # Enhances efficiency and cost-effectiveness

)

def scrape_heading_task(driver: AntiDetectDriver, data):

heading = driver.text('h1')

print(heading)

return heading

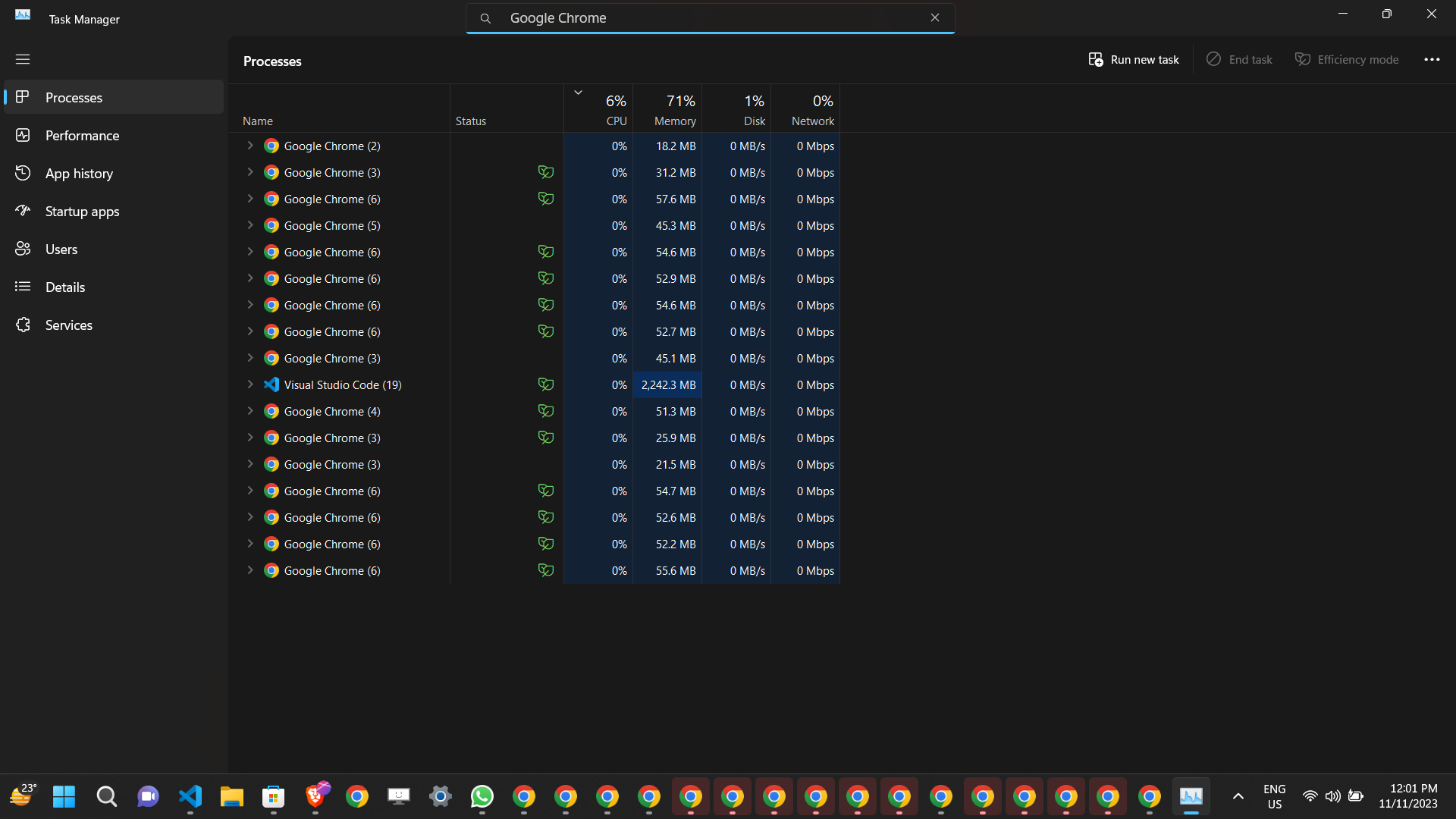

While developing a scraper, you might need to interrupt the scraping process, often done by pressing Ctrl + C. However, this action does not automatically close the Chrome browsers, which can cause your computer to hang due to resource overuse.

To prevent your PC from hanging, you need to close all running Chrome instances.

You can run following command to close all chromes

python -m botasaurus.close

Executing above command will close all Chrome instances, thereby helping to prevent your PC from hanging.

Botasaurus is a powerful, flexible tool for web scraping.

Its various settings allow you to tailor the scraping process to your specific needs, improving both efficiency and convenience. Whether you're dealing with multiple data points, requiring parallel processing, or need to cache results, Botasaurus provides the features to streamline your scraping tasks.

For further help, ask your question in GitHub Discussions. We'll be happy to help you out.

got-scraping and proxy-chain libraries. The implementation of stealth Anti Detect Requests and SSL-based Proxy Authentication wouldn't have been possible without their groundbreaking work on got-scraping and proxy-chain.puppeteer-real-browser; it helped us in creating Botasaurus Anti Detected. Show your appreciation by subscribing to their YouTube channel ⚡.Become one of our amazing stargazers by giving us a star ⭐ on GitHub!

It's just one click, but it means the world to me.

Capsolver.com is an AI-powered service that provides automatic captcha solving capabilities. It supports a range of captcha types, including reCAPTCHA, hCaptcha, and FunCaptcha, AWS Captcha, Geetest, image captcha among others. Capsolver offers both Chrome and Firefox extensions for ease of use, API integration for developers, and various pricing packages to suit different needs.

By using Botasaurus, you agree to comply with all applicable local and international laws related to data scraping, copyright, and privacy. The developers of Botasaurus are not responsible for any misuse of this software. It is the sole responsibility of the user to ensure adherence to all relevant laws regarding data scraping, copyright, and privacy, and to use Botasaurus in an ethical and legal manner.

We take the concerns of the Botasaurus Project very seriously. For any inquiries or issues, please contact Chetan Jain at chetan@omkar.cloud. We will take prompt and necessary action in response to your emails.

FAQs

Patching fork of botasaurus, The All in One Framework to build Awesome Scrapers.

We found that botasaurus2 demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

vlt's new "reproduce" tool verifies npm packages against their source code, outperforming traditional provenance adoption in the JavaScript ecosystem.

Research

Security News

Socket researchers uncovered a malicious PyPI package exploiting Deezer’s API to enable coordinated music piracy through API abuse and C2 server control.

Research

The Socket Research Team discovered a malicious npm package, '@ton-wallet/create', stealing cryptocurrency wallet keys from developers and users in the TON ecosystem.