Security News

The Changelog Podcast: Practical Steps to Stay Safe on npm

Learn the essential steps every developer should take to stay secure on npm and reduce exposure to supply chain attacks.

django-pg-zero-downtime-migrations

Advanced tools

Django postgresql backend that apply migrations with respect to database locks

Django postgresql backend that apply migrations with respect to database locks.

pip install django-pg-zero-downtime-migrations

To enable zero downtime migrations for postgres just setup django backend provided by this package and add most safe settings:

DATABASES = {

'default': {

'ENGINE': 'django_zero_downtime_migrations.backends.postgres',

#'ENGINE': 'django_zero_downtime_migrations.backends.postgis',

...

}

}

ZERO_DOWNTIME_MIGRATIONS_LOCK_TIMEOUT = '2s'

ZERO_DOWNTIME_MIGRATIONS_STATEMENT_TIMEOUT = '2s'

ZERO_DOWNTIME_MIGRATIONS_FLEXIBLE_STATEMENT_TIMEOUT = True

ZERO_DOWNTIME_MIGRATIONS_RAISE_FOR_UNSAFE = True

NOTE: this backend brings zero downtime improvements only for migrations (schema and

RunSQLoperations, but not forRunPythonoperation), for other purpose it works the same as standard django backend.

NOTE: this package is in beta, please check your migrations SQL before applying on production and submit issue for any question.

This backend provides same result state (except of ZERO_DOWNTIME_MIGRATIONS_KEEP_DEFAULT=True usage for django < 5.0), but different way and with additional guarantees for avoiding stuck table locks.

This backend doesn't use transactions for migrations (except RunPython operation), because not all SQL fixes can be run in transaction and it allows to avoid deadlocks for complex migration. So when your migration will down in the middle of migration file operations you need to fix db state manually (instead potential downtime). For that reason good practice to make migration modules small as possible. Also ZERO_DOWNTIME_MIGRATIONS_IDEMPOTENT_SQL=True allows to automate manual db state fixing.

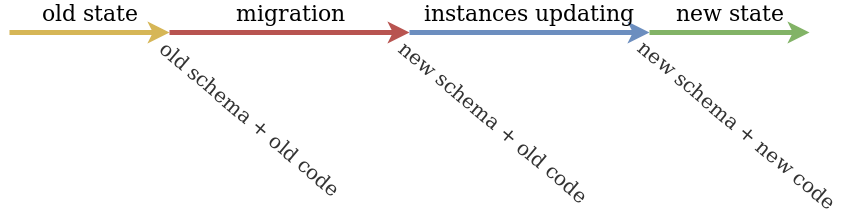

There are requirements for zero downtime deployment:

Flow:

If our deployment don't satisfy zero downtime deployment rules, then we split it to smaller deployments.

Apply lock_timeout for SQL statements that require ACCESS EXCLUSIVE lock, default None:

ZERO_DOWNTIME_MIGRATIONS_LOCK_TIMEOUT = '2s'

Allowed values:

None - current postgres setting used0 and equivalents mean that timeout will be disabledApply statement_timeout for SQL statements that require ACCESS EXCLUSIVE lock, default None:

ZERO_DOWNTIME_MIGRATIONS_STATEMENT_TIMEOUT = '2s'

Allowed values:

None - current postgres setting used0 and equivalents mean that timeout will be disabledSet statement_timeout to 0ms for SQL statements that require SHARE UPDATE EXCLUSIVE lock that useful in case when statement_timeout enabled globally and you try run long-running operations like index creation or constraint validation, default False:

ZERO_DOWNTIME_MIGRATIONS_FLEXIBLE_STATEMENT_TIMEOUT = True

Enabled option doesn't allow run potential unsafe migration, default False:

ZERO_DOWNTIME_MIGRATIONS_RAISE_FOR_UNSAFE = True

Define way to apply deferred sql, default True:

ZERO_DOWNTIME_DEFERRED_SQL = True

Allowed values:

True - run deferred sql similar to default django wayFalse - run deferred sql as soon as possibleDefine idempotent mode, default False:

ZERO_DOWNTIME_MIGRATIONS_IDEMPOTENT_SQL = False

Allowed values:

True - skip already applied sql migrationsFalse - standard non atomic django behaviourAs this backend doesn't use transactions for migrations any failed migration can be cause of stopped process in intermediate state. To avoid manual schema manipulation idempotent mode allows to rerun failed migration after fixed issue (eg. data issue or long running CRUD queries).

NOTE: idempotent mode checks rely only on name and index and constraint valid state, so it can ignore name collisions and recommended do not use it for CI checks.

Define way to drop foreign key, unique constraints and indexes before drop table or column, default True:

ZERO_DOWNTIME_MIGRATIONS_EXPLICIT_CONSTRAINTS_DROP = True

Allowed values:

True - before dropping table drop all foreign keys related to this table and before dropping column drop all foreign keys related to this column, unique constraints on this column and indexes used this column.False - standard django behaviour that will drop constraints with CASCADE mode (some constraints can be dropped explicitly too).Explicitly dropping constraints and indexes before dropping tables or columns allows for splitting schema-only changes with an ACCESS EXCLUSIVE lock and the deletion of physical files, which can take significant time and cause downtime.

Define way keep or drop code defaults on database level when adding new column, default False:

ZERO_DOWNTIME_MIGRATIONS_KEEP_DEFAULT = False

Allowed values:

True - after adding column with code default this default will not be dropped, this option allows to use ALTER TABLE ADD COLUMN SET DEFAULT NOT NULL as safe operation that much more simple and efficient than creating column without default on database level and populating column nextFalse - after adding column with code default this default will be dropped, this is standard django behaviourNOTE: this option works only for django < 5.0, in django 5.0+ explicit

db_defaultshould be used instead.

In case you using PgBouncer and expect timeouts will work as expected you need make sure that run migrations using session pool_mode or use direct database connection.

Postgres has different locks on table level that can conflict with each other https://www.postgresql.org/docs/current/static/explicit-locking.html#LOCKING-TABLES:

ACCESS SHARE | ROW SHARE | ROW EXCLUSIVE | SHARE UPDATE EXCLUSIVE | SHARE | SHARE ROW EXCLUSIVE | EXCLUSIVE | ACCESS EXCLUSIVE | |

|---|---|---|---|---|---|---|---|---|

ACCESS SHARE | X | |||||||

ROW SHARE | X | X | ||||||

ROW EXCLUSIVE | X | X | X | X | ||||

SHARE UPDATE EXCLUSIVE | X | X | X | X | X | |||

SHARE | X | X | X | X | X | |||

SHARE ROW EXCLUSIVE | X | X | X | X | X | X | ||

EXCLUSIVE | X | X | X | X | X | X | X | |

ACCESS EXCLUSIVE | X | X | X | X | X | X | X | X |

Lets split this lock to migration and business logic operations.

| lock | operations |

|---|---|

ACCESS EXCLUSIVE | CREATE SEQUENCE, DROP SEQUENCE, CREATE TABLE, DROP TABLE *, ALTER TABLE **, DROP INDEX |

SHARE | CREATE INDEX |

SHARE UPDATE EXCLUSIVE | CREATE INDEX CONCURRENTLY, DROP INDEX CONCURRENTLY, ALTER TABLE VALIDATE CONSTRAINT *** |

*: CREATE SEQUENCE, DROP SEQUENCE, CREATE TABLE, DROP TABLE shouldn't have conflicts, because your business logic shouldn't yet operate with created tables and shouldn't already operate with deleted tables.

**: Not all ALTER TABLE operations take ACCESS EXCLUSIVE lock, but all current django's migrations take it https://github.com/django/django/blob/master/django/db/backends/base/schema.py, https://github.com/django/django/blob/master/django/db/backends/postgresql/schema.py and https://www.postgresql.org/docs/current/static/sql-altertable.html.

***: Django doesn't have VALIDATE CONSTRAINT logic, but we will use it for some cases.

| lock | operations | conflict with lock | conflict with operations |

|---|---|---|---|

ACCESS SHARE | SELECT | ACCESS EXCLUSIVE | ALTER TABLE, DROP INDEX |

ROW SHARE | SELECT FOR UPDATE | ACCESS EXCLUSIVE, EXCLUSIVE | ALTER TABLE, DROP INDEX |

ROW EXCLUSIVE | INSERT, UPDATE, DELETE | ACCESS EXCLUSIVE, EXCLUSIVE, SHARE ROW EXCLUSIVE, SHARE | ALTER TABLE, DROP INDEX, CREATE INDEX |

So you can find that all django schema changes for exist table conflicts with business logic, but fortunately they are safe or has safe alternative in general.

As business logic mostly works with table rows it's also important to understand lock conflicts on row level https://www.postgresql.org/docs/current/static/explicit-locking.html#LOCKING-ROWS:

| lock | FOR KEY SHARE | FOR SHARE | FOR NO KEY UPDATE | FOR UPDATE |

|---|---|---|---|---|

FOR KEY SHARE | X | |||

FOR SHARE | X | X | ||

FOR NO KEY UPDATE | X | X | X | |

FOR UPDATE | X | X | X | X |

Main point there is if you have two transactions that update one row, then second transaction will wait until first will be completed. So for business logic and data migrations better to avoid updates for whole table and use batch operations instead.

NOTE: batch operations also can work faster because postgres can use more optimal execution plan with indexes for small data range.

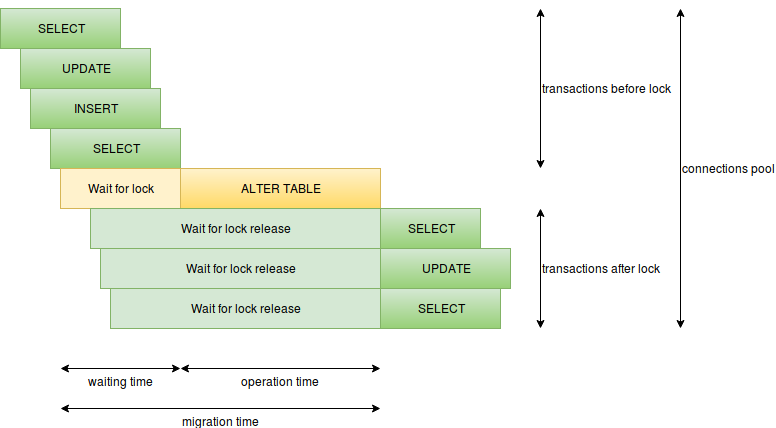

Found same diagram in interesting article http://pankrat.github.io/2015/django-migrations-without-downtimes/.

In this diagram we can extract several metrics:

CREATE INDEX or ALTER TABLE ADD CONSTRAINT, so you need a safe equivalent.Postgres has two settings to dealing with waiting time and operation time presented in diagram: lock_timeout and statement_timeout.

SET lock_timeout TO '2s' allow you to avoid downtime when you have long running query/transaction before run migration (https://www.postgresql.org/docs/current/static/runtime-config-client.html#GUC-LOCK-TIMEOUT).

SET statement_timeout TO '2s' allow you to avoid downtime when you have long running migration query (https://www.postgresql.org/docs/current/static/runtime-config-client.html#GUC-STATEMENT-TIMEOUT).

There no downtime issues for deadlocks, but too many operations in one transaction can take most conflicted lock and release it only after transaction commit or rollback. So it's a good idea to avoid ACCESS EXCLUSIVE lock operations and long time operations in one transaction. Deadlocks also can make you migration stuck on production deployment when different tables will be locked, for example, for FOREIGN KEY that take ACCESS EXCLUSIVE lock for two tables.

Postgres store values of different types different ways. If you try to convert one type to another and it stored different way then postgres will rewrite all values. Fortunately some types stored same way and postgres need to do nothing to change type, but in some cases postgres need to check that all values have same with new type limitations, for example string length.

Regarding documentation https://www.postgresql.org/docs/current/static/mvcc-intro.html data consistency in postgres is maintained by using a multiversion model. This means that each SQL statement sees a snapshot of data. It has advantage for adding and deleting columns without any indexes, CONSTRAINTS and defaults do not change exist data, new version of data will be created on INSERT and UPDATE, delete just mark you record expired. All garbage will be collected later by VACUUM or AUTO VACUUM.

Any schema changes can be processed with creation of new table and copy data to it, but it can take significant time.

| # | name | safe | safe alternative | description |

|---|---|---|---|---|

| 1 | CREATE SEQUENCE | X | safe operation, because your business logic shouldn't operate with new sequence on migration time * | |

| 2 | DROP SEQUENCE | X | safe operation, because your business logic shouldn't operate with this sequence on migration time * | |

| 3 | CREATE TABLE | X | safe operation, because your business logic shouldn't operate with new table on migration time * | |

| 4 | DROP TABLE | X | safe operation, because your business logic shouldn't operate with this table on migration time * | |

| 5 | ALTER TABLE RENAME TO | use updatable view | unsafe operation, because it's too hard write business logic that operate with two tables simultaneously, so propose to use temporary updatable view and switch names in transaction * | |

| 6 | ALTER TABLE SET TABLESPACE | add new table and copy data | unsafe operation, but probably you don't need it at all or often * | |

| 7 | ALTER TABLE ADD COLUMN | X | safe operation if without SET NOT NULL, PRIMARY KEY, UNIQUE * | |

| 8 | ALTER TABLE ADD COLUMN SET DEFAULT | X | safe operation, however it can be unsafe if code default used within NOT NULL, for db_default or NULL there are no issue * | |

| 9 | ALTER TABLE ADD COLUMN SET NOT NULL | +/- | unsafe operation, because doesn't work without SET DEFAULT or after migration old code can insert rows without new column and raise exception, so propose to use ALTER TABLE ADD COLUMN SET DEFAULT with db_default or ALTER TABLE ADD COLUMN and then populate column and then ALTER TABLE ALTER COLUMN SET NOT NULL * and ** | |

| 10 | ALTER TABLE ADD COLUMN PRIMARY KEY | add index and add constraint | unsafe operation, because you spend time in migration to CREATE INDEX, so propose ALTER TABLE ADD COLUMN and then CREATE INDEX CONCURRENTLY and then ALTER TABLE ADD CONSTRAINT PRIMARY KEY USING INDEX *** | |

| 11 | ALTER TABLE ADD COLUMN UNIQUE | add index and add constraint | unsafe operation, because you spend time in migration to CREATE INDEX, so propose ALTER TABLE ADD COLUMN and then CREATE INDEX CONCURRENTLY and then ALTER TABLE ADD CONSTRAINT UNIQUE USING INDEX *** | |

| 12 | ALTER TABLE ALTER COLUMN TYPE | +/- | unsafe operation, because you spend time in migration to check that all items in column valid or to change type, but some operations can be safe **** | |

| 13 | ALTER TABLE ALTER COLUMN SET NOT NULL | add check constraint before | unsafe operation, because you spend time in migration to check that all items in column NOT NULL, so propose ALTER TABLE ADD CONSTRAINT CHECK and then ALTER TABLE VALIDATE CONSTRAINT and then ALTER TABLE ALTER COLUMN SET NOT NULL ** | |

| 14 | ALTER TABLE ALTER COLUMN DROP NOT NULL | X | safe operation | |

| 15 | ALTER TABLE ALTER COLUMN SET DEFAULT | X | safe operation | |

| 16 | ALTER TABLE ALTER COLUMN DROP DEFAULT | X | safe operation | |

| 17 | ALTER TABLE DROP COLUMN | X | safe operation, because your business logic shouldn't operate with this column on migration time, however better ALTER TABLE ALTER COLUMN DROP NOT NULL, ALTER TABLE DROP CONSTRAINT and DROP INDEX before * and ***** | |

| 18 | ALTER TABLE RENAME COLUMN | use updatable view | unsafe operation, because it's too hard write business logic that operate with two columns simultaneously, so propose to use temporary updatable view and switch names in transaction * | |

| 19 | ALTER TABLE ADD CONSTRAINT CHECK | add as not valid and validate | unsafe operation, because you spend time in migration to check constraint | |

| 20 | ALTER TABLE DROP CONSTRAINT (CHECK) | X | safe operation | |

| 21 | ALTER TABLE ADD CONSTRAINT FOREIGN KEY | add as not valid and validate | unsafe operation, because you spend time in migration to check constraint, lock two tables | |

| 22 | ALTER TABLE DROP CONSTRAINT (FOREIGN KEY) | X | safe operation, lock two tables | |

| 23 | ALTER TABLE ADD CONSTRAINT PRIMARY KEY | add index and add constraint | unsafe operation, because you spend time in migration to create index *** | |

| 24 | ALTER TABLE DROP CONSTRAINT (PRIMARY KEY) | X | safe operation *** | |

| 25 | ALTER TABLE ADD CONSTRAINT UNIQUE | add index and add constraint | unsafe operation, because you spend time in migration to create index *** | |

| 26 | ALTER TABLE DROP CONSTRAINT (UNIQUE) | X | safe operation *** | |

| 27 | ALTER TABLE ADD CONSTRAINT EXCLUDE | add new table and copy data | ||

| 28 | ALTER TABLE DROP CONSTRAINT (EXCLUDE) | X | ||

| 29 | CREATE INDEX | CREATE INDEX CONCURRENTLY | unsafe operation, because you spend time in migration to create index | |

| 30 | DROP INDEX | X | DROP INDEX CONCURRENTLY | safe operation *** |

| 31 | CREATE INDEX CONCURRENTLY | X | safe operation | |

| 32 | DROP INDEX CONCURRENTLY | X | safe operation *** |

*: main point with migration on production without downtime that your old and new code should correctly work before and after migration, lets look this point closely in Dealing with logic that should work before and after migration section.

**: postgres will check that all items in column NOT NULL that take time, lets look this point closely in Dealing with NOT NULL constraint section.

***: postgres will have same behaviour when you skip ALTER TABLE ADD CONSTRAINT UNIQUE USING INDEX and still unclear difference with CONCURRENTLY except difference in locks, lets look this point closely in Dealing with UNIQUE constraint.

****: lets look this point closely in Dealing with ALTER TABLE ALTER COLUMN TYPE section.

*****: if you check migration on CI with python manage.py makemigrations --check you can't drop column in code without migration creation, so in this case you can be useful back migration flow: apply code on all instances and then migrate database

Migrations: CREATE SEQUENCE, DROP SEQUENCE, CREATE TABLE, DROP TABLE, ALTER TABLE ADD COLUMN, ALTER TABLE DROP COLUMN.

This migrations are pretty safe, because your logic doesn't work with this data before migration

Migrations: ALTER TABLE RENAME TO.

Standard django's approach does not allow to operate simultaneously for old and new code with old and new table name, hopefully next workaround allows to rename table by splitting migration to few steps:

Migrations: ALTER TABLE RENAME COLUMN.

Standard django's approach does not allow to operate simultaneously for old and new code with old and new column name, hopefully next workaround allows to rename column by splitting migration to few steps:

Migrations: ALTER TABLE SET TABLESPACE, ALTER TABLE ADD CONSTRAINT EXCLUDE.

For this migration too hard implement logic that will work correctly for all instances, so there are two ways to dealing with it:

Migrations: ALTER TABLE ADD COLUMN NOT NULL.

Postgres doesn't allow to create column with NOT NULL if table not empty and DEFAULT is not provided. So you want to ALTER TABLE ADD COLUMN DEFAULT NOT NULL.

Django has two ways to create column default: code default and db_default for django 5.0+.

Main difference between them for us in operations they do for migration and old code inserts handling after migration:

Code default migration and business logic SQL:

-- migration

ALTER TABLE tbl ADD COLUMN new_col integer DEFAULT 0 NOT NULL;

ALTER TABLE tbl ALTER COLUMN new_col DROP DEFAULT;

-- business logic

INSERT INTO tbl (old_col) VALUES (1); -- old code inserts fail

INSERT INTO tbl (old_col, new_col) VALUES (1, 1); -- new code inserts work fine

db_default migration and business logic SQL:

-- migration

ALTER TABLE tbl ADD COLUMN new_col integer DEFAULT 0 NOT NULL;

-- business logic

INSERT INTO tbl (old_col) VALUES (1); -- old code inserts work fine with default

INSERT INTO tbl (old_col, new_col) VALUES (1, 1); -- new code inserts work fine

db_default is most robust way to apply default and it's works fine with NOT NULL constraints too.

In django<5.0 you can use ZERO_DOWNTIME_MIGRATIONS_KEEP_DEFAULT=True to emulate db_default behaviour for default field.

NOT NULL column constraintPostgres checks that all column values NOT NULL (full table scan) when you are applying ALTER TABLE ALTER COLUMN SET NOT NULL, this check skipped if appropriate valid CHECK CONSTRAINT exists for postgres 12+. So to make existing column NOT NULL safe way you can follow next steps:

ALTER TABLE ADD CONSTRAINT CHECK (column IS NOT NULL) NOT VALID - create invalid check constraint for column, this operation takes ACCESS EXCLUSIVE lock only for table metadata updateALTER TABLE VALIDATE CONSTRAINT - validate constraint, at this moment all column values should be NOT NULL, this operation takes SHARE UPDATE EXCLUSIVE lock until full table scan will be completedALTER TABLE ALTER COLUMN SET NOT NULL - set column NOT NULL don't check column values if appropriate valid CHECK CONSTRAINT exists, in this case this operation takes ACCESS EXCLUSIVE lock only for table metadata updateALTER TABLE DROP CONSTRAINT - clean up CHECK CONSTRAINT that duplicates column NOT NULL, this operation takes ACCESS EXCLUSIVE lock only for table metadata updateUNIQUE constraintPostgres has two approaches for uniqueness: CREATE UNIQUE INDEX and ALTER TABLE ADD CONSTRAINT UNIQUE - both use unique index inside. Difference that we can find that we cannot apply DROP INDEX CONCURRENTLY for constraint. However it still unclear what difference for DROP INDEX and DROP INDEX CONCURRENTLY except difference in locks, but as we seen before both marked as safe - we don't spend time in DROP INDEX, just wait for lock. So as django use constraint for uniqueness we also have a hacks to use constraint safely.

ALTER TABLE ALTER COLUMN TYPENext operations are safe:

varchar(LESS) to varchar(MORE) where LESS < MOREvarchar(ANY) to textnumeric(LESS, SAME) to numeric(MORE, SAME) where LESS < MORE and SAME == SAMEFor other operations propose to create new column and copy data to it. Eg. some types can be also safe, but you should check yourself.

deferrable parametermigrate_isnotnull_check_constraints commandADD COLUMN DEFAULT NULL to a safe operation for code defaultsADD COLUMN DEFAULT NOT NULL to a safe operation for db_default in django 5.0+ZERO_DOWNTIME_MIGRATIONS_KEEP_DEFAULT setting and changed ADD COLUMN DEFAULT NOT NULL with this setting to a safe operation for django < 5.0ZERO_DOWNTIME_MIGRATIONS_EXPLICIT_CONSTRAINTS_DROP setting and enabled dropping constraints and indexes before dropping a column or tablesqlmigrate in idempotent modeinclude parameterZERO_DOWNTIME_MIGRATIONS_IDEMPOTENT_SQL settingskip_default_on_alter methodZERO_DOWNTIME_MIGRATIONS_USE_NOT_NULL settingmigrate_isnotnull_check_constraints command as deprecatedserial and integer, bigserial and bigint, as well as smallserial and smallint, implementing the same type changes as safe migrationsAutoField type change and concurrent insertion issue for django < 4.1CASCADE keyword and may affect other tablesdb_table raised an ALTER_TABLE_RENAME error (#26)django_zero_downtime_migrations_postgres_backend moduleZERO_DOWNTIME_MIGRATIONS_USE_NOT_NULL option as deprecated for postgres 12+CHECK IS NOT NULL constraint to a real NOT NULL constraintpg_attributeNOT NULL constraint creation for postgres 12NOT NULL constraint creation for extra permissions for pg_catalog.pg_attribute when the ZERO_DOWNTIME_MIGRATIONS_USE_NOT_NULL=USE_PG_ATTRIBUTE_UPDATE_FOR_SUPERUSER option is enabledAddField with the null=False parameter and the compatible CHECK IS NOT NULL constraint option as an unsafe operation, ignoring the ZERO_DOWNTIME_MIGRATIONS_USE_NOT_NULL value in this casedjango_zero_downtime_migrations_postgres_backend to django_zero_downtime_migrations.backends.postgresdjango_zero_downtime_migrations_postgres_backend module as deprecatedZERO_DOWNTIME_MIGRATIONS_LOCK_TIMEOUT and ZERO_DOWNTIME_MIGRATIONS_STATEMENT_TIMEOUT from 0ms to None to match the default django behavior that respects postgres timeoutsMeta.indexes and Meta.constraints attributesTimeoutExceptionstatement_timeout for long operations, such as index creation and constraint validation, when statement_timeout is set globallystatement_timeout and lock_timeoutNOT NULL constraint behaviorFAQs

Django postgresql backend that apply migrations with respect to database locks

We found that django-pg-zero-downtime-migrations demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Learn the essential steps every developer should take to stay secure on npm and reduce exposure to supply chain attacks.

Security News

Experts push back on new claims about AI-driven ransomware, warning that hype and sponsored research are distorting how the threat is understood.

Security News

Ruby's creator Matz assumes control of RubyGems and Bundler repositories while former maintainers agree to step back and transfer all rights to end the dispute.