Newest version: 0.3.6. The torch package must be installed separately so that the build matches your system environment.

NexuSync is a lightweight yet powerful library for building Retrieval-Augmented Generation (RAG) systems, built on top of LlamaIndex. It offers a simple and user-friendly interface for developers to configure and deploy RAG systems efficiently. Choose between using the Ollama LLM model for offline, privacy-focused applications or the OpenAI API for a hosted solution.

Supported file formats: .csv, .docx, .epub, .hwp, .ipynb, .mbox, .md, .pdf, .png, .ppt, .pptm, .pptx, .json, and more.

Install conda for WSL2 (Windows Subsystem for Linux 2):

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

bash Miniconda3-latest-Linux-x86_64.sh

Install conda for Windows:

Install conda for Linux:

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

bash Miniconda3-latest-Linux-x86_64.sh

4. Follow the prompts to complete the installation

source ~/.bashrc

Install conda for macOS:

curl -O https://repo.anaconda.com/miniconda/Miniconda3-latest-MacOSX-x86_64.sh

bash Miniconda3-latest-MacOSX-x86_64.sh

4. Follow the prompts to complete the installation

source ~/.bash_profile

After installation on any platform, verify the installation by running:

conda --version

conda create --name <your_env_name> python=3.10

conda activate <your_env_name>

pip install nexusync

Or git clone https://github.com/Zakk-Yang/nexusync.git

For CUDA 11.8:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

For CUDA 12.1:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

Default build (no custom index URL):

pip3 install torch torchvision torchaudio

Here's how you can get started with NexuSync:

from nexusync import NexuSync

#------- Use OpenAI Model -------

# Customize your parameters for openai model, create .env file in the project folder to include OPENAI_API_KEY = 'sk-xxx'

OPENAI_MODEL_YN = True

EMBEDDING_MODEL = "text-embedding-3-large"

LANGUAGE_MODEL = "gpt-4o-mini"

TEMPERATURE = 0.4 # range from 0 to 1, higher means a higher creativity level

CHROMA_DB_DIR = 'chroma_db' # Your path to the chroma db

INDEX_PERSIST_DIR = 'index_storage' # Your path to the index storage

CHROMA_COLLECTION_NAME = 'my_collection'

INPUT_DIRS = ["../sample_docs"] # can specify multiple document paths

CHUNK_SIZE = 1024 # Size of text chunks for creating embeddings

CHUNK_OVERLAP = 20 # Overlap between text chunks to maintain context

RECURSIVE = True # Whether to search subfolders of each input directory recursively

#------- Use Ollama Model -------

# Customize your parameters for ollama model

OPENAI_MODEL_YN = False # if False, you will use ollama model

EMBEDDING_MODEL = "BAAI/bge-base-en-v1.5" # suggested embedding model, you can replace with any HuggingFace embedding models

LANGUAGE_MODEL = 'llama3.2' # you need to download ollama model first, please check https://ollama.com/download

BASE_URL = "http://localhost:11434" # you can switch to a different base_url for the Ollama model

TEMPERATURE = 0.4 # range from 0 to 1, higher means a higher creativity level

CHROMA_DB_DIR = 'chroma_db' # Your path to the chroma db

INDEX_PERSIST_DIR = 'index_storage' # Your path to the index storage

CHROMA_COLLECTION_NAME = 'my_collection'

INPUT_DIRS = ["../sample_docs"] # can specify multiple document paths

CHUNK_SIZE = 1024 # Size of text chunks for creating embeddings

CHUNK_OVERLAP = 20 # Overlap between text chunks to maintain context

RECURSIVE = True # Whether to search subfolders of each input directory recursively

# example for Ollama Model

ns = NexuSync(
    input_dirs=INPUT_DIRS,
    openai_model_yn=False,
    embedding_model=EMBEDDING_MODEL,
    language_model=LANGUAGE_MODEL,
    base_url=BASE_URL,  # the OpenAI model does not need base_url; here we use the Ollama model as an example
    temperature=TEMPERATURE,
    chroma_db_dir=CHROMA_DB_DIR,
    index_persist_dir=INDEX_PERSIST_DIR,
    chroma_collection_name=CHROMA_COLLECTION_NAME,
    chunk_overlap=CHUNK_OVERLAP,
    chunk_size=CHUNK_SIZE,
    recursive=RECURSIVE,
)
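To make CHUNK_SIZE and CHUNK_OVERLAP concrete, here is a minimal sketch of sliding-window chunking over characters. It is an illustration only: `chunk_text` is a hypothetical helper, not part of NexuSync, and the splitter NexuSync actually uses may count tokens or respect sentence boundaries rather than raw characters.

```python
def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into character windows of chunk_size that overlap by chunk_overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
chunks = chunk_text(sample, chunk_size=10, chunk_overlap=3)
for chunk in chunks:
    print(chunk)
```

Each chunk starts with the last three characters of the previous one, which is the role the overlap plays in keeping context across chunk boundaries.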

#------- Start Querying (one-time, no memory and without stream chat) -----

query = "main result of the paper can llm generate novel ideas"

text_qa_template = """

Context Information:

--------------------

{context_str}

--------------------

Query: {query_str}

Instructions:

1. Carefully read the context information and the query.

2. Think through the problem step by step.

3. Provide a concise and accurate answer based on the given context.

4. If the answer cannot be determined from the context, state "Based on the given information, I cannot provide a definitive answer."

5. If you need to make any assumptions, clearly state them.

6. If relevant, provide a brief explanation of your reasoning.

Answer: """

response = ns.start_query(text_qa_template = text_qa_template, query = query )

print(f"Query: {query}")

print(f"Response: {response['response']}")

print(f"Metadata: {response['metadata']}")
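The template above relies on two placeholders, `{context_str}` and `{query_str}`. As a rough sketch of what happens to them, assuming they are filled by `str.format`-style substitution with the retrieved chunks and the user query, the final prompt could be assembled as follows. `fill_template` is a hypothetical helper for illustration, not a NexuSync function, and the template is shortened.

```python
template = (
    "Context Information:\n"
    "--------------------\n"
    "{context_str}\n"
    "--------------------\n"
    "Query: {query_str}\n"
    "Answer: "
)


def fill_template(template: str, context_chunks: list[str], query: str) -> str:
    """Join retrieved chunks and substitute both placeholders."""
    context_str = "\n\n".join(context_chunks)
    return template.format(context_str=context_str, query_str=query)


prompt = fill_template(
    template,
    context_chunks=["Chunk one.", "Chunk two."],
    query="What does chunk one say?",
)
print(prompt)
```

If you write your own template, keep both placeholder names intact; a template without `{context_str}` would give the model no retrieved context to answer from.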

# First, initialize the stream chat engine

ns.initialize_stream_chat(
    text_qa_template=text_qa_template,
    chat_mode="context",
    similarity_top_k=3,
)

query = "main result of the paper can llm generate novel ideas"

for item in ns.start_chat_stream(query):
    if isinstance(item, str):
        # This is a token, print or process as needed
        print(item, end='', flush=True)
    else:
        # This is the final response with metadata
        print("\n\nFull response:", item['response'])
        print("Metadata:", item['metadata'])
        break
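The loop above treats the stream as a sequence of string tokens followed by one final dictionary. To try that consumption pattern without a model, here is a small sketch with a stand-in generator. `fake_chat_stream` and `consume` are hypothetical names for illustration, and the metadata shape is invented, not what NexuSync returns.

```python
from typing import Iterator, Optional, Union


def fake_chat_stream(answer: str) -> Iterator[Union[str, dict]]:
    """Stand-in for a streaming chat call: yields tokens, then a final dict."""
    words = answer.split(" ")
    for i, word in enumerate(words):
        # Re-attach the space that split() removed, except after the last word
        yield word if i == len(words) - 1 else word + " "
    yield {"response": answer, "metadata": {"sources": []}}


def consume(stream: Iterator[Union[str, dict]]) -> tuple[str, Optional[dict]]:
    """Collect string tokens until the final dict arrives."""
    tokens = []
    final = None
    for item in stream:
        if isinstance(item, str):
            tokens.append(item)
        else:
            final = item
            break
    return "".join(tokens), final


text, final = consume(fake_chat_stream("RAG grounds answers in documents."))
print(text)
print(final["metadata"])
```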

chat_history = ns.chat_engine.get_chat_history()

print("Chat History:")

for entry in chat_history:
    print(f"Human: {entry['query']}")
    print(f"AI: {entry['response']}\n")

#------- Incrementally Refresh Index without Rebuilding it -----

# If files have been modified, inserted, or deleted, you don't need to rebuild the whole index

ns.refresh_index()
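An incremental refresh has to work out which files were inserted, modified, or deleted since the last indexing run. One common way to do that, shown here as a sketch under assumptions, is to compare content hashes between two snapshots of the input directory. This is not necessarily how `refresh_index()` is implemented; `snapshot` and `diff_snapshots` are hypothetical helpers.

```python
import hashlib
import tempfile
from pathlib import Path


def snapshot(root: str, recursive: bool = True) -> dict[str, str]:
    """Map each file's path (relative to root) to a SHA-256 hash of its content."""
    base = Path(root)
    paths = base.rglob("*") if recursive else base.glob("*")
    return {
        str(p.relative_to(base)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in paths
        if p.is_file()
    }


def diff_snapshots(old: dict[str, str], new: dict[str, str]):
    """Return sorted lists of (added, modified, deleted) relative paths."""
    added = sorted(new.keys() - old.keys())
    deleted = sorted(old.keys() - new.keys())
    modified = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return added, modified, deleted


# Demo on a throwaway directory
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "a.md").write_text("v1")
    (root / "b.md").write_text("keep")
    before = snapshot(tmp)

    (root / "a.md").write_text("v2")   # modified
    (root / "b.md").unlink()           # deleted
    (root / "c.md").write_text("new")  # added
    after = snapshot(tmp)

    added, modified, deleted = diff_snapshots(before, after)

print("added:", added)
print("modified:", modified)
print("deleted:", deleted)
```

Only the files in these three lists would need to be re-embedded or removed, which is why a refresh can be much cheaper than a full rebuild.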

#------- Rebuild Index -----

# Rebuild the index when any of the following changes:

# - openai_model_yn

# - embedding_model

# - language_model

# - base_url

# - chroma_db_dir

# - index_persist_dir

# - chroma_collection_name

# - chunk_overlap

# - chunk_size

# - recursive

from nexusync import rebuild_index

from nexusync import NexuSync

OPENAI_MODEL_YN = True # if False, you will use ollama model

EMBEDDING_MODEL = "text-embedding-3-large" # suggested embedding model

LANGUAGE_MODEL = 'gpt-4o-mini' # OpenAI model; requires OPENAI_API_KEY in your .env file

TEMPERATURE = 0.4 # range from 0 to 1, higher means a higher creativity level

CHROMA_DB_DIR = 'chroma_db'

INDEX_PERSIST_DIR = 'index_storage'

CHROMA_COLLECTION_NAME = 'my_collection'

INPUT_DIRS = ["../sample_docs"] # can specify multiple document paths

CHUNK_SIZE = 1024

CHUNK_OVERLAP = 20

RECURSIVE = True

# Assume we changed the model from Ollama to OpenAI

rebuild_index(
    input_dirs=INPUT_DIRS,
    openai_model_yn=OPENAI_MODEL_YN,
    embedding_model=EMBEDDING_MODEL,
    language_model=LANGUAGE_MODEL,
    temperature=TEMPERATURE,
    chroma_db_dir=CHROMA_DB_DIR,
    index_persist_dir=INDEX_PERSIST_DIR,
    chroma_collection_name=CHROMA_COLLECTION_NAME,
    chunk_overlap=CHUNK_OVERLAP,
    chunk_size=CHUNK_SIZE,
    recursive=RECURSIVE,
)

# Re-initialize ns after rebuilding the index

ns = NexuSync(
    input_dirs=INPUT_DIRS,
    openai_model_yn=OPENAI_MODEL_YN,
    embedding_model=EMBEDDING_MODEL,
    language_model=LANGUAGE_MODEL,
    temperature=TEMPERATURE,
    chroma_db_dir=CHROMA_DB_DIR,
    index_persist_dir=INDEX_PERSIST_DIR,
    chroma_collection_name=CHROMA_COLLECTION_NAME,
    chunk_overlap=CHUNK_OVERLAP,
    chunk_size=CHUNK_SIZE,
    recursive=RECURSIVE,
)

# Test the newly built index

query = "main result of the paper can llm generate novel ideas"

text_qa_template = """

Context Information:

--------------------

{context_str}

--------------------

Query: {query_str}

Instructions:

1. Carefully read the context information and the query.

2. Think through the problem step by step.

3. Provide a concise and accurate answer based on the given context.

4. If the answer cannot be determined from the context, state "Based on the given information, I cannot provide a definitive answer."

5. If you need to make any assumptions, clearly state them.

6. If relevant, provide a brief explanation of your reasoning.

Answer: """

response = ns.start_query(text_qa_template = text_qa_template, query = query )

print(f"Query: {query}")

print(f"Response: {response['response']}")

print(f"Metadata: {response['metadata']}")
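The list of settings that require a rebuild can be turned into a quick check before you decide between `refresh_index()` and `rebuild_index()`. The sketch below compares two plain configuration dictionaries. `REBUILD_KEYS` mirrors the list in the comments above, while `changed_rebuild_keys` and the dictionaries are hypothetical and not part of NexuSync.

```python
# Settings that, per the list above, require a full index rebuild when changed
REBUILD_KEYS = (
    "openai_model_yn",
    "embedding_model",
    "language_model",
    "base_url",
    "chroma_db_dir",
    "index_persist_dir",
    "chroma_collection_name",
    "chunk_overlap",
    "chunk_size",
    "recursive",
)


def changed_rebuild_keys(old: dict, new: dict) -> list[str]:
    """Return the rebuild-relevant keys whose values differ between two configs."""
    return [key for key in REBUILD_KEYS if old.get(key) != new.get(key)]


old_cfg = {
    "openai_model_yn": False,
    "embedding_model": "BAAI/bge-base-en-v1.5",
    "language_model": "llama3.2",
    "base_url": "http://localhost:11434",
    "chunk_size": 1024,
    "chunk_overlap": 20,
    "temperature": 0.4,
}
new_cfg = {
    "openai_model_yn": True,
    "embedding_model": "text-embedding-3-large",
    "language_model": "gpt-4o-mini",
    "chunk_size": 1024,
    "chunk_overlap": 20,
    "temperature": 0.7,  # not in REBUILD_KEYS, so it is ignored by the check
}

changed = changed_rebuild_keys(old_cfg, new_cfg)
print("Rebuild needed:", bool(changed))
print("Changed keys:", changed)
```

An empty result suggests an incremental refresh is enough; any listed key points to a full rebuild.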

git clone https://github.com/Zakk-Yang/nexusync.git

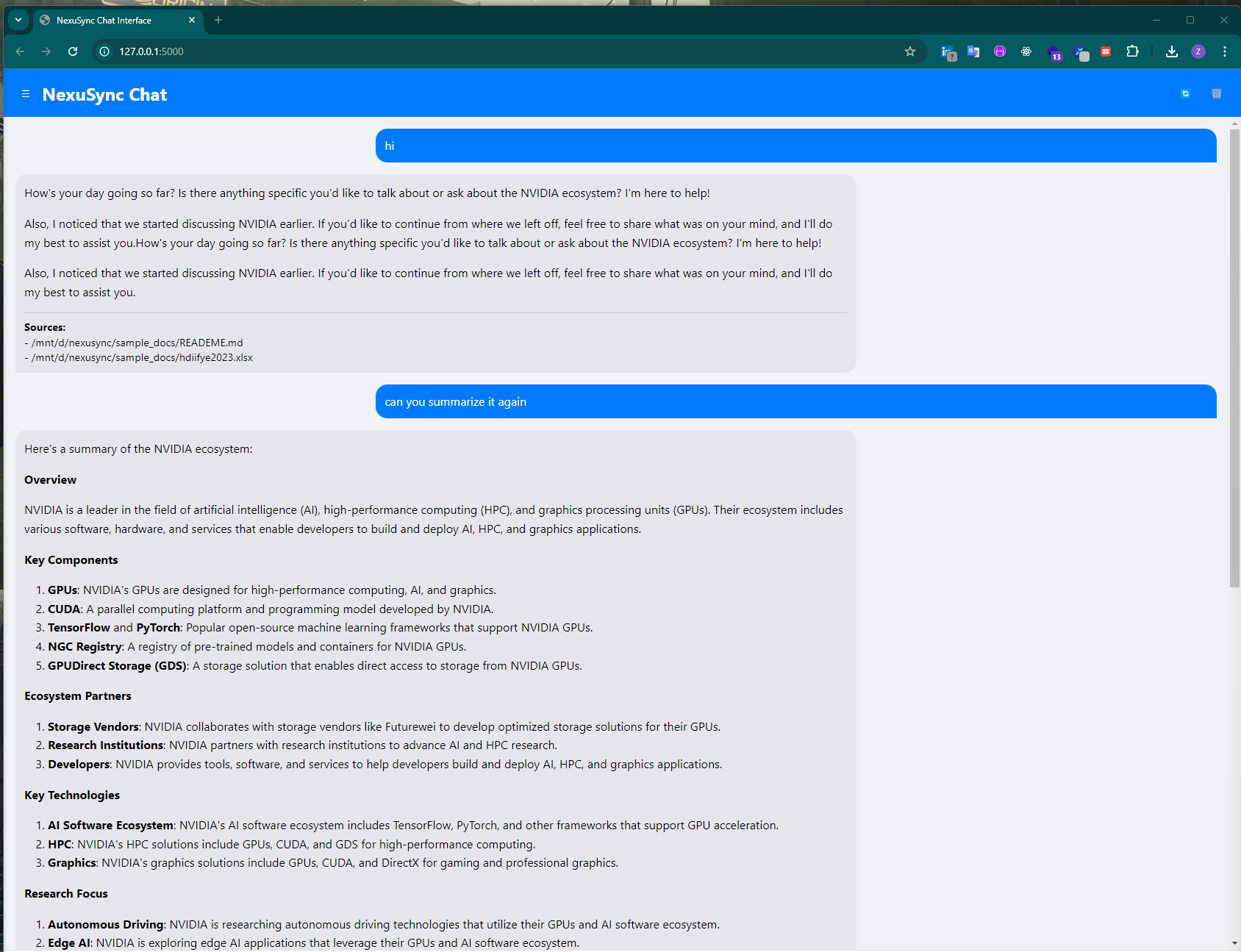

python back_end_api.py

Ensure that the parameters in back_end_api.py align with the settings in the side panel of the interface. If not, copy and paste your desired Embedding Model and Language Model in the side panel and click "Apply Settings".

For more detailed usage examples, check out the demo notebooks.

This project is licensed under the MIT License - see the LICENSE file for details.

For questions or suggestions, feel free to open an issue or contact the maintainer:

Name: Zakk Yang

Email: zakkyang@hotmail.com

GitHub: Zakk-Yang

If you find this project helpful, please give it a ⭐ on GitHub! Your support is appreciated.
